Fujitsu Limited.

Researchers from Fujitsu Limited and the University of Tokyo developed Quantization Error Propagation (QEP), a framework that reforms layer-wise post-training quantization to actively compensate for cumulative errors. This significantly reduces perplexity and improves zero-shot accuracy for large language models, particularly enabling practical 2-bit and 3-bit quantization.

Fujitsu Research presents the Geometrically Local Quantum Kernel (GLQK), a framework for learning quantum many-body data that leverages the exponential clustering property to address scalability challenges. It achieves a sample complexity scaling linearly with system size for general states and as a constant for translationally symmetric states, markedly outperforming existing shadow kernel methods in numerical experiments up to 80 qubits.

28 Oct 2025

Researchers developed a method for efficient magic state distillation, replacing complex code transformations in Magic State Cultivation (MSC) with lattice surgery. This approach reduces spacetime overhead by over 50% while maintaining comparable logical error rates, and introduces a lookup table for a further 15% reduction through early rejection.

29 Oct 2025

Researchers from Fujitsu and Osaka University developed a 'decoder switching' framework for real-time quantum error correction that resolves the inherent speed-accuracy tradeoff. The system combines a fast, soft-output weak decoder with a slower, accurate strong decoder, achieving logical error rates comparable to or better than the strong decoder alone while maintaining the average decoding speed of the weak decoder. The switching rate to the strong decoder was found to decay exponentially with increasing code distance.

The paper enhances the STAR architecture with new error suppression and resource state preparation schemes to improve analog rotation gates. This work shows that quantum phase estimation for an (8x8)-site Hubbard model can be performed with fewer than 4.9x10

⁴ qubits and a 9-day execution time at a 10

⁻⁴ physical error rate, demonstrating a practical quantum advantage over classical methods for materials simulation.

This research demonstrates that large language model (LLM) decoders within Vision-Language Models (VLMs) can actively compensate for deficiencies in visual representations provided by their vision encoders. Experiments showed VLMs maintaining high performance on a fine-grained object part identification task even when visual input was degraded, indicating a dynamic and adaptive interaction between modalities.

This paper studies bandit convex optimization with constraints, where the learner aims to generate a sequence of decisions under partial information of loss functions such that the cumulative loss is reduced as well as the cumulative constraint violation is simultaneously reduced. We adopt the cumulative \textit{hard} constraint violation as the metric of constraint violation, which is defined by ∑t=1Tmax{gt(xt),0}. Owing to the maximum operator, a strictly feasible solution cannot cancel out the effects of violated constraints compared to the conventional metric known as \textit{long-term} constraints violation. We present a penalty-based proximal gradient descent method that attains a sub-linear growth of both regret and cumulative hard constraint violation, in which the gradient is estimated with a two-point function evaluation. Precisely, our algorithm attains O(d2Tmax{c,1−c}) regret bounds and O(d2T1−2c) cumulative hard constraint violation bounds for convex loss functions and time-varying constraints, where d is the dimensionality of the feasible region and c∈[21,1) is a user-determined parameter. We also extend the result for the case where the loss functions are strongly convex and show that both regret and constraint violation bounds can be further reduced.

30 May 2025

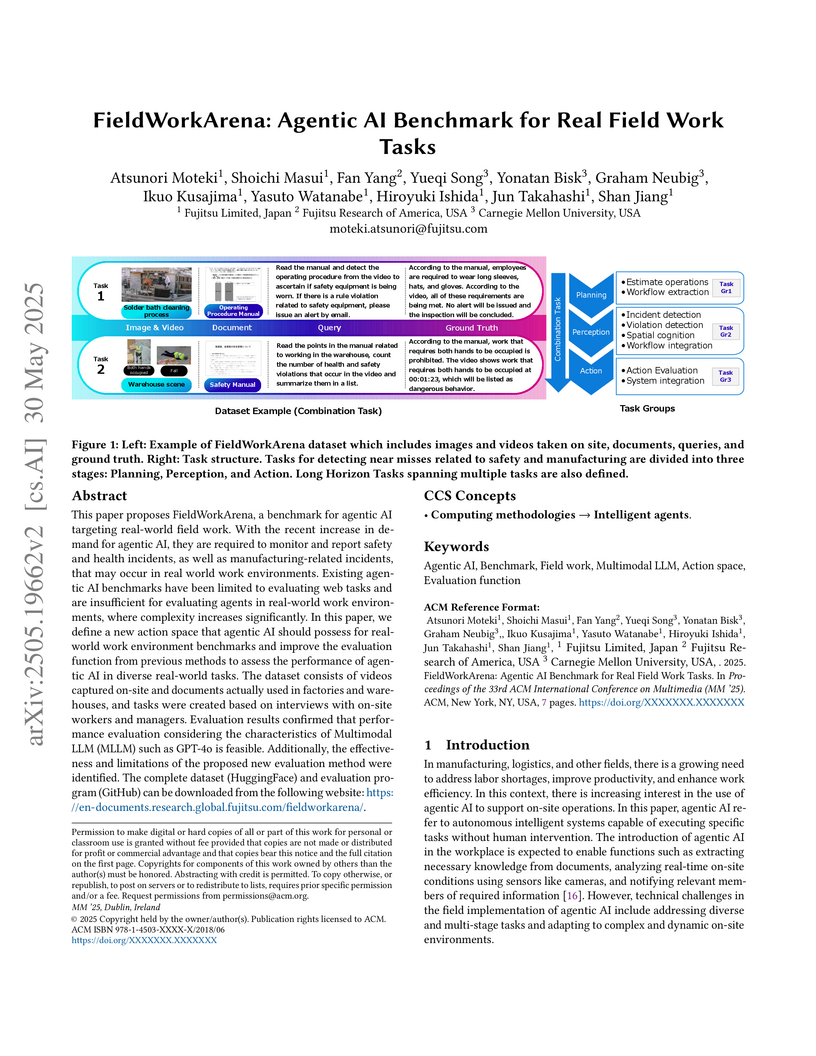

This paper proposes FieldWorkArena, a benchmark for agentic AI targeting

real-world field work. With the recent increase in demand for agentic AI, they

are required to monitor and report safety and health incidents, as well as

manufacturing-related incidents, that may occur in real-world work

environments. Existing agentic AI benchmarks have been limited to evaluating

web tasks and are insufficient for evaluating agents in real-world work

environments, where complexity increases significantly. In this paper, we

define a new action space that agentic AI should possess for real world work

environment benchmarks and improve the evaluation function from previous

methods to assess the performance of agentic AI in diverse real-world tasks.

The dataset consists of videos captured on-site and documents actually used in

factories and warehouses, and tasks were created based on interviews with

on-site workers and managers. Evaluation results confirmed that performance

evaluation considering the characteristics of Multimodal LLM (MLLM) such as

GPT-4o is feasible. Additionally, the effectiveness and limitations of the

proposed new evaluation method were identified. The complete dataset

(HuggingFace) and evaluation program (GitHub) can be downloaded from the

following website:

this https URL

Neural network potentials (NNPs) offer a powerful alternative to traditional force fields for molecular dynamics (MD) simulations. Accurate and stable MD simulations, crucial for evaluating material properties, require training data encompassing both low-energy stable structures and high-energy structures. Conventional knowledge distillation (KD) methods fine-tune a pre-trained NNP as a teacher model to generate training data for a student model. However, in material-specific models, this fine-tuning process increases energy barriers, making it difficult to create training data containing high-energy structures. To address this, we propose a novel KD framework that leverages a non-fine-tuned, off-the-shelf pre-trained NNP as a teacher. Its gentler energy landscape facilitates the exploration of a wider range of structures, including the high-energy structures crucial for stable MD simulations. Our framework employs a two-stage training process: first, the student NNP is trained with a dataset generated by the off-the-shelf teacher; then, it is fine-tuned with a smaller, high-accuracy density functional theory (DFT) dataset. We demonstrate the effectiveness of our framework by applying it to both organic (polyethylene glycol) and inorganic (L10GeP2S12) materials, achieving comparable or superior accuracy in reproducing physical properties compared to existing methods. Importantly, our method reduces the number of expensive DFT calculations by 10x compared to existing NNP generation methods, without sacrificing accuracy. Furthermore, the resulting student NNP achieves up to 106x speedup in inference compared to the teacher NNP, enabling significantly faster and more efficient MD simulations.

24 Oct 2025

Runtime optimization of the quantum computing within a given computational resource is important to achieve practical quantum advantage. In this paper, we propose a runtime reduction protocol for the lattice surgery, which utilizes the soft information corresponding to the logical measurement error. Our proposal is a simple two-step protocol: operating the lattice surgery with the small number of syndrome measurement cycles, and reexecuting it with full syndrome measurement cycles in cases where the time-like soft information catches logical error symptoms. We firstly discuss basic features of the time-like complementary gap as the concrete example of the time-like soft information based on numerical results. Then, we show that our protocol surpasses the existing runtime reduction protocol called temporally encoded lattice surgery (TELS) for the most cases. In addition, we confirm that the combination of our protocol and the TELS protocol can reduce the runtime further, over 50% in comparison to the naive serial execution of the lattice surgery. The proposed protocol in this paper can be applied to any quantum computing architecture based on the lattice surgery, and we expect that this will be one of the fundamental building blocks of runtime optimization to achieve practical scale quantum computing.

Quantized neural network training optimizes a discrete, non-differentiable objective. The straight-through estimator (STE) enables backpropagation through surrogate gradients and is widely used. While previous studies have primarily focused on the properties of surrogate gradients and their convergence, the influence of quantization hyperparameters, such as bit width and quantization range, on learning dynamics remains largely unexplored. We theoretically show that in the high-dimensional limit, STE dynamics converge to a deterministic ordinary differential equation. This reveals that STE training exhibits a plateau followed by a sharp drop in generalization error, with plateau length depending on the quantization range. A fixed-point analysis quantifies the asymptotic deviation from the unquantized linear model. We also extend analytical techniques for stochastic gradient descent to nonlinear transformations of weights and inputs.

This paper studies a distributionally robust portfolio optimization model

with a cardinality constraint for limiting the number of invested assets. We

formulate this model as a mixed-integer semidefinite optimization (MISDO)

problem by means of the moment-based ambiguity set of probability distributions

of asset returns. To exactly solve large-scale problems, we propose a

specialized cutting-plane algorithm that is based on bilevel optimization

reformulation. We prove the finite convergence of the algorithm. We also apply

a matrix completion technique to lower-level SDO problems to make their problem

sizes much smaller. Numerical experiments demonstrate that our cutting-plane

algorithm is significantly faster than the state-of-the-art MISDO solver

SCIP-SDP. We also show that our portfolio optimization model can achieve good

investment performance compared with the conventional robust optimization model

based on the ellipsoidal uncertainty set.

Chinzei et al. established a fundamental trade-off between gradient measurement efficiency and expressivity in deep quantum neural networks, proving that more expressive networks incur higher measurement costs. The authors introduced the Stabilizer-Logical Product Ansatz (SLPA), a quantum neural network architecture that achieves this theoretical efficiency limit, reducing the total measurement shots needed for training by factors like eight for certain tasks while maintaining performance.

Proteins are complex biomolecules that perform a variety of crucial functions within living organisms. Designing and generating novel proteins can pave the way for many future synthetic biology applications, including drug discovery. However, it remains a challenging computational task due to the large modeling space of protein structures. In this study, we propose a latent diffusion model that can reduce the complexity of protein modeling while flexibly capturing the distribution of natural protein structures in a condensed latent space. Specifically, we propose an equivariant protein autoencoder that embeds proteins into a latent space and then uses an equivariant diffusion model to learn the distribution of the latent protein representations. Experimental results demonstrate that our method can effectively generate novel protein backbone structures with high designability and efficiency. The code will be made publicly available at this https URL

Simulation of quantum many-body systems is a promising application of quantum computers. However, implementing the time-evolution operator as a quantum circuit efficiently on near-term devices with limited resources is challenging. Standard approaches like Trotterization often require deep circuits, making them impractical. This paper proposes a hybrid quantum-classical algorithm called Local Subspace Variational Quantum Compilation (LSVQC) for compiling the time-evolution operator. The LSVQC uses variational optimization to reproduce the action of the target time-evolution operator within a physically reasonable subspace. Optimization is performed on small local subsystems based on the Lieb-Robinson bound, allowing for cost function evaluation using small-scale quantum devices or classical computers. Numerical simulations on a spin-lattice model and an ab initio effective model of strongly correlated material Sr2CuO3 demonstrate the algorithm's effectiveness. It is shown that the LSVQC achieves a 95% reduction in circuit depth compared to Trotterization while maintaining accuracy. The subspace restriction also reduces resource requirements and improves accuracy. Furthermore, we estimate the gate count needed to execute the quantum simulations using the LSVQC on near-term quantum computing architectures in the noisy intermediate-scale or early fault-tolerant quantum computing era. Our estimation suggests that the acceptable physical gate error rate for the LSVQC can be significantly larger than for Trotterization.

Fujitsu Research of America and Princeton University researchers develop Merge to Mix, a method that uses model merging to accelerate dataset mixture selection for fine-tuning language models, demonstrating that averaging parameters from models individually fine-tuned on each dataset can serve as an effective surrogate for mixture-fine-tuned models with strong correlation (enabling efficient exploration of dataset combinations without costly fine-tuning evaluations) and consistently outperforming similarity-based selection baselines across computer vision and language tasks.

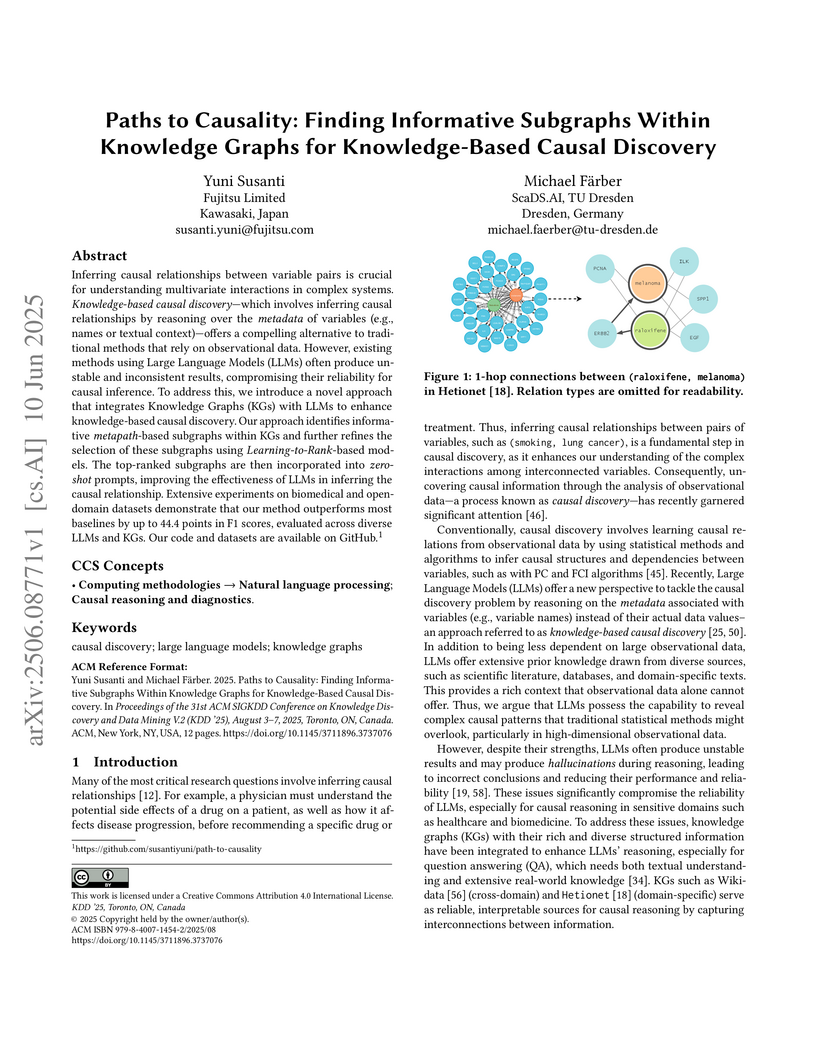

A method called "Paths to Causality" enhances large language model reliability for causal discovery by intelligently integrating knowledge graphs through a specialized subgraph ranking framework. It achieved up to a 44.4-point F1 score increase over ungrounded LLM baselines and outperformed traditional statistical causal inference methods.

13 Aug 2025

A collaborative team from Fujitsu Research of Europe, Fujitsu Limited, and Cohere developed MAPS, the first multilingual benchmark for agentic AI systems across 12 languages. The benchmark demonstrates that agent performance can degrade by up to 16% and security vulnerabilities can increase by up to 17% in non-English settings, with the impact varying significantly by task type.

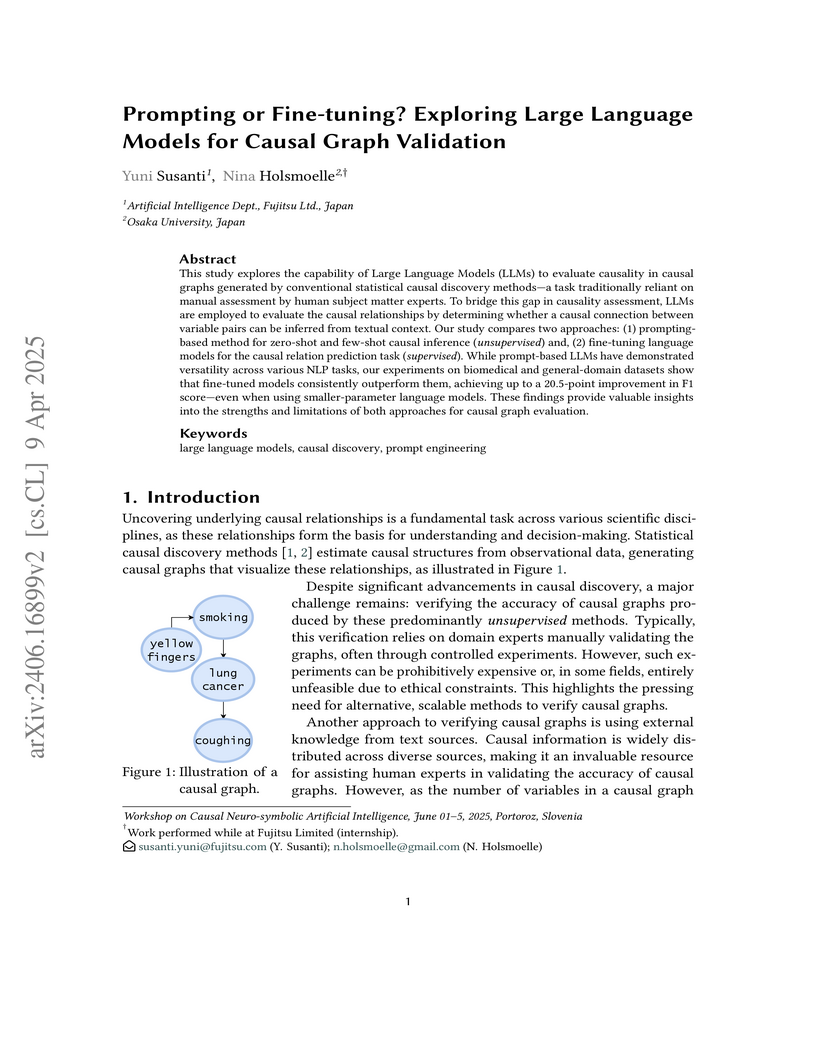

This study explores the capability of Large Language Models (LLMs) to

evaluate causality in causal graphs generated by conventional statistical

causal discovery methods-a task traditionally reliant on manual assessment by

human subject matter experts. To bridge this gap in causality assessment, LLMs

are employed to evaluate the causal relationships by determining whether a

causal connection between variable pairs can be inferred from textual context.

Our study compares two approaches: (1) prompting-based method for zero-shot and

few-shot causal inference and, (2) fine-tuning language models for the causal

relation prediction task. While prompt-based LLMs have demonstrated versatility

across various NLP tasks, our experiments on biomedical and general-domain

datasets show that fine-tuned models consistently outperform them, achieving up

to a 20.5-point improvement in F1 score-even when using smaller-parameter

language models. These findings provide valuable insights into the strengths

and limitations of both approaches for causal graph evaluation.

A new general-purpose solver called Parallel Quasi-Quantum Annealing (PQQA) is developed, integrating continuous relaxation with a physics-inspired annealing process and GPU-accelerated gradient-based sampling, enhanced by communication across parallel runs. This method achieves competitive and often superior speed-quality trade-offs for large-scale NP-hard combinatorial optimization problems compared to existing state-of-the-art approaches.

There are no more papers matching your filters at the moment.