Hefei University of Technology

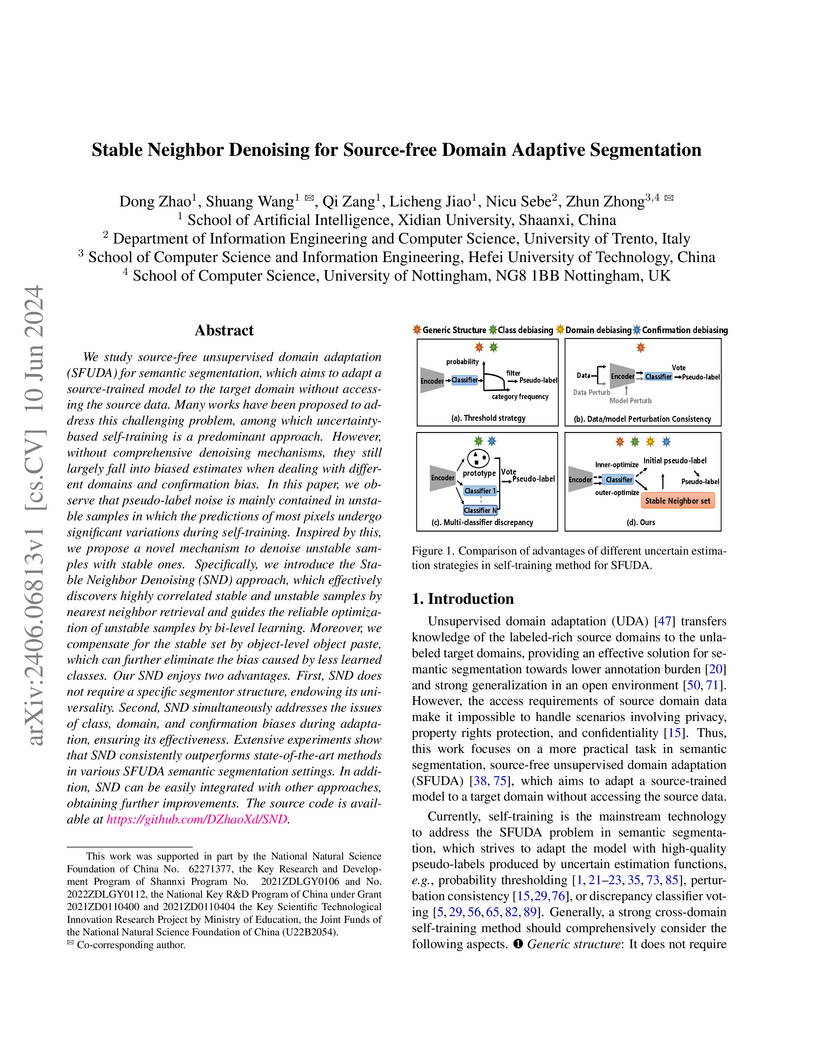

Researchers developed Stable Neighbor Denoising (SND), a method for Source-Free Unsupervised Domain Adaptive semantic segmentation that effectively denoises pseudo-labels by connecting stable and unstable target samples through bi-level optimization. It achieves state-of-the-art performance across diverse SFUDA benchmarks by addressing common biases and pseudo-label noise without requiring access to source data.

We present EgoBlind, the first egocentric VideoQA dataset collected from blind individuals to evaluate the assistive capabilities of contemporary multimodal large language models (MLLMs). EgoBlind comprises 1,392 first-person videos from the daily lives of blind and visually impaired individuals. It also features 5,311 questions directly posed or verified by the blind to reflect their in-situation needs for visual assistance. Each question has an average of 3 manually annotated reference answers to reduce subjectiveness. Using EgoBlind, we comprehensively evaluate 16 advanced MLLMs and find that all models struggle. The best performers achieve an accuracy near 60\%, which is far behind human performance of 87.4\%. To guide future advancements, we identify and summarize major limitations of existing MLLMs in egocentric visual assistance for the blind and explore heuristic solutions for improvement. With these efforts, we hope that EgoBlind will serve as a foundation for developing effective AI assistants to enhance the independence of the blind and visually impaired. Data and code are available at this https URL.

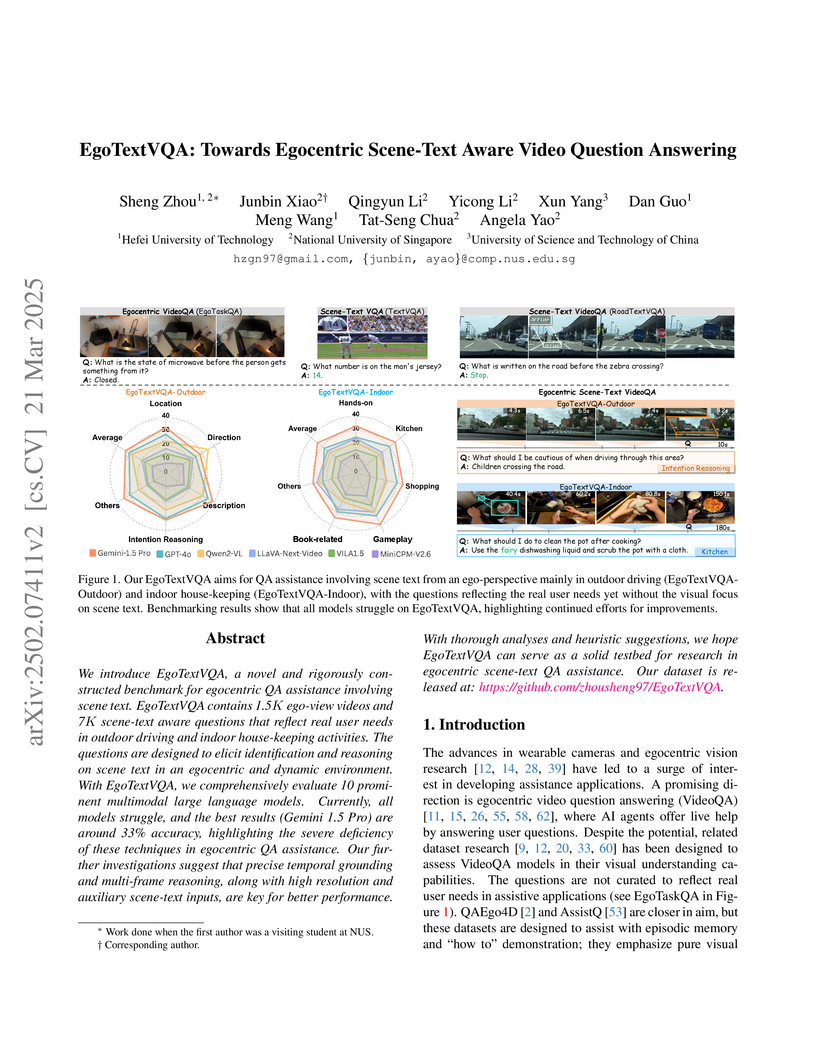

We introduce EgoTextVQA, a novel and rigorously constructed benchmark for

egocentric QA assistance involving scene text. EgoTextVQA contains 1.5K

ego-view videos and 7K scene-text aware questions that reflect real user needs

in outdoor driving and indoor house-keeping activities. The questions are

designed to elicit identification and reasoning on scene text in an egocentric

and dynamic environment. With EgoTextVQA, we comprehensively evaluate 10

prominent multimodal large language models. Currently, all models struggle, and

the best results (Gemini 1.5 Pro) are around 33\% accuracy, highlighting the

severe deficiency of these techniques in egocentric QA assistance. Our further

investigations suggest that precise temporal grounding and multi-frame

reasoning, along with high resolution and auxiliary scene-text inputs, are key

for better performance. With thorough analyses and heuristic suggestions, we

hope EgoTextVQA can serve as a solid testbed for research in egocentric

scene-text QA assistance. Our dataset is released at:

this https URL

Denoising diffusion probabilistic models (DDPMs) are becoming the leading paradigm for generative models. It has recently shown breakthroughs in audio synthesis, time series imputation and forecasting. In this paper, we propose Diffusion-TS, a novel diffusion-based framework that generates multivariate time series samples of high quality by using an encoder-decoder transformer with disentangled temporal representations, in which the decomposition technique guides Diffusion-TS to capture the semantic meaning of time series while transformers mine detailed sequential information from the noisy model input. Different from existing diffusion-based approaches, we train the model to directly reconstruct the sample instead of the noise in each diffusion step, combining a Fourier-based loss term. Diffusion-TS is expected to generate time series satisfying both interpretablity and realness. In addition, it is shown that the proposed Diffusion-TS can be easily extended to conditional generation tasks, such as forecasting and imputation, without any model changes. This also motivates us to further explore the performance of Diffusion-TS under irregular settings. Finally, through qualitative and quantitative experiments, results show that Diffusion-TS achieves the state-of-the-art results on various realistic analyses of time series.

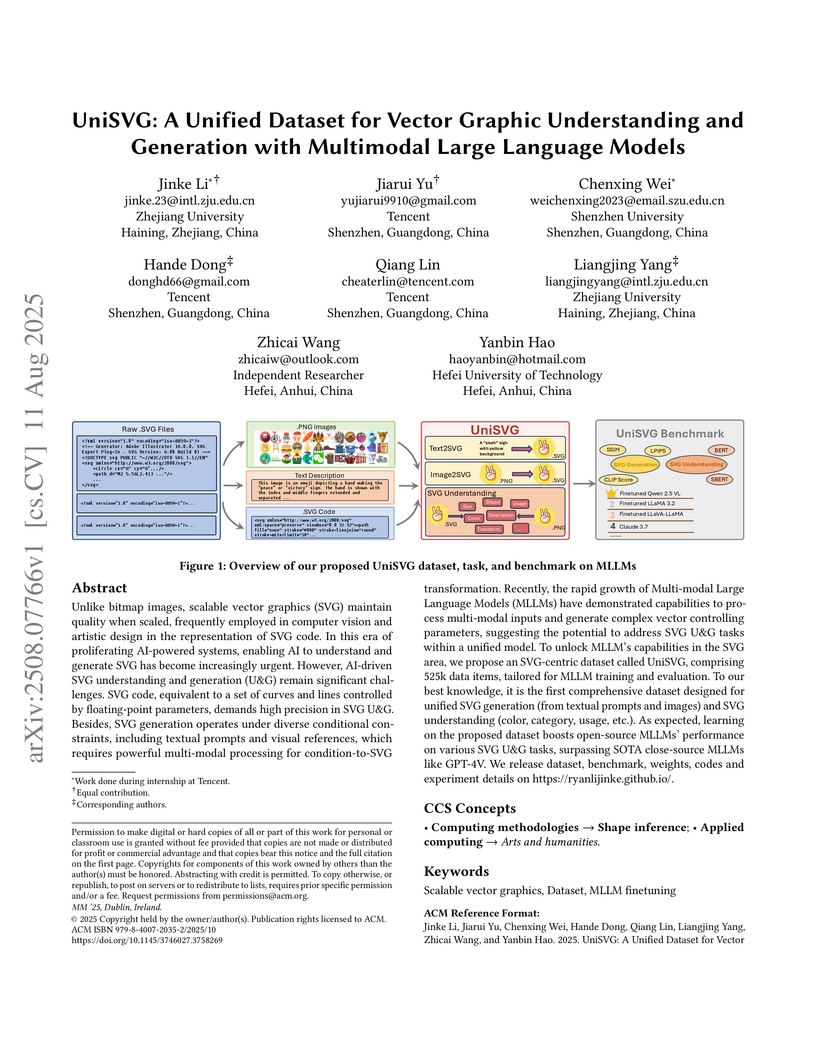

Unlike bitmap images, scalable vector graphics (SVG) maintain quality when scaled, frequently employed in computer vision and artistic design in the representation of SVG code. In this era of proliferating AI-powered systems, enabling AI to understand and generate SVG has become increasingly urgent. However, AI-driven SVG understanding and generation (U&G) remain significant challenges. SVG code, equivalent to a set of curves and lines controlled by floating-point parameters, demands high precision in SVG U&G. Besides, SVG generation operates under diverse conditional constraints, including textual prompts and visual references, which requires powerful multi-modal processing for condition-to-SVG transformation. Recently, the rapid growth of Multi-modal Large Language Models (MLLMs) have demonstrated capabilities to process multi-modal inputs and generate complex vector controlling parameters, suggesting the potential to address SVG U&G tasks within a unified model. To unlock MLLM's capabilities in the SVG area, we propose an SVG-centric dataset called UniSVG, comprising 525k data items, tailored for MLLM training and evaluation. To our best knowledge, it is the first comprehensive dataset designed for unified SVG generation (from textual prompts and images) and SVG understanding (color, category, usage, etc.). As expected, learning on the proposed dataset boosts open-source MLLMs' performance on various SVG U&G tasks, surpassing SOTA close-source MLLMs like GPT-4V. We release dataset, benchmark, weights, codes and experiment details on this https URL.

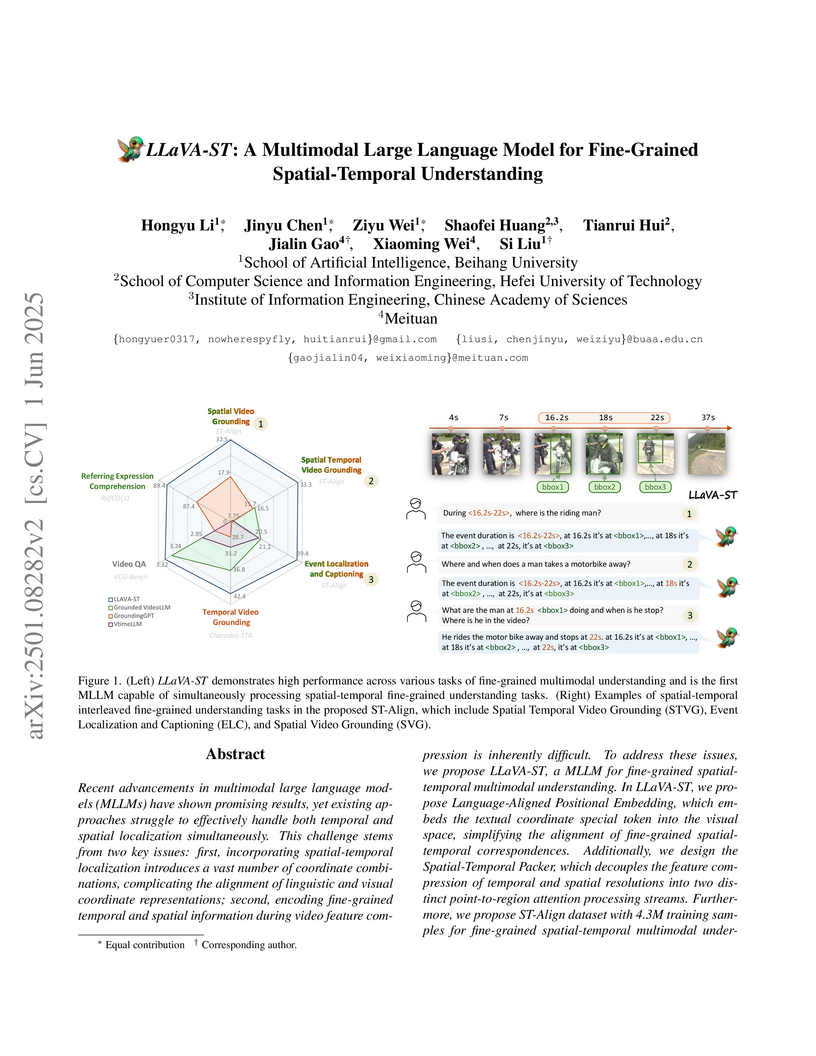

LLaVA-ST introduces a multimodal large language model capable of end-to-end fine-grained spatial, temporal, and interleaved spatial-temporal understanding in videos. The model sets new state-of-the-art performance across 11 diverse benchmarks, significantly improving localization accuracy and captioning.

TW-GRPO enhances visual reasoning in Multimodal Large Language Models by introducing token-level importance weighting for focused thinking and multi-level soft rewards for dense feedback. This framework achieved 50.4% accuracy on the CLEVRER benchmark, representing an 18.8% improvement over prior methods, and demonstrated more stable and efficient training dynamics.

15 Oct 2025

Researchers from the National University of Singapore and collaborators introduced FinDeepResearch, a benchmark and HisRubric evaluation framework designed to rigorously assess Deep Research (DR) agents in corporate financial analysis, measuring both structural coherence and information precision. The evaluation of state-of-the-art DR agents revealed that the highest-performing agent achieved only 37.9% accuracy, with significant challenges in complex interpretation and non-English financial markets.

This paper introduces Neural Graph Collaborative Filtering (NGCF), a novel framework that explicitly injects the collaborative signal from high-order connectivity into the embedding learning process using a message-passing architecture on the user-item graph. NGCF achieved improvements up to 11.97% in Recall@20 over strong baselines, demonstrating the benefit of propagating and aggregating affinity-aware messages across graph layers, particularly for users with sparse interaction data.

Multispectral object detection, which integrates information from multiple bands, can enhance detection accuracy and environmental adaptability, holding great application potential across various fields. Although existing methods have made progress in cross-modal interaction, low-light conditions, and model lightweight, there are still challenges like the lack of a unified single-stage framework, difficulty in balancing performance and fusion strategy, and unreasonable modality weight allocation. To address these, based on the YOLOv11 framework, we present YOLOv11-RGBT, a new comprehensive multimodal object detection framework. We designed six multispectral fusion modes and successfully applied them to models from YOLOv3 to YOLOv12 and RT-DETR. After reevaluating the importance of the two modalities, we proposed a P3 mid-fusion strategy and multispectral controllable fine-tuning (MCF) strategy for multispectral models. These improvements optimize feature fusion, reduce redundancy and mismatches, and boost overall model performance. Experiments show our framework excels on three major open-source multispectral object detection datasets, like LLVIP and FLIR. Particularly, the multispectral controllable fine-tuning strategy significantly enhanced model adaptability and robustness. On the FLIR dataset, it consistently improved YOLOv11 models' mAP by 3.41%-5.65%, reaching a maximum of 47.61%, verifying the framework and strategies' effectiveness. The code is available at: this https URL.

MustDrop introduces a multi-stage token dropping framework for Multimodal Large Language Models, systematically reducing visual token count throughout inference from vision encoding to decoding. This approach achieved an 88.5% FLOPs reduction on the LLaVA architecture while maintaining comparable accuracy on multimodal benchmarks.

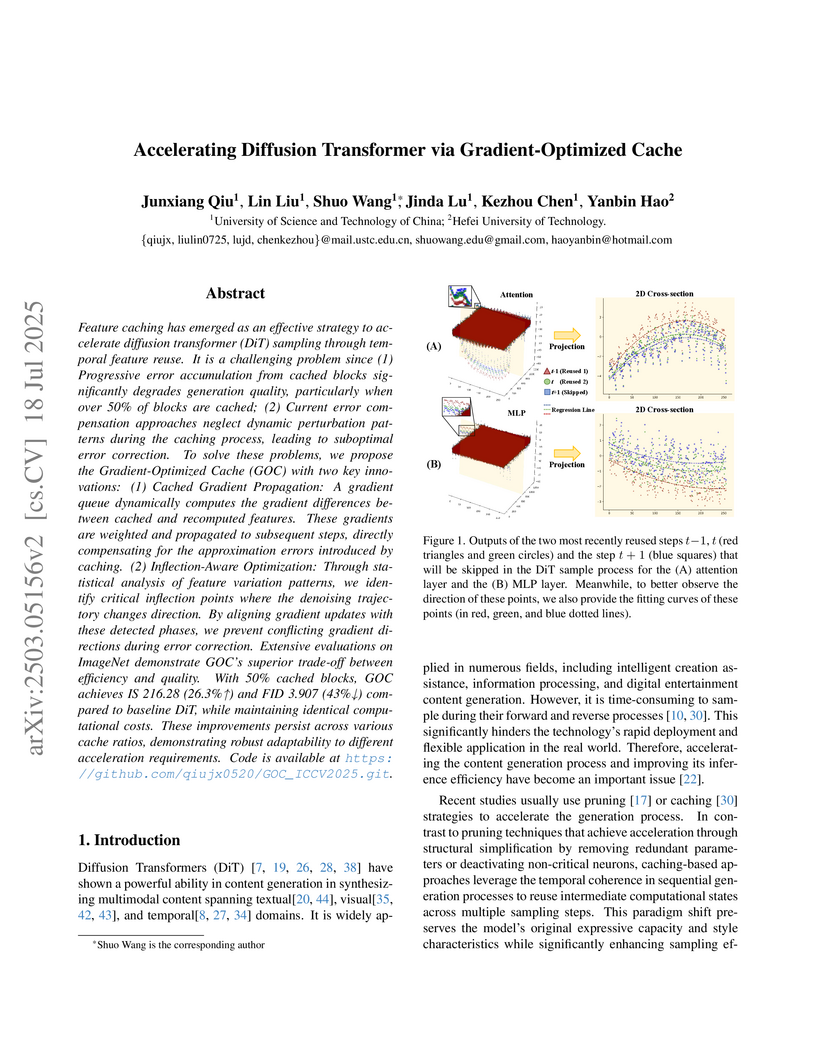

Researchers from the University of Science and Technology of China and Hefei University of Technology developed Gradient-Optimized Cache (GOC) to accelerate Diffusion Transformer (DiT) inference, effectively mitigating quality degradation from accumulated caching errors. GOC enhances generation quality, achieving up to a 26.3% improvement in Inception Score and a 43% reduction in FID for 50% cached models, with minimal additional computational overhead.

Kaleido is an open-sourced model for multi-subject reference video generation that synthesizes subject-consistent videos from multiple reference images, bridging the performance gap between open-source and proprietary systems. It achieved a subject consistency score of 0.956 and an S2V decoupling score of 0.319, outperforming existing open-source models and matching or exceeding closed-source counterparts in various metrics and user studies.

10 Feb 2023

Researchers from Macquarie University, Beihang University, and Hefei University of Technology systematically survey over 300 methods for automatic knowledge graph construction, organizing them into three stages: Knowledge Acquisition, Refinement, and Evolution. The work utilizes the Heterogeneous, Autonomous, Complex, and Evolving (HACE) theorem to frame challenges, providing a consolidated understanding of state-of-the-art approaches and outlining future research directions.

This work uncovers Multi-Embedding Attacks (MEA) as a critical vulnerability where multiple watermarks embedded into an image can destroy original forensic information, rendering proactive deepfake forensics ineffective. The proposed Adversarial Interference Simulation (AIS) training paradigm robustly mitigates MEA, reducing the average Bit Error Rate (BER) from over 38% to below 0.2% across state-of-the-art methods after multiple embedding attacks.

Text-guided image inpainting aims at reconstructing the masked regions as per text prompts, where the longstanding challenges lie in the preservation for unmasked regions, while achieving the semantics consistency between unmasked and inpainted masked regions. Previous arts failed to address both of them, always with either of them to be remedied. Such facts, as we observed, stem from the entanglement of the hybrid (e.g., mid-and-low) frequency bands that encode varied image properties, which exhibit different robustness to text prompts during the denoising process. In this paper, we propose a null-text-null frequency-aware diffusion models, dubbed \textbf{NTN-Diff}, for text-guided image inpainting, by decomposing the semantics consistency across masked and unmasked regions into the consistencies as per each frequency band, while preserving the unmasked regions, to circumvent two challenges in a row. Based on the diffusion process, we further divide the denoising process into early (high-level noise) and late (low-level noise) stages, where the mid-and-low frequency bands are disentangled during the denoising process. As observed, the stable mid-frequency band is progressively denoised to be semantically aligned during text-guided denoising process, which, meanwhile, serves as the guidance to the null-text denoising process to denoise low-frequency band for the masked regions, followed by a subsequent text-guided denoising process at late stage, to achieve the semantics consistency for mid-and-low frequency bands across masked and unmasked regions, while preserve the unmasked regions. Extensive experiments validate the superiority of NTN-Diff over the state-of-the-art diffusion models to text-guided diffusion models. Our code can be accessed from this https URL.

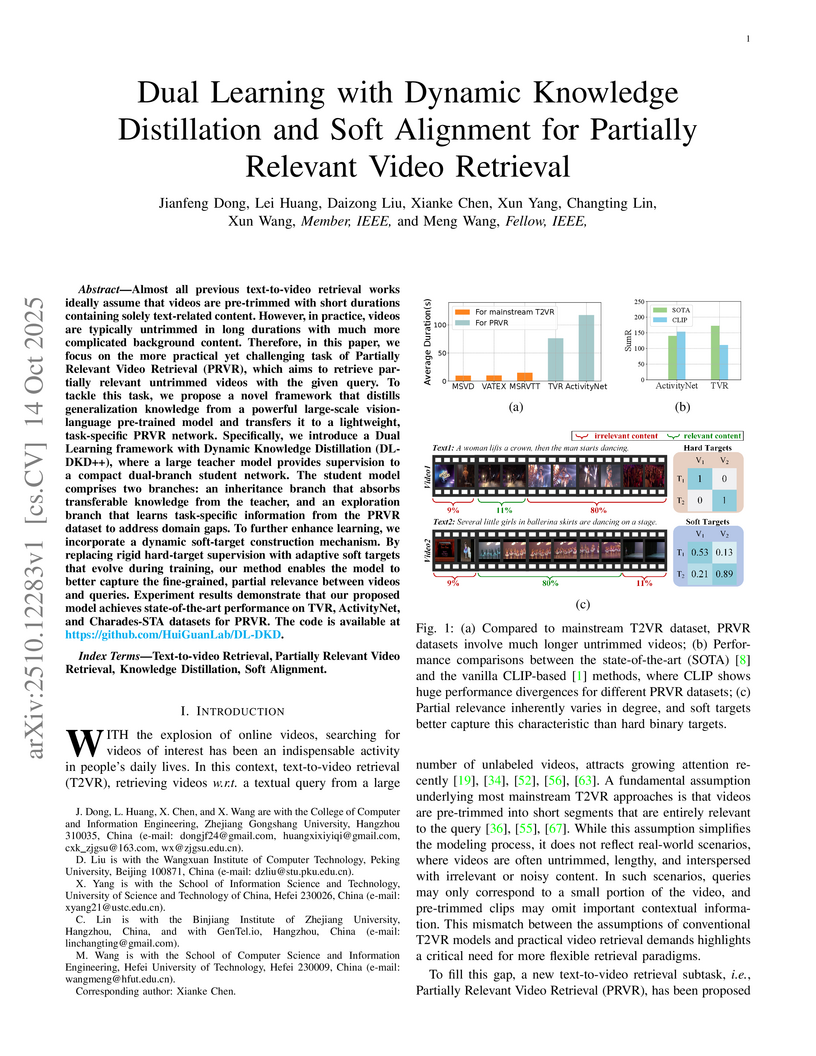

Researchers introduce DL-DKD++, a framework for partially relevant video retrieval that employs dual learning with dynamic knowledge distillation from large vision-language models and dynamic soft alignment for nuanced relevance scoring. The approach establishes new state-of-the-art performance on datasets like TVR (SumR 184.8), ActivityNet-Captions (SumR 149.9), and Charades-STA.

The swift advancement in Multimodal LLMs (MLLMs) also presents significant

challenges for effective knowledge editing. Current methods, including

intrinsic knowledge editing and external knowledge resorting, each possess

strengths and weaknesses, struggling to balance the desired properties of

reliability, generality, and locality when applied to MLLMs. In this paper, we

propose UniKE, a novel multimodal editing method that establishes a unified

perspective and paradigm for intrinsic knowledge editing and external knowledge

resorting. Both types of knowledge are conceptualized as vectorized key-value

memories, with the corresponding editing processes resembling the assimilation

and accommodation phases of human cognition, conducted at the same semantic

levels. Within such a unified framework, we further promote knowledge

collaboration by disentangling the knowledge representations into the semantic

and truthfulness spaces. Extensive experiments validate the effectiveness of

our method, which ensures that the post-edit MLLM simultaneously maintains

excellent reliability, generality, and locality. The code for UniKE is

available at \url{this https URL}.

Multimodal deepfake detection (MDD) aims to uncover manipulations across visual, textual, and auditory modalities, thereby reinforcing the reliability of modern information systems. Although large vision-language models (LVLMs) exhibit strong multimodal reasoning, their effectiveness in MDD is limited by challenges in capturing subtle forgery cues, resolving cross-modal inconsistencies, and performing task-aligned retrieval. To this end, we propose Guided Adaptive Scorer and Propagation In-Context Learning (GASP-ICL), a training-free framework for MDD. GASP-ICL employs a pipeline to preserve semantic relevance while injecting task-aware knowledge into LVLMs. We leverage an MDD-adapted feature extractor to retrieve aligned image-text pairs and build a candidate set. We further design the Graph-Structured Taylor Adaptive Scorer (GSTAS) to capture cross-sample relations and propagate query-aligned signals, producing discriminative exemplars. This enables precise selection of semantically aligned, task-relevant demonstrations, enhancing LVLMs for robust MDD. Experiments on four forgery types show that GASP-ICL surpasses strong baselines, delivering gains without LVLM fine-tuning.

We present \textbf{Met}a-\textbf{T}oken \textbf{Le}arning (Mettle), a simple and memory-efficient method for adapting large-scale pretrained transformer models to downstream audio-visual tasks. Instead of sequentially modifying the output feature distribution of the transformer backbone, Mettle utilizes a lightweight \textit{Layer-Centric Distillation (LCD)} module to distill in parallel the intact audio or visual features embedded by each transformer layer into compact meta-tokens. This distillation process considers both pretrained knowledge preservation and task-specific adaptation. The obtained meta-tokens can be directly applied to classification tasks, such as audio-visual event localization and audio-visual video parsing. To further support fine-grained segmentation tasks, such as audio-visual segmentation, we introduce a \textit{Meta-Token Injection (MTI)} module, which utilizes the audio and visual meta-tokens distilled from the top transformer layer to guide feature adaptation in earlier layers. Extensive experiments on multiple audiovisual benchmarks demonstrate that our method significantly reduces memory usage and training time while maintaining parameter efficiency and competitive accuracy.

There are no more papers matching your filters at the moment.