Hewlett Packard Enterprise

The recently released Ultra Ethernet (UE) 1.0 specification defines a transformative High-Performance Ethernet standard for future Artificial Intelligence (AI) and High-Performance Computing (HPC) systems. This paper, written by the specification's authors, provides a high-level overview of UE's design, offering crucial motivations and scientific context to understand its innovations. While UE introduces advancements across the entire Ethernet stack, its standout contribution is the novel Ultra Ethernet Transport (UET), a potentially fully hardware-accelerated protocol engineered for reliable, fast, and efficient communication in extreme-scale systems. Unlike InfiniBand, the last major standardization effort in high-performance networking over two decades ago, UE leverages the expansive Ethernet ecosystem and the 1,000x gains in computational efficiency per moved bit to deliver a new era of high-performance networking.

31 Jan 2025

University of Waterloo

University of Waterloo University of California, Santa Barbara

University of California, Santa Barbara Perimeter Institute for Theoretical PhysicsFermi National Accelerator LaboratoryUniversity of Wisconsin–MadisonApplied MaterialsQuantum MachinesHewlett Packard LabsHewlett Packard Enterprise1QB Information Technologies (1QBit)SynopsysUSRA Research Institute for Advanced Computer ScienceQolabNASA, Ames Research Center

Perimeter Institute for Theoretical PhysicsFermi National Accelerator LaboratoryUniversity of Wisconsin–MadisonApplied MaterialsQuantum MachinesHewlett Packard LabsHewlett Packard Enterprise1QB Information Technologies (1QBit)SynopsysUSRA Research Institute for Advanced Computer ScienceQolabNASA, Ames Research CenterA roadmap outlines how to build utility-scale quantum supercomputers by leveraging industrial semiconductor manufacturing processes and integrating quantum processing units as specialized accelerators within classical HPC systems, aiming to scale from hundreds to millions of qubits. The analysis provides resource estimates for complex chemistry problems, indicating that even with future hardware, millions of physical qubits and multi-year runtimes are needed for practical utility.

This paper critically re-evaluates RDMA over Converged Ethernet (RoCE) in hyperscale datacenter and HPC environments, identifying fundamental design limitations that hinder performance, scalability, and security for modern AI, HPC, and storage workloads. The analysis concludes that RoCE's inherited InfiniBand principles are ill-suited for contemporary Ethernet, necessitating a paradigm shift towards a modernized high-performance Ethernet.

We present an optimized implementation of the recently proposed information geometric regularization (IGR) for unprecedented scale simulation of compressible fluid flows applied to multi-engine spacecraft boosters. We improve upon state-of-the-art computational fluid dynamics (CFD) techniques along computational cost, memory footprint, and energy-to-solution metrics. Unified memory on coupled CPU--GPU or APU platforms increases problem size with negligible overhead. Mixed half/single-precision storage and computation on well-conditioned numerics is used. We simulate flow at 200 trillion grid points and 1 quadrillion degrees of freedom, exceeding the current record by a factor of 20. A factor of 4 wall-time speedup is achieved over optimized baselines. Ideal weak scaling is seen on OLCF Frontier, LLNL El Capitan, and CSCS Alps using the full systems. Strong scaling is near ideal at extreme conditions, including 80% efficiency on CSCS Alps with an 8-node baseline and stretching to the full system.

Machine learning has driven an exponential increase in computational demand,

leading to massive data centers that consume significant amounts of energy and

contribute to climate change. This makes sustainable data center control a

priority. In this paper, we introduce SustainDC, a set of Python environments

for benchmarking multi-agent reinforcement learning (MARL) algorithms for data

centers (DC). SustainDC supports custom DC configurations and tasks such as

workload scheduling, cooling optimization, and auxiliary battery management,

with multiple agents managing these operations while accounting for the effects

of each other. We evaluate various MARL algorithms on SustainDC, showing their

performance across diverse DC designs, locations, weather conditions, grid

carbon intensity, and workload requirements. Our results highlight significant

opportunities for improvement of data center operations using MARL algorithms.

Given the increasing use of DC due to AI, SustainDC provides a crucial platform

for the development and benchmarking of advanced algorithms essential for

achieving sustainable computing and addressing other heterogeneous real-world

challenges.

26 May 2025

This survey analyzes how classical software design patterns, in conjunction with the emerging Model Context Protocol (MCP), can establish a principled framework for robust, scalable, and secure communication in Large Language Model-driven multi-agent systems. The work demonstrates that these patterns and MCP reduce communication complexity and enhance real-world applications in high-stakes domains like financial services.

16 Jan 2025

CNRS

CNRS Sun Yat-Sen University

Sun Yat-Sen University University of Southern CaliforniaGhent University

University of Southern CaliforniaGhent University Tsinghua University

Tsinghua University Stanford University

Stanford University Université Paris-Saclay

Université Paris-Saclay CEA

CEA Princeton UniversityNational Institute of Standards and TechnologyThe University of British ColumbiaNorth Carolina State UniversityUniversity of TrentoIMECÉcole Polytechnique Fédérale de LausanneNational Research CouncilNTT CorporationLeibniz-Institute of Photonic TechnologyLetiHewlett Packard LabsHewlett Packard EnterpriseSapienza UniversityInstitut FEMTO-STInstitut National de la Recherche Scientifique-Énergie Matériaux Télécommunications (IN)Université Franche Comte CNRSUniversit

Grenoble AlpesEnrico Fermi” Research CenterQueens

’ University

Princeton UniversityNational Institute of Standards and TechnologyThe University of British ColumbiaNorth Carolina State UniversityUniversity of TrentoIMECÉcole Polytechnique Fédérale de LausanneNational Research CouncilNTT CorporationLeibniz-Institute of Photonic TechnologyLetiHewlett Packard LabsHewlett Packard EnterpriseSapienza UniversityInstitut FEMTO-STInstitut National de la Recherche Scientifique-Énergie Matériaux Télécommunications (IN)Université Franche Comte CNRSUniversit

Grenoble AlpesEnrico Fermi” Research CenterQueens

’ UniversityThis roadmap consolidates recent advances while exploring emerging applications, reflecting the remarkable diversity of hardware platforms, neuromorphic concepts, and implementation philosophies reported in the field. It emphasizes the critical role of cross-disciplinary collaboration in this rapidly evolving field.

We propose a self-correction mechanism for Large Language Models (LLMs) to

mitigate issues such as toxicity and fact hallucination. This method involves

refining model outputs through an ensemble of critics and the model's own

feedback. Drawing inspiration from human behavior, we explore whether LLMs can

emulate the self-correction process observed in humans who often engage in

self-reflection and seek input from others to refine their understanding of

complex topics. Our approach is model-agnostic and can be applied across

various domains to enhance trustworthiness by addressing fairness, bias, and

robustness concerns. We consistently observe performance improvements in LLMs

for reducing toxicity and correcting factual errors.

27 Nov 2024

Analog In-memory Computing (IMC) has demonstrated energy-efficient and low latency implementation of convolution and fully-connected layers in deep neural networks (DNN) by using physics for computing in parallel resistive memory arrays. However, recurrent neural networks (RNN) that are widely used for speech-recognition and natural language processing have tasted limited success with this approach. This can be attributed to the significant time and energy penalties incurred in implementing nonlinear activation functions that are abundant in such models. In this work, we experimentally demonstrate the implementation of a non-linear activation function integrated with a ramp analog-to-digital conversion (ADC) at the periphery of the memory to improve in-memory implementation of RNNs. Our approach uses an extra column of memristors to produce an appropriately pre-distorted ramp voltage such that the comparator output directly approximates the desired nonlinear function. We experimentally demonstrate programming different nonlinear functions using a memristive array and simulate its incorporation in RNNs to solve keyword spotting and language modelling tasks. Compared to other approaches, we demonstrate manifold increase in area-efficiency, energy-efficiency and throughput due to the in-memory, programmable ramp generator that removes digital processing overhead.

Improving Network Threat Detection by Knowledge Graph, Large Language Model, and Imbalanced Learning

Improving Network Threat Detection by Knowledge Graph, Large Language Model, and Imbalanced Learning

Network threat detection has been challenging due to the complexities of attack activities and the limitation of historical threat data to learn from. To help enhance the existing practices of using analytics, machine learning, and artificial intelligence methods to detect the network threats, we propose an integrated modelling framework, where Knowledge Graph is used to analyze the users' activity patterns, Imbalanced Learning techniques are used to prune and weigh Knowledge Graph, and LLM is used to retrieve and interpret the users' activities from Knowledge Graph. The proposed framework is applied to Agile Threat Detection through Online Sequential Learning. The preliminary results show the improved threat capture rate by 3%-4% and the increased interpretabilities of risk predictions based on the users' activities.

12 Feb 2024

The 6G-GOALS project proposes a paradigm shift for 6G networks from traditional bit-level communication to semantic and goal-oriented communication, aiming for efficient and sustainable AI-Native interactions. It outlines a novel O-RAN-aligned architecture with a 'semantic plane' and details foundational research pillars, alongside two planned proof-of-concepts for real-time semantic communication and cooperative robotics.

Research into optical spiking neural networks (SNNs) has primarily focused on spiking devices, networks of excitable lasers or numerical modelling of large architectures, often overlooking key constraints such as limited optical power, crosstalk and footprint. We introduce SEPhIA, a photonic-electronic, multi-tiled SNN architecture emphasizing implementation feasibility and realistic scaling. SEPhIA leverages microring resonator modulators (MRMs) and multi-wavelength sources to achieve effective sub-one-laser-per-spiking neuron efficiency. We validate SEPhIA at both device and architecture levels by time-domain co-simulating excitable CMOS-MRR coupled circuits and by devising a physics-aware, trainable optoelectronic SNN model, with both approaches utilizing experimentally derived device parameters. The multi-layer optoelectronic SNN achieves classification accuracies over 90% on a four-class spike-encoded dataset, closely comparable to software models. A design space study further quantifies how photonic device parameters impact SNN performance under constrained signal-to-noise conditions. SEPhIA offers a scalable, expressive, physically grounded solution for neuromorphic photonic computing, capable of addressing spike-encoded tasks.

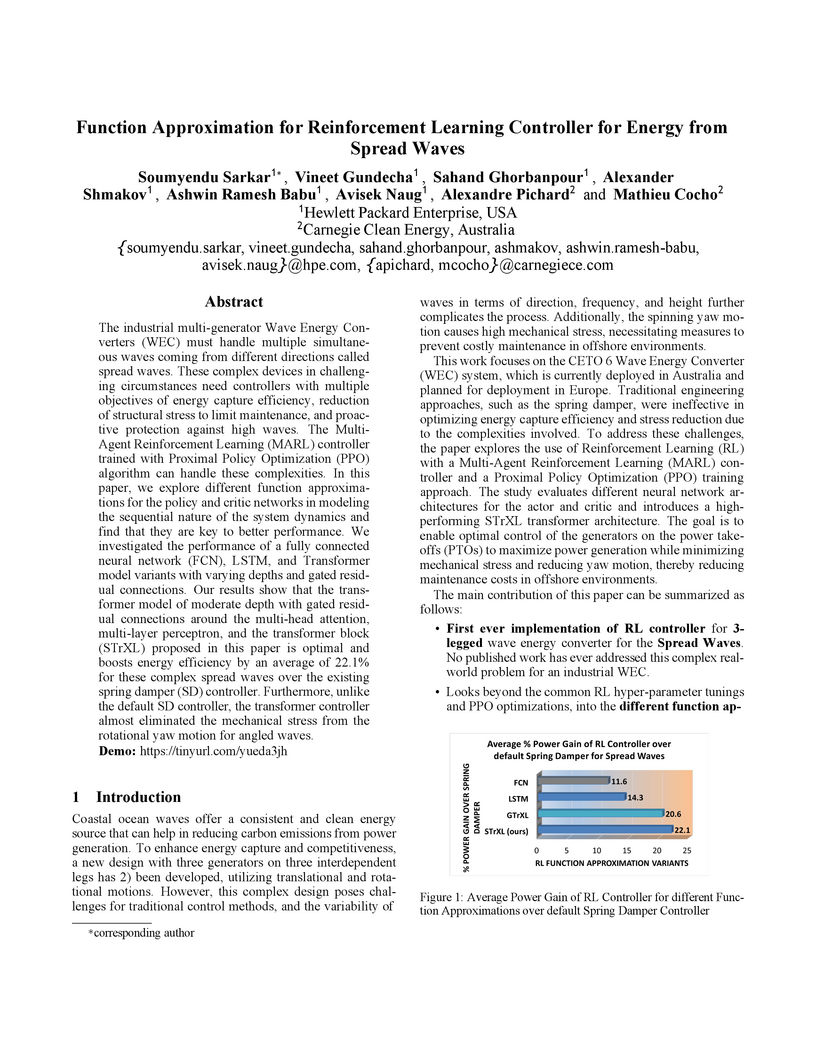

The industrial multi-generator Wave Energy Converters (WEC) must handle

multiple simultaneous waves coming from different directions called spread

waves. These complex devices in challenging circumstances need controllers with

multiple objectives of energy capture efficiency, reduction of structural

stress to limit maintenance, and proactive protection against high waves. The

Multi-Agent Reinforcement Learning (MARL) controller trained with the Proximal

Policy Optimization (PPO) algorithm can handle these complexities. In this

paper, we explore different function approximations for the policy and critic

networks in modeling the sequential nature of the system dynamics and find that

they are key to better performance. We investigated the performance of a fully

connected neural network (FCN), LSTM, and Transformer model variants with

varying depths and gated residual connections. Our results show that the

transformer model of moderate depth with gated residual connections around the

multi-head attention, multi-layer perceptron, and the transformer block (STrXL)

proposed in this paper is optimal and boosts energy efficiency by an average of

22.1% for these complex spread waves over the existing spring damper (SD)

controller. Furthermore, unlike the default SD controller, the transformer

controller almost eliminated the mechanical stress from the rotational yaw

motion for angled waves. Demo: this https URL

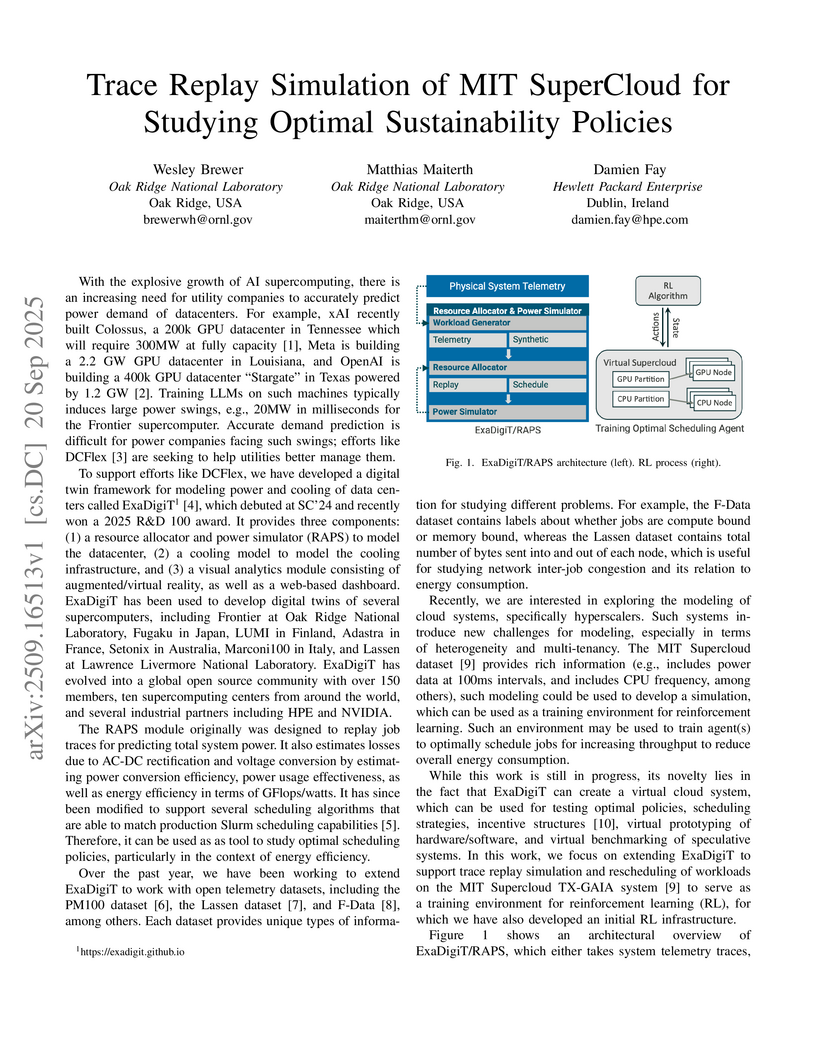

The rapid growth of AI supercomputing is creating unprecedented power demands, with next-generation GPU datacenters requiring hundreds of megawatts and producing fast, large swings in consumption. To address the resulting challenges for utilities and system operators, we extend ExaDigiT, an open-source digital twin framework for modeling power, cooling, and scheduling of supercomputers. Originally developed for replaying traces from leadership-class HPC systems, ExaDigiT now incorporates heterogeneity, multi-tenancy, and cloud-scale workloads. In this work, we focus on trace replay and rescheduling of jobs on the MIT SuperCloud TX-GAIA system to enable reinforcement learning (RL)-based experimentation with sustainability policies. The RAPS module provides a simulation environment with detailed power and performance statistics, supporting the study of scheduling strategies, incentive structures, and hardware/software prototyping. Preliminary RL experiments using Proximal Policy Optimization demonstrate the feasibility of learning energy-aware scheduling decisions, highlighting ExaDigiT's potential as a platform for exploring optimal policies to improve throughput, efficiency, and sustainability.

Many problems of interest in engineering, medicine, and the fundamental

sciences rely on high-fidelity flow simulation, making performant computational

fluid dynamics solvers a mainstay of the open-source software community. A

previous work (Bryngelson et al., Comp. Phys. Comm. (2021)) published MFC 3.0

with numerous physical features, numerics, and scalability. MFC 5.0 is a marked

update to MFC 3.0, including a broad set of well-established and novel physical

models and numerical methods, and the introduction of XPU acceleration. We

exhibit state-of-the-art performance and ideal scaling on the first two

exascale supercomputers, OLCF Frontier and LLNL El Capitan. Combined with MFC's

single-accelerator performance, MFC achieves exascale computation in practice.

New physical features include the immersed boundary method, N-fluid phase

change, Euler--Euler and Euler--Lagrange sub-grid bubble models,

fluid-structure interaction, hypo- and hyper-elastic materials, chemically

reacting flow, two-material surface tension, magnetohydrodynamics (MHD), and

more. Numerical techniques now represent the current state-of-the-art,

including general relaxation characteristic boundary conditions, WENO variants,

Strang splitting for stiff sub-grid flow features, and low Mach number

treatments. Weak scaling to tens of thousands of GPUs on OLCF Summit and

Frontier and LLNL El Capitan sees efficiencies within 5% of ideal to their full

system sizes. Strong scaling results for a 16-times increase in device count

show parallel efficiencies over 90% on OLCF Frontier. MFC's software stack has

improved, including continuous integration, ensuring code resilience and

correctness through over 300 regression tests; metaprogramming, reducing code

length and maintaining performance portability; and code generation for

computing chemical reactions.

17 Sep 2025

AI training and inference impose sustained, fine-grain I/O that stresses host-mediated, TCP-based storage paths. Motivated by kernel-bypass networking and user-space storage stacks, we revisit POSIX-compatible object storage for GPU-centric pipelines. We present ROS2, an RDMA-first object storage system design that offloads the DAOS client to an NVIDIA BlueField-3 SmartNIC while leaving the DAOS I/O engine unchanged on the storage server. ROS2 separates a lightweight control plane (gRPC for namespace and capability exchange) from a high-throughput data plane (UCX/libfabric over RDMA or TCP) and removes host mediation from the data path.

Using FIO/DFS across local and remote configurations, we find that on server-grade CPUs RDMA consistently outperforms TCP for both large sequential and small random I/O. When the RDMA-driven DAOS client is offloaded to BlueField-3, end-to-end performance is comparable to the host, demonstrating that SmartNIC offload preserves RDMA efficiency while enabling DPU-resident features such as multi-tenant isolation and inline services (e.g., encryption/decryption) close to the NIC. In contrast, TCP on the SmartNIC lags host performance, underscoring the importance of RDMA for offloaded deployments.

Overall, our results indicate that an RDMA-first, SmartNIC-offloaded object-storage stack is a practical foundation for scaling data delivery in modern LLM training environments; integrating optional GPU-direct placement for LLM tasks is left for future work.

Fusion energy research increasingly depends on the ability to integrate heterogeneous, multimodal datasets from high-resolution diagnostics, control systems, and multiscale simulations. The sheer volume and complexity of these datasets demand the development of new tools capable of systematically harmonizing and extracting knowledge across diverse modalities. The Data Fusion Labeler (dFL) is introduced as a unified workflow instrument that performs uncertainty-aware data harmonization, schema-compliant data fusion, and provenance-rich manual and automated labeling at scale. By embedding alignment, normalization, and labeling within a reproducible, operator-order-aware framework, dFL reduces time-to-analysis by greater than 50X (e.g., enabling >200 shots/hour to be consistently labeled rather than a handful per day), enhances label (and subsequently training) quality, and enables cross-device comparability. Case studies from DIII-D demonstrate its application to automated ELM detection and confinement regime classification, illustrating its potential as a core component of data-driven discovery, model validation, and real-time control in future burning plasma devices.

As machine learning workloads significantly increase energy consumption, sustainable data centers with low carbon emissions are becoming a top priority for governments and corporations worldwide. This requires a paradigm shift in optimizing power consumption in cooling and IT loads, shifting flexible loads based on the availability of renewable energy in the power grid, and leveraging battery storage from the uninterrupted power supply in data centers, using collaborative agents. The complex association between these optimization strategies and their dependencies on variable external factors like weather and the power grid carbon intensity makes this a hard problem. Currently, a real-time controller to optimize all these goals simultaneously in a dynamic real-world setting is lacking. We propose a Data Center Carbon Footprint Reduction (DC-CFR) multi-agent Reinforcement Learning (MARL) framework that optimizes data centers for the multiple objectives of carbon footprint reduction, energy consumption, and energy cost. The results show that the DC-CFR MARL agents effectively resolved the complex interdependencies in optimizing cooling, load shifting, and energy storage in real-time for various locations under real-world dynamic weather and grid carbon intensity conditions. DC-CFR significantly outperformed the industry standard ASHRAE controller with a considerable reduction in carbon emissions (14.5%), energy usage (14.4%), and energy cost (13.7%) when evaluated over one year across multiple geographical regions.

HPC systems use monitoring and operational data analytics to ensure efficiency, performance, and orderly operations. Application-specific insights are crucial for analyzing the increasing complexity and diversity of HPC workloads, particularly through the identification of unknown software and recognition of repeated executions, which facilitate system optimization and security improvements. However, traditional identification methods using job or file names are unreliable for arbitrary user-provided names (this http URL). Fuzzy hashing of executables detects similarities despite changes in executable version or compilation approach while preserving privacy and file integrity, overcoming these limitations. We introduce SIREN, a process-level data collection framework for software identification and recognition. SIREN improves observability in HPC by enabling analysis of process metadata, environment information, and executable fuzzy hashes. Findings from a first opt-in deployment campaign on LUMI show SIREN's ability to provide insights into software usage, recognition of repeated executions of known applications, and similarity-based identification of unknown applications.

23 Dec 2024

There is a continuing interest in using standard language constructs for

accelerated computing in order to avoid (sometimes vendor-specific) external

APIs. For Fortran codes, the {\tt do concurrent} (DC) loop has been

successfully demonstrated on the NVIDIA platform. However, support for DC on

other platforms has taken longer to implement. Recently, Intel has added DC GPU

offload support to its compiler, as has HPE for AMD GPUs. In this paper, we

explore the current portability of using DC across GPU vendors using the

in-production solar surface flux evolution code, HipFT. We discuss

implementation and compilation details, including when/where using directive

APIs for data movement is needed/desired compared to using a unified memory

system. The performance achieved on both data center and consumer platforms is

shown.

There are no more papers matching your filters at the moment.