IE University

The KG-RAG pipeline integrates Knowledge Graphs (KGs) with Large Language Models (LLMs) to enhance factual accuracy and reduce hallucinations in knowledge-intensive tasks. By grounding LLMs in a structured KG, the system achieved a 15% hallucination rate, halving that of a standard Embedding-RAG, while exhibiting lower Exact Match and F1 scores on complex questions.

23 Sep 2025

Mixed-Integer Quadratically Constrained Quadratic Programs arise in a variety of applications, particularly in energy, water, and gas systems, where discrete decisions interact with nonconvex quadratic constraints. These problems are computationally challenging due to the combination of combinatorial complexity and nonconvexity, often rendering traditional exact methods ineffective for large-scale instances. In this paper, we propose a solution framework for sparse MIQCQPs that integrates semidefinite programming relaxations with chordal decomposition techniques to exploit both term and correlative sparsity. By leveraging problem structure, we significantly reduce the size of the semidefinite constraints into smaller, tractable blocks, improving the scalability of the relaxation and the overall branch-and-bound procedure. We evaluate our framework on the Unit Commitment problem with AC Optimal Power Flow constraints to show that our method produces strong bounds and high-quality solutions on standard IEEE test cases up to 118 buses, demonstrating its effectiveness and scalability in solving MIQCQPs.

In this paper, we evaluate the creative fiction writing abilities of a

fine-tuned small language model (SLM), BART-large, and compare its performance

to human writers and two large language models (LLMs): GPT-3.5 and GPT-4o. Our

evaluation consists of two experiments: (i) a human study in which 68

participants rated short stories from humans and the SLM on grammaticality,

relevance, creativity, and attractiveness, and (ii) a qualitative linguistic

analysis examining the textual characteristics of stories produced by each

model. In the first experiment, BART-large outscored average human writers

overall (2.11 vs. 1.85), a 14% relative improvement, though the slight human

advantage in creativity was not statistically significant. In the second

experiment, qualitative analysis showed that while GPT-4o demonstrated

near-perfect coherence and used less cliche phrases, it tended to produce more

predictable language, with only 3% of its synopses featuring surprising

associations (compared to 15% for BART). These findings highlight how model

size and fine-tuning influence the balance between creativity, fluency, and

coherence in creative writing tasks, and demonstrate that smaller models can,

in certain contexts, rival both humans and larger models.

Researchers at Erasmus University and IE University developed the Autonomous Prompt Engineering Toolbox (APET), a system that enables GPT-4 to autonomously optimize its own prompts, reducing human dependency in prompt design. This approach led to accuracy improvements of up to 6.8% on tasks like geometric shapes and 4.4% on word sorting, while revealing limitations in highly specialized strategic domains.

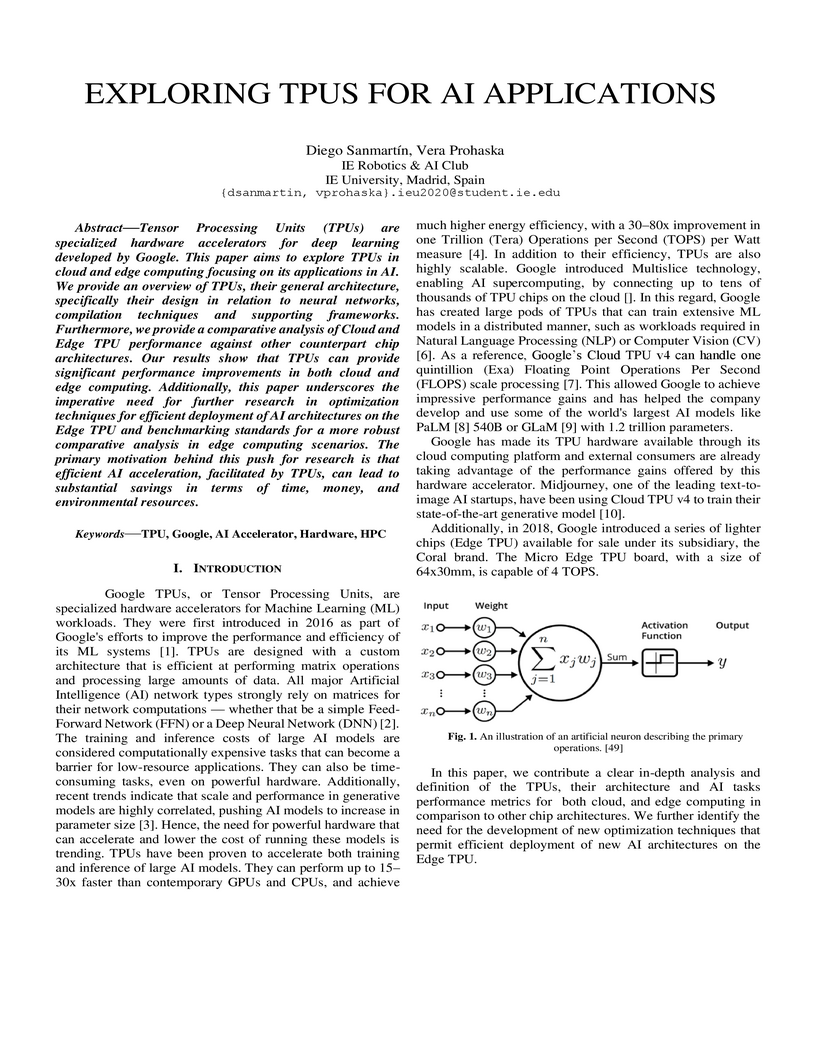

Tensor Processing Units (TPUs) are specialized hardware accelerators for deep

learning developed by Google. This paper aims to explore TPUs in cloud and edge

computing focusing on its applications in AI. We provide an overview of TPUs,

their general architecture, specifically their design in relation to neural

networks, compilation techniques and supporting frameworks. Furthermore, we

provide a comparative analysis of Cloud and Edge TPU performance against other

counterpart chip architectures. Our results show that TPUs can provide

significant performance improvements in both cloud and edge computing.

Additionally, this paper underscores the imperative need for further research

in optimization techniques for efficient deployment of AI architectures on the

Edge TPU and benchmarking standards for a more robust comparative analysis in

edge computing scenarios. The primary motivation behind this push for research

is that efficient AI acceleration, facilitated by TPUs, can lead to substantial

savings in terms of time, money, and environmental resources.

Tangible User Interfaces (TUI) for human--computer interaction (HCI) provide

the user with physical representations of digital information with the aim to

overcome the limitations of screen-based interfaces. Although many compelling

demonstrations of TUIs exist in the literature, there is a lack of research on

TUIs intended for daily two-handed tasks and processes, such as cooking. In

response to this gap, we propose SPICE (Smart Projection Interface for Cooking

Enhancement). SPICE investigates TUIs in a kitchen setting, aiming to transform

the recipe following experience from simply text-based to tangibly interactive.

SPICE uses a tracking system, an agent-based simulation software, and vision

large language models to create and interpret a kitchen environment where

recipe information is projected directly onto the cooking surface. We conducted

comparative usability and a validation studies of SPICE, with 30 participants.

The results show that participants using SPICE completed the recipe with far

less stops and in a substantially shorter time. Despite this, participants

self-reported negligible change in feelings of difficulty, which is a direction

for future research. Overall, the SPICE project demonstrates the potential of

using TUIs to improve everyday activities, paving the way for future research

in HCI and new computing interfaces.

Despite the abundance of current researches working on the sentiment analysis from videos and audios, finding the best model that gives the highest accuracy rate is still considered a challenge for researchers in this field. The main objective of this paper is to prove the usability of emotion recognition models that take video and audio inputs. The datasets used to train the models are the CREMA-D dataset for audio and the RAVDESS dataset for video. The fine-tuned models that been used are: Facebook/wav2vec2-large for audio and the Google/vivit-b-16x2-kinetics400 for video. The avarage of the probabilities for each emotion generated by the two previous models is utilized in the decision making framework. After disparity in the results, if one of the models gets much higher accuracy, another test framework is created. The methods used are the Weighted Average method, the Confidence Level Threshold method, the Dynamic Weighting Based on Confidence method, and the Rule-Based Logic method. This limited approach gives encouraging results that make future research into these methods viable.

11 Jun 2025

Understanding how housing prices respond to spatial accessibility, structural attributes, and typological distinctions is central to contemporary urban research and policy. In cities marked by affordability stress and market segmentation, models that offer both predictive capability and interpretive clarity are increasingly needed. This study applies discrete Bayesian networks to model residential price formation across Madrid, Barcelona, and Valencia using over 180,000 geo-referenced housing listings. The resulting probabilistic structures reveal distinct city-specific logics. Madrid exhibits amenity-driven stratification, Barcelona emphasizes typology and classification, while Valencia is shaped by spatial and structural fundamentals. By enabling joint inference, scenario simulation, and sensitivity analysis within a transparent framework, the approach advances housing analytics toward models that are not only accurate but actionable, interpretable, and aligned with the demands of equitable urban governance.

15 May 2025

The European Union Emissions Trading System (EU ETS) is a key policy tool for

reducing greenhouse gas emissions and advancing toward a net-zero economy.

Under this scheme, tradeable carbon credits, European Union Allowances (EUAs),

are issued to large emitters, who can buy and sell them on regulated markets.

We investigate the influence of financial, economic, and energy-related factors

on EUA futures prices using discrete and dynamic Bayesian networks to model

both contemporaneous and time-lagged dependencies. The analysis is based on

daily data spanning the third and fourth ETS trading phases (2013-2025),

incorporating a wide range of indicators including energy commodities, equity

indices, exchange rates, and bond markets. Results reveal that EUA pricing is

most influenced by energy commodities, especially coal and oil futures, and by

the performance of the European energy sector. Broader market sentiment,

captured through stock indices and volatility measures, affects EUA prices

indirectly via changes in energy demand. The dynamic model confirms a modest

next-day predictive influence from oil markets, while most other effects remain

contemporaneous. These insights offer regulators, institutional investors, and

firms subject to ETS compliance a clearer understanding of the interconnected

forces shaping the carbon market, supporting more effective hedging, investment

strategies, and policy design.

High-performance computing (HPC) is reshaping computational drug discovery by enabling large-scale, time-efficient molecular simulations. In this work, we explore HPC-driven pipelines for Alzheimer's disease drug discovery, focusing on virtual screening, molecular docking, and molecular dynamics simulations. We implemented a parallelised workflow using GROMACS with hybrid MPI-OpenMP strategies, benchmarking scaling performance across energy minimisation, equilibration, and production stages. Additionally, we developed a docking prototype that demonstrates significant runtime gains when moving from sequential execution to process-based parallelism using Python's multiprocessing library. Case studies on prolinamide derivatives and baicalein highlight the biological relevance of these workflows in targeting amyloid-beta and tau proteins. While limitations remain in data management, computational costs, and scaling efficiency, our results underline the potential of HPC to accelerate neurodegenerative drug discovery.

Forced variational integrators are given by the discretization of the Lagrange-d'Alembert principle for systems subject to external forces, and have proved useful for numerical simulation studies of complex dynamical systems. In this paper we model a passive walker with foot slip by using techniques of geometric mechanics, and we construct forced variational integrators for the system. Moreover, we present a methodology for generating (locally) optimal control policies for simple hybrid holonomically constrained forced Lagrangian systems, based on discrete mechanics, applied to a controlled walker with foot slip in a trajectory tracking problem.

Collaborative filtering models generally perform better than content-based filtering models and do not require careful feature engineering. However, in the cold-start scenario collaborative information may be scarce or even unavailable, whereas the content information may be abundant, but also noisy and expensive to acquire. Thus, selection of particular features that improve cold-start recommendations becomes an important and non-trivial task. In the recent approach by Nembrini et al., the feature selection is driven by the correlational compatibility between collaborative and content-based models. The problem is formulated as a Quadratic Unconstrained Binary Optimization (QUBO) which, due to its NP-hard complexity, is solved using Quantum Annealing on a quantum computer provided by D-Wave. Inspired by the reported results, we contend the idea that current quantum annealers are superior for this problem and instead focus on classical algorithms. In particular, we tackle QUBO via TTOpt, a recently proposed black-box optimizer based on tensor networks and multilinear algebra. We show the computational feasibility of this method for large problems with thousands of features, and empirically demonstrate that the solutions found are comparable to the ones obtained with D-Wave across all examined datasets.

When Artificial Intelligence (AI) is used to replace consumers (e.g., synthetic data), it is often assumed that AI emulates established consumers, and more generally human behaviors. Ten experiments with Large Language Models (LLMs) investigate if this is true in the domain of well-documented biases and heuristics. Across studies we observe four distinct types of deviations from human-like behavior. First, in some cases, LLMs reduce or correct biases observed in humans. Second, in other cases, LLMs amplify these same biases. Third, and perhaps most intriguingly, LLMs sometimes exhibit biases opposite to those found in humans. Fourth, LLMs' responses to the same (or similar) prompts tend to be inconsistent (a) within the same model after a time delay, (b) across models, and (c) among independent research studies. Such inconsistencies can be uncharacteristic of humans and suggest that, at least at one point, LLMs' responses differed from humans. Overall, unhuman-like responses are problematic when LLMs are used to mimic or predict consumer behavior. These findings complement research on synthetic consumer data by showing that sources of bias are not necessarily human-centric. They also contribute to the debate about the tasks for which consumers, and more generally humans, can be replaced by AI.

Scientific workflows increasingly involve both HPC and machine-learning tasks, combining MPI-based simulations, training, and inference in a single execution. Launchers such as Slurm's srun constrain concurrency and throughput, making them unsuitable for dynamic and heterogeneous workloads. We present a performance study of RADICAL-Pilot (RP) integrated with Flux and Dragon, two complementary runtime systems that enable hierarchical resource management and high-throughput function execution. Using synthetic and production-scale workloads on Frontier, we characterize the task execution properties of RP across runtime configurations. RP+Flux sustains up to 930 tasks/s, and RP+Flux+Dragon exceeds 1,500 tasks/s with over 99.6% utilization. In contrast, srun peaks at 152 tasks/s and degrades with scale, with utilization below 50%. For IMPECCABLE.v2 drug discovery campaign, RP+Flux reduces makespan by 30-60% relative to srun/Slurm and increases throughput more than four times on up to 1,024. These results demonstrate hybrid runtime integration in RP as a scalable approach for hybrid AI-HPC workloads.

30 Sep 2025

We introduce a novel prior distribution for modelling the weights in mixture models based on a generalisation of the Dirichlet distribution, the Selberg Dirichlet distribution. This distribution contains a repulsive term, which naturally penalises values that lie close to each other on the simplex, thus encouraging few dominating clusters. The repulsive behaviour induces additional sparsity on the number of components. We refer to this construction as sparsity-inducing partition (SIP) prior. By highlighting differences with the conventional Dirichlet distribution, we present relevant properties of the SIP prior and demonstrate their implications across a variety of mixture models, including finite mixtures with a fixed or random number of components, as well as repulsive mixtures. We propose an efficient posterior sampling algorithm and validate our model through an extensive simulation study as well as an application to a biomedical dataset describing children's Body Mass Index and eating behaviour.

We present tntorch, a tensor learning framework that supports multiple

decompositions (including Candecomp/Parafac, Tucker, and Tensor Train) under a

unified interface. With our library, the user can learn and handle low-rank

tensors with automatic differentiation, seamless GPU support, and the

convenience of PyTorch's API. Besides decomposition algorithms, tntorch

implements differentiable tensor algebra, rank truncation, cross-approximation,

batch processing, comprehensive tensor arithmetics, and more.

09 Jan 2024

In this paper we investigate the use of staged tree models for discrete

longitudinal data. Staged trees are a type of probabilistic graphical model for

finite sample space processes. They are a natural fit for longitudinal data

because a temporal ordering is often implicitly assumed and standard methods

can be used for model selection and probability estimation. However, model

selection methods perform poorly when the sample size is small relative to the

size of the graph and model interpretation is tricky with larger graphs. This

is exacerbated by longitudinal data which is characterised by repeated

observations. To address these issues we propose two approaches: the

longitudinal staged tree with Markov assumptions which makes some initial

conditional independence assumptions represented by a directed acyclic graph

and marginal longitudinal staged trees which model certain margins of the data.

The computational analysis of poetry is limited by the scarcity of tools to

automatically analyze and scan poems. In a multilingual settings, the problem

is exacerbated as scansion and rhyme systems only exist for individual

languages, making comparative studies very challenging and time consuming. In

this work, we present \textsc{Alberti}, the first multilingual pre-trained

large language model for poetry. Through domain-specific pre-training (DSP), we

further trained multilingual BERT on a corpus of over 12 million verses from 12

languages. We evaluated its performance on two structural poetry tasks: Spanish

stanza type classification, and metrical pattern prediction for Spanish,

English and German. In both cases, \textsc{Alberti} outperforms multilingual

BERT and other transformers-based models of similar sizes, and even achieves

state-of-the-art results for German when compared to rule-based systems,

demonstrating the feasibility and effectiveness of DSP in the poetry domain.

Several approaches have been developed to capture the complexity and

nonlinearity of human growth. One widely used is the Super Imposition by

Translation and Rotation (SITAR) model, which has become popular in studies of

adolescent growth. SITAR is a shape-invariant mixed-effects model that

represents the shared growth pattern of a population using a natural cubic

spline mean curve while incorporating three subject-specific random effects --

timing, size, and growth intensity -- to account for variations among

individuals. In this work, we introduce a supervised deep learning framework

based on an autoencoder architecture that integrates a deep neural network

(neural network) with a B-spline model to estimate the SITAR model. In this

approach, the encoder estimates the random effects for each individual, while

the decoder performs a fitting based on B-splines similar to the classic SITAR

model. We refer to this method as the Deep-SITAR model. This innovative

approach enables the prediction of the random effects of new individuals

entering a population without requiring a full model re-estimation. As a

result, Deep-SITAR offers a powerful approach to predicting growth

trajectories, combining the flexibility and efficiency of deep learning with

the interpretability of traditional mixed-effects models.

This study explores the concept of creativity and artificial intelligence

(AI) and their recent integration. While AI has traditionally been perceived as

incapable of generating new ideas or creating art, the development of more

sophisticated AI models and the proliferation of human-computer interaction

tools have opened up new possibilities for AI in artistic creation. This study

investigates the various applications of AI in a creative context,

differentiating between the type of art, language, and algorithms used. It also

considers the philosophical implications of AI and creativity, questioning

whether consciousness can be researched in machines and AI's potential

interests and decision-making capabilities. Overall, we aim to stimulate a

reflection on AI's use and ethical implications in creative contexts.

There are no more papers matching your filters at the moment.