Indian Institute of Information Technology Allahabad

A comprehensive survey and benchmark evaluated 18 state-of-the-art activation functions across diverse network architectures and data modalities, including image, text, and speech. The study reveals that the optimal activation function depends on the specific network and data type, demonstrating that newer adaptive functions can outperform traditional ones in certain scenarios while highlighting trade-offs between accuracy and computational efficiency.

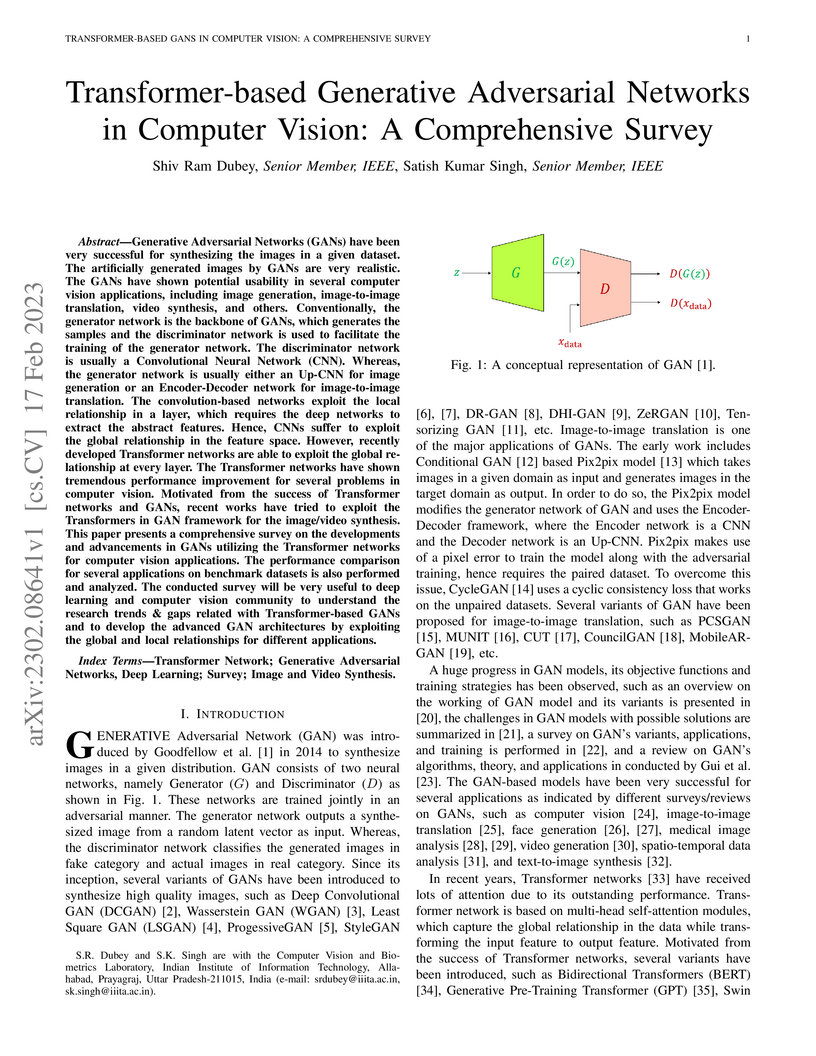

Generative Adversarial Networks (GANs) have been very successful for synthesizing the images in a given dataset. The artificially generated images by GANs are very realistic. The GANs have shown potential usability in several computer vision applications, including image generation, image-to-image translation, video synthesis, and others. Conventionally, the generator network is the backbone of GANs, which generates the samples and the discriminator network is used to facilitate the training of the generator network. The discriminator network is usually a Convolutional Neural Network (CNN). Whereas, the generator network is usually either an Up-CNN for image generation or an Encoder-Decoder network for image-to-image translation. The convolution-based networks exploit the local relationship in a layer, which requires the deep networks to extract the abstract features. Hence, CNNs suffer to exploit the global relationship in the feature space. However, recently developed Transformer networks are able to exploit the global relationship at every layer. The Transformer networks have shown tremendous performance improvement for several problems in computer vision. Motivated from the success of Transformer networks and GANs, recent works have tried to exploit the Transformers in GAN framework for the image/video synthesis. This paper presents a comprehensive survey on the developments and advancements in GANs utilizing the Transformer networks for computer vision applications. The performance comparison for several applications on benchmark datasets is also performed and analyzed. The conducted survey will be very useful to deep learning and computer vision community to understand the research trends \& gaps related with Transformer-based GANs and to develop the advanced GAN architectures by exploiting the global and local relationships for different applications.

Shailesh Kumar's PhD thesis from IIIT-Allahabad extends the understanding of gravitational memory effects and Bondi-Metzner-Sachs (BMS) asymptotic symmetries from asymptotic null infinity to the near-horizon regions of black holes. The work establishes a classical framework for "soft hair" and proposes a method to detect supertranslation "hair" through observable changes in black hole photon sphere dynamics.

Surveillance footage can catch a wide range of realistic anomalies. This

research suggests using a weakly supervised strategy to avoid annotating

anomalous segments in training videos, which is time consuming. In this

approach only video level labels are used to obtain frame level anomaly scores.

Weakly supervised video anomaly detection (WSVAD) suffers from the wrong

identification of abnormal and normal instances during the training process.

Therefore it is important to extract better quality features from the available

videos. WIth this motivation, the present paper uses better quality

transformer-based features named Videoswin Features followed by the attention

layer based on dilated convolution and self attention to capture long and short

range dependencies in temporal domain. This gives us a better understanding of

available videos. The proposed framework is validated on real-world dataset

i.e. ShanghaiTech Campus dataset which results in competitive performance than

current state-of-the-art methods. The model and the code are available at

this https URL

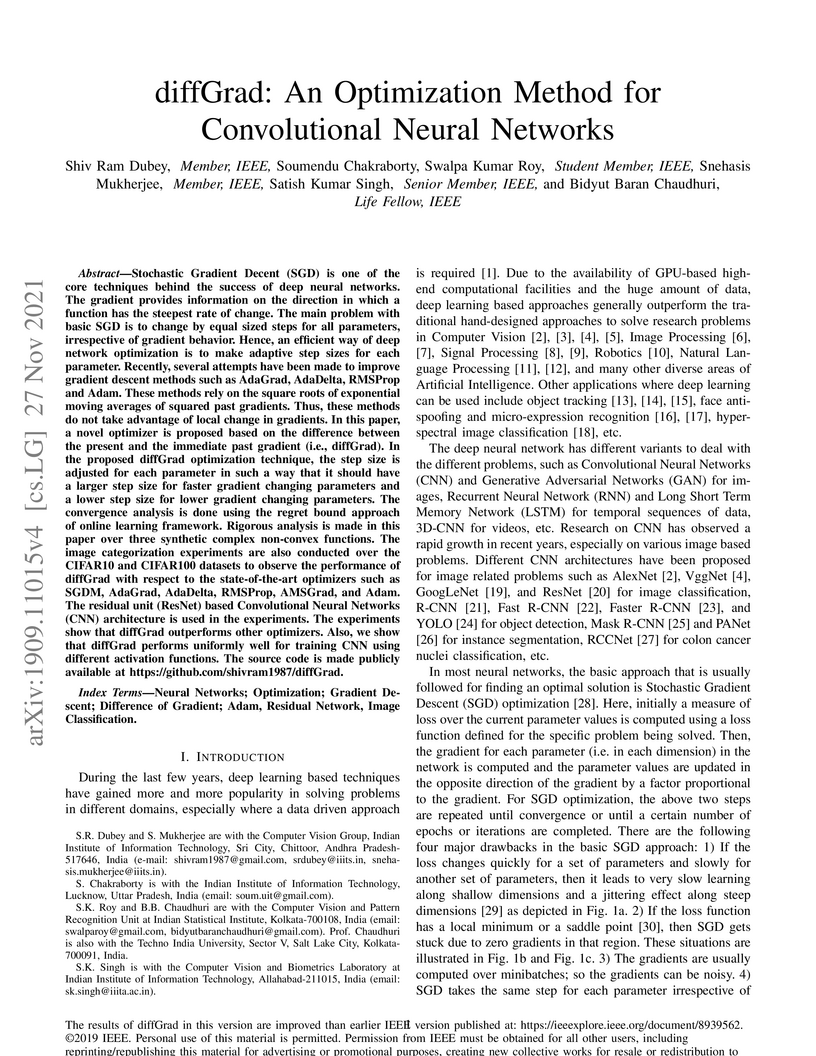

Stochastic Gradient Decent (SGD) is one of the core techniques behind the

success of deep neural networks. The gradient provides information on the

direction in which a function has the steepest rate of change. The main problem

with basic SGD is to change by equal sized steps for all parameters,

irrespective of gradient behavior. Hence, an efficient way of deep network

optimization is to make adaptive step sizes for each parameter. Recently,

several attempts have been made to improve gradient descent methods such as

AdaGrad, AdaDelta, RMSProp and Adam. These methods rely on the square roots of

exponential moving averages of squared past gradients. Thus, these methods do

not take advantage of local change in gradients. In this paper, a novel

optimizer is proposed based on the difference between the present and the

immediate past gradient (i.e., diffGrad). In the proposed diffGrad optimization

technique, the step size is adjusted for each parameter in such a way that it

should have a larger step size for faster gradient changing parameters and a

lower step size for lower gradient changing parameters. The convergence

analysis is done using the regret bound approach of online learning framework.

Rigorous analysis is made in this paper over three synthetic complex non-convex

functions. The image categorization experiments are also conducted over the

CIFAR10 and CIFAR100 datasets to observe the performance of diffGrad with

respect to the state-of-the-art optimizers such as SGDM, AdaGrad, AdaDelta,

RMSProp, AMSGrad, and Adam. The residual unit (ResNet) based Convolutional

Neural Networks (CNN) architecture is used in the experiments. The experiments

show that diffGrad outperforms other optimizers. Also, we show that diffGrad

performs uniformly well for training CNN using different activation functions.

The source code is made publicly available at

this https URL

This paper proposes an Information Bottleneck theory based filter pruning method that uses a statistical measure called Mutual Information (MI). The MI between filters and class labels, also called \textit{Relevance}, is computed using the filter's activation maps and the annotations. The filters having High Relevance (HRel) are considered to be more important. Consequently, the least important filters, which have lower Mutual Information with the class labels, are pruned. Unlike the existing MI based pruning methods, the proposed method determines the significance of the filters purely based on their corresponding activation map's relationship with the class labels. Architectures such as LeNet-5, VGG-16, ResNet-56\textcolor{myblue}{, ResNet-110 and ResNet-50 are utilized to demonstrate the efficacy of the proposed pruning method over MNIST, CIFAR-10 and ImageNet datasets. The proposed method shows the state-of-the-art pruning results for LeNet-5, VGG-16, ResNet-56, ResNet-110 and ResNet-50 architectures. In the experiments, we prune 97.98 \%, 84.85 \%, 76.89\%, 76.95\%, and 63.99\% of Floating Point Operation (FLOP)s from LeNet-5, VGG-16, ResNet-56, ResNet-110, and ResNet-50 respectively.} The proposed HRel pruning method outperforms recent state-of-the-art filter pruning methods. Even after pruning the filters from convolutional layers of LeNet-5 drastically (i.e. from 20, 50 to 2, 3, respectively), only a small accuracy drop of 0.52\% is observed. Notably, for VGG-16, 94.98\% parameters are reduced, only with a drop of 0.36\% in top-1 accuracy. \textcolor{myblue}{ResNet-50 has shown a 1.17\% drop in the top-5 accuracy after pruning 66.42\% of the FLOPs.} In addition to pruning, the Information Plane dynamics of Information Bottleneck theory is analyzed for various Convolutional Neural Network architectures with the effect of pruning.

Exponential growth in heterogeneous healthcare data arising from electronic health records (EHRs), medical imaging, wearable sensors, and biomedical research has accelerated the adoption of data lakes and centralized architectures capable of handling the Volume, Variety, and Velocity of Big Data for advanced analytics. However, without effective governance, these repositories risk devolving into disorganized data swamps. Ontology-driven semantic data management offers a robust solution by linking metadata to healthcare knowledge graphs, thereby enhancing semantic interoperability, improving data discoverability, and enabling expressive, domain-aware access. This review adopts a systematic research strategy, formulating key research questions and conducting a structured literature search across major academic databases, with selected studies analyzed and classified into six categories of ontology-driven healthcare analytics: (i) ontology-driven integration frameworks, (ii) semantic modeling for metadata enrichment, (iii) ontology-based data access (OBDA), (iv) basic semantic data management, (v) ontology-based reasoning for decision support, and (vi) semantic annotation for unstructured data. We further examine the integration of ontology technologies with Big Data frameworks such as Hadoop, Spark, Kafka, and so on, highlighting their combined potential to deliver scalable and intelligent healthcare analytics. For each category, recent techniques, representative case studies, technical and organizational challenges, and emerging trends such as artificial intelligence, machine learning, the Internet of Things (IoT), and real-time analytics are reviewed to guide the development of sustainable, interoperable, and high-performance healthcare data ecosystems.

Deep learning has brought the most profound contribution towards biomedical image segmentation to automate the process of delineation in medical imaging. To accomplish such task, the models are required to be trained using huge amount of annotated or labelled data that highlights the region of interest with a binary mask. However, efficient generation of the annotations for such huge data requires expert biomedical analysts and extensive manual effort. It is a tedious and expensive task, while also being vulnerable to human error. To address this problem, a self-supervised learning framework, BT-Unet is proposed that uses the Barlow Twins approach to pre-train the encoder of a U-Net model via redundancy reduction in an unsupervised manner to learn data representation. Later, complete network is fine-tuned to perform actual segmentation. The BT-Unet framework can be trained with a limited number of annotated samples while having high number of unannotated samples, which is mostly the case in real-world problems. This framework is validated over multiple U-Net models over diverse datasets by generating scenarios of a limited number of labelled samples using standard evaluation metrics. With exhaustive experiment trials, it is observed that the BT-Unet framework enhances the performance of the U-Net models with significant margin under such circumstances.

The exponential expansion of real-time data streams across multiple domains needs the development of effective event detection, correlation, and decision-making systems. However, classic Complex Event Processing (CEP) systems struggle with semantic heterogeneity, data interoperability, and knowledge driven event reasoning in Big Data environments. To solve these challenges, this research work presents an Ontology based Complex Event Processing (OCEP) framework, which utilizes semantic reasoning and Big Data Analytics to improve event driven decision support. The proposed OCEP architecture utilizes ontologies to support reasoning to event streams. It ensures compatibility with different data sources and lets you find the events based on the context. The Resource Description Framework (RDF) organizes event data, and SPARQL query enables rapid event reasoning and retrieval. The approach is implemented within the Hadoop environment, which consists of Hadoop Distributed File System (HDFS) for scalable storage and Apache Kafka for real-time CEP based event execution. We perform a real-time healthcare analysis and case study to validate the model, utilizing IoT sensor data for illness monitoring and emergency responses. This OCEP framework successfully integrates several event streams, leading to improved early disease detection and aiding doctors in decision-making. The result shows that OCEP predicts event detection with an accuracy of 85%. This research work utilizes an OCEP to solve the problems with semantic interoperability and correlation of complex events in Big Data analytics. The proposed architecture presents an intelligent, scalable and knowledge driven event processing framework for healthcare based decision support.

Personality computing and affective computing have gained recent interest in many research areas. The datasets for the task generally have multiple modalities like video, audio, language and bio-signals. In this paper, we propose a flexible model for the task which exploits all available data. The task involves complex relations and to avoid using a large model for video processing specifically, we propose the use of behaviour encoding which boosts performance with minimal change to the model. Cross-attention using transformers has become popular in recent times and is utilised for fusion of different modalities. Since long term relations may exist, breaking the input into chunks is not desirable, thus the proposed model processes the entire input together. Our experiments show the importance of each of the above contributions

This paper proposes an automatic subtitle generation and semantic video

summarization technique. The importance of automatic video summarization is

vast in the present era of big data. Video summarization helps in efficient

storage and also quick surfing of large collection of videos without losing the

important ones. The summarization of the videos is done with the help of

subtitles which is obtained using several text summarization algorithms. The

proposed technique generates the subtitle for videos with/without subtitles

using speech recognition and then applies NLP based Text summarization

algorithms on the subtitles. The performance of subtitle generation and video

summarization is boosted through Ensemble method with two approaches such as

Intersection method and Weight based learning method Experimental results

reported show the satisfactory performance of the proposed method

To meet next-generation IoT application demands, edge computing moves

processing power and storage closer to the network edge to minimise latency and

bandwidth utilisation. Edge computing is becoming popular as a result of these

benefits, but resource management is still challenging. Researchers are

utilising AI models to solve the challenge of resource management in edge

computing systems. However, existing simulation tools are only concerned with

typical resource management policies, not the adoption and implementation of AI

models for resource management, especially. Consequently, researchers continue

to face significant challenges, making it hard and time-consuming to use AI

models when designing novel resource management policies for edge computing

with existing simulation tools. To overcome these issues, we propose a

lightweight Python-based toolkit called EdgeAISim for the simulation and

modelling of AI models for designing resource management policies in edge

computing environments. In EdgeAISim, we extended the basic components of the

EdgeSimPy framework and developed new AI-based simulation models for task

scheduling, energy management, service migration, network flow scheduling, and

mobility support for edge computing environments. In EdgeAISim, we have

utilised advanced AI models such as Multi-Armed Bandit with Upper Confidence

Bound, Deep Q-Networks, Deep Q-Networks with Graphical Neural Network, and

ActorCritic Network to optimize power usage while efficiently managing task

migration within the edge computing environment. The performance of these

proposed models of EdgeAISim is compared with the baseline, which uses a

worst-fit algorithm-based resource management policy in different settings.

Experimental results indicate that EdgeAISim exhibits a substantial reduction

in power consumption, highlighting the compelling success of power optimization

strategies in EdgeAISim.

A systematic review categorizes methods addressing catastrophic forgetting in deep neural networks, providing a structured comparison based on their evaluation mechanisms and algorithmic behaviors. The study finds replay-based approaches consistently demonstrate robust performance across various continual learning scenarios, outperforming regularization methods in challenging settings.

In this paper, we present electromyography analysis of human activity -

database 1 (EMAHA-DB1), a novel dataset of multi-channel surface

electromyography (sEMG) signals to evaluate the activities of daily living

(ADL). The dataset is acquired from 25 able-bodied subjects while performing 22

activities categorised according to functional arm activity behavioral system

(FAABOS) (3 - full hand gestures, 6 - open/close office draw, 8 - grasping and

holding of small office objects, 2 - flexion and extension of finger movements,

2 - writing and 1 - rest). The sEMG data is measured by a set of five Noraxon

Ultium wireless sEMG sensors with Ag/Agcl electrodes placed on a human hand.

The dataset is analyzed for hand activity recognition classification

performance. The classification is performed using four state-ofthe-art machine

learning classifiers, including Random Forest (RF), Fine K-Nearest Neighbour

(KNN), Ensemble KNN (sKNN) and Support Vector Machine (SVM) with seven

combinations of time domain and frequency domain feature sets. The

state-of-theart classification accuracy on five FAABOS categories is 83:21% by

using the SVM classifier with the third order polynomial kernel using energy

feature and auto regressive feature set ensemble. The classification accuracy

on 22 class hand activities is 75:39% by the same SVM classifier with the log

moments in frequency domain (LMF) feature, modified LMF, time domain

statistical (TDS) feature, spectral band powers (SBP), channel cross

correlation and local binary patterns (LBP) set ensemble. The analysis depicts

the technical challenges addressed by the dataset. The developed dataset can be

used as a benchmark for various classification methods as well as for sEMG

signal analysis corresponding to ADL and for the development of prosthetics and

other wearable robotics.

It is essential to classify brain tumors from magnetic resonance imaging (MRI) accurately for better and timely treatment of the patients. In this paper, we propose a hybrid model, using VGG along with Nonlinear-SVM (Soft and Hard) to classify the brain tumors: glioma and pituitary and tumorous and non-tumorous. The VGG-SVM model is trained for two different datasets of two classes; thus, we perform binary classification. The VGG models are trained via the PyTorch python library to obtain the highest testing accuracy of tumor classification. The method is threefold, in the first step, we normalize and resize the images, and the second step consists of feature extraction through variants of the VGG model. The third step classified brain tumors using non-linear SVM (soft and hard). We have obtained 98.18% accuracy for the first dataset and 99.78% for the second dataset using VGG19. The classification accuracies for non-linear SVM are 95.50% and 97.98% with linear and rbf kernel and 97.95% for soft SVM with RBF kernel with D1, and 96.75% and 98.60% with linear and RBF kernel and 98.38% for soft SVM with RBF kernel with D2. Results indicate that the hybrid VGG-SVM model, especially VGG 19 with SVM, is able to outperform existing techniques and achieve high accuracy.

05 Oct 2025

Timely detection of critical health conditions remains a major challenge in public health analytics, especially in Big Data environments characterized by high volume, rapid velocity, and diverse variety of clinical data. This study presents an ontology-enabled real-time analytics framework that integrates Complex Event Processing (CEP) and Large Language Models (LLMs) to enable intelligent health event detection and semantic reasoning over heterogeneous, high-velocity health data streams. The architecture leverages the Basic Formal Ontology (BFO) and Semantic Web Rule Language (SWRL) to model diagnostic rules and domain knowledge. Patient data is ingested and processed using Apache Kafka and Spark Streaming, where CEP engines detect clinically significant event patterns. LLMs support adaptive reasoning, event interpretation, and ontology refinement. Clinical information is semantically structured as Resource Description Framework (RDF) triples in Graph DB, enabling SPARQL-based querying and knowledge-driven decision support. The framework is evaluated using a dataset of 1,000 Tuberculosis (TB) patients as a use case, demonstrating low-latency event detection, scalable reasoning, and high model performance (in terms of precision, recall, and F1-score). These results validate the system's potential for generalizable, real-time health analytics in complex Big Data scenarios.

18 Jul 2025

This study numerically solves inhomogeneous Helmholtz equations modeling acoustic wave propagation in homogeneous and lossless, absorbing and dispersive, inhomogeneous and nonlinear media. The traditional Born series (TBS) method has been employed to solve such equations. The full wave solution in this methodology is expressed as an infinite sum of the solution of the unperturbed equation weighted by increasing power of the potential. Simulated pressure field patterns for a linear array of acoustic sources (a line source) estimated by the TBS procedure exhibit excellent agreement with that of a standard time domain approach (k-Wave toolbox). The TBS scheme though iterative but is a very fast method. For example, GPU enabled CUDA C code implementing the TBS procedure takes 5 s to calculate the pressure field for the homogeneous and lossless medium whereas nearly 500 s is taken by the later module. The execution time for the corresponding CPU code is about 20 s. The findings of this study demonstrate the effectiveness of the TBS method for solving inhomogeneous Helmholtz equation, while the GPU-based implementation significantly reduces the computation time. This method can be explored in practice for calculation of pressure fields generated by real transducers designed for diverse applications.

In recent years, the challenge of 3D shape analysis within point cloud data has gathered significant attention in computer vision. Addressing the complexities of effective 3D information representation and meaningful feature extraction for classification tasks remains crucial. This paper presents Point-GR, a novel deep learning architecture designed explicitly to transform unordered raw point clouds into higher dimensions while preserving local geometric features. It introduces residual-based learning within the network to mitigate the point permutation issues in point cloud data. The proposed Point-GR network significantly reduced the number of network parameters in Classification and Part-Segmentation compared to baseline graph-based networks. Notably, the Point-GR model achieves a state-of-the-art scene segmentation mean IoU of 73.47% on the S3DIS benchmark dataset, showcasing its effectiveness. Furthermore, the model shows competitive results in Classification and Part-Segmentation tasks.

Brain tumor is the most common and deadliest disease that can be found in all age groups. Generally, MRI modality is adopted for identifying and diagnosing tumors by the radiologists. The correct identification of tumor regions and its type can aid to diagnose tumors with the followup treatment plans. However, for any radiologist analysing such scans is a complex and time-consuming task. Motivated by the deep learning based computer-aided-diagnosis systems, this paper proposes multi-task attention guided encoder-decoder network (MAG-Net) to classify and segment the brain tumor regions using MRI images. The MAG-Net is trained and evaluated on the Figshare dataset that includes coronal, axial, and sagittal views with 3 types of tumors meningioma, glioma, and pituitary tumor. With exhaustive experimental trials the model achieved promising results as compared to existing state-of-the-art models, while having least number of training parameters among other state-of-the-art models.

Deep learning and advancements in contactless sensors have significantly enhanced our ability to understand complex human activities in healthcare settings. In particular, deep learning models utilizing computer vision have been developed to enable detailed analysis of human gesture recognition, especially repetitive gestures which are commonly observed behaviors in children with autism. This research work aims to identify repetitive behaviors indicative of autism by analyzing videos captured in natural settings as children engage in daily activities. The focus is on accurately categorizing real-time repetitive gestures such as spinning, head banging, and arm flapping. To this end, we utilize the publicly accessible Self-Stimulatory Behavior Dataset (SSBD) to classify these stereotypical movements. A key component of the proposed methodology is the use of \textbf{VideoMAE}, a model designed to improve both spatial and temporal analysis of video data through a masking and reconstruction mechanism. This model significantly outperformed traditional methods, achieving an accuracy of 97.7\%, a 14.7\% improvement over the previous state-of-the-art.

There are no more papers matching your filters at the moment.