Institute of Computer ScienceThe Czech Academy of Sciences

05 Dec 2024

In this paper, we consider an analysis of temporal properties of hybrid systems based on simulations, so-called falsification of requirements. We present a novel exploration-based algorithm for falsification of black-box models of hybrid systems based on the Voronoi bias in the output space. This approach is inspired by techniques used originally in motion planning: rapidly exploring random trees. Instead of commonly employed exploration that is based on coverage of inputs, the proposed algorithm aims to cover all possible outputs directly. Compared to other state-of-the-art falsification tools, it also does not require robustness or other guidance metrics tied to a specific behavior that is being falsified. This allows our algorithm to falsify specifications for which robustness is not conclusive enough to guide the falsification procedure.

We provide an upper bound on the number of neurons required in a shallow

neural network to approximate a continuous function on a compact set with a

given accuracy. This method, inspired by a specific proof of the

Stone-Weierstrass theorem, is constructive and more general than previous

bounds of this character, as it applies to any continuous function on any

compact set.

We propose a novel machine learning approach for forecasting the distribution of stock returns using a rich set of firm-level and market predictors. Our method combines a two-stage quantile neural network with spline interpolation to construct smooth, flexible cumulative distribution functions without relying on restrictive parametric assumptions. This allows accurate modelling of non-Gaussian features such as fat tails and asymmetries. Furthermore, we show how to derive other statistics from the forecasted return distribution such as mean, variance, skewness, and kurtosis. The derived mean and variance forecasts offer significantly improved out-of-sample performance compared to standard models. We demonstrate the robustness of the method in US and international markets.

The world is abundant with diverse materials, each possessing unique surface appearances that play a crucial role in our daily perception and understanding of their properties. Despite advancements in technology enabling the capture and realistic reproduction of material appearances for visualization and quality control, the interoperability of material property information across various measurement representations and software platforms remains a complex challenge. A key to overcoming this challenge lies in the automatic identification of materials' perceptual features, enabling intuitive differentiation of properties stored in disparate material data representations. We reasoned that for many practical purposes, a compact representation of the perceptual appearance is more useful than an exhaustive physical this http URL paper introduces a novel approach to material identification by encoding perceptual features obtained from dynamic visual stimuli. We conducted a psychophysical experiment to select and validate 16 particularly significant perceptual attributes obtained from videos of 347 materials. We then gathered attribute ratings from over twenty participants for each material, creating a 'material fingerprint' that encodes the unique perceptual properties of each material. Finally, we trained a multi-layer perceptron model to predict the relationship between statistical and deep learning image features and their corresponding perceptual properties. We demonstrate the model's performance in material retrieval and filtering according to individual attributes. This model represents a significant step towards simplifying the sharing and understanding of material properties in diverse digital environments regardless of their digital representation, enhancing both the accuracy and efficiency of material identification.

06 Oct 2025

We propose a multi-agent epistemic logic capturing reasoning with degrees of plausibility that agents can assign to a given statement, with 1 interpreted as "entirely plausible for the agent" and 0 as "completely implausible" (i.e., the agent knows that the statement is false). We formalise such reasoning in an expansion of Gödel fuzzy logic with an involutive negation and multiple S5-like modalities. As already Gödel single-modal logics are known to lack the finite model property w.r.t. their standard [0,1]-valued Kripke semantics, we provide an alternative semantics that allows for the finite model property. For this semantics, we construct a strongly terminating tableaux calculus that allows us to produce finite counter-models of non-valid formulas. We then use the tableaux to show that the validity problem in our logic is PSpace-complete when there are two or more agents, and coNP-complete for the single-agent case.

Longhorn is an open-source, cloud-native software-defined storage (SDS)

engine that delivers distributed block storage management in Kubernetes

environments. This paper explores performance optimization techniques for

Longhorn's core component, the Longhorn engine, to overcome limitations in

leveraging high-performance server hardware, such as solid-state NVMe disks and

low-latency, high-bandwidth networking. By integrating ublk at the frontend, to

expose the virtual block device to the operating system, restructuring the

communication protocol, and employing DBS, our simplified, direct-to-disk

storage scheme, the system achieves significant performance improvements with

respect to the default I/O path. Our results contribute to enhancing Longhorn's

applicability in both cloud and on-premises setups, as well as provide insights

for the broader SDS community.

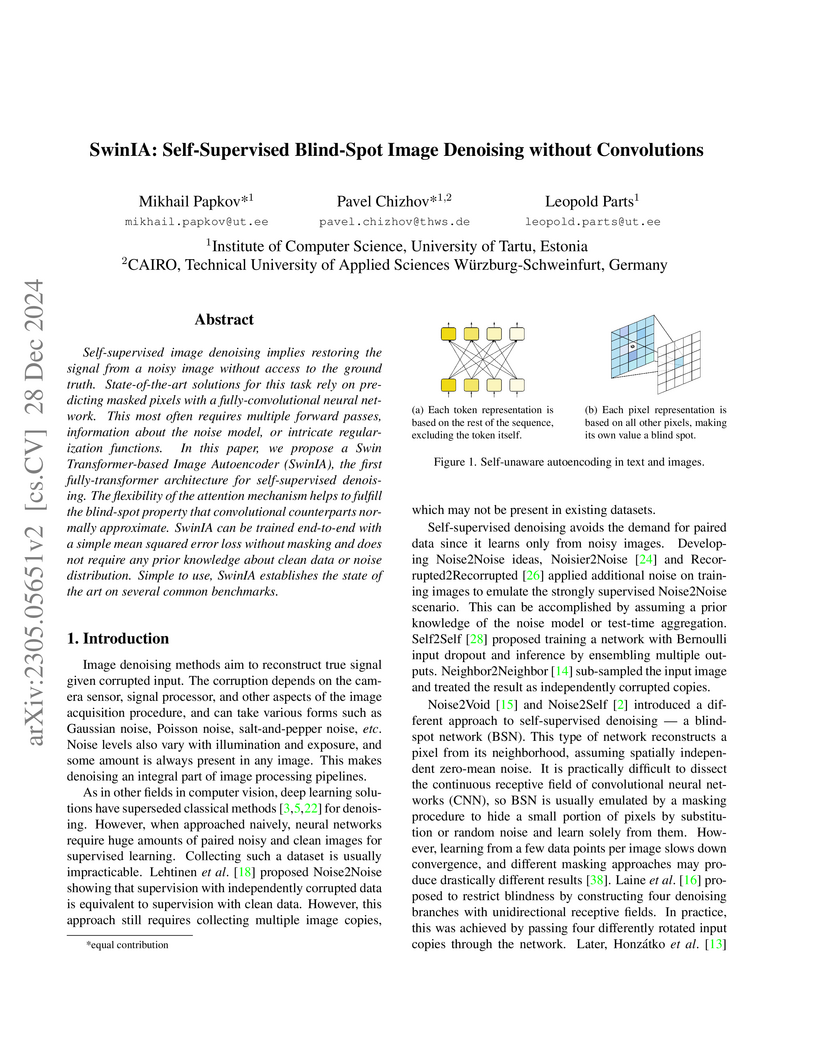

Self-supervised image denoising implies restoring the signal from a noisy image without access to the ground truth. State-of-the-art solutions for this task rely on predicting masked pixels with a fully-convolutional neural network. This most often requires multiple forward passes, information about the noise model, or intricate regularization functions. In this paper, we propose a Swin Transformer-based Image Autoencoder (SwinIA), the first fully-transformer architecture for self-supervised denoising. The flexibility of the attention mechanism helps to fulfill the blind-spot property that convolutional counterparts normally approximate. SwinIA can be trained end-to-end with a simple mean squared error loss without masking and does not require any prior knowledge about clean data or noise distribution. Simple to use, SwinIA establishes the state of the art on several common benchmarks.

University of Cincinnati California Institute of Technology

California Institute of Technology Harvard UniversityIndiana UniversityIllinois Institute of Technology

Harvard UniversityIndiana UniversityIllinois Institute of Technology Argonne National LaboratoryColorado State UniversityFermi National Accelerator LaboratoryUniversity of HoustonUniversity of DelhiUniversidade Federal de GoiásCzech Technical University in PragueIIT HyderabadInstitute of Science, Banaras Hindu UniversityUniversity of HyderabadCochin University of Science and TechnologyIIT GuwahatiThe Czech Academy of SciencesUniversity of DallasUniversidad del Atlantico

Argonne National LaboratoryColorado State UniversityFermi National Accelerator LaboratoryUniversity of HoustonUniversity of DelhiUniversidade Federal de GoiásCzech Technical University in PragueIIT HyderabadInstitute of Science, Banaras Hindu UniversityUniversity of HyderabadCochin University of Science and TechnologyIIT GuwahatiThe Czech Academy of SciencesUniversity of DallasUniversidad del Atlantico

California Institute of Technology

California Institute of Technology Harvard UniversityIndiana UniversityIllinois Institute of Technology

Harvard UniversityIndiana UniversityIllinois Institute of Technology Argonne National LaboratoryColorado State UniversityFermi National Accelerator LaboratoryUniversity of HoustonUniversity of DelhiUniversidade Federal de GoiásCzech Technical University in PragueIIT HyderabadInstitute of Science, Banaras Hindu UniversityUniversity of HyderabadCochin University of Science and TechnologyIIT GuwahatiThe Czech Academy of SciencesUniversity of DallasUniversidad del Atlantico

Argonne National LaboratoryColorado State UniversityFermi National Accelerator LaboratoryUniversity of HoustonUniversity of DelhiUniversidade Federal de GoiásCzech Technical University in PragueIIT HyderabadInstitute of Science, Banaras Hindu UniversityUniversity of HyderabadCochin University of Science and TechnologyIIT GuwahatiThe Czech Academy of SciencesUniversity of DallasUniversidad del AtlanticoWe report a search for a magnetic monopole component of the cosmic-ray flux

in a 95-day exposure of the NOvA experiment's Far Detector, a 14 kt segmented

liquid scintillator detector designed primarily to observe GeV-scale electron

neutrinos. No events consistent with monopoles were observed, setting an upper

limit on the flux of 2×10−14cm−2s−1sr−1 at 90%

C.L. for monopole speed 6\times 10^{-4} < \beta < 5\times 10^{-3} and mass

greater than 5×108 GeV. Because of NOvA's small overburden of 3

meters-water equivalent, this constraint covers a previously unexplored

low-mass region.

We study a geometric facility location problem under imprecision. Given n unit intervals in the real line, each with one of k colors, the goal is to place one point in each interval such that the resulting \emph{minimum color-spanning interval} is as large as possible. A minimum color-spanning interval is an interval of minimum size that contains at least one point from a given interval of each color. We prove that if the input intervals are pairwise disjoint, the problem can be solved in O(n) time, even for intervals of arbitrary length. For overlapping intervals, the problem becomes much more difficult. Nevertheless, we show that it can be solved in O(nlog2n) time when k=2, by exploiting several structural properties of candidate solutions, combined with a number of advanced algorithmic techniques. Interestingly, this shows a sharp contrast with the 2-dimensional version of the problem, recently shown to be NP-hard.

06 Aug 2025

University of Cincinnati California Institute of TechnologyCharles UniversityIndiana UniversityIllinois Institute of Technology

California Institute of TechnologyCharles UniversityIndiana UniversityIllinois Institute of Technology Argonne National LaboratoryFlorida State UniversityColorado State UniversityFermi National Accelerator LaboratoryUniversity of HoustonUniversity of DelhiCzech Technical University in PragueErciyes UniversityIIT HyderabadBanaras Hindu UniversityUniversity of HyderabadCochin University of Science and TechnologyIIT GuwahatiThe Czech Academy of SciencesUniversidad del AtlanticoUniversidade Federal de GoiasBandirma Onyedi Eylul University

Argonne National LaboratoryFlorida State UniversityColorado State UniversityFermi National Accelerator LaboratoryUniversity of HoustonUniversity of DelhiCzech Technical University in PragueErciyes UniversityIIT HyderabadBanaras Hindu UniversityUniversity of HyderabadCochin University of Science and TechnologyIIT GuwahatiThe Czech Academy of SciencesUniversidad del AtlanticoUniversidade Federal de GoiasBandirma Onyedi Eylul University

California Institute of TechnologyCharles UniversityIndiana UniversityIllinois Institute of Technology

California Institute of TechnologyCharles UniversityIndiana UniversityIllinois Institute of Technology Argonne National LaboratoryFlorida State UniversityColorado State UniversityFermi National Accelerator LaboratoryUniversity of HoustonUniversity of DelhiCzech Technical University in PragueErciyes UniversityIIT HyderabadBanaras Hindu UniversityUniversity of HyderabadCochin University of Science and TechnologyIIT GuwahatiThe Czech Academy of SciencesUniversidad del AtlanticoUniversidade Federal de GoiasBandirma Onyedi Eylul University

Argonne National LaboratoryFlorida State UniversityColorado State UniversityFermi National Accelerator LaboratoryUniversity of HoustonUniversity of DelhiCzech Technical University in PragueErciyes UniversityIIT HyderabadBanaras Hindu UniversityUniversity of HyderabadCochin University of Science and TechnologyIIT GuwahatiThe Czech Academy of SciencesUniversidad del AtlanticoUniversidade Federal de GoiasBandirma Onyedi Eylul UniversityThe flux of cosmic ray muons at the Earth's surface exhibits seasonal variations due to changes in the temperature of the atmosphere affecting the production and decay of mesons in the upper atmosphere. Using data collected by the NOvA Near Detector during 2018--2022, we studied the seasonal pattern in the multiple-muon event rate. The data confirm an anticorrelation between the multiple-muon event rate and effective atmospheric temperature, consistent across all the years of data. Previous analyses from MINOS and NOvA saw a similar anticorrelation but did not include an explanation. We find that this anticorrelation is driven by altitude--geometry effects as the average muon production height changes with the season. This has been checked with a CORSIKA cosmic ray simulation package by varying atmospheric parameters, and provides an explanation to a longstanding discrepancy between the seasonal phases of single and multiple-muon events.

11 Oct 2024

The metric dimension of a graph measures how uniquely vertices may be

identified using a set of landmark vertices. This concept is frequently used in

the study of network architecture, location-based problems and communication.

Given a graph G, the metric dimension, denoted as dim(G), is the minimum

size of a resolving set, a subset of vertices such that for every pair of

vertices in G, there exists a vertex in the resolving set whose shortest path

distance to the two vertices is different. This subset of vertices helps to

uniquely determine the location of other vertices in the graph. A basis is a

resolving set with a least cardinality. Finding a basis is a problem with

practical applications in network design, where it is important to efficiently

locate and identify nodes based on a limited set of reference points. The

Cartesian product of Pm and Pn is the grid network in network science. In

this paper, we investigate two novel types of grids in network science: the

Villarceau grid Type I and Type II. For each of these grid types, we find the

precise metric dimension.

16 Sep 2025

We study the labelled growth rate of an ω-categorical structure \fa, i.e., the number of orbits of Aut(\fa) on n-tuples of distinct elements, and show that the model-theoretic property of monadic stability yields a gap in the spectrum of allowable labelled growth rates. As a further application, we obtain gap in the spectrum of allowable labelled growth rates in hereditary graph classes, with no a priori assumption of ω-categoricity. We also establish a way to translate results about labelled growth rates of ω-categorical structures into combinatorial statements about sets with weak finiteness properties in the absence of the axiom of choice, and derive several results from this translation.

Maximum entropy estimation is of broad interest for inferring properties of

systems across many different disciplines. In this work, we significantly

extend a technique we previously introduced for estimating the maximum entropy

of a set of random discrete variables when conditioning on bivariate mutual

informations and univariate entropies. Specifically, we show how to apply the

concept to continuous random variables and vastly expand the types of

information-theoretic quantities one can condition on. This allows us to

establish a number of significant advantages of our approach over existing

ones. Not only does our method perform favorably in the undersampled regime,

where existing methods fail, but it also can be dramatically less

computationally expensive as the cardinality of the variables increases. In

addition, we propose a nonparametric formulation of connected informations and

give an illustrative example showing how this agrees with the existing

parametric formulation in cases of interest. We further demonstrate the

applicability and advantages of our method to real world systems for the case

of resting-state human brain networks. Finally, we show how our method can be

used to estimate the structural network connectivity between interacting units

from observed activity and establish the advantages over other approaches for

the case of phase oscillator networks as a generic example.

We study the role of co-jumps in the interest rate futures markets. To

disentangle continuous part of quadratic covariation from co-jumps, we localize

the co-jumps precisely through wavelet coefficients and identify statistically

significant ones. Using high frequency data about U.S. and European yield

curves we quantify the effect of co-jumps on their correlation structure.

Empirical findings reveal much stronger co-jumping behavior of the U.S. yield

curves in comparison to the European one. Further, we connect co-jumping

behavior to the monetary policy announcements, and study effect of 103 FOMC and

119 ECB announcements on the identified co-jumps during the period from January

2007 to December 2017.

We show how bad and good volatility propagate through forex markets, i.e., we

provide evidence for asymmetric volatility connectedness on forex markets.

Using high-frequency, intra-day data of the most actively traded currencies

over 2007 - 2015 we document the dominating asymmetries in spillovers that are

due to bad rather than good volatility. We also show that negative spillovers

are chiefly tied to the dragging sovereign debt crisis in Europe while positive

spillovers are correlated with the subprime crisis, different monetary policies

among key world central banks, and developments on commodities markets. It

seems that a combination of monetary and real-economy events is behind the net

positive asymmetries in volatility spillovers, while fiscal factors are linked

with net negative spillovers.

13 Jan 2022

For graphs G and H, we write $G \overset{\mathrm{rb}}{\longrightarrow} H

ifanyproperedge−coloringofGcontainsarainbowcopyofH$, i.e., a

copy where no color appears more than once. Kohayakawa, Konstadinidis and the

last author proved that the threshold for $G(n,p)

\overset{\mathrm{rb}}{\longrightarrow}Hisatmostn^{-1/m_2(H)}$. Previous

results have matched the lower bound for this anti-Ramsey threshold for cycles

and complete graphs with at least 5 vertices. Kohayakawa, Konstadinidis and the

last author also presented an infinite family of graphs H for which the

anti-Ramsey threshold is asymptotically smaller than n−1/m2(H). In this

paper, we devise a framework that provides a richer and more complex family of

such graphs that includes all the previously known examples.

We describe a compilation language of backdoor decomposable monotone circuits

(BDMCs) which generalizes several concepts appearing in the literature, e.g.

DNNFs and backdoor trees. A C-BDMC sentence is a monotone circuit

which satisfies decomposability property (such as in DNNF) in which the inputs

(or leaves) are associated with CNF encodings from a given base class

C. We consider the class of propagation complete (PC) encodings as

a base class and we show that PC-BDMCs are polynomially equivalent to PC

encodings. Additionally, we use this to determine the properties of PC-BDMCs

and PC encodings with respect to the knowledge compilation map including the

list of efficient operations on the languages.

Many animals emit vocal sounds which, independently from the sounds'

function, embed some individually-distinctive signature. Thus the automatic

recognition of individuals by sound is a potentially powerful tool for zoology

and ecology research and practical monitoring. Here we present a general

automatic identification method, that can work across multiple animal species

with various levels of complexity in their communication systems. We further

introduce new analysis techniques based on dataset manipulations that can

evaluate the robustness and generality of a classifier. By using these

techniques we confirmed the presence of experimental confounds in situations

resembling those from past studies. We introduce data manipulations that can

reduce the impact of these confounds, compatible with any classifier. We

suggest that assessment of confounds should become a standard part of future

studies to ensure they do not report over-optimistic results. We provide

annotated recordings used for analyses along with this study and we call for

dataset sharing to be a common practice to enhance development of methods and

comparisons of results.

18 Nov 2023

This paper characterises dynamic linkages arising from shocks with

heterogeneous degrees of persistence. Using frequency domain techniques, we

introduce measures that identify smoothly varying links of a transitory and

persistent nature. Our approach allows us to test for statistical differences

in such dynamic links. We document substantial differences in transitory and

persistent linkages among US financial industry volatilities, argue that they

track heterogeneously persistent sources of systemic risk, and thus may serve

as a useful tool for market participants.

Bitcoin being a safe haven asset is one of the traditional stories in the

cryptocurrency community. However, during its existence and relevant presence,

i.e. approximately since 2013, there has been no severe situation on the

financial markets globally to prove or disprove this story until the COVID-19

pandemics. We study the quantile correlations of Bitcoin and two benchmarks --

S\&P500 and VIX -- and we make comparison with gold as the traditional safe

haven asset. The Bitcoin safe haven story is shown and discussed to be

unsubstantiated and far-fetched, while gold comes out as a clear winner in this

contest.

There are no more papers matching your filters at the moment.