Ask or search anything...

Researchers from Flashbots and the Technical University of Munich conducted the first large-scale empirical study of executed cross-chain arbitrage, quantifying its 868.64millionannualvolumeand8.65 million net profit across nine blockchains. Their analysis revealed a highly concentrated market where inventory-based strategies dominate due to bridging latency, raising concerns about vertical integration and systemic risks in decentralized finance.

View blogA systematic survey by researchers from Tianjin University and the University of Lisbon presents a comprehensive overview of multilingual large language models, categorizing research into six domains while detailing architectures, data strategies, tuning methodologies, and evaluation. The work synthesizes current progress and highlights critical challenges in achieving equitable performance across languages and ensuring responsible AI.

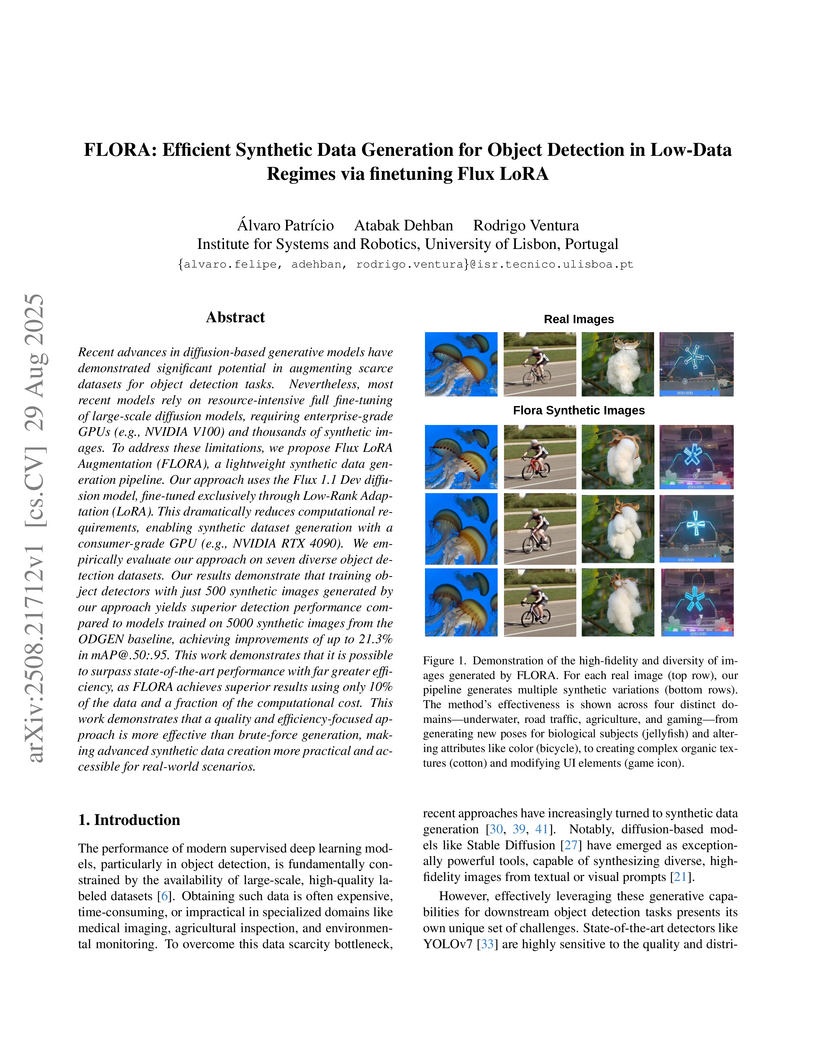

View blogFLORA introduces an efficient, two-stage pipeline for generating synthetic data for object detection in low-data environments, leveraging Flux LoRA for object-centric inpainting. The method achieves superior object detection performance and image fidelity across multiple benchmarks using significantly less synthetic data and computational resources than prior state-of-the-art approaches.

View blogLMCAP is a few-shot, retrieval-augmented language model prompting approach for multilingual image captioning that operates without task-specific training. It achieves competitive performance against fully supervised models on human-labeled multilingual datasets, notably outperforming them on Chinese captions.

View blogA study evaluated 16 Large Language Models (LLMs) for generating correct Python code specific to LoRaWAN engineering tasks, such as optimal UAV placement and received power calculation. It found that lightweight, locally executable models like Phi-4 and LLaMA-3.3 generated highly accurate code, demonstrating performance comparable to larger cloud-based models like DeepSeek-V3.

View blog

Monash University

Monash University Imperial College London

Imperial College London

University of Copenhagen

University of Copenhagen

Carnegie Mellon University

Carnegie Mellon University

University of Toronto

University of Toronto University of Amsterdam

University of Amsterdam

Australian National University

Australian National University