Kasetsart University

18 Sep 2025

This empirical study analyzed 253 `Claude.md` files from open-source repositories to characterize the structural organization and content patterns of agentic coding manifests. It found a preference for shallow hierarchical structures and a predominance of operational and technical instructions, alongside contextual information for AI agents, establishing foundational insights for effective AI-assisted software development.

We present laboratory results from supercritical, magnetized collisionless shock experiments ($M_A \lesssim 10$, $\beta \sim 1$). We report the first observation of fully developed shocks (compression ratio $R = 4$ and a downstream region decoupled from the piston) after seven upstream ion gyration periods. A foot ahead of the shock exhibits super-adiabatic electron and ion heating. Upstream of the shock we measure an electron temperature $T_e = 115$ eV and an ion temperature $T_i = 15$ eV. Downstream we measure $T_e = 390$ eV and infer $T_i = 340$ eV, consistent with both the ion-acoustic-wave spectral broadening seen in Thomson scattering and the Rankine-Hugoniot conditions. The downstream electron temperature exceeds the value expected from adiabatic compression plus collisional electron-ion heating by 30 percent, implying significant collisionless anomalous electron heating. Furthermore, downstream electrons and ions are in equipartition, with a near-unity electron-ion temperature ratio $T_e/T_i = 1.2$.

Close to Earth, the solar wind is usually super-Alfvénic, i.e., the solar wind speed is much larger than the Alfvén speed. In the lower coronal regions, however, the solar wind is mostly sub-Alfvénic. As the Parker Solar Probe (PSP) crosses the boundary between sub- and super-Alfvénic flow, Bandyopadhyay et al. (2022) characterized turbulence in the sub-Alfvénic solar wind using initial data from encounters 8 and 9. In this study, we re-examine turbulence properties, including turbulence amplitude, anisotropy of the magnetic field variance, intermittency, and switchback strength, and extend the analysis to PSP data from encounters 8-19. The later orbits probe lower altitudes and encounter sub-Alfvénic conditions more frequently. This provides greater statistical coverage for contrasting sub- and super-Alfvénic solar wind. We also isolate intervals where the solar wind speed is approximately equal to the Alfvén speed, which lets us examine the transition in more detail. We show that the amplitude of the normalized magnetic field fluctuations is smaller for the sub-Alfvénic samples. Solar wind turbulence is generally anisotropic, but the sub-Alfvénic samples are more anisotropic than the super-Alfvénic samples. Further, the sub- and super-Alfvénic samples show little distinction in intermittency strength. Finally, consistent with prior results, we find no evidence of polarity-reversing switchbacks (deflections > 90°) in the sub-Alfvénic solar wind.
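The split into sub- and super-Alfvénic samples depends on the Alfvén Mach number $M_A = V_{sw}/V_A$, with $V_A = B/\sqrt{\mu_0 \rho}$. The sketch below is a minimal illustration under two assumptions. First, the mass density is carried by protons alone. Second, the "approximately equal" intervals are a hypothetical ±10% band around $M_A = 1$, because the abstract does not state the band the authors used. The inputs are made-up values, not PSP measurements.

```python
import math

MU0 = 4e-7 * math.pi      # vacuum permeability [T m / A]
M_P = 1.67262192e-27      # proton mass [kg]


def alfven_speed(b_nT: float, n_cm3: float) -> float:
    """Alfvén speed in m/s, assuming the mass density is protons only."""
    b = b_nT * 1e-9                 # nT -> T
    rho = n_cm3 * 1e6 * M_P         # cm^-3 -> m^-3, times proton mass
    return b / math.sqrt(MU0 * rho)


def classify_interval(v_sw_kms: float, b_nT: float, n_cm3: float, band: float = 0.1):
    """Return (M_A, label); `band` is a hypothetical tolerance around M_A = 1."""
    m_a = v_sw_kms * 1e3 / alfven_speed(b_nT, n_cm3)
    if abs(m_a - 1.0) <= band:
        return m_a, "near-Alfvénic"
    return m_a, "sub-Alfvénic" if m_a < 1.0 else "super-Alfvénic"


# Illustrative inputs only (not PSP data):
print(classify_interval(400.0, 10.0, 5.0))        # typical near-Earth values
print(classify_interval(300.0, 1000.0, 1000.0))   # strong field, dense plasma
```

In practice the density would normally include alpha particles and other species. Adding them lowers $V_A$ and shifts some intervals toward super-Alfvénic.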

06 Oct 2025

Trivial packages, small modules with low functionality, are common in the npm ecosystem and can pose security risks despite their simplicity. This paper refines existing definitions and introduces data-only packages, which contain no executable logic. A rule-based static analysis method is developed to detect trivial and data-only packages and to evaluate their prevalence and associated risks in the 2025 npm ecosystem. The analysis shows that 17.92% of packages are trivial, with vulnerability levels comparable to those of non-trivial packages. Data-only packages are rare but also carry risks. The proposed detection tool achieves 94% accuracy (macro-F1 0.87), which makes effective large-scale analysis possible. These findings suggest that trivial and data-only packages warrant greater attention in dependency management to reduce potential technical debt and security exposure.
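The abstract does not list the paper's detection rules. To make the idea of a rule-based classifier concrete, here is a minimal sketch in which every rule is an assumption:

- Executable code means files with `.js`, `.mjs`, `.cjs`, or `.ts` extensions.
- A package with no such files counts as data-only.
- A package with at most a hypothetical 35 non-comment lines of code counts as trivial.

The paper's actual rules are likely finer-grained. For example, they may also treat JavaScript files that only export literals as data-only.

```python
# Hypothetical rule set for illustration; not the paper's actual rules.
EXECUTABLE_EXTS = (".js", ".mjs", ".cjs", ".ts")
TRIVIAL_LOC = 35  # assumed threshold on non-comment lines of code


def count_loc(source: str) -> int:
    """Count non-blank, non-comment lines with a simple line-based heuristic."""
    loc, in_block = 0, False
    for raw in source.splitlines():
        line = raw.strip()
        if in_block:
            in_block = "*/" not in line   # stay inside /* ... */ until it closes
            continue
        if not line or line.startswith("//"):
            continue
        if line.startswith("/*"):
            in_block = "*/" not in line
            continue
        loc += 1
    return loc


def classify_package(files: dict[str, str], trivial_loc: int = TRIVIAL_LOC) -> str:
    """Label a package from its {path: contents} map."""
    code = {path: src for path, src in files.items() if path.endswith(EXECUTABLE_EXTS)}
    if not code:
        return "data-only"            # no executable source files at all
    total = sum(count_loc(src) for src in code.values())
    return "trivial" if total <= trivial_loc else "non-trivial"


print(classify_package({"package.json": "{}", "index.js": "module.exports = (x) => x + 1;\n"}))
```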

31 Oct 2013

California Institute of Technology; University of Victoria; SLAC National Accelerator Laboratory; Carnegie Mellon University; New York University; University of Chicago; Stanford University; Yale University; Northwestern University; University of Florida; CERN; Argonne National Laboratory; The University of Alabama; Stony Brook University; Brookhaven National Laboratory; Rutgers University; Los Alamos National Laboratory; Deutsches Elektronen-Synchrotron DESY; University of Western Australia; Fermi National Accelerator Laboratory; Universidad de Zaragoza; Universitat de Barcelona; University of Patras; University of Virginia; University of Tennessee; Istituto Nazionale di Fisica Nucleare; University of Cape Town; Lawrence Livermore National Laboratory; University of New Hampshire; Johannes Gutenberg University Mainz; Institut d'Astrophysique de Paris; Thomas Jefferson National Accelerator Facility; Ludwig Maximilians University; College of William and Mary; Hampton University; Laboratori Nazionali di Frascati dell’INFN; North Carolina Central University; Kasetsart University; Università di Messina; Max-Planck-Institut für Physik; Massachusetts Inst. of Technology; Ewha University; Perimeter Inst. for Theoretical Physics; Max-Planck-Institut für Radioastronomie; Universität Hamburg; Università di Genova; Universität Heidelberg; North Carolina A&T State University

Dark sectors, consisting of new, light, weakly-coupled particles that do not interact with the known strong, weak, or electromagnetic forces, are a particularly compelling possibility for new physics. Nature may contain numerous dark sectors, each with their own beautiful structure, distinct particles, and forces. This review summarizes the physics motivation for dark sectors and the exciting opportunities for experimental exploration. It is the summary of the Intensity Frontier subgroup "New, Light, Weakly-coupled Particles" of the Community Summer Study 2013 (Snowmass). We discuss axions, which solve the strong CP problem and are an excellent dark matter candidate, and their generalization to axion-like particles. We also review dark photons and other dark-sector particles, including sub-GeV dark matter, which are theoretically natural, provide for dark matter candidates or new dark matter interactions, and could resolve outstanding puzzles in particle and astro-particle physics. In many cases, the exploration of dark sectors can proceed with existing facilities and comparatively modest experiments. A rich, diverse, and low-cost experimental program has been identified that has the potential for one or more game-changing discoveries. These physics opportunities should be vigorously pursued in the US and elsewhere.

29 Apr 2024

As cognitive interventions for older adults evolve, modern technologies are increasingly integrated into their development. This study investigates the efficacy of augmented reality (AR)-based physical-cognitive training for older individuals at risk of mild cognitive impairment. The training was delivered through an interactive game using Kinect motion-sensor technology. The study used a pretest-posttest experimental design. Twenty participants (mean age 66.8 years, SD = 4.6; age range 60-78 years) underwent eighteen individual training sessions of 45 to 60 minutes each, held three times a week over 1.5 months. Training modules drawn from five activities covered episodic and working memory, attention and inhibition, cognitive flexibility, and processing speed. These modules were integrated with physical movement and culturally relevant Thai-context activities. Results revealed significant improvements in inhibition, cognitive flexibility, accuracy, and reaction time. Working memory improved in accuracy but not in reaction time. These findings underscore the potential of AR interventions to enhance basic executive functions among community-dwelling older adults at risk of cognitive decline.

Teaching programming effectively is difficult. This paper explores the benefits of using Minecraft Education Edition to teach Python programming. Educators can use the game to teach a range of programming concepts, from the fundamentals to object-oriented, event-driven, and parallel programming. The approach has several benefits. It is highly engaging, sharpens creativity and problem-solving skills, motivates the study of mathematics, and helps students realize the importance of programming.

OpenThaiGPT 1.5 is an advanced Thai language chat model based on Qwen v2.5, fine-tuned on over 2,000,000 Thai instruction pairs. This report provides an engineering perspective on the model's development, capabilities, and performance. We discuss the model's architecture, training process, and key features, including multi-turn conversation support, Retrieval Augmented Generation (RAG) compatibility, and tool-calling functionality. Benchmark results demonstrate OpenThaiGPT 1.5's state-of-the-art performance on various Thai language tasks, where it outperforms other open-source Thai language models. We also address practical considerations such as GPU memory requirements and deployment strategies.
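The abstract mentions GPU memory requirements without giving numbers. A common first-order estimate for the weights alone is parameter count × bytes per parameter. The sketch below uses a hypothetical 7-billion-parameter model, because the abstract does not state OpenThaiGPT 1.5's size. It ignores KV cache, activations, and runtime overhead, all of which add to the real footprint.

```python
# Bytes per parameter for common weight formats (quantization overhead ignored).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(n_params: float, dtype: str = "bf16") -> float:
    """Approximate GPU memory for model weights only, in GiB."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


# Hypothetical 7-billion-parameter model; KV cache and activations come on top.
for dtype in ("bf16", "int8", "int4"):
    print(dtype, round(weight_memory_gib(7e9, dtype), 1), "GiB")
```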

16 Jul 2021

Robustly handling collisions between individual particles in a large particle-based simulation has been a challenging problem. We introduce particle merging-and-splitting, a simple scheme for robustly handling collisions between particles that prevents inter-penetrations of separate objects without introducing numerical instabilities. This scheme merges colliding particles at the beginning of the time-step and then splits them at the end of the time-step. Thus, collisions last for the duration of a time-step, allowing neighboring particles of the colliding particles to influence each other. We show that our merging-and-splitting method is effective in robustly handling collisions and avoiding penetrations in particle-based simulations. We also show how our merging-and-splitting approach can be used for coupling different simulation systems using different and otherwise incompatible integrators. We present simulation tests involving complex solid-fluid interactions, including solid fractures generated by fluid interactions.
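The following is a minimal two-particle sketch of the merge, step, and split cycle described above. It rests on three assumptions, none of which come from the abstract:

- The merge is perfectly inelastic, using a mass-weighted position and velocity, so momentum is conserved.
- The merged particle advances by one symplectic Euler step.
- On splitting, both particles keep their original offsets and inherit the merged velocity.

The paper's actual splitting rule and its handling of larger colliding clusters are not given, so treat this as illustrative only.

```python
from dataclasses import dataclass

Vec = tuple[float, float]


@dataclass
class Particle:
    x: Vec      # position
    v: Vec      # velocity
    m: float    # mass


def merge(a: Particle, b: Particle) -> Particle:
    """Combine two colliding particles into one, conserving mass and momentum."""
    m = a.m + b.m
    x = tuple((a.m * pa + b.m * pb) / m for pa, pb in zip(a.x, b.x))
    v = tuple((a.m * va + b.m * vb) / m for va, vb in zip(a.v, b.v))
    return Particle(x, v, m)


def step(p: Particle, force: Vec, dt: float) -> Particle:
    """One symplectic Euler step under an external force."""
    v = tuple(vi + fi / p.m * dt for vi, fi in zip(p.v, force))
    x = tuple(xi + vi * dt for xi, vi in zip(p.x, v))
    return Particle(x, v, p.m)


def split(before: Particle, after: Particle, a: Particle, b: Particle) -> tuple[Particle, Particle]:
    """Restore the original particles; both moved rigidly with the merged particle."""
    shift = tuple(n - o for n, o in zip(after.x, before.x))

    def moved(p: Particle) -> Vec:
        return tuple(xi + d for xi, d in zip(p.x, shift))

    return Particle(moved(a), after.v, a.m), Particle(moved(b), after.v, b.m)


a = Particle((0.0, 0.0), (1.0, 0.0), 1.0)    # approaching head-on
b = Particle((0.1, 0.0), (-1.0, 0.0), 1.0)
merged = merge(a, b)
a2, b2 = split(merged, step(merged, force=(2.0, 0.0), dt=0.5), a, b)
print(a2, b2)
```

Because the pair moves as one body during the step, the two particles cannot interpenetrate within that step. Their separation is preserved while the total momentum changes only by the applied impulse.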

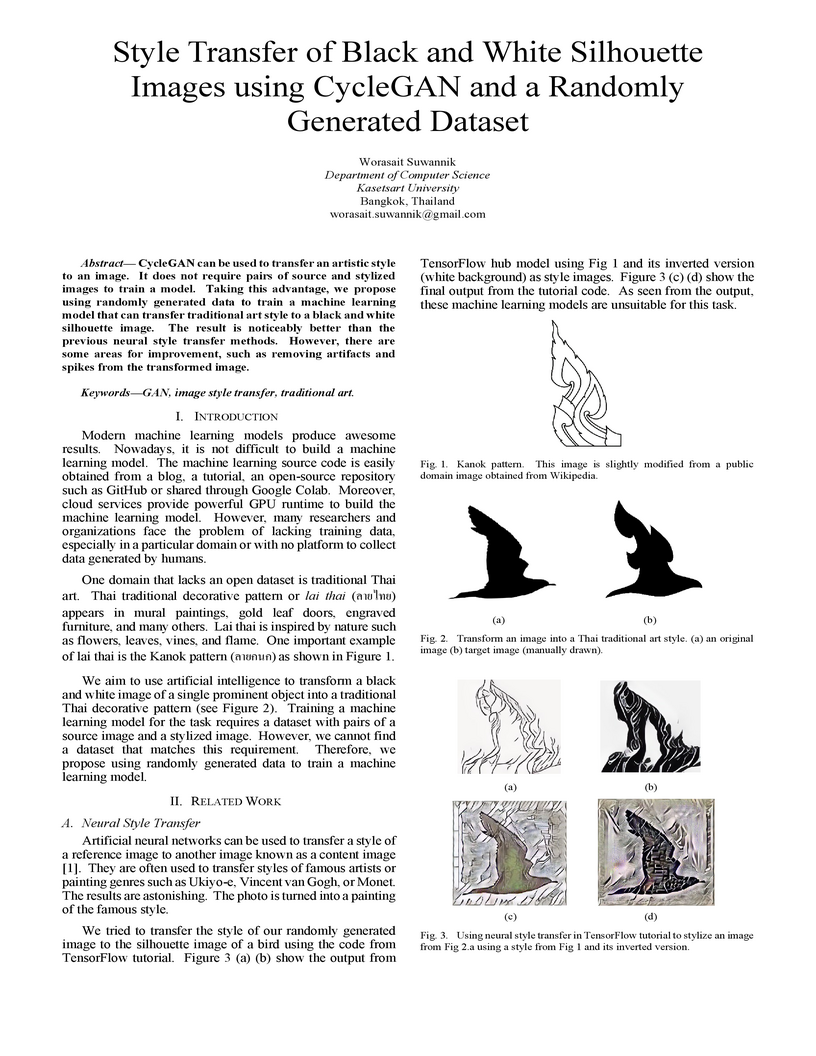

CycleGAN can be used to transfer an artistic style to an image without requiring pairs of source and stylized images for training. Taking advantage of this, we propose using randomly generated data to train a machine learning model that transfers a traditional art style to a black-and-white silhouette image. The results are noticeably better than those of previous neural style transfer methods. However, there is room for improvement, such as removing artifacts and spikes from the transformed images.
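The reason CycleGAN needs no paired images is its cycle-consistency loss. Mapping an image to the other domain and back should reproduce the original. Here is a minimal sketch of that loss term alone. The two "generators" are hypothetical toy functions standing in for the real convolutional networks. The full CycleGAN objective also adds adversarial losses for both domains, weighted against this term.

```python
def mean_abs_error(a, b):
    """L1 distance between two equal-length vectors, averaged over entries."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, xs, ys):
    """x -> G -> F should return x, and y -> F -> G should return y."""
    forward = sum(mean_abs_error(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(mean_abs_error(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


# Toy stand-ins for the two generators (hypothetical; real ones are CNNs).
G = lambda img: [2.0 * p for p in img]   # "silhouette -> styled"
F = lambda img: [p / 2.0 for p in img]   # "styled -> silhouette"
print(cycle_consistency_loss(G, F, xs=[[1.0, 2.0]], ys=[[4.0]]))  # -> 0.0
```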

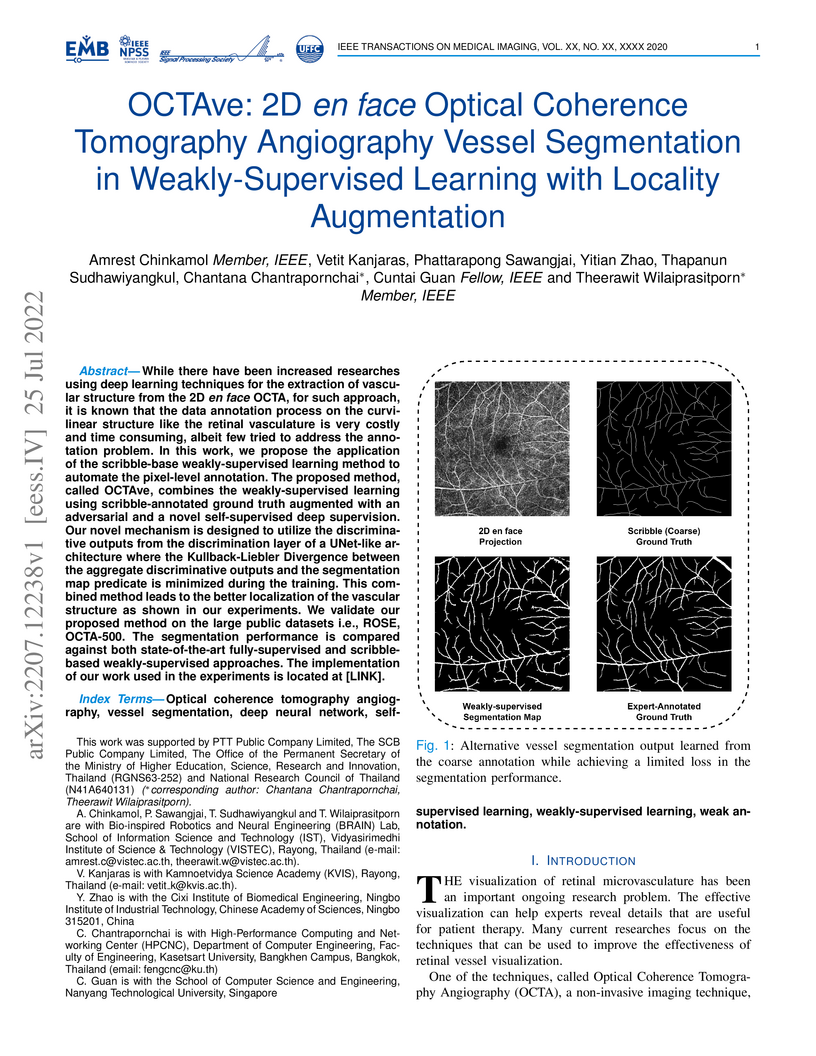

Research applying deep learning to extract vascular structure from 2D en face OCTA images has increased. However, pixel-level annotation of curvilinear structures such as the retinal vasculature is known to be very costly and time-consuming, and few studies have tried to address this annotation problem.

In this work, we propose applying a scribble-based weakly-supervised learning method to automate pixel-level annotation. The proposed method, called OCTAve, combines weakly-supervised learning from scribble-annotated ground truth with adversarial and novel self-supervised deep supervision. Our novel mechanism uses the discriminative outputs from the discrimination layer of a UNet-like architecture. During training, it minimizes the Kullback-Leibler divergence between the aggregated discriminative outputs and the predicted segmentation map. As our experiments show, this combined method leads to better localization of the vascular structure. We validate the proposed method on large public datasets, namely ROSE and OCTA-500. We compare its segmentation performance against both state-of-the-art fully-supervised and scribble-based weakly-supervised approaches. The implementation of our work used in the experiments is located at [LINK].
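To make the training signal concrete, here is a minimal sketch of a pixel-wise KL divergence between an aggregated probability map and a predicted segmentation map. Each pixel is treated as a Bernoulli foreground probability. Several details are assumptions, because the abstract does not specify them:

- the aggregation rule, here a simple pixel-wise mean;
- the direction of the divergence;
- the clipping constant.

The maps are flattened toy lists, not real network outputs.

```python
import math


def aggregate(maps):
    """Pixel-wise mean of several probability maps (hypothetical aggregation rule)."""
    return [sum(vals) / len(vals) for vals in zip(*maps)]


def bernoulli_kl(p, q, eps=1e-7):
    """Mean pixel-wise KL(p || q) between two maps of foreground probabilities."""
    total = 0.0
    for pi, qi in zip(p, q):
        pi = min(max(pi, eps), 1.0 - eps)   # clip to avoid log(0)
        qi = min(max(qi, eps), 1.0 - eps)
        total += pi * math.log(pi / qi) + (1.0 - pi) * math.log((1.0 - pi) / (1.0 - qi))
    return total / len(p)


# Flattened 4-pixel toy maps (made-up numbers).
disc_outputs = [[0.9, 0.2, 0.7, 0.1], [0.8, 0.1, 0.6, 0.2]]
segmentation = [0.85, 0.15, 0.65, 0.15]
print(bernoulli_kl(aggregate(disc_outputs), segmentation))
```

Minimizing this term pushes the segmentation map toward agreement with the aggregated discriminative outputs, pixel by pixel.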

Carnegie Mellon University; Tel Aviv University; University of Ljubljana; University of Regina; Argonne National Laboratory; Stony Brook University; Seoul National University; Los Alamos National Laboratory; Duke University; Temple University; Syracuse University; University of Virginia; Florida International University; Old Dominion University; Kent State University; University of Massachusetts; Rutgers, The State University of New Jersey; Universidad de Valencia; Kharkov Institute of Physics and Technology; The Catholic University of America; Thomas Jefferson National Accelerator Facility; Norfolk State University; College of William and Mary; California State University; Ohio University; Hampton University; Yerevan Physics Institute; Università di Bari; North Carolina Central University; Texas A&M University-Kingsville; Kasetsart University; Longwood University; Faculté des Sciences de Monastir; Clermont Université; I.N.F.N. Sezione di Catania; INFN/Sezione Sanità; Institut de Physique Nucléaire CNRS-IN2P3; INFN/Sezione di Roma; George Washington University; IRFU, CEA, Université Paris-Saclay

We report the first longitudinal/transverse separation of the deeply virtual exclusive $\pi^0$ electroproduction cross section off the neutron and the coherent deuteron. The corresponding four structure functions $d\sigma_L/dt$, $d\sigma_T/dt$, $d\sigma_{LT}/dt$, and $d\sigma_{TT}/dt$ are extracted as a function of the momentum transfer to the recoil system at $Q^2 = 1.75$ GeV$^2$ and $x_B = 0.36$. The $ed \to ed\pi^0$ cross sections are found to be compatible with the small values expected from theoretical models. The $en \to en\pi^0$ cross sections are dominated by the response to transversely polarized photons. They are in good agreement with calculations based on the transversity GPDs of the nucleon. By combining these results with previous measurements of $\pi^0$ electroproduction off the proton, we present a flavor decomposition of the $u$ and $d$ quark contributions to the cross section.
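The four structure functions enter the $\phi$-dependent cross section through the standard Rosenbluth-type decomposition below. It is written in one common convention. Overall virtual-photon flux factors and the sign of the LT term differ between papers, so treat this as a sketch rather than the authors' exact definitions. Here $\epsilon$ is the virtual-photon polarization parameter and $\phi$ is the angle between the leptonic and hadronic planes:

$$
2\pi\,\frac{d^2\sigma}{dt\,d\phi} = \frac{d\sigma_T}{dt} + \epsilon\,\frac{d\sigma_L}{dt} + \sqrt{2\epsilon(1+\epsilon)}\,\frac{d\sigma_{LT}}{dt}\cos\phi + \epsilon\,\frac{d\sigma_{TT}}{dt}\cos 2\phi
$$

The $\cos\phi$ and $\cos 2\phi$ modulations isolate the interference terms. The terms $d\sigma_T/dt$ and $d\sigma_L/dt$, by contrast, separate only if the $\phi$-independent part is measured at two values of $\epsilon$. Two beam energies are the usual way to achieve this, although the abstract does not say how the separation was done. Writing $\sigma_i = d\sigma_T/dt + \epsilon_i\, d\sigma_L/dt$ for $i = 1, 2$ gives:

$$
\frac{d\sigma_L}{dt} = \frac{\sigma_1 - \sigma_2}{\epsilon_1 - \epsilon_2}, \qquad \frac{d\sigma_T}{dt} = \frac{\epsilon_1\sigma_2 - \epsilon_2\sigma_1}{\epsilon_1 - \epsilon_2}.
$$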

18 Sep 2025

Agentic coding tools take goals written in natural language as input, break them down into specific tasks, and write and execute the actual code with minimal human intervention. Key to this process are agent manifests, configuration files (such as `Claude.md`) that provide agents with essential project context, identity, and operational rules. However, the lack of comprehensive and accessible documentation for creating these manifests presents a significant challenge for developers. We analyzed 253 `Claude.md` files from 242 repositories to identify structural patterns and common content. Manifests typically have shallow hierarchies, with one main heading and several subsections. Their content is dominated by operational commands, technical implementation notes, and high-level architecture.
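One rough way to measure the "shallow hierarchy" finding on a single manifest is to count Markdown ATX headings per level. This sketch is an assumption about how such a measurement could work, not the authors' tooling. It ignores setext headings and indented headings, and it skips lines inside fenced code blocks so that shell comments starting with `#` are not counted.

```python
import re

ATX_HEADING = re.compile(r"^(#{1,6})\s+\S")   # "# Title", "## Section", ...
FENCE = "`" * 3                                # a Markdown code-fence marker


def heading_profile(markdown: str) -> tuple[dict[int, int], int]:
    """Count ATX headings per level and return (counts, maximum depth)."""
    counts: dict[int, int] = {}
    in_fence = False
    for line in markdown.splitlines():
        if line.lstrip().startswith(FENCE):
            in_fence = not in_fence            # toggle on opening/closing fences
            continue
        match = None if in_fence else ATX_HEADING.match(line)
        if match:
            level = len(match.group(1))
            counts[level] = counts.get(level, 0) + 1
    return counts, max(counts, default=0)


sample = "# CLAUDE.md\n## Commands\n## Architecture\n### Data flow\n"
print(heading_profile(sample))   # -> ({1: 1, 2: 2, 3: 1}, 3)
```

Aggregating these profiles across many files yields a distribution of heading depths, similar in spirit to the hierarchy statistics the study reports.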

This paper presents a LINE Bot system that diagnoses rice diseases from actual paddy field images. It is an easy-to-use, automatic system designed to help rice farmers improve rice yield and quality. The target images were taken in the actual paddy environment without special sample preparation. We used a deep neural network to detect rice diseases from the images. To improve on our previous research on rice leaf disease detection, we developed a training and refinement process for the object detection model. The process was based on analyzing the model's predictions, and it can be reused to improve the quality of the database for subsequent training. The deployment model for our LINE Bot system used YOLOv3, the best-performing technique from our previous paper, trained on the refined training dataset. Measured on 5 target classes, the deployment model's Average True Positive Point improved from 91.1% in the previous paper to 95.6% in this study. We therefore used this model for the Rice Disease LINE Bot system. The system worked automatically in real time to suggest primary diagnosis results to users in the LINE group. The group included rice farmers and rice disease specialists, who could communicate freely via chat. In the real LINE Bot deployment, the model's performance, measured with our self-defined Average True Positive Point metric, averaged 78.86%. The system was fast, taking only 2-3 s for the detection process on our server.

This chapter presents a methodology for diagnosing pigmented skin lesions using convolutional neural networks (CNNs). The approach is evaluated using both new CNN models and re-trained modifications of pre-existing CNN models. The experimental results showed that re-training CNN models pre-trained on large general-purpose image classification datasets gives more accurate skin lesion classification than training CNN models solely on the dermatoscopic images. The best performance was achieved by re-training a modified version of the ResNet-50 convolutional neural network, with an accuracy of 93.89%. Analysis by skin lesion pathology type was also performed. Classification accuracies for melanoma and basal cell carcinoma were 79.13% and 82.88%, respectively.

31 Aug 2018

Obstructive sleep apnea (OSA) is a common sleep disorder caused by abnormal breathing. Severe OSA can lead to serious complications such as sudden cardiac death (SCD). Polysomnography (PSG) is the gold standard for OSA diagnosis. It records many signals from the patient's body for at least one whole night and calculates the Apnea-Hypopnea Index (AHI), the number of apnea or hypopnea events per hour. This value is then used to classify patients into OSA severity levels. However, PSG has many disadvantages and limitations. We therefore propose a novel methodology for OSA severity classification using a deep learning approach. We focused on classification between normal subjects (AHI < 5) and severe OSA patients (AHI > 30). Fifteen-second raw ECG records containing apnea or hypopnea events were used with a series of deep learning models. The main advantages of the proposed method are easier data acquisition, instantaneous OSA severity detection, and effective feature extraction without requiring expert domain knowledge. The method was evaluated with k-fold cross-validation on 545 subjects (364 normal and 181 severe OSA patients) from the MrOS Sleep Study (Visit 1) database. OSA severity classification reached an accuracy of 79.45%, and sensitivity, specificity, and F-score were also evaluated. This is significantly higher than the results of an SVM classifier using RR-interval and ECG-derived respiration (EDR) features. These promising results suggest that the method is a good starting point for detecting OSA severity from a single-channel ECG, which can be obtained from wearable devices at home. The method could also be applied to near-real-time alerting systems that warn before SCD occurs.
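The labeling behind this binary task is simple arithmetic: AHI is events divided by hours of recording. Subjects are then labeled normal (AHI < 5) or severe (AHI > 30). Here is a minimal sketch using only those two thresholds from the abstract. Subjects in between fall outside the study's binary task, and the function returns `None` for them. The function names and example counts are hypothetical.

```python
def apnea_hypopnea_index(n_events: int, hours: float) -> float:
    """AHI = number of apnea + hypopnea events per hour."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return n_events / hours


def study_label(ahi: float):
    """Binary-task label: 'normal' (AHI < 5), 'severe' (AHI > 30), else None."""
    if ahi < 5:
        return "normal"
    if ahi > 30:
        return "severe"
    return None   # mild/moderate subjects were not part of this classification


print(study_label(apnea_hypopnea_index(20, 8.0)))    # AHI 2.5  -> normal
print(study_label(apnea_hypopnea_index(280, 8.0)))   # AHI 35.0 -> severe
```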

14 Dec 2017

Two non-harmonic canonical-dissipative limit cycle oscillators are considered that oscillate in one-dimensional Smorodinsky-Winternitz potentials. It is shown that the standard approach of the canonical-dissipative framework to introduce dissipative forces leads naturally to a coupling force between the oscillators that establishes synchronization. The non-harmonic character of the limit cycles in the context of anchoring, the phase difference between the synchronized oscillators, and the degree of synchronization are studied in detail.
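For a single oscillator, the canonical-dissipative construction can be sketched numerically. It uses the one-dimensional Smorodinsky-Winternitz Hamiltonian $H = p^2/2 + a x^2 + b/x^2$, with the equations $\dot x = \partial H/\partial p$ and $\dot p = -\partial H/\partial x - \mu (H - E)\,\partial H/\partial p$. These give $dH/dt = -\mu (H - E) p^2$, so the energy is driven onto the limit cycle $H = E$ from either side. The parameter values $a$, $b$, $E$, $\mu$ and the initial conditions are assumptions chosen for illustration. The sketch does not model the coupling between two oscillators that the paper derives.

```python
def simulate(a=1.0, b=1.0, E=3.0, mu=1.0, x0=1.0, p0=0.5, dt=1e-3, t_end=30.0):
    """RK4 integration of one canonical-dissipative oscillator; returns (x, p, H)."""

    def hamiltonian(x, p):
        return 0.5 * p * p + a * x * x + b / (x * x)

    def rhs(x, p):
        h = hamiltonian(x, p)
        dx = p                                              # dH/dp
        dp = -(2 * a * x - 2 * b / x**3) - mu * (h - E) * p # -dH/dx - mu (H - E) dH/dp
        return dx, dp

    x, p = x0, p0
    for _ in range(int(t_end / dt)):
        k1 = rhs(x, p)
        k2 = rhs(x + 0.5 * dt * k1[0], p + 0.5 * dt * k1[1])
        k3 = rhs(x + 0.5 * dt * k2[0], p + 0.5 * dt * k2[1])
        k4 = rhs(x + dt * k3[0], p + dt * k3[1])
        x += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        p += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    return x, p, hamiltonian(x, p)


print(simulate())         # starts below E (H = 2.125) and is pumped up to E
print(simulate(p0=2.0))   # starts above E (H = 4.0) and is damped down to E
```

The $b/x^2$ barrier keeps the motion on $x > 0$. The resulting limit cycle is therefore not a harmonic ellipse in phase space, and that non-harmonic character is what the abstract refers to.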

02 Oct 2017

Software ecosystems have had a tremendous impact on computing and society, capturing the attention of businesses, researchers, and policy makers alike. Massive ecosystems like the JavaScript node package manager (npm) show how readily packages are available for use by software projects. Because of their high dimensionality and complexity, software ecosystems have seen limited analysis. In this paper, we leverage topological methods to visualize high-dimensional datasets from a software ecosystem. Topological Data Analysis (TDA) is an emerging technique for analyzing high-dimensional datasets that enables us to study the shape of data. We generate the topology of the npm software ecosystem and study its localities to uncover insights and extract patterns of existing libraries. Our real-world example reveals many interesting insights and patterns that describe the shape of a software ecosystem.
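Mapper is one widely used TDA construction for visualizing the shape of high-dimensional data. The abstract does not name the authors' exact pipeline, so treat this as a generic sketch rather than their method. The construction has three steps:

1. Project points through a "lens" function.
2. Cover the lens range with overlapping intervals.
3. Cluster each interval's preimage and connect clusters that share points.

The lens, interval count, overlap, and single-linkage `eps` are illustrative parameters. For npm, the points might be per-package feature vectors, which is a hypothetical choice.

```python
import math
from itertools import combinations


def _clusters(points, members, eps):
    """Single-linkage clusters: points within eps of each other are chained together."""
    unvisited, clusters = set(members), []
    while unvisited:
        seed = unvisited.pop()
        cluster, stack = {seed}, [seed]
        while stack:
            i = stack.pop()
            near = [j for j in unvisited if math.dist(points[i], points[j]) <= eps]
            for j in near:
                unvisited.remove(j)
                cluster.add(j)
                stack.append(j)
        clusters.append(frozenset(cluster))
    return clusters


def mapper(points, lens, n_intervals=4, overlap=0.25, eps=1.0):
    """Minimal Mapper graph: returns (nodes as sets of point indices, edges)."""
    values = [lens(p) for p in points]
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_intervals or 1.0   # guard against a constant lens
    pad = overlap * width                    # how far intervals overlap
    nodes = []
    for k in range(n_intervals):
        a, b = lo + k * width - pad, lo + (k + 1) * width + pad
        members = [i for i, v in enumerate(values) if a <= v <= b]
        nodes.extend(_clusters(points, members, eps))
    edges = {(u, w) for u, w in combinations(range(len(nodes)), 2) if nodes[u] & nodes[w]}
    return nodes, edges


# Toy data: ten points on a line, so the Mapper graph should be a simple path.
pts = [(float(i),) for i in range(10)]
nodes, edges = mapper(pts, lens=lambda p: p[0], eps=1.5)
print(len(nodes), sorted(edges))
```

Branches, loops, and disconnected components in the resulting graph are the kinds of "shape" features that such an analysis inspects.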

Efficient and accurate BRDF acquisition of real-world materials is a challenging research problem that requires sampling millions of incident light and viewing directions. To accelerate acquisition, one needs to find a minimal set of sampling directions from which the full BRDF can be recovered accurately and robustly. In this paper, we formulate BRDF acquisition as a compressed sensing problem, where the sensing operator sub-samples the BRDF signal according to a set of optimal sample directions. To solve this problem, we propose the Fast and Robust Optimal Sampling Technique (FROST). FROST designs a provably optimal sub-sampling operator that places light-view samples so as to minimize the recovery error. It casts the design of this operator as a sparse representation problem under the Multiple Measurement Vector (MMV) signal model. The reformulation is exact, i.e., it involves no approximations. It therefore converts an intractable combinatorial problem into one that can be solved with standard optimization techniques. As a result, FROST comes with strong theoretical guarantees from the field of compressed sensing. We perform a thorough analysis of FROST-BRDF using 10-fold cross-validation on publicly available BRDF datasets. The results show significant advantages in reconstruction quality over the state of the art. Finally, FROST is simple both conceptually and in implementation, produces consistent results on every run, and is at least two orders of magnitude faster than prior art.
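To see what a sub-sampling operator does in this setting, here is a simplified linear sketch. Assume the BRDF lies in the span of a known basis, $x = Bc$. The operator $S$ keeps only the measured rows, giving $y = Sx$, and the full signal is recovered by least squares on those rows. This stand-in is not FROST. Real BRDFs are not exactly in a small linear span, and FROST's contribution is choosing the rows optimally through its MMV formulation, whereas this sketch picks them at random. The condition-number check is a common heuristic for sample quality, not FROST's criterion.

```python
import numpy as np


def recover_from_samples(basis: np.ndarray, sample_idx: np.ndarray, samples: np.ndarray) -> np.ndarray:
    """Recover x = basis @ c from y = S x, where S keeps the rows in sample_idx."""
    coeffs, *_ = np.linalg.lstsq(basis[sample_idx], samples, rcond=None)
    return basis @ coeffs


def sampling_quality(basis: np.ndarray, sample_idx: np.ndarray) -> float:
    """Condition number of the sampled basis; lower means more robust to noise."""
    return float(np.linalg.cond(basis[sample_idx]))


rng = np.random.default_rng(0)
n_dirs, k = 500, 6                       # hypothetical: 500 light-view pairs, 6 basis vectors
basis = rng.standard_normal((n_dirs, k))
signal = basis @ rng.standard_normal(k)  # synthetic signal inside the basis span
idx = rng.choice(n_dirs, size=12, replace=False)   # random, not optimized, directions
estimate = recover_from_samples(basis, idx, signal[idx])
rel_err = np.linalg.norm(estimate - signal) / np.linalg.norm(signal)
print(rel_err, sampling_quality(basis, idx))
```

An optimal sampler would choose `idx` to keep the recovery error low under noise and model mismatch. Random selection only happens to work here because the synthetic signal lies exactly in the basis span.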

Cloud computing helps reduce costs, increase business agility, and deploy solutions with a high return on investment for many types of applications. However, data security is of paramount importance to many users and often restrains their adoption of cloud technologies. Various approaches, e.g., data encryption, anonymization, replication, and verification, help enforce different facets of data security. Secret sharing is a particularly interesting cryptographic technique. Its most advanced variants simultaneously enforce data privacy, availability, and integrity while allowing computation on encrypted data. This paper therefore aims to comprehensively survey secret sharing schemes with respect to data security, data access, and costs in the pay-as-you-go paradigm.
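For readers new to the topic, the sketch below shows the classic Shamir $(k, n)$ threshold scheme, the starting point for many of the variants such a survey covers. The secret becomes the constant term of a random degree-$(k-1)$ polynomial over a prime field. Any $k$ shares recover it by Lagrange interpolation at $x = 0$, while fewer shares reveal nothing about it. The field prime is an illustrative choice. The sketch omits the integrity and verifiability features of the more advanced schemes.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; shares live in the field GF(PRIME)


def split_secret(secret: int, k: int, n: int, prime: int = PRIME) -> list[tuple[int, int]]:
    """Shamir (k, n) sharing: any k of the n shares reconstruct the secret."""
    if not 0 <= secret < prime:
        raise ValueError("secret must lie in [0, prime)")
    if not 1 <= k <= n:
        raise ValueError("need 1 <= k <= n")
    # Random polynomial of degree k-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(prime) for _ in range(k - 1)]

    def f(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):          # Horner's rule, reduced mod prime
            acc = (acc * x + c) % prime
        return acc

    return [(x, f(x)) for x in range(1, n + 1)]


def recover_secret(shares: list[tuple[int, int]], prime: int = PRIME) -> int:
    """Lagrange interpolation of the shared polynomial at x = 0 over GF(prime)."""
    secret = 0
    for j, (xj, yj) in enumerate(shares):
        num, den = 1, 1
        for m, (xm, _) in enumerate(shares):
            if m != j:
                num = num * (-xm) % prime
                den = den * (xj - xm) % prime
        secret = (secret + yj * num * pow(den, -1, prime)) % prime
    return secret


shares = split_secret(123456789, k=3, n=5)
print(recover_secret(shares[:3]), recover_secret([shares[0], shares[2], shares[4]]))
```

The scheme is also additively homomorphic, which is the simplest form of computing on shared data. If two secrets are shared at the same $x$ points, adding their shares pointwise yields valid shares of the sum, and no party ever sees either secret.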
