Korea Advanced Institute of Science and Technology

04 Dec 2024

We study an optimal control problem encompassing investment, consumption, and retirement decisions under exponential (CARA-type) utility. The financial market comprises a bond with constant drift and a stock following geometric Brownian motion. The agent receives continuous income, consumes over time, and has the option to retire irreversibly, gaining increased leisure post-retirement compared to pre-retirement. The objective is to maximize the expected exponential utility of weighted consumption and leisure over an infinite horizon. Using a martingale approach and dual value function, we derive implicit solutions for the optimal portfolio, consumption, and retirement time. The analysis highlights key contributions: first, the equivalent condition for no retirement is characterized by a specific income threshold; second, the influence of income and leisure levels on optimal portfolio, consumption, and retirement decisions is thoroughly examined. These results provide valuable insights into the interplay between financial and lifestyle choices in retirement planning.

Multimodal Magnetic Resonance (MR) Imaging plays a crucial role in disease

diagnosis due to its ability to provide complementary information by analyzing

a relationship between multimodal images on the same subject. Acquiring all MR

modalities, however, can be expensive, and, during a scanning session, certain

MR images may be missed depending on the study protocol. The typical solution

would be to synthesize the missing modalities from the acquired images such as

using generative adversarial networks (GANs). Yet, GANs constructed with

convolutional neural networks (CNNs) are likely to suffer from a lack of global

relationships and mechanisms to condition the desired modality. To address

this, in this work, we propose a transformer-based modality infuser designed to

synthesize multimodal brain MR images. In our method, we extract

modality-agnostic features from the encoder and then transform them into

modality-specific features using the modality infuser. Furthermore, the

modality infuser captures long-range relationships among all brain structures,

leading to the generation of more realistic images. We carried out experiments

on the BraTS 2018 dataset, translating between four MR modalities, and our

experimental results demonstrate the superiority of our proposed method in

terms of synthesis quality. In addition, we conducted experiments on a brain

tumor segmentation task and different conditioning methods.

Adaptive-RAG introduces a framework that dynamically selects one of three retrieval-augmented LLM strategies based on an input query's predicted complexity. This approach balances accuracy and computational cost, achieving competitive performance while significantly reducing the average number of retrieval and generation steps compared to fixed multi-step methods.

The Convolutional Block Attention Module (CBAM) enhances Convolutional Neural Networks by sequentially applying channel and spatial attention mechanisms. This lightweight module consistently improves performance across various architectures and tasks, including image classification and object detection, with minimal additional computational cost.

The Riemannian Diffusion Language Model (RDLM) introduces a continuous diffusion framework for discrete data that leverages the geometry of categorical distributions on a statistical manifold. This approach achieves state-of-the-art performance for diffusion models across language modeling, pixel-level image generation, and DNA sequence design, with scalable training enabled by a simulation-free approximation method.

Time series analysis provides essential insights for real-world system dynamics and informs downstream decision-making, yet most existing methods often overlook the rich contextual signals present in auxiliary modalities. To bridge this gap, we introduce TimeXL, a multi-modal prediction framework that integrates a prototype-based time series encoder with three collaborating Large Language Models (LLMs) to deliver more accurate predictions and interpretable explanations. First, a multi-modal prototype-based encoder processes both time series and textual inputs to generate preliminary forecasts alongside case-based rationales. These outputs then feed into a prediction LLM, which refines the forecasts by reasoning over the encoder's predictions and explanations. Next, a reflection LLM compares the predicted values against the ground truth, identifying textual inconsistencies or noise. Guided by this feedback, a refinement LLM iteratively enhances text quality and triggers encoder retraining. This closed-loop workflow-prediction, critique (reflect), and refinement-continuously boosts the framework's performance and interpretability. Empirical evaluations on four real-world datasets demonstrate that TimeXL achieves up to 8.9% improvement in AUC and produces human-centric, multi-modal explanations, highlighting the power of LLM-driven reasoning for time series prediction.

Researchers from NAVER Cloud and KAIST rigorously analyze Peri-LN, a Transformer normalization strategy that normalizes both sub-layer inputs and outputs, demonstrating its capability to maintain stable hidden-state activations and gradient flow, resulting in improved pre-training and downstream task performance for large language models.

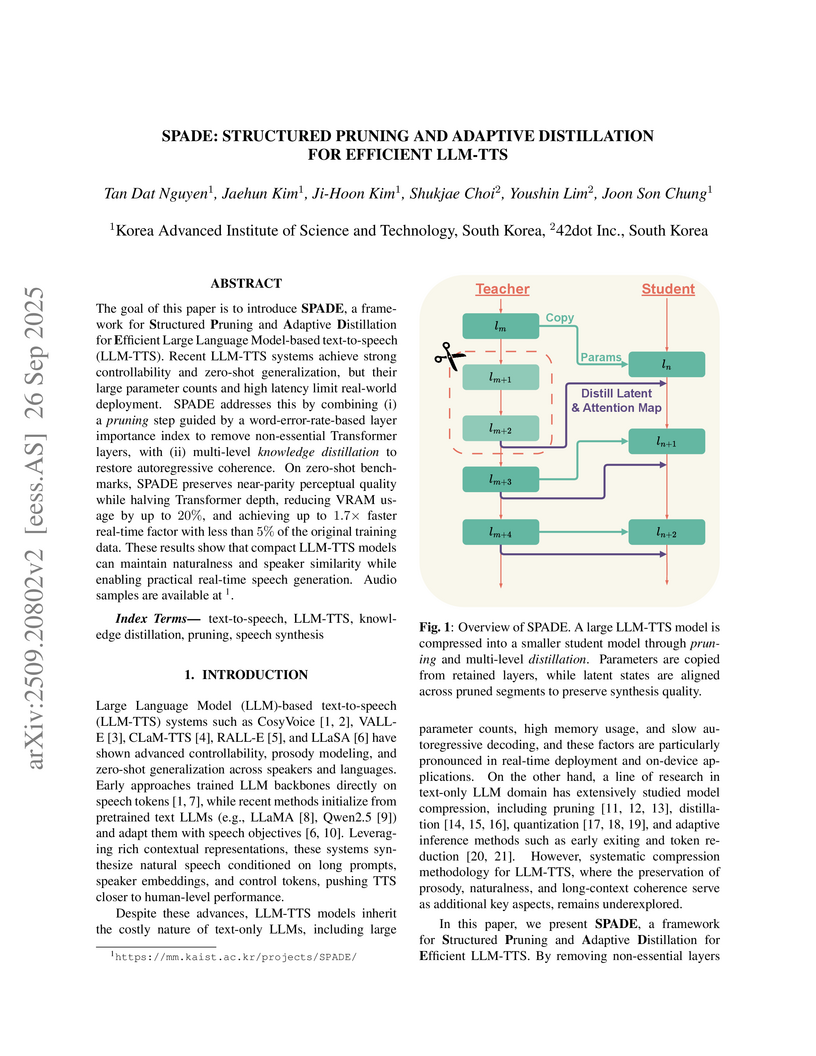

The goal of this paper is to introduce SPADE, a framework for Structured Pruning and Adaptive Distillation for Efficient Large Language Model-based text-to-speech (LLM-TTS). Recent LLM-TTS systems achieve strong controllability and zero-shot generalization, but their large parameter counts and high latency limit real-world deployment. SPADE addresses this by combining (i) a pruning step guided by a word-error-rate-based layer importance index to remove non-essential Transformer layers, with (ii) multi-level knowledge distillation to restore autoregressive coherence. On zero-shot benchmarks, SPADE preserves near-parity perceptual quality while halving Transformer depth, reducing VRAM usage by up to 20%, and achieving up to 1.7x faster real-time factor with less than 5% of the original training data. These results show that compact LLM-TTS models can maintain naturalness and speaker similarity while enabling practical real-time speech generation. Audio samples are available at this https URL.

Researchers from Shanghai Jiao Tong University, The Chinese University of Hong Kong, KAIST, and Geely developed Semantic-VAE, a Variational Autoencoder framework that incorporates semantic alignment to address the reconstruction-generation dilemma in zero-shot Text-to-Speech. This method achieved a Word Error Rate of 2.10% and speaker similarity of 0.64, outperforming prior mel-based and vanilla VAE approaches on the LibriSpeech-PC test-clean dataset.

Researchers from KAIST developed Diffusion-Link, a diffusion probabilistic model that generatively transforms audio embeddings into text-like distributions to bridge the audio-text modality gap. This lightweight module achieves state-of-the-art performance in zero-shot automatic audio captioning on AudioCaps, improving CIDEr by 52.5% over baselines without requiring external knowledge.

Spread Preference Annotation (SPA) presents an iterative framework that efficiently aligns Large Language Models (LLMs) with human preferences using only a small initial human-annotated dataset. The method achieves this by leveraging the LLM's intrinsic knowledge to generate self-refined preference labels, demonstrating a 21.13% win rate against GPT-4 on AlpacaEval 2.0 using significantly less data than previous approaches.

VoiceDiT is a multi-modal generative model developed at KAIST that synthesizes environment-aware speech and audio from diverse inputs, including text and visual prompts. The system generates high-quality, intelligible speech that seamlessly integrates with specified acoustic environments, outperforming existing models in speech intelligibility and audio realism.

Hallucination remains a major challenge in multimodal large language models (MLLMs). To address this, various contrastive decoding (CD) methods have been proposed that contrasts original logits with hallucinated logits generated from perturbed inputs. While CD has shown promise in vision-language models (VLMs), it is not well-suited for AV-LLMs, where hallucinations often emerge from both unimodal and cross-modal combinations involving audio, video, and language. These intricate interactions call for a more adaptive and modality-aware decoding strategy. In this paper, we propose Audio-Visual Contrastive Decoding (AVCD)-a novel, training-free decoding framework designed to model trimodal interactions and suppress modality-induced hallucinations in AV-LLMs. Unlike previous CD methods in VLMs that corrupt a fixed modality, AVCD leverages attention distributions to dynamically identify less dominant modalities and applies attentive masking to generate perturbed output logits. To support CD in a trimodal setting, we also reformulate the original CD framework to jointly handle audio, visual, and textual inputs. Finally, to improve efficiency, we introduce entropy-guided adaptive decoding, which selectively skips unnecessary decoding steps based on the model's confidence in its predictions. Extensive experiments demonstrate that AVCD consistently outperforms existing decoding methods. Especially, on the AVHBench dataset, it improves accuracy by 2% for VideoLLaMA2 and 7% for video-SALMONN, demonstrating strong robustness and generalizability. Our code is available at this https URL.

Researchers from KAIST and ByteDance Seed developed SCORE, a training-free inference-time scaling method that uses standardized composite rewards to enhance Text-to-Audio generation. SCORE improves both perceptual audio quality and text-alignment, achieving a 10.8% improvement in CLAP score and a 10.6% improvement in production quality over naive sampling when using balanced guidance.

Researchers from Mila and KAIST developed the Generative Flow Ant Colony Sampler (GFACS), a meta-heuristic that combines GFlowNets with Ant Colony Optimization (ACO) for combinatorial optimization. This approach generates multi-modal prior distributions of solutions and achieves superior solution quality and efficiency, outperforming strong baselines and specialized RL solvers across various benchmarks, often with reduced training time.

The SEED model applies diffusion models directly to speaker embeddings, aiming to enhance the robustness of speaker recognition systems against environmental mismatches. It improves speaker identification accuracy by up to 19.6% in noisy conditions compared to baseline systems, demonstrating an efficient alternative to traditional audio enhancement or complex disentangled representation learning approaches.

Integer linear programming (ILP) is widely utilized for various combinatorial optimization problems. Primal heuristics play a crucial role in quickly finding feasible solutions for NP-hard ILP. Although end-to-end learning-based primal heuristics (E2EPH) have recently been proposed, they are typically unable to independently generate feasible solutions and mainly focus on binary variables. Ensuring feasibility is critical, especially when handling non-binary integer variables. To address this challenge, we propose RL-SPH, a novel reinforcement learning-based start primal heuristic capable of independently generating feasible solutions, even for ILP involving non-binary integers. Experimental results demonstrate that RL-SPH rapidly obtains high-quality feasible solutions, achieving on average a 44x lower primal gap and a 2.3x lower primal integral compared to existing primal heuristics.

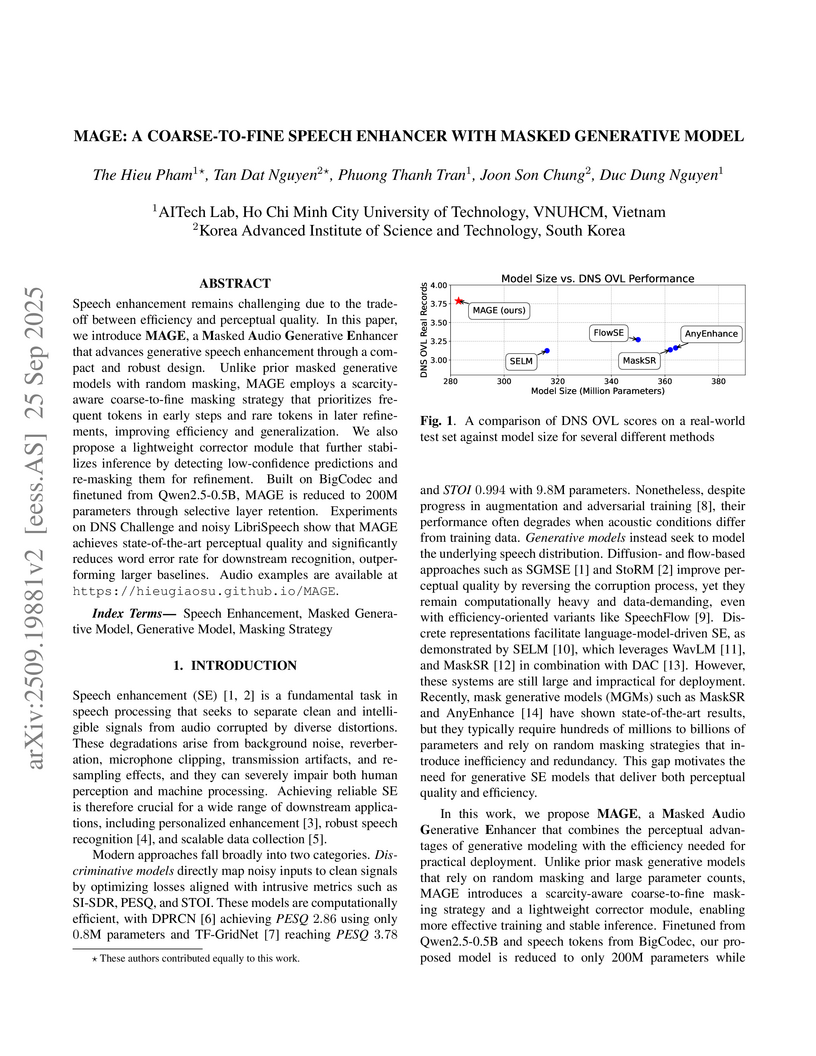

Speech enhancement remains challenging due to the trade-off between efficiency and perceptual quality. In this paper, we introduce MAGE, a Masked Audio Generative Enhancer that advances generative speech enhancement through a compact and robust design. Unlike prior masked generative models with random masking, MAGE employs a scarcity-aware coarse-to-fine masking strategy that prioritizes frequent tokens in early steps and rare tokens in later refinements, improving efficiency and generalization. We also propose a lightweight corrector module that further stabilizes inference by detecting low-confidence predictions and re-masking them for refinement. Built on BigCodec and finetuned from Qwen2.5-0.5B, MAGE is reduced to 200M parameters through selective layer retention. Experiments on DNS Challenge and noisy LibriSpeech show that MAGE achieves state-of-the-art perceptual quality and significantly reduces word error rate for downstream recognition, outperforming larger baselines. Audio examples are available at this https URL.

This survey reviews the progress of diffusion models in generating images from text, ~\textit{i.e.} text-to-image diffusion models. As a self-contained work, this survey starts with a brief introduction of how diffusion models work for image synthesis, followed by the background for text-conditioned image synthesis. Based on that, we present an organized review of pioneering methods and their improvements on text-to-image generation. We further summarize applications beyond image generation, such as text-guided generation for various modalities like videos, and text-guided image editing. Beyond the progress made so far, we discuss existing challenges and promising future directions.

In zero-shot skeleton-based action recognition (ZSAR), aligning skeleton features with the text features of action labels is essential for accurately predicting unseen actions. ZSAR faces a fundamental challenge in bridging the modality gap between the two-kind features, which severely limits generalization to unseen actions. Previous methods focus on direct alignment between skeleton and text latent spaces, but the modality gaps between these spaces hinder robust generalization learning. Motivated by the success of diffusion models in multi-modal alignment (e.g., text-to-image, text-to-video), we firstly present a diffusion-based skeleton-text alignment framework for ZSAR. Our approach, Triplet Diffusion for Skeleton-Text Matching (TDSM), focuses on cross-alignment power of diffusion models rather than their generative capability. Specifically, TDSM aligns skeleton features with text prompts by incorporating text features into the reverse diffusion process, where skeleton features are denoised under text guidance, forming a unified skeleton-text latent space for robust matching. To enhance discriminative power, we introduce a triplet diffusion (TD) loss that encourages our TDSM to correct skeleton-text matches while pushing them apart for different action classes. Our TDSM significantly outperforms very recent state-of-the-art methods with significantly large margins of 2.36%-point to 13.05%-point, demonstrating superior accuracy and scalability in zero-shot settings through effective skeleton-text matching.

There are no more papers matching your filters at the moment.