Orange Labs

Troisemaine Colin and colleagues at IMT Atlantique and Orange Labs developed a practical framework for Novel Class Discovery (NCD) in tabular data, introducing a hyperparameter optimization procedure and class number estimation that do not rely on novel class labels. Their Projection-Based NCD (PBN) model achieved an average accuracy of 71.0% across diverse tabular datasets when the number of novel classes was unknown, outperforming prior methods.

22 Feb 2023

This paper establishes a unified framework for Novel Class Discovery (NCD), a subfield addressing the challenge of identifying new, unlabeled classes by leveraging knowledge from labeled known classes. It categorizes existing NCD methods into two-stage and one-stage approaches and details key techniques like pseudo-labeling, self-supervised learning, and contrastive learning, while also differentiating NCD from related machine learning fields.

17 Jun 2022

A comprehensive survey details deep learning methods for sound source localization (SSL), reviewing 156 papers published between 2011 and 2021. The work establishes a structured taxonomy for neural network architectures, input features, output strategies, and learning paradigms, specifically focusing on challenging indoor acoustic environments.

It has been recently shown that Generative Adversarial Networks (GANs) can produce synthetic images of exceptional visual fidelity. In this work, we propose the GAN-based method for automatic face aging. Contrary to previous works employing GANs for altering of facial attributes, we make a particular emphasize on preserving the original person's identity in the aged version of his/her face. To this end, we introduce a novel approach for "Identity-Preserving" optimization of GAN's latent vectors. The objective evaluation of the resulting aged and rejuvenated face images by the state-of-the-art face recognition and age estimation solutions demonstrate the high potential of the proposed method.

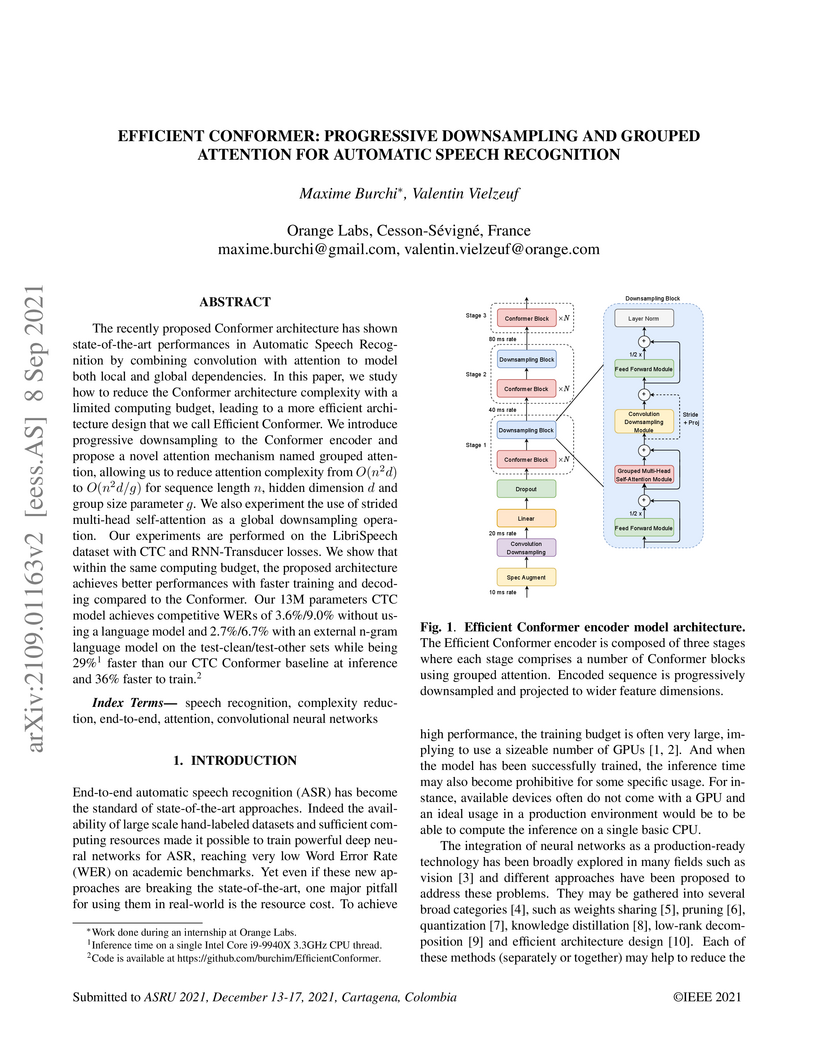

Efficient conformer: Progressive downsampling and grouped attention for automatic speech recognition

Efficient conformer: Progressive downsampling and grouped attention for automatic speech recognition

The recently proposed Conformer architecture has shown state-of-the-art performances in Automatic Speech Recognition by combining convolution with attention to model both local and global dependencies. In this paper, we study how to reduce the Conformer architecture complexity with a limited computing budget, leading to a more efficient architecture design that we call Efficient Conformer. We introduce progressive downsampling to the Conformer encoder and propose a novel attention mechanism named grouped attention, allowing us to reduce attention complexity from O(n2d) to O(n2d/g) for sequence length n, hidden dimension d and group size parameter g. We also experiment the use of strided multi-head self-attention as a global downsampling operation. Our experiments are performed on the LibriSpeech dataset with CTC and RNN-Transducer losses. We show that within the same computing budget, the proposed architecture achieves better performances with faster training and decoding compared to the Conformer. Our 13M parameters CTC model achieves competitive WERs of 3.6%/9.0% without using a language model and 2.7%/6.7% with an external n-gram language model on the test-clean/test-other sets while being 29% faster than our CTC Conformer baseline at inference and 36% faster to train.

The ability to train generative models that produce realistic, safe and useful tabular data is essential for data privacy, imputation, oversampling, explainability or simulation. However, generating tabular data is not straightforward due to its heterogeneity, non-smooth distributions, complex dependencies and imbalanced categorical features. Although diverse methods have been proposed in the literature, there is a need for a unified evaluation, under the same conditions, on a variety of datasets. This study addresses this need by fully considering the optimization of: hyperparameters, feature encodings, and architectures. We investigate the impact of dataset-specific tuning on five recent model families for tabular data generation through an extensive benchmark on 16 datasets. These datasets vary in terms of size (an average of 80,000 rows), data types, and domains. We also propose a reduced search space for each model that allows for quick optimization, achieving nearly equivalent performance at a significantly lower cost. Our benchmark demonstrates that, for most models, large-scale dataset-specific tuning substantially improves performance compared to the original configurations. Furthermore, we confirm that diffusion-based models generally outperform other models on tabular data. However, this advantage is not significant when the entire tuning and training process is restricted to the same GPU budget.

Modeling radio propagation is essential for wireless network design and performance optimization. Traditional methods rely on physics models of radio propagation, which can be inaccurate or inflexible. In this work, we propose using graph neural networks to learn radio propagation behaviors directly from real-world network data. Our approach converts the radio propagation environment into a graph representation, with nodes corresponding to locations and edges representing spatial and ray-tracing relationships between locations. The graph is generated by converting images of the environment into a graph structure, with specific relationships between nodes. The model is trained on this graph representation, using sensor measurements as target data.

We demonstrate that the graph neural network, which learns to predict radio propagation directly from data, achieves competitive performance compared to traditional heuristic models. This data-driven approach outperforms classic numerical solvers in terms of both speed and accuracy. To the best of our knowledge, we are the first to apply graph neural networks to real-world radio propagation data to generate coverage maps, enabling generative models of signal propagation with point measurements only.

For decades, Simultaneous Ascending Auction (SAA) has been the most popular mechanism used for spectrum auctions. It has recently been employed by many countries for the allocation of 5G licences. Although SAA presents relatively simple rules, it induces a complex strategic game for which the optimal bidding strategy is unknown. Considering the fact that sometimes billions of euros are at stake in an SAA, establishing an efficient bidding strategy is crucial. In this work, we model the auction as a n-player simultaneous move game with complete information and propose the first efficient bidding algorithm that tackles simultaneously its four main strategic issues: the exposure problem, the own price effect, budget constraints and the eligibility management problem. Our solution, called SMSα, is based on Simultaneous Move Monte Carlo Tree Search (SM-MCTS) and relies on a new method for the prediction of closing prices. By introducing a new reward function in SMSα, we give the possibility to bidders to define their own level of risk-aversion. Through extensive numerical experiments on instances of realistic size, we show that SMSα largely outperforms state-of-the-art algorithms, notably by achieving higher expected utility while taking less risks.

Anomaly detection in time series is a complex task that has been widely studied. In recent years, the ability of unsupervised anomaly detection algorithms has received much attention. This trend has led researchers to compare only learning-based methods in their articles, abandoning some more conventional approaches. As a result, the community in this field has been encouraged to propose increasingly complex learning-based models mainly based on deep neural networks. To our knowledge, there are no comparative studies between conventional, machine learning-based and, deep neural network methods for the detection of anomalies in multivariate time series. In this work, we study the anomaly detection performance of sixteen conventional, machine learning-based and, deep neural network approaches on five real-world open datasets. By analyzing and comparing the performance of each of the sixteen methods, we show that no family of methods outperforms the others. Therefore, we encourage the community to reincorporate the three categories of methods in the anomaly detection in multivariate time series benchmarks.

To address the contextual bandit problem, we propose an online random forest

algorithm. The analysis of the proposed algorithm is based on the sample

complexity needed to find the optimal decision stump. Then, the decision stumps

are assembled in a random collection of decision trees, Bandit Forest. We show

that the proposed algorithm is optimal up to logarithmic factors. The

dependence of the sample complexity upon the number of contextual variables is

logarithmic. The computational cost of the proposed algorithm with respect to

the time horizon is linear. These analytical results allow the proposed

algorithm to be efficient in real applications, where the number of events to

process is huge, and where we expect that some contextual variables, chosen

from a large set, have potentially non- linear dependencies with the rewards.

In the experiments done to illustrate the theoretical analysis, Bandit Forest

obtain promising results in comparison with state-of-the-art algorithms.

This paper presents a publicly available corpus of French encyclopedic history texts annotated according to the Berkeley FrameNet formalism. The main difference in our approach compared to previous works on semantic parsing with FrameNet is that we are not interested here in full text parsing but rather on partial parsing. The goal is to select from the FrameNet resources the minimal set of frames that are going to be useful for the applicative framework targeted, in our case Information Extraction from encyclopedic documents. Such an approach leverages the manual annotation of larger corpora than those obtained through full text parsing and therefore opens the door to alternative methods for Frame parsing than those used so far on the FrameNet 1.5 benchmark corpus. The approaches compared in this study rely on an integrated sequence labeling model which jointly optimizes frame identification and semantic role segmentation and identification. The models compared are CRFs and multitasks bi-LSTMs.

High quality census data are not always available in developing countries. Instead, mobile phone data are becoming a trending proxy to evaluate population density, activity and social characteristics. They offer additional advantages for infrastructure planning such as being updated in real-time, including mobility information and recording visitors' activity. We combine various data sets from Senegal to evaluate mobile phone data's potential to replace insufficient census data for infrastructure planning in developing countries. As an applied case, we test their ability at predicting domestic electricity consumption. We show that, contrary to common belief, average mobile phone activity does not correlate well with population density. However, it can provide better electricity consumption estimates than basic census data. More importantly, we use curve and network clustering techniques to enhance the accuracy of the predictions, to recover good population mapping potential and to reduce the collection of required data to substantially smaller samples.

Large-scale networks of human interaction, in particular country-wide telephone call networks, can be used to redraw geographical maps by applying algorithms of topological community detection. The geographic projections of the emerging areas in a few recent studies on single regions have been suggested to share two distinct properties: first, they are cohesive, and second, they tend to closely follow socio-economic boundaries and are similar to existing political regions in size and number. Here we use an extended set of countries and clustering indices to quantify overlaps, providing ample additional evidence for these observations using phone data from countries of various scales across Europe, Asia, and Africa: France, the UK, Italy, Belgium, Portugal, Saudi Arabia, and Ivory Coast. In our analysis we use the known approach of partitioning country-wide networks, and an additional iterative partitioning of each of the first level communities into sub-communities, revealing that cohesiveness and matching of official regions can also be observed on a second level if spatial resolution of the data is high enough. The method has possible policy implications on the definition of the borderlines and sizes of administrative regions.

26 Feb 2015

In this paper, we study the influence of the radius of a cylindrical

supporting structure on radiation properties of a conformal millimeter-wave

antenna array. Bent antenna array structures on cylindrical surfaces may have

important applications in future mobile devices. Small radii may be needed if

the antenna is printed on the edges of mobile devices and in items which human

beings are wearing, such as wrist watches, bracelets and rings. The antenna

under study consists of four linear series-fed arrays of four patch elements

and is operating at 58.8 GHz with linear polarization. The antenna array is

fabricated on polytetrafluoroethylene substrate with thickness of 0.127 mm due

to its good plasticity properties and low losses. Results for both planar and

conformal antenna arrays show rather good agreement between simulation and

measurements. The results show that conformal antenna structures allow

achieving large angular coverage and may allow beam-steering implementations if

switches are used to select between different arrays around a cylindrical

supporting structure.

The arrival of 6G technologies shall massively increase the proliferation of

on-demand and ephemeral networks. Creating and removing customized networks on

the fly notably requires fully automation to guarantee commissioning-time

acceleration. In this paper, we address the deployment automation of an

end-to-end mobile network, with special focus on RAN units (referred to as

Cloud-RAN). The Cloud-RAN automation is especially challenging due to the

strong latency constraints expected in 6G as well as the required management of

physical antennas. To automatically instantiate a Cloud-RAN chain, we introduce

a Zero Touch Commissioning (ZTC) model which performs resource discovery while

looking for both antennas and computing capacity as near as possible to the

targeted coverage zone. We validate the ZTC model by a testbed which deploys,

configures and starts the network service without human intervention while

using Kubernetes-based infrastructures as well as open-source RAN and core

elements implementing the mobile network functions.

Counterfactual learning is a natural scenario to improve web-based machine

translation services by offline learning from feedback logged during user

interactions. In order to avoid the risk of showing inferior translations to

users, in such scenarios mostly exploration-free deterministic logging policies

are in place. We analyze possible degeneracies of inverse and reweighted

propensity scoring estimators, in stochastic and deterministic settings, and

relate them to recently proposed techniques for counterfactual learning under

deterministic logging.

Few-shot learning aims at leveraging knowledge learned by one or more deep learning models, in order to obtain good classification performance on new problems, where only a few labeled samples per class are available. Recent years have seen a fair number of works in the field, introducing methods with numerous ingredients. A frequent problem, though, is the use of suboptimally trained models to extract knowledge, leading to interrogations on whether proposed approaches bring gains compared to using better initial models without the introduced ingredients. In this work, we propose a simple methodology, that reaches or even beats state of the art performance on multiple standardized benchmarks of the field, while adding almost no hyperparameters or parameters to those used for training the initial deep learning models on the generic dataset. This methodology offers a new baseline on which to propose (and fairly compare) new techniques or adapt existing ones.

The task of verbalization of RDF triples has known a growth in popularity due

to the rising ubiquity of Knowledge Bases (KBs). The formalism of RDF triples

is a simple and efficient way to store facts at a large scale. However, its

abstract representation makes it difficult for humans to interpret. For this

purpose, the WebNLG challenge aims at promoting automated RDF-to-text

generation. We propose to leverage pre-trainings from augmented data with the

Transformer model using a data augmentation strategy. Our experiment results

show a minimum relative increases of 3.73%, 126.05% and 88.16% in BLEU score

for seen categories, unseen entities and unseen categories respectively over

the standard training.

Researchers from Orange Labs and Aix Marseille University introduce HERCULES, a hyperbolic embedding model for temporal knowledge graphs that dynamically adjusts the hyperbolic space's curvature based on relation and time. Their work suggests that non-temporal hyperbolic models, when adequately trained with sufficient negative samples, can achieve state-of-the-art performance on temporal link prediction tasks, comparable to or surpassing many explicit time-aware models.

Recently, clustering moving object trajectories kept gaining interest from

both the data mining and machine learning communities. This problem, however,

was studied mainly and extensively in the setting where moving objects can move

freely on the euclidean space. In this paper, we study the problem of

clustering trajectories of vehicles whose movement is restricted by the

underlying road network. We model relations between these trajectories and road

segments as a bipartite graph and we try to cluster its vertices. We

demonstrate our approaches on synthetic data and show how it could be useful in

inferring knowledge about the flow dynamics and the behavior of the drivers

using the road network.

There are no more papers matching your filters at the moment.