Poznań University of Technology

OpenGVL introduces an open-source benchmark and tool that evaluates Vision-Language Models (VLMs) on their ability to predict robotic task progress from visual observations, aiding in automated data curation. The system quantifies a significant performance disparity between open-source and proprietary VLMs while effectively identifying various data quality issues in diverse robotics datasets.

23 Apr 2025

Researchers from Universitat Pompeu Fabra and Poznań University develop a unified framework for constructing confidence sequences in generalized linear models by connecting online learning algorithms with statistical inference, enabling tighter confidence bounds and recovering existing constructions while establishing new types of confidence sets through regret analysis.

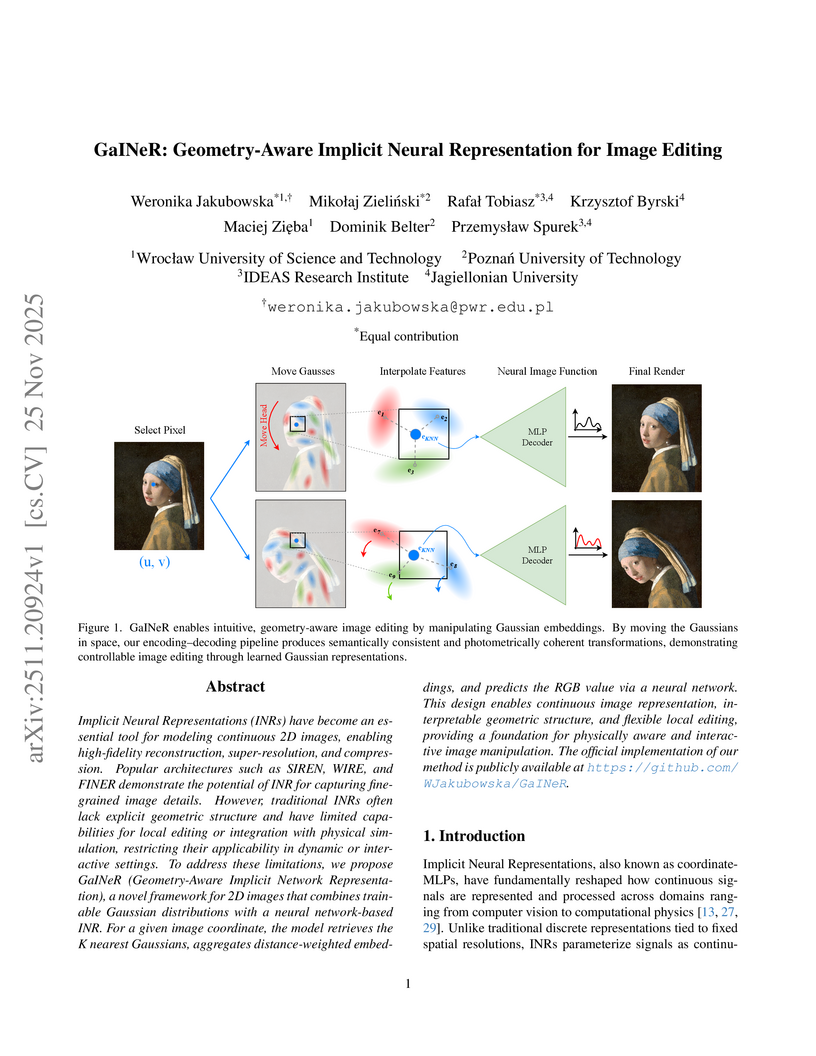

GaINeR introduces a framework that merges continuous implicit neural representations with trainable Gaussian embeddings for 2D images. This approach facilitates geometry-aware local editing and robust physical simulation integration without introducing visual artifacts, achieving a PSNR of 77.09 on the Kodak dataset.

Unifying Perspectives: Plausible Counterfactual Explanations on Global, Group-wise, and Local Levels

Unifying Perspectives: Plausible Counterfactual Explanations on Global, Group-wise, and Local Levels

The growing complexity of AI systems has intensified the need for transparency through Explainable AI (XAI). Counterfactual explanations (CFs) offer actionable "what-if" scenarios on three levels: Local CFs providing instance-specific insights, Global CFs addressing broader trends, and Group-wise CFs (GWCFs) striking a balance and revealing patterns within cohesive groups. Despite the availability of methods for each granularity level, the field lacks a unified method that integrates these complementary approaches. We address this limitation by proposing a gradient-based optimization method for differentiable models that generates Local, Global, and Group-wise Counterfactual Explanations in a unified manner. We especially enhance GWCF generation by combining instance grouping and counterfactual generation into a single efficient process, replacing traditional two-step methods. Moreover, to ensure trustworthiness, we innovatively introduce the integration of plausibility criteria into the GWCF domain, making explanations both valid and realistic. Our results demonstrate the method's effectiveness in balancing validity, proximity, and plausibility while optimizing group granularity, with practical utility validated through practical use cases.

Reproducibility is one of the core dimensions that concur to deliver Trustworthy Artificial Intelligence. Broadly speaking, reproducibility can be defined as the possibility to reproduce the same or a similar experiment or method, thereby obtaining the same or similar results as the original scientists. It is an essential ingredient of the scientific method and crucial for gaining trust in relevant claims. A reproducibility crisis has been recently acknowledged by scientists and this seems to affect even more Artificial Intelligence and Machine Learning, due to the complexity of the models at the core of their recent successes. Notwithstanding the recent debate on Artificial Intelligence reproducibility, its practical implementation is still insufficient, also because many technical issues are overlooked. In this survey, we critically review the current literature on the topic and highlight the open issues. Our contribution is three-fold. We propose a concise terminological review of the terms coming into play. We collect and systematize existing recommendations for achieving reproducibility, putting forth the means to comply with them. We identify key elements often overlooked in modern Machine Learning and provide novel recommendations for them. We further specialize these for two critical application domains, namely the biomedical and physical artificial intelligence fields.

People use language for various purposes. Apart from sharing information, individuals may use it to express emotions or to show respect for another person. In this paper, we focus on the formality level of machine-generated translations and present FAME-MT -- a dataset consisting of 11.2 million translations between 15 European source languages and 8 European target languages classified to formal and informal classes according to target sentence formality. This dataset can be used to fine-tune machine translation models to ensure a given formality level for each European target language considered. We describe the dataset creation procedure, the analysis of the dataset's quality showing that FAME-MT is a reliable source of language register information, and we present a publicly available proof-of-concept machine translation model that uses the dataset to steer the formality level of the translation. Currently, it is the largest dataset of formality annotations, with examples expressed in 112 European language pairs. The dataset is published online: this https URL .

Test-time adaptation is a promising research direction that allows the source

model to adapt itself to changes in data distribution without any supervision.

Yet, current methods are usually evaluated on benchmarks that are only a

simplification of real-world scenarios. Hence, we propose to validate test-time

adaptation methods using the recently introduced datasets for autonomous

driving, namely CLAD-C and SHIFT. We observe that current test-time adaptation

methods struggle to effectively handle varying degrees of domain shift, often

resulting in degraded performance that falls below that of the source model. We

noticed that the root of the problem lies in the inability to preserve the

knowledge of the source model and adapt to dynamically changing, temporally

correlated data streams. Therefore, we enhance the well-established

self-training framework by incorporating a small memory buffer to increase

model stability and at the same time perform dynamic adaptation based on the

intensity of domain shift. The proposed method, named AR-TTA, outperforms

existing approaches on both synthetic and more real-world benchmarks and shows

robustness across a variety of TTA scenarios. The code is available at

this https URL

Despite impressive advancements in multilingual corpora collection and model training, developing large-scale deployments of multilingual models still presents a significant challenge. This is particularly true for language tasks that are culture-dependent. One such example is the area of multilingual sentiment analysis, where affective markers can be subtle and deeply ensconced in culture. This work presents the most extensive open massively multilingual corpus of datasets for training sentiment models. The corpus consists of 79 manually selected datasets from over 350 datasets reported in the scientific literature based on strict quality criteria. The corpus covers 27 languages representing 6 language families. Datasets can be queried using several linguistic and functional features. In addition, we present a multi-faceted sentiment classification benchmark summarizing hundreds of experiments conducted on different base models, training objectives, dataset collections, and fine-tuning strategies.

The paper describes the image acquisition system able to capture images in

two separated bands of light, used to underwater autonomous navigation. The

channels are: the visible light spectrum and near infrared spectrum. The

characteristics of natural, underwater environment were also described together

with the process of the underwater image creation. The results of an experiment

with comparison of selected images acquired in these channels are discussed.

24 Feb 2017

The paper presents quantitative analysis of the video quality losses in the

homogenous HEVC video transcoder. With the use of HM15.0 reference software and

a set of test video sequences, cascaded pixel domain video transcoder (CPDT)

concept has been used to gather all the necessary data needed for the analysis.

This experiment was done for wide range of source and target bitrates. The

essential result of the work is extensive evaluation of CPDT, commonly used as

a reference in works on effective video transcoding. Until now no such

extensively performed study have been made available in the literature. Quality

degradation between transcoded video and the video that would be result of

direct compression of the original video at the same bitrate as the transcoded

one have been reported. The dependency between quality degradation caused by

transcoding and the bitrate changes of the transcoded data stream are clearly

presented on graphs.

A standard introduction to online learning might place Online Gradient

Descent at its center and then proceed to develop generalizations and

extensions like Online Mirror Descent and second-order methods. Here we explore

the alternative approach of putting Exponential Weights (EW) first. We show

that many standard methods and their regret bounds then follow as a special

case by plugging in suitable surrogate losses and playing the EW posterior

mean. For instance, we easily recover Online Gradient Descent by using EW with

a Gaussian prior on linearized losses, and, more generally, all instances of

Online Mirror Descent based on regular Bregman divergences also correspond to

EW with a prior that depends on the mirror map. Furthermore, appropriate

quadratic surrogate losses naturally give rise to Online Gradient Descent for

strongly convex losses and to Online Newton Step. We further interpret several

recent adaptive methods (iProd, Squint, and a variation of Coin Betting for

experts) as a series of closely related reductions to exp-concave surrogate

losses that are then handled by Exponential Weights. Finally, a benefit of our

EW interpretation is that it opens up the possibility of sampling from the EW

posterior distribution instead of playing the mean. As already observed by

Bubeck and Eldan, this recovers the best-known rate in Online Bandit Linear

Optimization.

25 Jul 2019

To perform a precise auscultation for the purposes of examination of respiratory system normally requires the presence of an experienced doctor. With most recent advances in machine learning and artificial intelligence, automatic detection of pathological breath phenomena in sounds recorded with stethoscope becomes a reality. But to perform a full auscultation in home environment by layman is another matter, especially if the patient is a child. In this paper we propose a unique application of Reinforcement Learning for training an agent that interactively guides the end user throughout the auscultation procedure. We show that \textit{intelligent} selection of auscultation points by the agent reduces time of the examination fourfold without significant decrease in diagnosis accuracy compared to exhaustive auscultation.

The paper presents a new method of depth estimation dedicated for

free-viewpoint television (FTV). The estimation is performed for segments and

thus their size can be used to control a trade-off between the quality of depth

maps and the processing time of their estimation. The proposed algorithm can

take as its input multiple arbitrarily positioned views which are

simultaneously used to produce multiple inter view consistent output depth

maps. The presented depth estimation method uses novel parallelization and

temporal consistency enhancement methods that significantly reduce the

processing time of depth estimation. An experimental assessment of the

proposals has been performed, based on the analysis of virtual view quality in

FTV. The results show that the proposed method provides an improvement of the

depth map quality over the state of-the-art method, simultaneously reducing the

complexity of depth estimation. The consistency of depth maps, which is crucial

for the quality of the synthesized video and thus the quality of experience of

navigating through a 3D scene, is also vastly improved.

Set-valued prediction is a well-known concept in multi-class classification.

When a classifier is uncertain about the class label for a test instance, it

can predict a set of classes instead of a single class. In this paper, we focus

on hierarchical multi-class classification problems, where valid sets

(typically) correspond to internal nodes of the hierarchy. We argue that this

is a very strong restriction, and we propose a relaxation by introducing the

notion of representation complexity for a predicted set. In combination with

probabilistic classifiers, this leads to a challenging inference problem for

which specific combinatorial optimization algorithms are needed. We propose

three methods and evaluate them on benchmark datasets: a na\"ive approach that

is based on matrix-vector multiplication, a reformulation as a knapsack problem

with conflict graph, and a recursive tree search method. Experimental results

demonstrate that the last method is computationally more efficient than the

other two approaches, due to a hierarchical factorization of the conditional

class distribution.

15 Dec 2023

Let RγBp,qs(Rd) be a subspace of the Besov space

Bp,qs(Rd) that consists of block-radial (multi-radial)

functions. We study an asymptotic behaviour of approximation numbers of compact

embeddings $id: R_\gamma B^{s_1}_{p_1,q_1}(\mathbb{R}^d) \rightarrow R_\gamma

B^{s_2}_{p_2,q_2}(\mathbb{R}^d)$. Moreover we find the sufficient and necessary

condition for nuclearity of the above embeddings. Analogous results are proved

for fractional Sobolev spaces RγHps(Rd).

Combinatorial optimization problems pose significant computational challenges across various fields, from logistics to cryptography. Traditional computational methods often struggle with their exponential complexity, motivating exploration into alternative paradigms such as quantum computing. In this paper, we investigate the application of photonic quantum computing to solve combinatorial optimization problems. Leveraging the principles of quantum mechanics, we demonstrate how photonic quantum computers can efficiently explore solution spaces and identify optimal solutions for a range of combinatorial problems. We provide an overview of quantum algorithms tailored for combinatorial optimization for different quantum architectures (boson sampling, quantum annealing and gate-based quantum computing). Additionally, we discuss the advantages and challenges of implementing those algorithms on photonic quantum hardware. Through experiments run on an 8-qumode photonic quantum device, as well as numerical simulations, we evaluate the performance of photonic quantum computers in solving representative combinatorial optimization problems, such as the Max-Cut problem and the Job Shop Scheduling Problem.

09 Oct 2025

Binary optimisation tasks are ubiquitous in areas ranging from logistics to cryptography. The exponential complexity of such problems means that the performance of traditional computational methods decreases rapidly with increasing problem sizes. Here, we propose a new algorithm for binary optimisation, the Bosonic Binary Solver, designed for near-term photonic quantum processors. This variational algorithm uses samples from a quantum optical circuit, which are post-processed using trainable classical bit-flip probabilities, to propose candidate solutions. A gradient-based training loop finds progressively better solutions until convergence. We perform ablation tests that validate the structure of the algorithm. We then evaluate its performance on an illustrative range of binary optimisation problems, using both simulators and real hardware, and perform comparisons to classical algorithms. We find that this algorithm produces high-quality solutions to these problems. As such, this algorithm is a promising method for leveraging the scalable nature of photonic quantum processors to solve large-scale real-world optimisation problems.

19 Apr 2024

Digital Behavior Change Interventions (DBCIs) are supporting development of new health behaviors. Evaluating their effectiveness is crucial for their improvement and understanding of success factors. However, comprehensive guidance for developers, particularly in small-scale studies with ethical constraints, is limited. Building on the CAPABLE project, this study aims to define effective engagement with DBCIs for supporting cancer patients in enhancing their quality of life. We identify metrics for measuring engagement, explore the interest of both patients and clinicians in DBCIs, and propose hypotheses for assessing the impact of DBCIs in such contexts. Our findings suggest that clinician prescriptions significantly increase sustained engagement with mobile DBCIs. In addition, while one weekly engagement with a DBCI is sufficient to maintain well-being, transitioning from extrinsic to intrinsic motivation may require a higher level of engagement.

Being able to rapidly respond to the changing scenes and traffic situations by generating feasible local paths is of pivotal importance for car autonomy. We propose to train a deep neural network (DNN) to plan feasible and nearly-optimal paths for kinematically constrained vehicles in small constant time. Our DNN model is trained using a novel weakly supervised approach and a gradient-based policy search. On real and simulated scenes and a large set of local planning problems, we demonstrate that our approach outperforms the existing planners with respect to the number of successfully completed tasks. While the path generation time is about 40 ms, the generated paths are smooth and comparable to those obtained from conventional path planners.

The paper presents a new approach to multiview video coding using Screen

Content Coding. It is assumed that for a time instant the frames corresponding

to all views are packed into a single frame, i.e. the frame-compatible approach

to multiview coding is applied. For such coding scenario, the paper

demonstrates that Screen Content Coding can be efficiently used for multiview

video coding. Two approaches are considered: the first using standard HEVC

Screen Content Coding, and the second using Advanced Screen Content Coding. The

latter is the original proposal of the authors that exploits quarter-pel motion

vectors and other nonstandard extensions of HEVC Screen Content Coding. The

experimental results demonstrate that multiview video coding even using

standard HEVC Screen Content Coding is much more efficient than simulcast HEVC

coding. The proposed Advanced Screen Content Coding provides virtually the same

coding efficiency as MV-HEVC, which is the state-of-the-art multiview video

compression technique. The authors suggest that Advanced Screen Content Coding

can be efficiently used within the new Versatile Video Coding (VVC) technology.

Nevertheless a reference multiview extension of VVC does not exist yet,

therefore, for VVC-based coding, the experimental comparisons are left for

future work.

There are no more papers matching your filters at the moment.