A comprehensive survey systematically reviews over 100 recent papers in Multimodal Retrieval-Augmented Generation (RAG), proposing an innovation-driven taxonomy that categorizes methods across retrieval, fusion, augmentation, generation, and training strategies, and outlines open challenges and future research directions.

Researchers from UCLA, University of Washington, Qatar Computing Research Institute, Google, and Stanford University developed X-Teaming, an adaptive multi-agent framework for multi-turn jailbreaking, achieving attack success rates up to 98.1% against robust LLMs. They also created XGuard-Train, a 30K-entry dataset that improved multi-turn attack resistance by 34.2% when used for fine-tuning.

The OPEN-RAG framework enhances the reasoning capabilities of open-source Large Language Models (LLMs) by integrating external knowledge via Retrieval-Augmented Generation (RAG). It leverages a parameter-efficient sparse Mixture of Experts (MoE) architecture, reflection tokens for context evaluation, and adaptive retrieval, demonstrating improved performance on single and multi-hop reasoning tasks.

LLMxCPG introduces a two-phase framework that integrates Code Property Graphs (CPGs) with Large Language Models (LLMs) to improve software vulnerability detection. The system reduces code size by up to 90.93% through CPG-guided slicing, achieving a 20% F1-score improvement over state-of-the-art baselines on unseen datasets while demonstrating robustness to code modifications.

Researchers at QCRI developed a model diffing methodology using crosscoders to mechanistically analyze changes in Large Language Models after fine-tuning. Their work reveals that techniques like Simplified Preference Optimization (SimPO) lead to targeted shifts in internal capabilities, enhancing areas such as safety and instruction following while diminishing others like hallucination detection and specialized technical skills.

MATHMIST introduces the first parallel multilingual benchmark dataset for evaluating Large Language Models on mathematical problem-solving across seven typologically diverse languages. It expands beyond arithmetic to include symbolic and proof-based reasoning, employing novel evaluation paradigms such as code-switched Chain-of-Thought and perturbed reasoning to reveal persistent performance deficiencies in low-resource settings and complex cross-lingual generalization challenges.

Xolver, a multi-agent reasoning framework, emulates human Olympiad teams by integrating collaborative agents, a dual-memory system, and iterative refinement. It achieves state-of-the-art results on complex math and coding benchmarks, demonstrating that even lightweight LLM backbones can surpass larger models when augmented with holistic experience learning.

This survey provides a structured and comprehensive overview of deep learning-based anomaly detection methods, categorizing techniques by data type, label availability, and training objective. It synthesizes findings on deep learning's enhanced performance for complex data and reviews the adoption and effectiveness of these methods across numerous real-world application domains.

Researchers from Purdue, QCRI, and MBZUAI developed a multi-stage semantic ranking system to automate the annotation of cyber threat behaviors to MITRE ATT&CK techniques. The system, which utilizes fine-tuned transformer models and a newly released human-annotated dataset, achieved a recall@10 of 92.07% and recall@3 of 81.02%, outperforming prior methods and significantly exceeding the performance of general large language models.
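
For readers unfamiliar with the recall@k figures quoted above, the following is a minimal sketch of one common definition: the fraction of gold-standard labels that appear among the top k ranked candidates, averaged over examples. The function names and the sample ranking are hypothetical, and the paper's exact evaluation protocol may differ from this definition.

```python
from typing import Sequence, Set


def recall_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the relevant labels found in the top-k of one ranking."""
    if not relevant:
        raise ValueError("relevant set must be non-empty")
    top_k = set(ranked[:k])
    return len(top_k & relevant) / len(relevant)


def mean_recall_at_k(
    rankings: Sequence[Sequence[str]], gold: Sequence[Set[str]], k: int
) -> float:
    """Average recall@k over a collection of (ranking, gold-label-set) pairs."""
    scores = [recall_at_k(r, g, k) for r, g in zip(rankings, gold)]
    return sum(scores) / len(scores)


# Illustrative data only: a made-up ranking of ATT&CK technique IDs
# for one threat-behavior sentence, with two gold labels.
ranked = ["T1059", "T1566", "T1027", "T1105"]
relevant = {"T1566", "T1105"}

recall_3 = recall_at_k(ranked, relevant, 3)  # one of two gold labels in top 3
recall_4 = recall_at_k(ranked, relevant, 4)  # both gold labels in top 4
```

Under this definition a larger k can only keep recall the same or raise it, which is consistent with the reported recall@10 exceeding recall@3.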

This survey paper from Sharif University of Technology, Iran University of Science and Technology, and Qatar Computing Research Institute presents a comprehensive overview of how generative artificial intelligence techniques are transforming character animation. It integrates advances across previously fragmented areas like facial animation, avatar creation, and motion synthesis, demonstrating how AI can automate and augment traditional animation processes, leading to more accessible and efficient content creation.

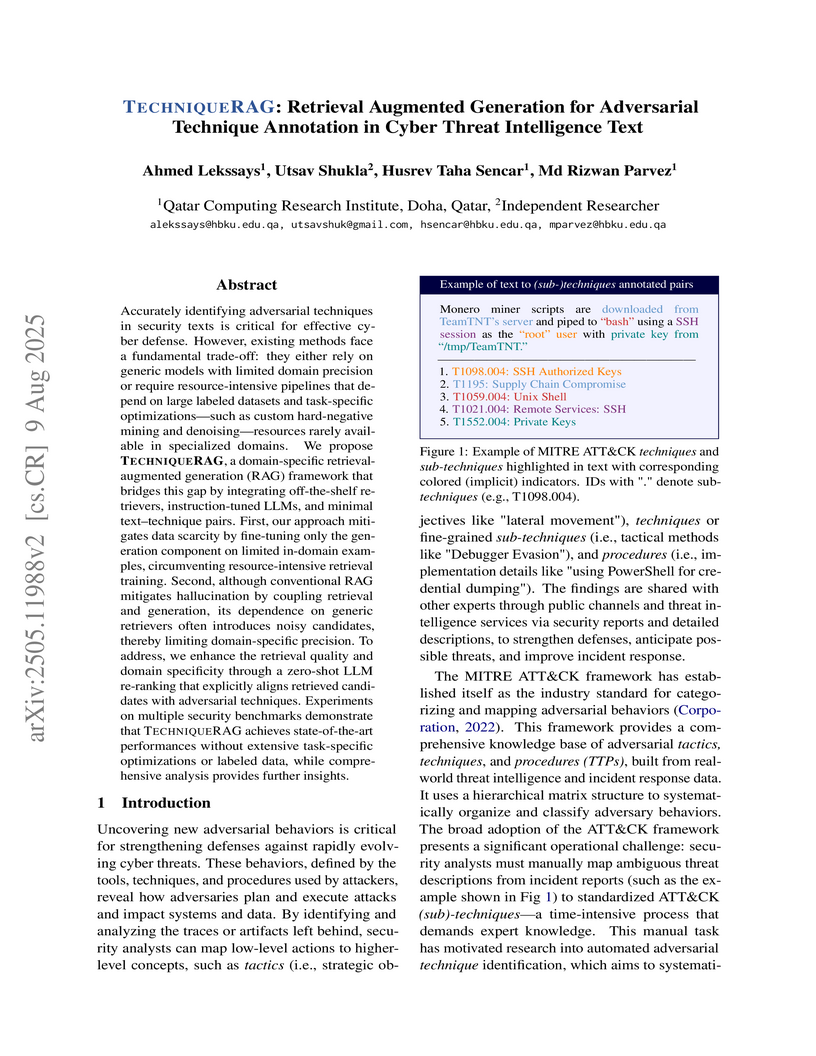

Researchers from the Qatar Computing Research Institute developed TECHNIQUERAG, a Retrieval-Augmented Generation framework that automates the annotation of adversarial techniques in cyber threat intelligence texts. This framework achieves state-of-the-art performance, with an F1 score of 91.09% on the Procedures dataset for technique prediction, by integrating off-the-shelf retrievers, a zero-shot LLM re-ranker, and a minimally fine-tuned generator to overcome data scarcity and enhance domain-specific precision.

The Qatar Computing Research Institute (QCRI) developed T-RAG, a system for secure, on-premise question answering over private organizational documents by integrating Retrieval-Augmented Generation (RAG) with a QLoRA-finetuned Llama-2 7B model and a unique tree-based context for hierarchical information. T-RAG achieved a 73.0% total correct rate in human evaluations, surpassing RAG (56.8%) and finetuned-only (54.1%) models, especially for hierarchical queries, and demonstrated improved robustness in "Needle in a Haystack" tests.

University of Washington

UCLA

Mohamed bin Zayed University of Artificial Intelligence

University of Virginia

Cornell University

University of Sydney

Purdue University

University of Pennsylvania
