SAP SE

CNRSFreie Universität Berlin

CNRSFreie Universität Berlin University of OxfordTU Dortmund UniversityGerman Research Center for Artificial Intelligence (DFKI)University of InnsbruckCollège de FranceMax Planck Institute for the Science of LightFriedrich-Alexander-Universität Erlangen-NürnbergInstitut Polytechnique de ParisUniversity of LatviaUniversity of TurkuSaarland UniversityFondazione Bruno KesslerTU Wien

University of OxfordTU Dortmund UniversityGerman Research Center for Artificial Intelligence (DFKI)University of InnsbruckCollège de FranceMax Planck Institute for the Science of LightFriedrich-Alexander-Universität Erlangen-NürnbergInstitut Polytechnique de ParisUniversity of LatviaUniversity of TurkuSaarland UniversityFondazione Bruno KesslerTU Wien Chalmers University of TechnologyForschungszentrum JülichUniversity of RegensburgUniversity of FlorenceUniversity of AugsburgUniversity of GothenburgLeiden Institute of PhysicsDonostia International Physics CenterJohannes Kepler University LinzFraunhofer Heinrich-Hertz-InstituteSAP SEFriedrich-Schiller-University JenaEuropean Centre for Theoretical Studies in Nuclear Physics and Related Areas (ECT*)EPITA Research LabLeiden Institute of Advanced Computer ScienceÖAWVienna Center for Quantum Science and TechnologyAtominstitutUniversity of Applied Sciences Zittau/GörlitzIQOQI ViennaFraunhofer IOSB-ASTUniversit PSLInria Paris–SaclayUniversit

Paris Diderot`Ecole PolytechniqueUniversity of Naples

“Federico II”INFN

Sezione di Firenze

Chalmers University of TechnologyForschungszentrum JülichUniversity of RegensburgUniversity of FlorenceUniversity of AugsburgUniversity of GothenburgLeiden Institute of PhysicsDonostia International Physics CenterJohannes Kepler University LinzFraunhofer Heinrich-Hertz-InstituteSAP SEFriedrich-Schiller-University JenaEuropean Centre for Theoretical Studies in Nuclear Physics and Related Areas (ECT*)EPITA Research LabLeiden Institute of Advanced Computer ScienceÖAWVienna Center for Quantum Science and TechnologyAtominstitutUniversity of Applied Sciences Zittau/GörlitzIQOQI ViennaFraunhofer IOSB-ASTUniversit PSLInria Paris–SaclayUniversit

Paris Diderot`Ecole PolytechniqueUniversity of Naples

“Federico II”INFN

Sezione di FirenzeA collaborative white paper coordinated by the Quantum Community Network comprehensively analyzes the current status and future perspectives of Quantum Artificial Intelligence, categorizing its potential into "Quantum for AI" and "AI for Quantum" applications. It proposes a strategic research and development agenda to bolster Europe's competitive position in this rapidly converging technological domain.

15 Apr 2025

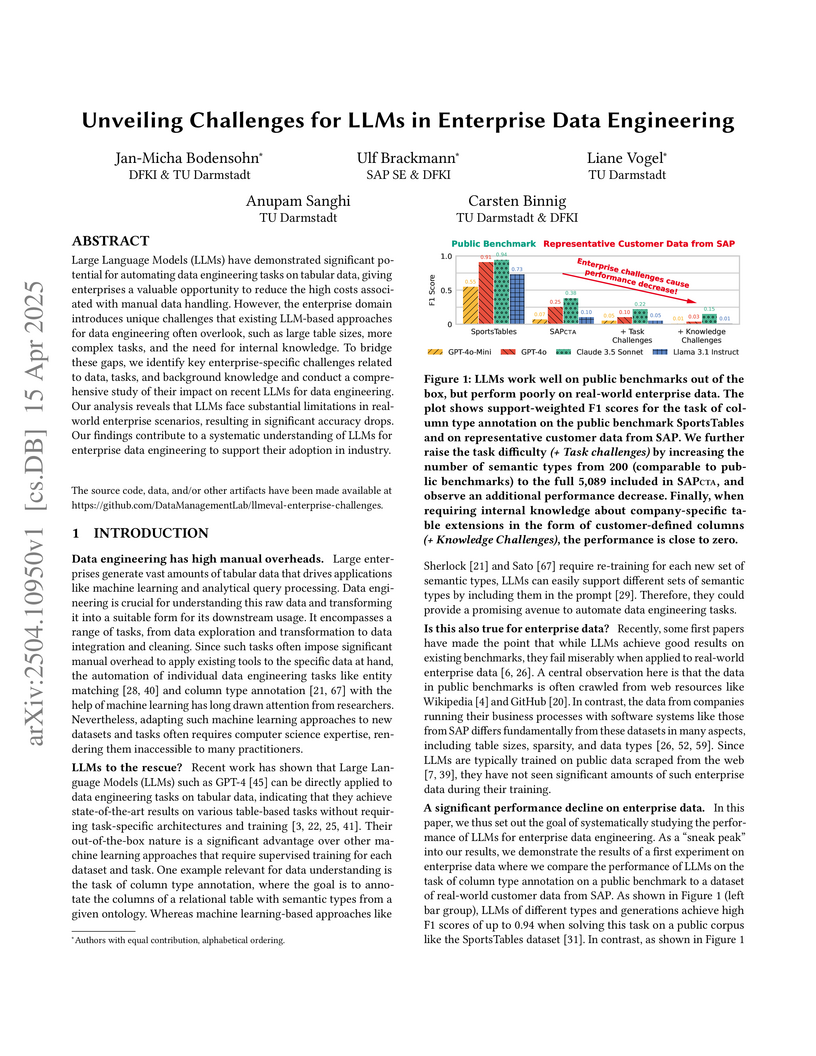

Large Language Models (LLMs) have demonstrated significant potential for

automating data engineering tasks on tabular data, giving enterprises a

valuable opportunity to reduce the high costs associated with manual data

handling. However, the enterprise domain introduces unique challenges that

existing LLM-based approaches for data engineering often overlook, such as

large table sizes, more complex tasks, and the need for internal knowledge. To

bridge these gaps, we identify key enterprise-specific challenges related to

data, tasks, and background knowledge and conduct a comprehensive study of

their impact on recent LLMs for data engineering. Our analysis reveals that

LLMs face substantial limitations in real-world enterprise scenarios, resulting

in significant accuracy drops. Our findings contribute to a systematic

understanding of LLMs for enterprise data engineering to support their adoption

in industry.

Researchers at TU Darmstadt and SAP SE introduced Monte Carlo and Reconstruction membership inference attacks, demonstrating superior performance against generative models and revealing Variational Autoencoders are significantly more susceptible to information leakage than Generative Adversarial Networks. The work also formalized and validated a highly effective set membership inference attack for auditing the unauthorized use of specific datasets.

Researchers from BASF Digital Solutions, Aqarios, and SAP conducted a comprehensive benchmarking study of quantum machine learning models against classical counterparts for univariate time-series forecasting. The study found that classical models generally outperformed current quantum models even under ideal noiseless simulation conditions, although some quantum models could surpass classical ARIMA on specific datasets.

03 Aug 2024

This work is a benchmark study for quantum-classical computing method with a real-world optimization problem from industry. The problem involves scheduling and balancing jobs on different machines, with a non-linear objective function. We first present the motivation and the problem description, along with different modeling techniques for classical and quantum computing. The modeling for classical solvers has been done as a mixed-integer convex program, while for the quantum-classical solver we model the problem as a binary quadratic program, which is best suited to the D-Wave Leap's Hybrid Solver. This ensures that all the solvers we use are fetched with dedicated and most suitable model(s). Henceforth, we carry out benchmarking and comparisons between classical and quantum-classical methods, on problem sizes ranging till approximately 150000 variables. We utilize an industry grade classical solver and compare its results with D-Wave Leap's Hybrid Solver. The results we obtain from D-Wave are highly competitive and sometimes offer speedups, compared to the classical solver.

Researchers from the Karlsruhe Institute Of Technology and collaborators at SAP SE and Robert Bosch GmbH developed Multi Time Scale State Space (MTS3) models, a hierarchical probabilistic framework for learning world dynamics. The model achieves accurate long-horizon predictions and robust uncertainty quantification across various complex simulated and real-world robotic environments by explicitly modeling dynamics at multiple temporal granularities.

Knowledge graph embedding approaches represent nodes and edges of graphs as mathematical vectors. Current approaches focus on embedding complete knowledge graphs, i.e. all nodes and edges. This leads to very high computational requirements on large graphs such as DBpedia or Wikidata. However, for most downstream application scenarios, only a small subset of concepts is of actual interest. In this paper, we present RDF2Vec Light, a lightweight embedding approach based on RDF2Vec which generates vectors for only a subset of entities. To that end, RDF2Vec Light only traverses and processes a subgraph of the knowledge graph. Our method allows the application of embeddings of very large knowledge graphs in scenarios where such embeddings were not possible before due to a significantly lower runtime and significantly reduced hardware requirements.

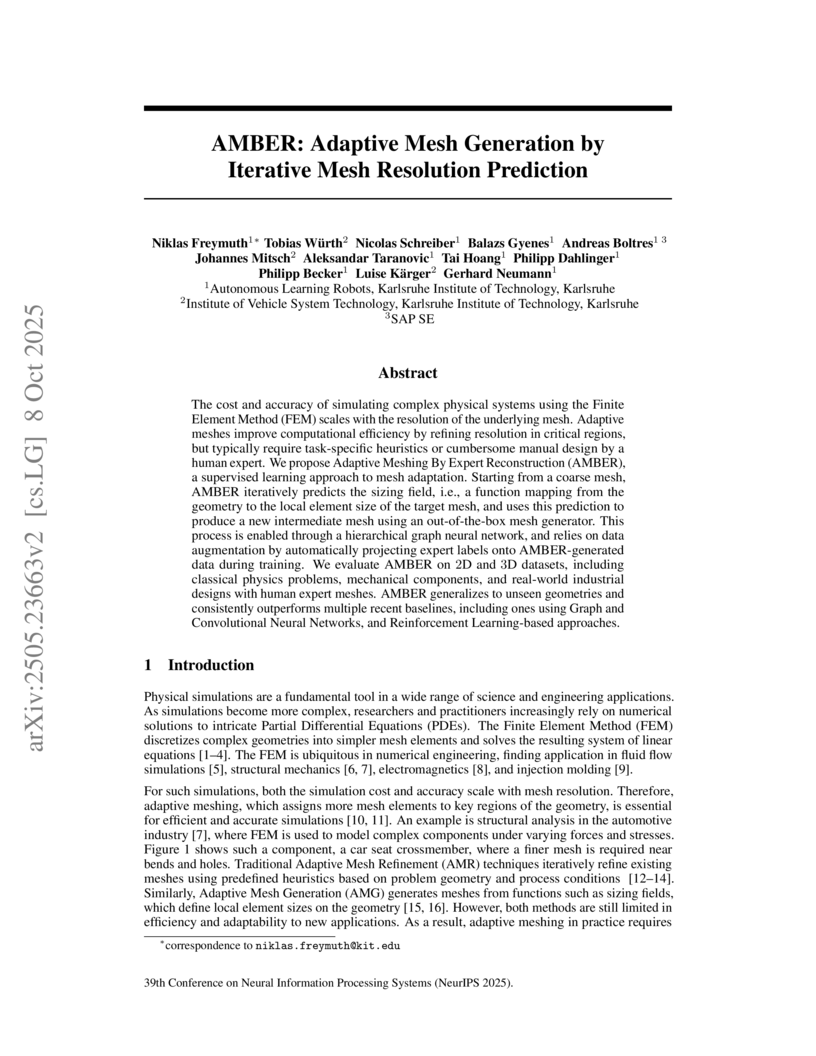

The cost and accuracy of simulating complex physical systems using the Finite Element Method (FEM) scales with the resolution of the underlying mesh. Adaptive meshes improve computational efficiency by refining resolution in critical regions, but typically require task-specific heuristics or cumbersome manual design by a human expert. We propose Adaptive Meshing By Expert Reconstruction (AMBER), a supervised learning approach to mesh adaptation. Starting from a coarse mesh, AMBER iteratively predicts the sizing field, i.e., a function mapping from the geometry to the local element size of the target mesh, and uses this prediction to produce a new intermediate mesh using an out-of-the-box mesh generator. This process is enabled through a hierarchical graph neural network, and relies on data augmentation by automatically projecting expert labels onto AMBER-generated data during training. We evaluate AMBER on 2D and 3D datasets, including classical physics problems, mechanical components, and real-world industrial designs with human expert meshes. AMBER generalizes to unseen geometries and consistently outperforms multiple recent baselines, including ones using Graph and Convolutional Neural Networks, and Reinforcement Learning-based approaches.

A quantum autoencoder (QAE) developed by Fraunhofer AISEC and SAP SE detects anomalies in multivariate time series data from enterprise systems, achieving performance comparable to medium-sized classical autoencoders with significantly fewer trainable parameters.

Quality Estimation (QE) models for Neural Machine Translation (NMT) predict the quality of the hypothesis without having access to the reference. An emerging research direction in NMT involves the use of QE models, which have demonstrated high correlations with human judgment and can enhance translations through Quality-Aware Decoding. Although several approaches have been proposed based on sampling multiple candidate translations and picking the best candidate, none have integrated these models directly into the decoding process. In this paper, we address this by proposing a novel token-level QE model capable of reliably scoring partial translations. We build a uni-directional QE model for this, as decoder models are inherently trained and efficient on partial sequences. We then present a decoding strategy that integrates the QE model for Quality-Aware decoding and demonstrate that the translation quality improves when compared to the N-best list re-ranking with state-of-the-art QE models (up to 1.39 XCOMET-XXL ↑). Finally, we show that our approach provides significant benefits in document translation tasks, where the quality of N-best lists is typically suboptimal. Code can be found at this https URL\this http URL

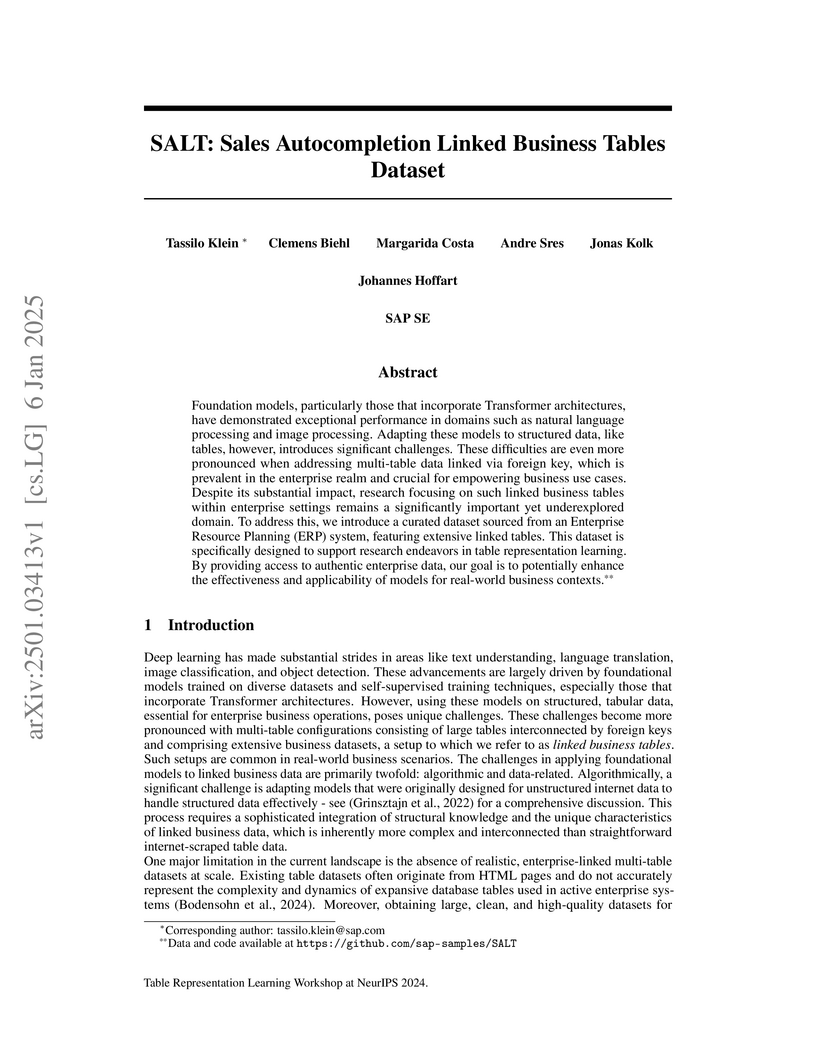

Foundation models, particularly those that incorporate Transformer architectures, have demonstrated exceptional performance in domains such as natural language processing and image processing. Adapting these models to structured data, like tables, however, introduces significant challenges. These difficulties are even more pronounced when addressing multi-table data linked via foreign key, which is prevalent in the enterprise realm and crucial for empowering business use cases. Despite its substantial impact, research focusing on such linked business tables within enterprise settings remains a significantly important yet underexplored domain. To address this, we introduce a curated dataset sourced from an Enterprise Resource Planning (ERP) system, featuring extensive linked tables. This dataset is specifically designed to support research endeavors in table representation learning. By providing access to authentic enterprise data, our goal is to potentially enhance the effectiveness and applicability of models for real-world business contexts.

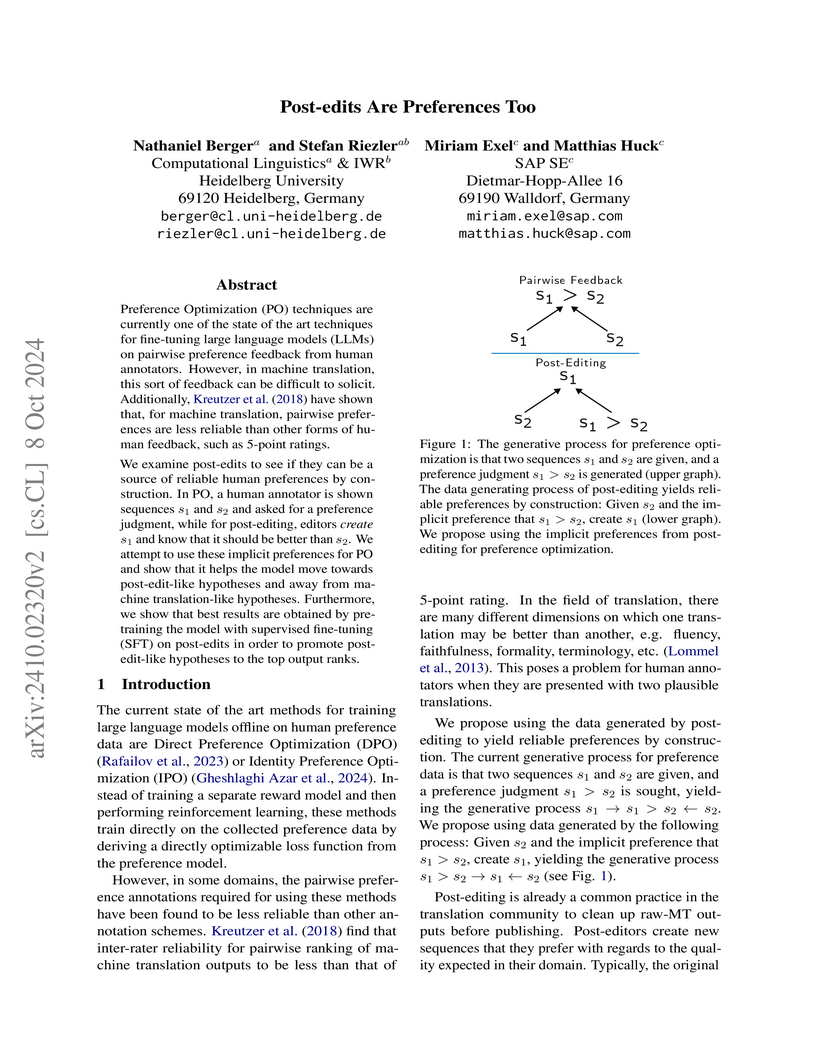

Preference Optimization (PO) techniques are currently one of the state of the

art techniques for fine-tuning large language models (LLMs) on pairwise

preference feedback from human annotators. However, in machine translation,

this sort of feedback can be difficult to solicit. Additionally, Kreutzer et

al. (2018) have shown that, for machine translation, pairwise preferences are

less reliable than other forms of human feedback, such as 5-point ratings.

We examine post-edits to see if they can be a source of reliable human

preferences by construction. In PO, a human annotator is shown sequences s1

and s2 and asked for a preference judgment, %s1>s2; while for

post-editing, editors create s1 and know that it should be better than

s2. We attempt to use these implicit preferences for PO and show that it

helps the model move towards post-edit-like hypotheses and away from machine

translation-like hypotheses. Furthermore, we show that best results are

obtained by pre-training the model with supervised fine-tuning (SFT) on

post-edits in order to promote post-edit-like hypotheses to the top output

ranks.

Finding efficient routes for data packets is an essential task in computer

networking. The optimal routes depend greatly on the current network topology,

state and traffic demand, and they can change within milliseconds.

Reinforcement Learning can help to learn network representations that provide

routing decisions for possibly novel situations. So far, this has commonly been

done using fluid network models. We investigate their suitability for

millisecond-scale adaptations with a range of traffic mixes and find that

packet-level network models are necessary to capture true dynamics, in

particular in the presence of TCP traffic. To this end, we present

PackeRL, the first packet-level Reinforcement Learning environment

for routing in generic network topologies. Our experiments confirm that

learning-based strategies that have been trained in fluid environments do not

generalize well to this more realistic, but more challenging setup. Hence, we

also introduce two new algorithms for learning sub-second Routing Optimization.

We present M-Slim, a dynamic shortest-path algorithm that excels at

high traffic volumes but is computationally hard to scale to large network

topologies, and FieldLines, a novel next-hop policy design that

re-optimizes routing for any network topology within milliseconds without

requiring any re-training. Both algorithms outperform current learning-based

approaches as well as commonly used static baseline protocols in scenarios with

high-traffic volumes. All findings are backed by extensive experiments in

realistic network conditions in our fast and versatile training and evaluation

framework.

06 Dec 2017

We report on a community effort between industry and academia to shape the

future of graph query languages. We argue that existing graph database

management systems should consider supporting a query language with two key

characteristics. First, it should be composable, meaning, that graphs are the

input and the output of queries. Second, the graph query language should treat

paths as first-class citizens. Our result is G-CORE, a powerful graph query

language design that fulfills these goals, and strikes a careful balance

between path query expressivity and evaluation complexity.

Exploiting the fact that samples drawn from a quantum annealer inherently follow a Boltzmann-like distribution, annealing-based Quantum Boltzmann Machines (QBMs) have gained increasing popularity in the quantum research community. While they harbor great promises for quantum speed-up, their usage currently stays a costly endeavor, as large amounts of QPU time are required to train them. This limits their applicability in the NISQ era. Following the idea of Noè et al. (2024), who tried to alleviate this cost by incorporating parallel quantum annealing into their unsupervised training of QBMs, this paper presents an improved version of parallel quantum annealing that we employ to train QBMs in a supervised setting. Saving qubits to encode the inputs, the latter setting allows us to test our approach on medical images from the MedMNIST data set (Yang et al., 2023), thereby moving closer to real-world applicability of the technology. Our experiments show that QBMs using our approach already achieve reasonable results, comparable to those of similarly-sized Convolutional Neural Networks (CNNs), with markedly smaller numbers of epochs than these classical models. Our parallel annealing technique leads to a speed-up of almost 70 % compared to regular annealing-based BM executions.

29 Jul 2025

This study evaluates the performance of a quantum-classical metaheuristic and a traditional classical mathematical programming solver, applied to two mathematical optimization models for an industry-relevant scheduling problem with autonomous guided vehicles (AGVs). The two models are: (1) a time-indexed mixed-integer linear program, and (2) a novel binary optimization problem with linear and quadratic constraints and a linear objective. Our experiments indicate that optimization methods are very susceptible to modeling techniques and different solvers require dedicated methods. We show in this work that quantum-classical metaheuristics can benefit from a new way of modeling mathematical optimization problems. Additionally, we present a detailed performance comparison of the two solution methods for each optimization model.

Allocating resources in a distributed environment is a fundamental challenge. In this paper, we analyze the scheduling and placement of virtual machines (VMs) in the cloud platform of SAP, the world's largest enterprise resource planning software vendor. Based on data from roughly 1,800 hypervisors and 48,000 VMs within a 30-day observation period, we highlight potential improvements for workload management. The data was measured through observability tooling that tracks resource usage and performance metrics across the entire infrastructure. In contrast to existing datasets, ours uniquely offers fine-grained time-series telemetry data of fully virtualized enterprise-level workloads from both long-running and memory-intensive SAP S/4HANA and diverse, general-purpose applications. Our key findings include several suboptimal scheduling situations, such as CPU resource contention exceeding 40%, CPU ready times of up to 220 seconds, significantly imbalanced compute hosts with a maximum CPU~utilization on intra-building block hosts of up to 99%, and overprovisioned CPU and memory resources resulting into over 80% of VMs using less than 70% of the provided resources. Bolstered by these findings, we derive requirements for the design and implementation of novel placement and scheduling algorithms and provide guidance to optimize resource allocations. We make the full dataset used in this study publicly available to enable data-driven evaluations of scheduling approaches for large-scale cloud infrastructures in future research.

Self-supervised learning on tabular data seeks to apply advances from natural language and image domains to the diverse domain of tables. However, current techniques often struggle with integrating multi-domain data and require data cleaning or specific structural requirements, limiting the scalability of pre-training datasets. We introduce PORTAL (Pretraining One-Row-at-a-Time for All tabLes), a framework that handles various data modalities without the need for cleaning or preprocessing. This simple yet powerful approach can be effectively pre-trained on online-collected datasets and fine-tuned to match state-of-the-art methods on complex classification and regression tasks. This work offers a practical advancement in self-supervised learning for large-scale tabular data.

21 Nov 2018

Many questions cannot be answered simply; their answers must include numerous nuanced details and additional context. Complex Answer Retrieval (CAR) is the retrieval of answers to such questions. In their simplest form, these questions are constructed from a topic entity (e.g., `cheese') and a facet (e.g., `health effects'). While topic matching has been thoroughly explored, we observe that some facets use general language that is unlikely to appear verbatim in answers. We call these low-utility facets. In this work, we present an approach to CAR that identifies and addresses low-utility facets. We propose two estimators of facet utility. These include exploiting the hierarchical structure of CAR queries and using facet frequency information from training data. To improve the retrieval performance on low-utility headings, we also include entity similarity scores using knowledge graph embeddings. We apply our approaches to a leading neural ranking technique, and evaluate using the TREC CAR dataset. We find that our approach perform significantly better than the unmodified neural ranker and other leading CAR techniques. We also provide a detailed analysis of our results, and verify that low-utility facets are indeed more difficult to match, and that our approach improves the performance for these difficult queries.

Face images are subject to many different factors of variation, especially in

unconstrained in-the-wild scenarios. For most tasks involving such images, e.g.

expression recognition from video streams, having enough labeled data is

prohibitively expensive. One common strategy to tackle such a problem is to

learn disentangled representations for the different factors of variation of

the observed data using adversarial learning. In this paper, we use a

formulation of the adversarial loss to learn disentangled representations for

face images. The used model facilitates learning on single-task datasets and

improves the state-of-the-art in expression recognition with an accuracy

of60.53%on the AffectNetdataset, without using any additional data.

There are no more papers matching your filters at the moment.