Siemens Corporate Technology

To achieve a successful grasp, gripper attributes such as its geometry and kinematics play a role as important as the object geometry. The majority of previous work has focused on developing grasp methods that generalize over novel object geometry but are specific to a certain robot hand. We propose UniGrasp, an efficient data-driven grasp synthesis method that considers both the object geometry and gripper attributes as inputs. UniGrasp is based on a novel deep neural network architecture that selects sets of contact points from the input point cloud of the object. The proposed model is trained on a large dataset to produce contact points that are in force closure and reachable by the robot hand. By using contact points as output, we can transfer between a diverse set of multifingered robotic hands. Our model produces over 90% valid contact points in Top10 predictions in simulation and more than 90% successful grasps in real world experiments for various known two-fingered and three-fingered grippers. Our model also achieves 93%, 83% and 90% successful grasps in real world experiments for an unseen two-fingered gripper and two unseen multi-fingered anthropomorphic robotic hands.

PD-MORL develops an algorithm that trains a single universal network to generate Pareto-optimal policies for any given preference vector at run-time. It yields broader and denser Pareto fronts with an order of magnitude fewer parameters across discrete and continuous control tasks compared to multi-policy baselines, addressing the challenge of dynamic adaptation and scalability in multi-objective reinforcement learning.

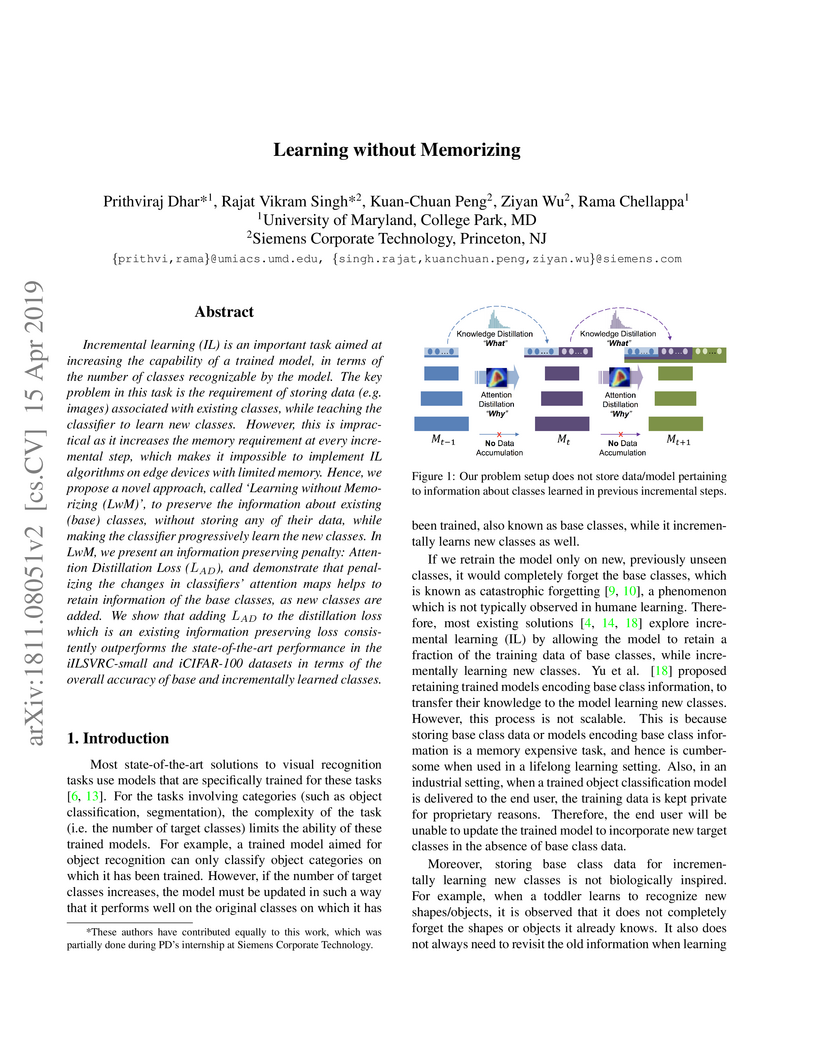

Incremental learning (IL) is an important task aimed at increasing the capability of a trained model, in terms of the number of classes recognizable by the model. The key problem in this task is the requirement of storing data (e.g. images) associated with existing classes, while teaching the classifier to learn new classes. However, this is impractical as it increases the memory requirement at every incremental step, which makes it impossible to implement IL algorithms on edge devices with limited memory. Hence, we propose a novel approach, called `Learning without Memorizing (LwM)', to preserve the information about existing (base) classes, without storing any of their data, while making the classifier progressively learn the new classes. In LwM, we present an information preserving penalty: Attention Distillation Loss (LAD), and demonstrate that penalizing the changes in classifiers' attention maps helps to retain information of the base classes, as new classes are added. We show that adding LAD to the distillation loss which is an existing information preserving loss consistently outperforms the state-of-the-art performance in the iILSVRC-small and iCIFAR-100 datasets in terms of the overall accuracy of base and incrementally learned classes.

10 May 2019

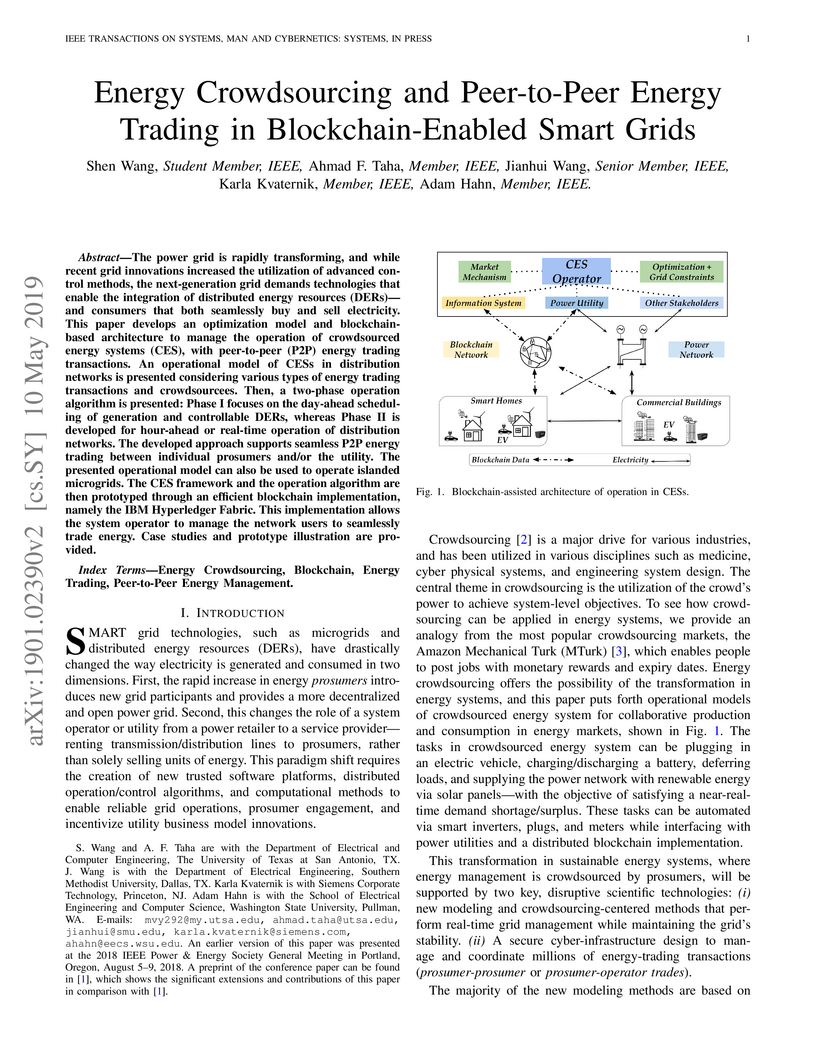

The power grid is rapidly transforming, and while recent grid innovations

increased the utilization of advanced control methods, the next-generation grid

demands technologies that enable the integration of distributed energy

resources (DERs)---and consumers that both seamlessly buy and sell electricity.

This paper develops an optimization model and blockchain-based architecture to

manage the operation of crowdsourced energy systems (CES), with peer-to-peer

(P2P) energy trading transactions. An operational model of CESs in distribution

networks is presented considering various types of energy trading transactions

and crowdsourcees. Then, a two-phase operation algorithm is presented: Phase I

focuses on the day-ahead scheduling of generation and controllable DERs,

whereas Phase II is developed for hour-ahead or real-time operation of

distribution networks. The developed approach supports seamless P2P energy

trading between individual prosumers and/or the utility. The presented

operational model can also be used to operate islanded microgrids. The CES

framework and the operation algorithm are then prototyped through an efficient

blockchain implementation, namely the IBM Hyperledger Fabric. This

implementation allows the system operator to manage the network users to

seamlessly trade energy. Case studies and prototype illustration are provided.

In this paper, we introduce Symplectic ODE-Net (SymODEN), a deep learning framework which can infer the dynamics of a physical system, given by an ordinary differential equation (ODE), from observed state trajectories. To achieve better generalization with fewer training samples, SymODEN incorporates appropriate inductive bias by designing the associated computation graph in a physics-informed manner. In particular, we enforce Hamiltonian dynamics with control to learn the underlying dynamics in a transparent way, which can then be leveraged to draw insight about relevant physical aspects of the system, such as mass and potential energy. In addition, we propose a parametrization which can enforce this Hamiltonian formalism even when the generalized coordinate data is embedded in a high-dimensional space or we can only access velocity data instead of generalized momentum. This framework, by offering interpretable, physically-consistent models for physical systems, opens up new possibilities for synthesizing model-based control strategies.

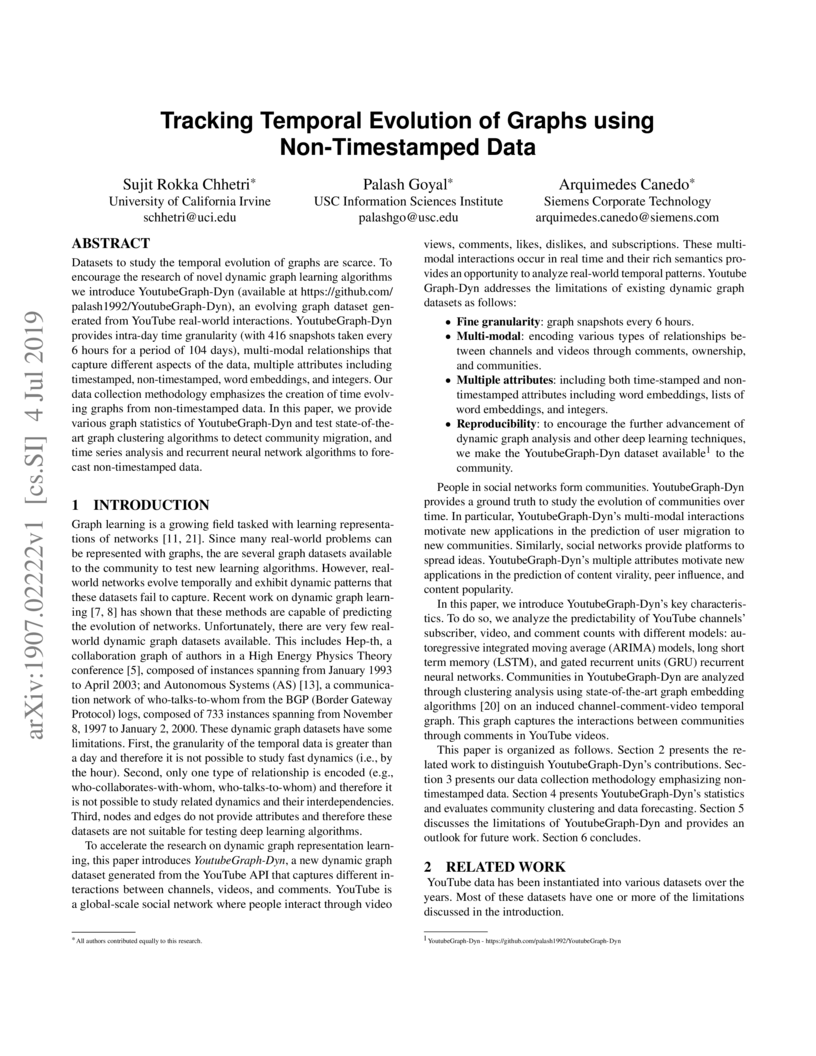

Datasets to study the temporal evolution of graphs are scarce. To encourage the research of novel dynamic graph learning algorithms we introduce YoutubeGraph-Dyn (available at this https URL), an evolving graph dataset generated from YouTube real-world interactions. YoutubeGraph-Dyn provides intra-day time granularity (with 416 snapshots taken every 6 hours for a period of 104 days), multi-modal relationships that capture different aspects of the data, multiple attributes including timestamped, non-timestamped, word embeddings, and integers. Our data collection methodology emphasizes the creation of time evolving graphs from non-timestamped data. In this paper, we provide various graph statistics of YoutubeGraph-Dyn and test state-of-the-art graph clustering algorithms to detect community migration, and time series analysis and recurrent neural network algorithms to forecast non-timestamped data.

Deep generative models are proven to be a useful tool for automatic design

synthesis and design space exploration. When applied in engineering design,

existing generative models face three challenges: 1) generated designs lack

diversity and do not cover all areas of the design space, 2) it is difficult to

explicitly improve the overall performance or quality of generated designs, and

3) existing models generally do not generate novel designs, outside the domain

of the training data. In this paper, we simultaneously address these challenges

by proposing a new Determinantal Point Processes based loss function for

probabilistic modeling of diversity and quality. With this new loss function,

we develop a variant of the Generative Adversarial Network, named "Performance

Augmented Diverse Generative Adversarial Network" or PaDGAN, which can generate

novel high-quality designs with good coverage of the design space. Using three

synthetic examples and one real-world airfoil design example, we demonstrate

that PaDGAN can generate diverse and high-quality designs. In comparison to a

vanilla Generative Adversarial Network, on average, it generates samples with a

28% higher mean quality score with larger diversity and without the mode

collapse issue. Unlike typical generative models that usually generate new

designs by interpolating within the boundary of training data, we show that

PaDGAN expands the design space boundary outside the training data towards

high-quality regions. The proposed method is broadly applicable to many tasks

including design space exploration, design optimization, and creative solution

recommendation.

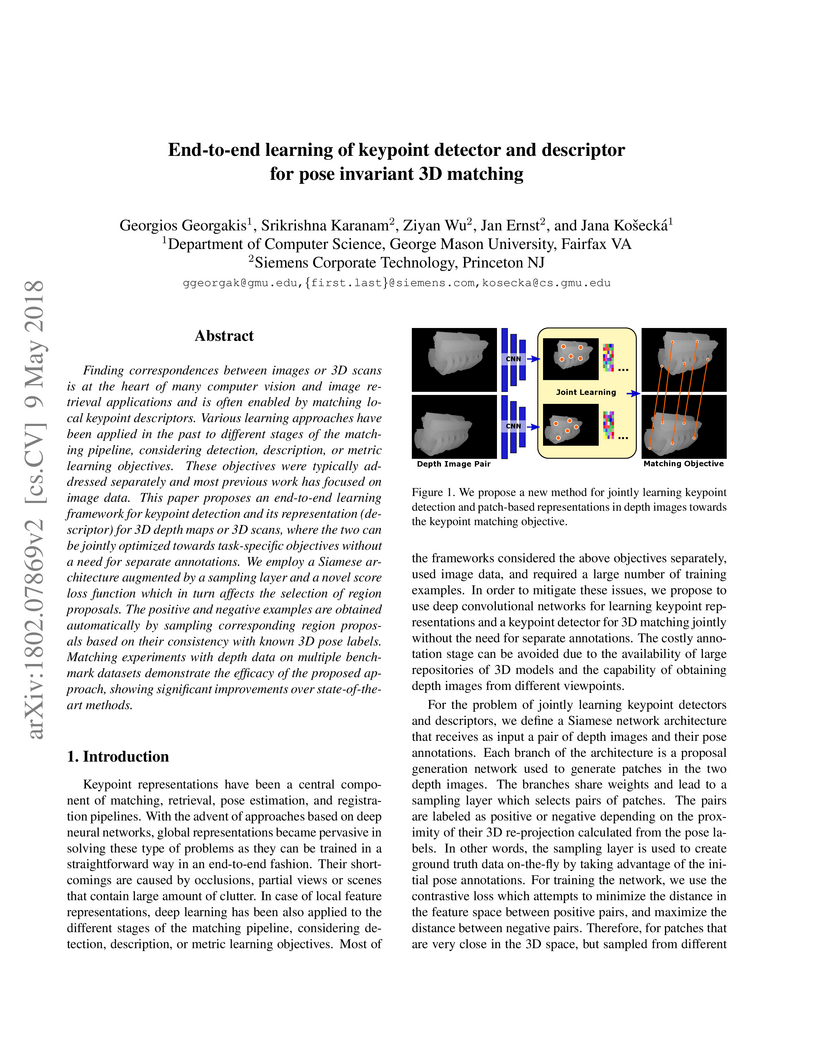

Finding correspondences between images or 3D scans is at the heart of many

computer vision and image retrieval applications and is often enabled by

matching local keypoint descriptors. Various learning approaches have been

applied in the past to different stages of the matching pipeline, considering

detector, descriptor, or metric learning objectives. These objectives were

typically addressed separately and most previous work has focused on image

data. This paper proposes an end-to-end learning framework for keypoint

detection and its representation (descriptor) for 3D depth maps or 3D scans,

where the two can be jointly optimized towards task-specific objectives without

a need for separate annotations. We employ a Siamese architecture augmented by

a sampling layer and a novel score loss function which in turn affects the

selection of region proposals. The positive and negative examples are obtained

automatically by sampling corresponding region proposals based on their

consistency with known 3D pose labels. Matching experiments with depth data on

multiple benchmark datasets demonstrate the efficacy of the proposed approach,

showing significant improvements over state-of-the-art methods.

With vast amounts of video content being uploaded to the Internet every minute, video summarization becomes critical for efficient browsing, searching, and indexing of visual content. Nonetheless, the spread of social and egocentric cameras creates an abundance of sparse scenarios captured by several devices, and ultimately required to be jointly summarized. In this paper, we discuss the problem of summarizing videos recorded independently by several dynamic cameras that intermittently share the field of view. We present a robust framework that (a) identifies a diverse set of important events among moving cameras that often are not capturing the same scene, and (b) selects the most representative view(s) at each event to be included in a universal summary. Due to the lack of an applicable alternative, we collected a new multi-view egocentric dataset, Multi-Ego. Our dataset is recorded simultaneously by three cameras, covering a wide variety of real-life scenarios. The footage is annotated by multiple individuals under various summarization configurations, with a consensus analysis ensuring a reliable ground truth. We conduct extensive experiments on the compiled dataset in addition to three other standard benchmarks that show the robustness and the advantage of our approach in both supervised and unsupervised settings. Additionally, we show that our approach learns collectively from data of varied number-of-views and orthogonal to other summarization methods, deeming it scalable and generic.

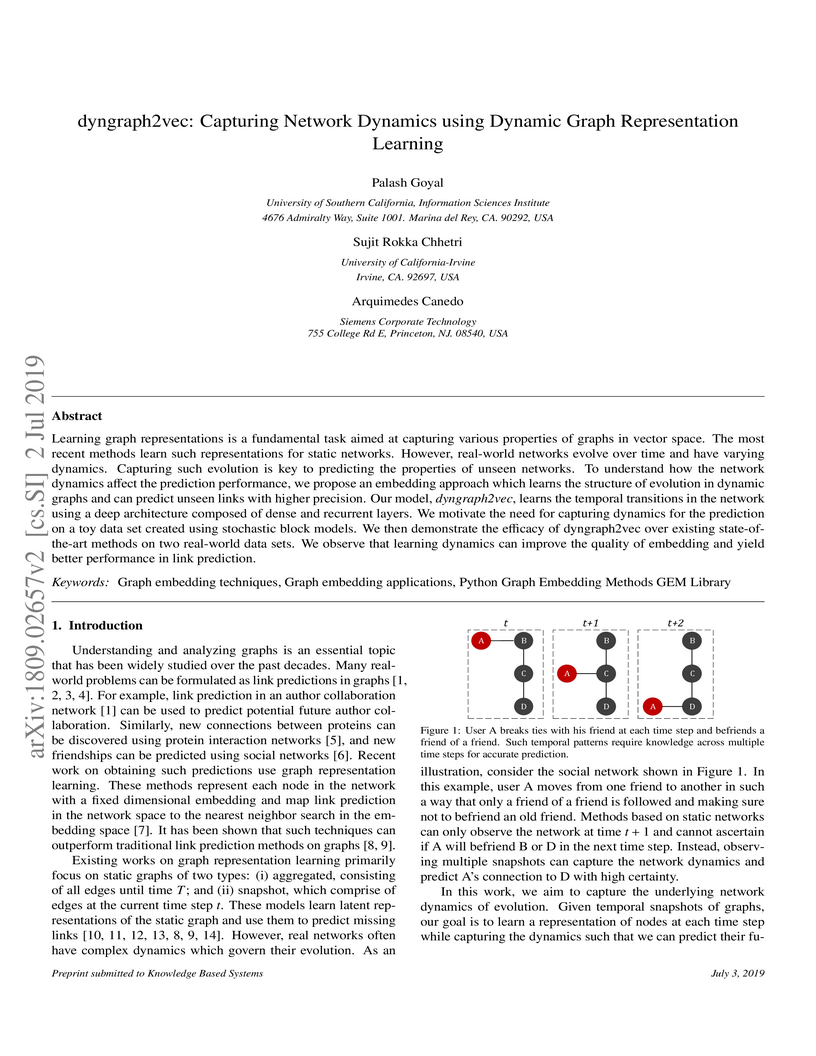

Learning graph representations is a fundamental task aimed at capturing various properties of graphs in vector space. The most recent methods learn such representations for static networks. However, real world networks evolve over time and have varying dynamics. Capturing such evolution is key to predicting the properties of unseen networks. To understand how the network dynamics affect the prediction performance, we propose an embedding approach which learns the structure of evolution in dynamic graphs and can predict unseen links with higher precision. Our model, dyngraph2vec, learns the temporal transitions in the network using a deep architecture composed of dense and recurrent layers. We motivate the need of capturing dynamics for prediction on a toy data set created using stochastic block models. We then demonstrate the efficacy of dyngraph2vec over existing state-of-the-art methods on two real world data sets. We observe that learning dynamics can improve the quality of embedding and yield better performance in link prediction.

Engineering design tasks often require synthesizing new designs that meet

desired performance requirements. The conventional design process, which

requires iterative optimization and performance evaluation, is slow and

dependent on initial designs. Past work has used conditional generative

adversarial networks (cGANs) to enable direct design synthesis for given target

performances. However, most existing cGANs are restricted to categorical

conditions. Recent work on Continuous conditional GAN (CcGAN) tries to address

this problem, but still faces two challenges: 1) it performs poorly on

non-uniform performance distributions, and 2) the generated designs may not

cover the entire design space. We propose a new model, named Performance

Conditioned Diverse Generative Adversarial Network (PcDGAN), which introduces a

singular vicinal loss combined with a Determinantal Point Processes (DPP) based

loss function to enhance diversity. PcDGAN uses a new self-reinforcing score

called the Lambert Log Exponential Transition Score (LLETS) for improved

conditioning. Experiments on synthetic problems and a real-world airfoil design

problem demonstrate that PcDGAN outperforms state-of-the-art GAN models and

improves the conditioning likelihood by 69% in an airfoil generation task and

up to 78% in synthetic conditional generation tasks and achieves greater design

space coverage. The proposed method enables efficient design synthesis and

design space exploration with applications ranging from CAD model generation to

metamaterial selection.

Existing approaches for open-domain question answering (QA) are typically designed for questions that require either single-hop or multi-hop reasoning, which make strong assumptions of the complexity of questions to be answered. Also, multi-step document retrieval often incurs higher number of relevant but non-supporting documents, which dampens the downstream noise-sensitive reader module for answer extraction. To address these challenges, we propose a unified QA framework to answer any-hop open-domain questions, which iteratively retrieves, reranks and filters documents, and adaptively determines when to stop the retrieval process. To improve the retrieval accuracy, we propose a graph-based reranking model that perform multi-document interaction as the core of our iterative reranking framework. Our method consistently achieves performance comparable to or better than the state-of-the-art on both single-hop and multi-hop open-domain QA datasets, including Natural Questions Open, SQuAD Open, and HotpotQA.

We propose a novel method for automatic reasoning on knowledge graphs based on debate dynamics. The main idea is to frame the task of triple classification as a debate game between two reinforcement learning agents which extract arguments -- paths in the knowledge graph -- with the goal to promote the fact being true (thesis) or the fact being false (antithesis), respectively. Based on these arguments, a binary classifier, called the judge, decides whether the fact is true or false. The two agents can be considered as sparse, adversarial feature generators that present interpretable evidence for either the thesis or the antithesis. In contrast to other black-box methods, the arguments allow users to get an understanding of the decision of the judge. Since the focus of this work is to create an explainable method that maintains a competitive predictive accuracy, we benchmark our method on the triple classification and link prediction task. Thereby, we find that our method outperforms several baselines on the benchmark datasets FB15k-237, WN18RR, and Hetionet. We also conduct a survey and find that the extracted arguments are informative for users.

Recent studies on open-domain question answering have achieved prominent

performance improvement using pre-trained language models such as BERT.

State-of-the-art approaches typically follow the "retrieve and read" pipeline

and employ BERT-based reranker to filter retrieved documents before feeding

them into the reader module. The BERT retriever takes as input the

concatenation of question and each retrieved document. Despite the success of

these approaches in terms of QA accuracy, due to the concatenation, they can

barely handle high-throughput of incoming questions each with a large

collection of retrieved documents. To address the efficiency problem, we

propose DC-BERT, a decoupled contextual encoding framework that has dual BERT

models: an online BERT which encodes the question only once, and an offline

BERT which pre-encodes all the documents and caches their encodings. On SQuAD

Open and Natural Questions Open datasets, DC-BERT achieves 10x speedup on

document retrieval, while retaining most (about 98%) of the QA performance

compared to state-of-the-art approaches for open-domain question answering.

We propose a new method that uses deep learning techniques to solve the inverse problems. The inverse problem is cast in the form of learning an end-to-end mapping from observed data to the ground-truth. Inspired by the splitting strategy widely used in regularized iterative algorithm to tackle inverse problems, the mapping is decomposed into two networks, with one handling the inversion of the physical forward model associated with the data term and one handling the denoising of the output from the former network, i.e., the inverted version, associated with the prior/regularization term. The two networks are trained jointly to learn the end-to-end mapping, getting rid of a two-step training. The training is annealing as the intermediate variable between these two networks bridges the gap between the input (the degraded version of output) and output and progressively approaches to the ground-truth. The proposed network, referred to as InverseNet, is flexible in the sense that most of the existing end-to-end network structure can be leveraged in the first network and most of the existing denoising network structure can be used in the second one. Extensive experiments on both synthetic data and real datasets on the tasks, motion deblurring, super-resolution, and colorization, demonstrate the efficiency and accuracy of the proposed method compared with other image processing algorithms.

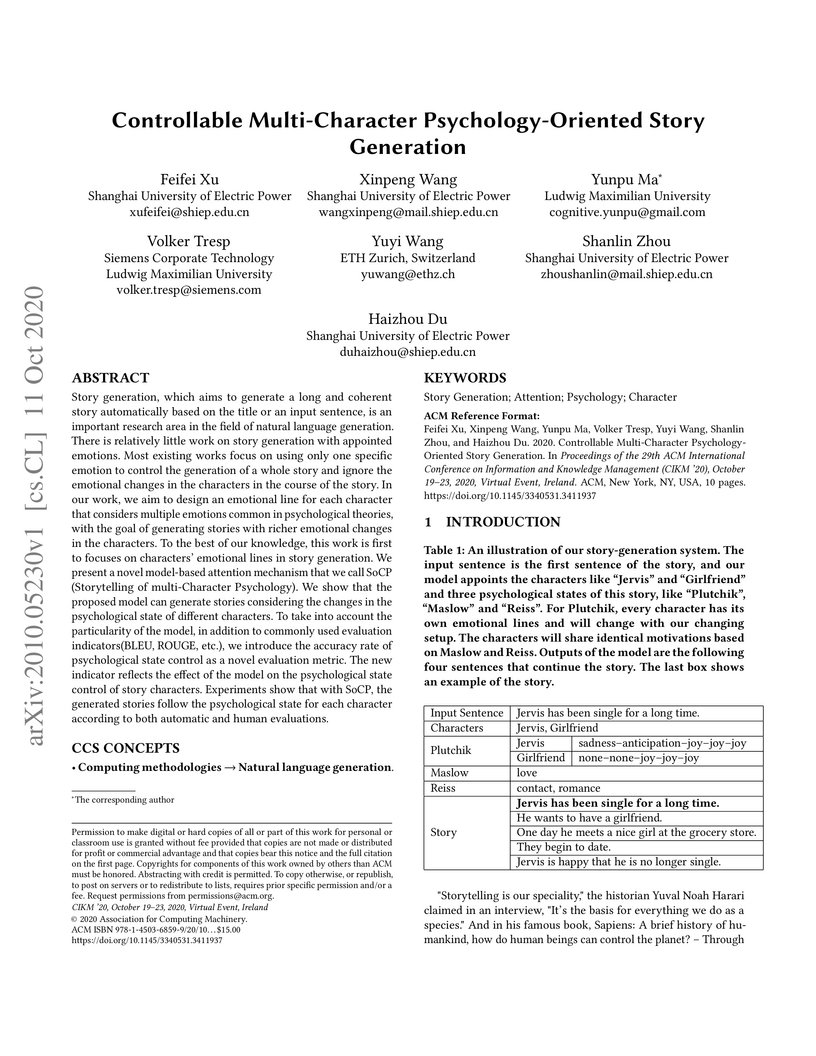

Story generation, which aims to generate a long and coherent story

automatically based on the title or an input sentence, is an important research

area in the field of natural language generation. There is relatively little

work on story generation with appointed emotions. Most existing works focus on

using only one specific emotion to control the generation of a whole story and

ignore the emotional changes in the characters in the course of the story. In

our work, we aim to design an emotional line for each character that considers

multiple emotions common in psychological theories, with the goal of generating

stories with richer emotional changes in the characters. To the best of our

knowledge, this work is first to focuses on characters' emotional lines in

story generation. We present a novel model-based attention mechanism that we

call SoCP (Storytelling of multi-Character Psychology). We show that the

proposed model can generate stories considering the changes in the

psychological state of different characters. To take into account the

particularity of the model, in addition to commonly used evaluation

indicators(BLEU, ROUGE, etc.), we introduce the accuracy rate of psychological

state control as a novel evaluation metric. The new indicator reflects the

effect of the model on the psychological state control of story characters.

Experiments show that with SoCP, the generated stories follow the psychological

state for each character according to both automatic and human evaluations.

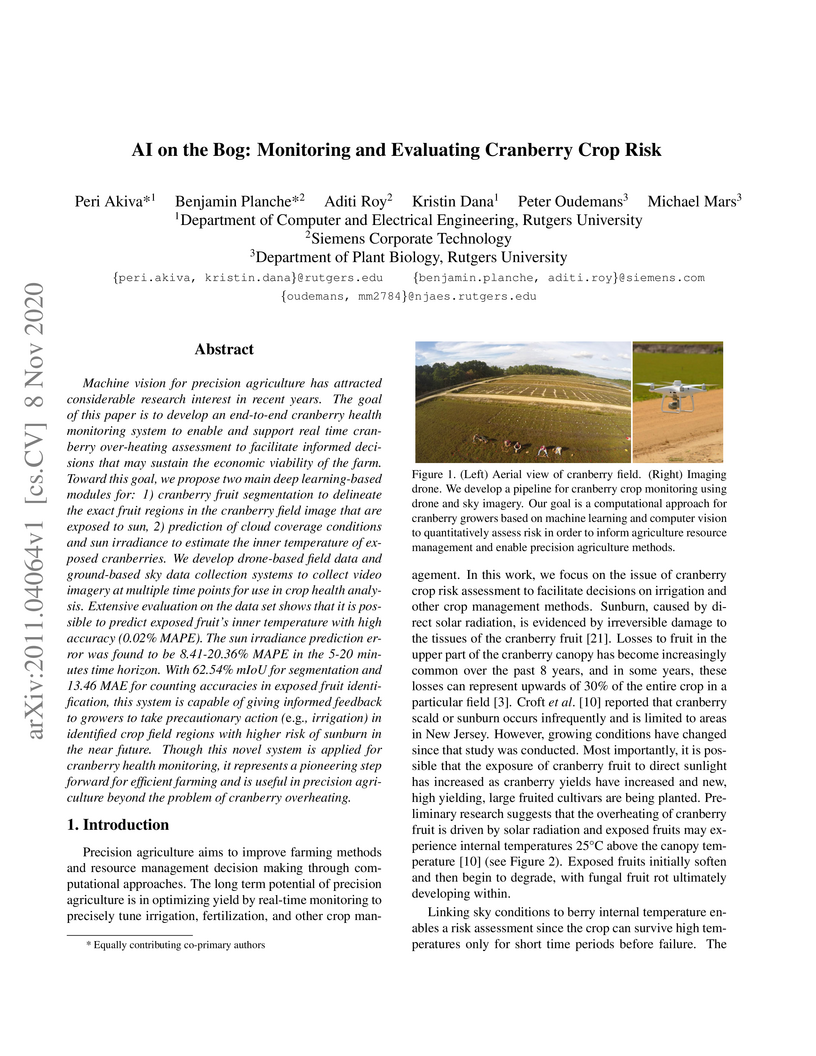

Machine vision for precision agriculture has attracted considerable research interest in recent years. The goal of this paper is to develop an end-to-end cranberry health monitoring system to enable and support real time cranberry over-heating assessment to facilitate informed decisions that may sustain the economic viability of the farm. Toward this goal, we propose two main deep learning-based modules for: 1) cranberry fruit segmentation to delineate the exact fruit regions in the cranberry field image that are exposed to sun, 2) prediction of cloud coverage conditions and sun irradiance to estimate the inner temperature of exposed cranberries. We develop drone-based field data and ground-based sky data collection systems to collect video imagery at multiple time points for use in crop health analysis. Extensive evaluation on the data set shows that it is possible to predict exposed fruit's inner temperature with high accuracy (0.02% MAPE). The sun irradiance prediction error was found to be 8.41-20.36% MAPE in the 5-20 minutes time horizon. With 62.54% mIoU for segmentation and 13.46 MAE for counting accuracies in exposed fruit identification, this system is capable of giving informed feedback to growers to take precautionary action (e.g. irrigation) in identified crop field regions with higher risk of sunburn in the near future. Though this novel system is applied for cranberry health monitoring, it represents a pioneering step forward for efficient farming and is useful in precision agriculture beyond the problem of cranberry overheating.

Domain adaptation is an important tool to transfer knowledge about a task

(e.g. classification) learned in a source domain to a second, or target domain.

Current approaches assume that task-relevant target-domain data is available

during training. We demonstrate how to perform domain adaptation when no such

task-relevant target-domain data is available. To tackle this issue, we propose

zero-shot deep domain adaptation (ZDDA), which uses privileged information from

task-irrelevant dual-domain pairs. ZDDA learns a source-domain representation

which is not only tailored for the task of interest but also close to the

target-domain representation. Therefore, the source-domain task of interest

solution (e.g. a classifier for classification tasks) which is jointly trained

with the source-domain representation can be applicable to both the source and

target representations. Using the MNIST, Fashion-MNIST, NIST, EMNIST, and SUN

RGB-D datasets, we show that ZDDA can perform domain adaptation in

classification tasks without access to task-relevant target-domain training

data. We also extend ZDDA to perform sensor fusion in the SUN RGB-D scene

classification task by simulating task-relevant target-domain representations

with task-relevant source-domain data. To the best of our knowledge, ZDDA is

the first domain adaptation and sensor fusion method which requires no

task-relevant target-domain data. The underlying principle is not particular to

computer vision data, but should be extensible to other domains.

Transactive energy systems (TES) are emerging as a transformative solution

for the problems faced by distribution system operators due to an increase in

the use of distributed energy resources and a rapid acceleration in renewable

energy generation. These, on one hand, pose a decentralized power system

controls problem, requiring strategic microgrid control to maintain stability

for the community and for the utility. On the other hand, they require robust

financial markets operating on distributed software platforms that preserve

privacy. In this paper, we describe the implementation of a novel,

blockchain-based transactive energy system. We outline the key requirements and

motivation of this platform, describe the lessons learned, and provide a

description of key architectural components of this system.

Transactive microgrids are emerging as a transformative solution for the

problems faced by distribution system operators due to an increase in the use

of distributed energy resources and a rapid acceleration in renewable energy

generation, such as wind and solar power. Distributed ledgers have recently

found widespread interest in this domain due to their ability to provide

transactional integrity across decentralized computing nodes. However, the

existing state of the art has not focused on the privacy preservation

requirement of these energy systems -- the transaction level data can provide

much greater insights into a prosumer's behavior compared to smart meter data.

There are specific safety requirements in transactive microgrids to ensure the

stability of the grid and to control the load. To fulfil these requirements,

the distribution system operator needs transaction information from the grid,

which poses a further challenge to the privacy-goals. This problem is made

worse by requirement for off-blockchain communication in these networks. In

this paper, we extend a recently developed trading workflow called PETra and

describe our solution for communication and transactional anonymity.

There are no more papers matching your filters at the moment.