Silicon Austria Labs

From Johannes Kepler University Linz, the Hopfield Boosting method integrates Modern Hopfield Networks and an adaptive boosting framework into an outlier exposure paradigm for out-of-distribution (OOD) detection. This approach achieved state-of-the-art performance across CIFAR-10, CIFAR-100, and ImageNet-1K benchmarks, lowering the mean False Positive Rate at 95% True Positives (FPR95) to 0.92% on CIFAR-10.

Backscatter Communication (BackCom) nodes harvest energy from and modulate information over external carriers. Reconfigurable Intelligent Surface (RIS) adapts phase shift response to alter channel strength in specific directions. In this paper, we unify those two seemingly different technologies (and their derivatives) into one architecture called RIScatter. RIScatter is a batteryless cognitive radio that recycles ambient signal in an adaptive and customizable manner, where dispersed or co-located scatter nodes partially modulate their information and partially engineer the wireless channel. The key is to render the probability distribution of reflection states as a joint function of the information source, Channel State Information (CSI), and relative priority of coexisting links. This enables RIScatter to softly bridge BackCom and RIS; reduce to either in special cases; or evolve in a mixed form for heterogeneous traffic control and universal hardware design. We also propose a low-complexity Successive Interference Cancellation (SIC)-free receiver that exploits the properties of RIScatter. For a single-user multi-node network, we characterize the achievable primary-(total-)backscatter rate region by optimizing the input distribution at scatter nodes, the active beamforming at the Access Point (AP), and the energy decision regions at the user. Simulations demonstrate RIScatter nodes can shift between backscatter modulation and passive beamforming.

Besides the recent impressive results on reinforcement learning (RL), safety

is still one of the major research challenges in RL. RL is a machine-learning

approach to determine near-optimal policies in Markov decision processes

(MDPs). In this paper, we consider the setting where the safety-relevant

fragment of the MDP together with a temporal logic safety specification is

given and many safety violations can be avoided by planning ahead a short time

into the future. We propose an approach for online safety shielding of RL

agents. During runtime, the shield analyses the safety of each available

action. For any action, the shield computes the maximal probability to not

violate the safety specification within the next k steps when executing this

action. Based on this probability and a given threshold, the shield decides

whether to block an action from the agent. Existing offline shielding

approaches compute exhaustively the safety of all state-action combinations

ahead of time, resulting in huge computation times and large memory

consumption. The intuition behind online shielding is to compute at runtime the

set of all states that could be reached in the near future. For each of these

states, the safety of all available actions is analysed and used for shielding

as soon as one of the considered states is reached. Our approach is well suited

for high-level planning problems where the time between decisions can be used

for safety computations and it is sustainable for the agent to wait until these

computations are finished. For our evaluation, we selected a 2-player version

of the classical computer game SNAKE. The game represents a high-level planning

problem that requires fast decisions and the multiplayer setting induces a

large state space, which is computationally expensive to analyse exhaustively.

This research introduces the Symplectic Euler discretization for adaptive Leaky Integrate-and-Fire (adLIF) neurons, ensuring stable and expressive spatio-temporal processing in spiking neural networks. The approach achieves state-of-the-art performance on various tasks while demonstrating inherent robustness and simplifying neuromorphic hardware deployment by negating the need for explicit normalization.

Feature ranking and selection is a widely used approach in various applications of supervised dimensionality reduction in discriminative machine learning. Nevertheless there exists significant evidence on feature ranking and selection algorithms based on any criterion leading to potentially sub-optimal solutions for class separability. In that regard, we introduce emerging information theoretic feature transformation protocols as an end-to-end neural network training approach. We present a dimensionality reduction network (MMINet) training procedure based on the stochastic estimate of the mutual information gradient. The network projects high-dimensional features onto an output feature space where lower dimensional representations of features carry maximum mutual information with their associated class labels. Furthermore, we formulate the training objective to be estimated non-parametrically with no distributional assumptions. We experimentally evaluate our method with applications to high-dimensional biological data sets, and relate it to conventional feature selection algorithms to form a special case of our approach.

26 May 2021

We present Tempest, a synthesis tool to automatically create

correct-by-construction reactive systems and shields from qualitative or

quantitative specifications in probabilistic environments. A shield is a

special type of reactive system used for run-time enforcement; i.e., a shield

enforces a given qualitative or quantitative specification of a running system

while interfering with its operation as little as possible. Shields that

enforce a qualitative or quantitative specification are called safety-shields

or optimal-shields, respectively. Safety-shields can be implemented as

pre-shields or as post-shields, optimal-shields are implemented as

post-shields. Pre-shields are placed before the system and restrict the choices

of the system. Post-shields are implemented after the system and are able to

overwrite the system's output. Tempest is based on the probabilistic model

checker Storm, adding model checking algorithms for stochastic games with

safety and mean-payoff objectives. To the best of our knowledge, Tempest is the

only synthesis tool able to solve 2-1/2-player games with mean-payoff

objectives without restrictions on the state space. Furthermore, Tempest adds

the functionality to synthesize safe and optimal strategies that implement

reactive systems and shields

Safety is still one of the major research challenges in reinforcement

learning (RL). In this paper, we address the problem of how to avoid safety

violations of RL agents during exploration in probabilistic and partially

unknown environments. Our approach combines automata learning for Markov

Decision Processes (MDPs) and shield synthesis in an iterative approach.

Initially, the MDP representing the environment is unknown. The agent starts

exploring the environment and collects traces. From the collected traces, we

passively learn MDPs that abstractly represent the safety-relevant aspects of

the environment. Given a learned MDP and a safety specification, we construct a

shield. For each state-action pair within a learned MDP, the shield computes

exact probabilities on how likely it is that executing the action results in

violating the specification from the current state within the next k steps.

After the shield is constructed, the shield is used during runtime and blocks

any actions that induce a too large risk from the agent. The shielded agent

continues to explore the environment and collects new data on the environment.

Iteratively, we use the collected data to learn new MDPs with higher accuracy,

resulting in turn in shields able to prevent more safety violations. We

implemented our approach and present a detailed case study of a Q-learning

agent exploring slippery Gridworlds. In our experiments, we show that as the

agent explores more and more of the environment during training, the improved

learned models lead to shields that are able to prevent many safety violations.

Over the last decade, society and industries are undergoing rapid

digitization that is expected to lead to the evolution of the cyber-physical

continuum. End-to-end deterministic communications infrastructure is the

essential glue that will bridge the digital and physical worlds of the

continuum. We describe the state of the art and open challenges with respect to

contemporary deterministic communications and compute technologies: 3GPP 5G,

IEEE Time-Sensitive Networking, IETF DetNet, OPC UA as well as edge computing.

While these technologies represent significant technological advancements

towards networking Cyber-Physical Systems (CPS), we argue in this paper that

they rather represent a first generation of systems which are still limited in

different dimensions. In contrast, realizing future deterministic communication

systems requires, firstly, seamless convergence between these technologies and,

secondly, scalability to support heterogeneous (time-varying requirements)

arising from diverse CPS applications. In addition, future deterministic

communication networks will have to provide such characteristics end-to-end,

which for CPS refers to the entire communication and computation loop, from

sensors to actuators. In this paper, we discuss the state of the art regarding

the main challenges towards these goals: predictability, end-to-end technology

integration, end-to-end security, and scalable vertical application

interfacing. We then present our vision regarding viable approaches and

technological enablers to overcome these four central challenges. Key

approaches to leverage in that regard are 6G system evolutions, wireless

friendly integration of 6G into TSN and DetNet, novel end-to-end security

approaches, efficient edge-cloud integrations, data-driven approaches for

stochastic characterization and prediction, as well as leveraging digital twins

towards system awareness.

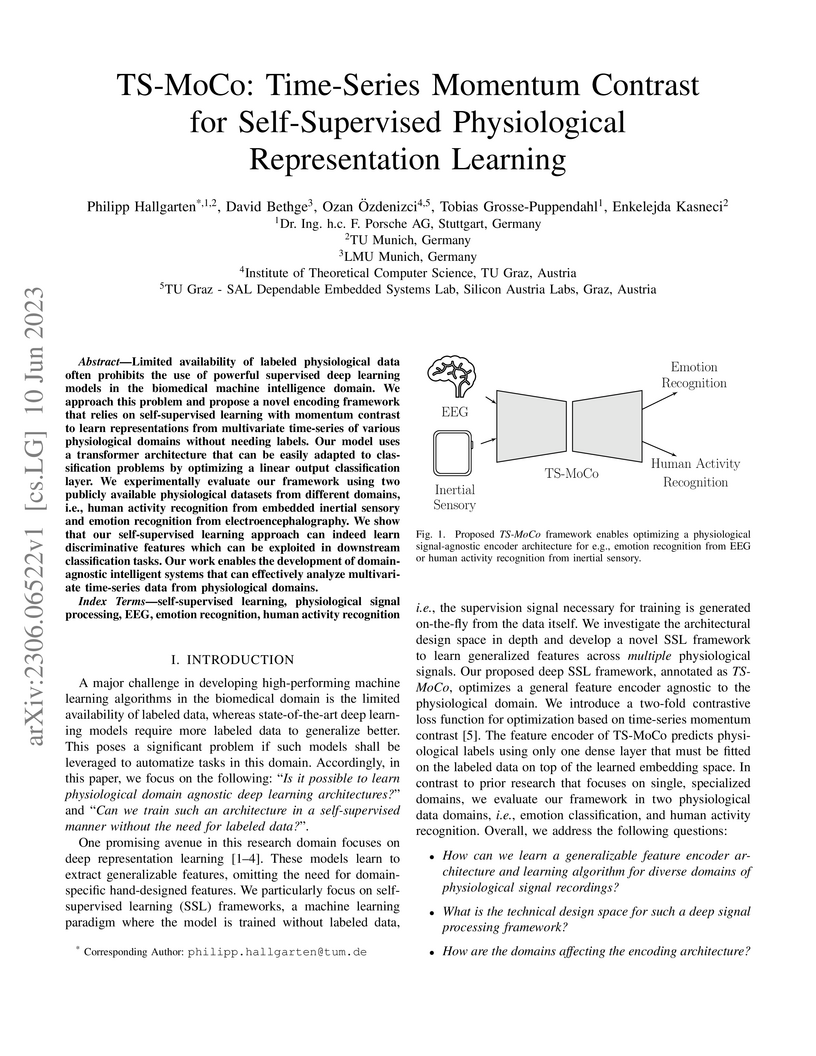

Limited availability of labeled physiological data often prohibits the use of powerful supervised deep learning models in the biomedical machine intelligence domain. We approach this problem and propose a novel encoding framework that relies on self-supervised learning with momentum contrast to learn representations from multivariate time-series of various physiological domains without needing labels. Our model uses a transformer architecture that can be easily adapted to classification problems by optimizing a linear output classification layer. We experimentally evaluate our framework using two publicly available physiological datasets from different domains, i.e., human activity recognition from embedded inertial sensory and emotion recognition from electroencephalography. We show that our self-supervised learning approach can indeed learn discriminative features which can be exploited in downstream classification tasks. Our work enables the development of domain-agnostic intelligent systems that can effectively analyze multivariate time-series data from physiological domains.

Evaluation of deep reinforcement learning (RL) is inherently challenging.

Especially the opaqueness of learned policies and the stochastic nature of both

agents and environments make testing the behavior of deep RL agents difficult.

We present a search-based testing framework that enables a wide range of novel

analysis capabilities for evaluating the safety and performance of deep RL

agents. For safety testing, our framework utilizes a search algorithm that

searches for a reference trace that solves the RL task. The backtracking states

of the search, called boundary states, pose safety-critical situations. We

create safety test-suites that evaluate how well the RL agent escapes

safety-critical situations near these boundary states. For robust performance

testing, we create a diverse set of traces via fuzz testing. These fuzz traces

are used to bring the agent into a wide variety of potentially unknown states

from which the average performance of the agent is compared to the average

performance of the fuzz traces. We apply our search-based testing approach on

RL for Nintendo's Super Mario Bros.

Active automata learning became a popular tool for the behavioral analysis of

communication protocols. The main advantage is that no manual modeling effort

is required since a behavioral model is automatically inferred from a black-box

system. However, several real-world applications of this technique show that

the overhead for the establishment of an active interface might hamper the

practical applicability. Our recent work on the active learning of Bluetooth

Low Energy (BLE) protocol found that the active interaction creates a

bottleneck during learning. Considering the automata learning toolset, passive

learning techniques appear as a promising solution since they do not require an

active interface to the system under learning. Instead, models are learned

based on a given data set. In this paper, we evaluate passive learning for two

network protocols: BLE and Message Queuing Telemetry Transport (MQTT). Our

results show that passive techniques can correctly learn with less data than

required by active learning. However, a general random data generation for

passive learning is more expensive compared to the costs of active learning.

Incremental Learning scenarios do not always represent real-world inference

use-cases, which tend to have less strict task boundaries, and exhibit

repetition of common classes and concepts in their continual data stream. To

better represent these use-cases, new scenarios with partial repetition and

mixing of tasks are proposed, where the repetition patterns are innate to the

scenario and unknown to the strategy. We investigate how exemplar-free

incremental learning strategies are affected by data repetition, and we adapt a

series of state-of-the-art approaches to analyse and fairly compare them under

both settings. Further, we also propose a novel method (Horde), able to

dynamically adjust an ensemble of self-reliant feature extractors, and align

them by exploiting class repetition. Our proposed exemplar-free method achieves

competitive results in the classic scenario without repetition, and

state-of-the-art performance in the one with repetition.

Deployment of deep neural networks in resource-constrained embedded systems

requires innovative algorithmic solutions to facilitate their energy and memory

efficiency. To further ensure the reliability of these systems against

malicious actors, recent works have extensively studied adversarial robustness

of existing architectures. Our work focuses on the intersection of adversarial

robustness, memory- and energy-efficiency in neural networks. We introduce a

neural network conversion algorithm designed to produce sparse and

adversarially robust spiking neural networks (SNNs) by leveraging the sparse

connectivity and weights from a robustly pretrained artificial neural network

(ANN). Our approach combines the energy-efficient architecture of SNNs with a

novel conversion algorithm, leading to state-of-the-art performance with

enhanced energy and memory efficiency through sparse connectivity and

activations. Our models are shown to achieve up to 100x reduction in the number

of weights to be stored in memory, with an estimated 8.6x increase in energy

efficiency compared to dense SNNs, while maintaining high performance and

robustness against adversarial threats.

04 Aug 2024

Multiple-input multiple-output has been a key technology for wireless systems

for decades. For typical MIMO communication systems, antenna array elements are

usually separated by half of the carrier wavelength, thus termed as

conventional MIMO. In this paper, we investigate the performance of multi-user

MIMO communication, with sparse arrays at both the transmitter and receiver

side, i.e., the array elements are separated by more than half wavelength.

Given the same number of array elements, the performance of sparse MIMO is

compared with conventional MIMO. On one hand, sparse MIMO has a larger

aperture, which can achieve narrower main lobe beams that make it easier to

resolve densely located users. Besides, increased array aperture also enlarges

the near-field communication region, which can enhance the spatial multiplexing

gain, thanks to the spherical wavefront property in the near-field region. On

the other hand, element spacing larger than half wavelength leads to undesired

grating lobes, which, if left unattended, may cause severe inter-user

interference. To gain further insights, we first study the spatial multiplexing

gain of the basic single-user sparse MIMO communication system, where a

closed-form expression of the near-field effective degree of freedom is

derived. The result shows that the EDoF increases with the array sparsity for

sparse MIMO before reaching its upper bound, which equals to the minimum value

between the transmit and receive antenna numbers. Furthermore, the scaling law

for the achievable data rate with varying array sparsity is analyzed and an

array sparsity-selection strategy is proposed. We then consider the more

general multi-user sparse MIMO communication system. It is shown that sparse

MIMO is less likely to experience severe IUI than conventional MIMO.

The wireless domain is witnessing a flourishing of integrated systems, e.g. (a) integrated sensing and communications, and (b) simultaneous wireless information and power transfer, due to their potential to use resources (spectrum, power) judiciously. Inspired by this trend, we investigate integrated sensing, communications and powering (ISCAP), through the design of a wideband OFDM signal to power a sensor while simultaneously performing target-sensing and communication. To characterize the ISCAP performance region, we assume symbols with non-zero mean asymmetric Gaussian distribution (i.e., the input distribution), and optimize its mean and variance at each subcarrier to maximize the harvested power, subject to constraints on the achievable rate (communications) and the average side-to-peak-lobe difference (sensing). The resulting input distribution, through simulations, achieves a larger performance region than that of (i) a symmetric complex Gaussian input distribution with identical mean and variance for the real and imaginary parts, (ii) a zero-mean symmetric complex Gaussian input distribution, and (iii) the superposed power-splitting communication and sensing signal (the coexisting solution). In particular, the optimized input distribution balances the three functions by exhibiting the following features: (a) symbols in subcarriers with strong communication channels have high variance to satisfy the rate constraint, while the other symbols are dominated by the mean, forming a relatively uniform sum of mean and variance across subcarriers for sensing; (b) with looser communication and sensing constraints, large absolute means appear on subcarriers with stronger powering channels for higher harvested power. As a final note, the results highlight the great potential of the co-designed ISCAP system for further efficiency enhancement.

Future wireless networks, in particular, 5G and beyond, are anticipated to

deploy dense Low Earth Orbit (LEO) satellites to provide global coverage and

broadband connectivity. However, the limited frequency band and the coexistence

of multiple constellations bring new challenges for interference management. In

this paper, we propose a robust multilayer interference management scheme for

spectrum sharing in heterogeneous satellite networks with statistical channel

state information (CSI) at the transmitter (CSIT) and receivers (CSIR). In the

proposed scheme, Rate-Splitting Multiple Access (RSMA), as a general and

powerful framework for interference management and multiple access strategies,

is implemented distributedly at GEO and LEO satellites, coined Distributed-RSMA

(D-RSMA). By doing so, D-RSMA aims to mitigate the interference and boost the

user fairness of the overall multilayer satellite system. Specifically, we

study the problem of jointly optimizing the GEO/LEO precoders and message

splits to maximize the minimum rate among User Terminals (UTs) subject to a

transmit power constraint at all satellites. A robust algorithm is proposed to

solve the original non-convex optimization problem. Numerical results

demonstrate the effectiveness and robustness towards network load and CSI

uncertainty of our proposed D-RSMA scheme. Benefiting from the interference

management capability, D-RSMA provides significant max-min fairness performance

gains compared to several benchmark schemes.

13 Jul 2023

The design of beamforming for downlink multi-user massive multi-input

multi-output (MIMO) relies on accurate downlink channel state information (CSI)

at the transmitter (CSIT). In fact, it is difficult for the base station (BS)

to obtain perfect CSIT due to user mobility, and latency/feedback delay

(between downlink data transmission and CSI acquisition). Hence, robust

beamforming under imperfect CSIT is needed. In this paper, considering multiple

antennas at all nodes (base station and user terminals), we develop a

multi-agent deep reinforcement learning (DRL) framework for massive MIMO under

imperfect CSIT, where the transmit and receive beamforming are jointly designed

to maximize the average information rate of all users. Leveraging this

DRL-based framework, interference management is explored and three DRL-based

schemes, namely the distributed-learning-distributed-processing scheme,

partial-distributed-learning-distributed-processing, and central-learning

distributed-processing scheme, are proposed and analyzed. This paper 1)

highlights the fact that the DRL-based strategies outperform the random

action-chosen strategy and the delay-sensitive strategy named as

sample-and-hold (SAH) approach, and achieved over 90% of the information rate

of two selected benchmarks with lower complexity: the zero-forcing

channel-inversion (ZF-CI) with perfect CSIT and the Greedy Beam Selection

strategy, 2) demonstrates the inherent robustness of the proposed designs in

the presence of channel aging. 3) conducts detailed convergence and scalability

analysis on the proposed framework.

Neural network implementations are known to be vulnerable to physical attack

vectors such as fault injection attacks. As of now, these attacks were only

utilized during the inference phase with the intention to cause a

misclassification. In this work, we explore a novel attack paradigm by

injecting faults during the training phase of a neural network in a way that

the resulting network can be attacked during deployment without the necessity

of further faulting. In particular, we discuss attacks against ReLU activation

functions that make it possible to generate a family of malicious inputs, which

are called fooling inputs, to be used at inference time to induce controlled

misclassifications. Such malicious inputs are obtained by mathematically

solving a system of linear equations that would cause a particular behaviour on

the attacked activation functions, similar to the one induced in training

through faulting. We call such attacks fooling backdoors as the fault attacks

at the training phase inject backdoors into the network that allow an attacker

to produce fooling inputs. We evaluate our approach against multi-layer

perceptron networks and convolutional networks on a popular image

classification task obtaining high attack success rates (from 60% to 100%) and

high classification confidence when as little as 25 neurons are attacked while

preserving high accuracy on the originally intended classification task.

Solving control tasks in complex environments automatically through learning offers great potential. While contemporary techniques from deep reinforcement learning (DRL) provide effective solutions, their decision-making is not transparent. We aim to provide insights into the decisions faced by the agent by learning an automaton model of environmental behavior under the control of an agent. However, for most control problems, automata learning is not scalable enough to learn a useful model. In this work, we raise the capabilities of automata learning such that it is possible to learn models for environments that have complex and continuous dynamics.

The core of the scalability of our method lies in the computation of an abstract state-space representation, by applying dimensionality reduction and clustering on the observed environmental state space. The stochastic transitions are learned via passive automata learning from observed interactions of the agent and the environment. In an iterative model-based RL process, we sample additional trajectories to learn an accurate environment model in the form of a discrete-state Markov decision process (MDP). We apply our automata learning framework on popular RL benchmarking environments in the OpenAI Gym, including LunarLander, CartPole, Mountain Car, and Acrobot. Our results show that the learned models are so precise that they enable the computation of policies solving the respective control tasks. Yet the models are more concise and more general than neural-network-based policies and by using MDPs we benefit from a wealth of tools available for analyzing them. When solving the task of LunarLander, the learned model even achieved similar or higher rewards than deep RL policies learned with stable-baselines3.

Recent advancements in edge computing have significantly enhanced the AI capabilities of Internet of Things (IoT) devices. However, these advancements introduce new challenges in knowledge exchange and resource management, particularly addressing the spatiotemporal data locality in edge computing environments. This study examines algorithms and methods for deploying distributed machine learning within autonomous, network-capable, AI-enabled edge devices. We focus on determining confidence levels in learning outcomes considering the spatial variability of data encountered by independent agents. Using collaborative mapping as a case study, we explore the application of the Distributed Neural Network Optimization (DiNNO) algorithm extended with Bayesian neural networks (BNNs) for uncertainty estimation. We implement a 3D environment simulation using the Webots platform to simulate collaborative mapping tasks, decouple the DiNNO algorithm into independent processes for asynchronous network communication in distributed learning, and integrate distributed uncertainty estimation using BNNs. Our experiments demonstrate that BNNs can effectively support uncertainty estimation in a distributed learning context, with precise tuning of learning hyperparameters crucial for effective uncertainty assessment. Notably, applying Kullback-Leibler divergence for parameter regularization resulted in a 12-30% reduction in validation loss during distributed BNN training compared to other regularization strategies.

There are no more papers matching your filters at the moment.