Ask or search anything...

PiSSA (Principal Singular Values and Singular Vectors Adaptation) introduces an SVD-based initialization strategy for low-rank adapters in Large Language Models, directly tuning the principal components of pre-trained weight matrices. This approach consistently outperforms LoRA in fine-tuning performance across diverse models and tasks, achieving faster convergence and significantly reducing quantization error in its QPiSSA variant, for example, improving Gemma-7B's GSM8K accuracy by 3.25% over LoRA.

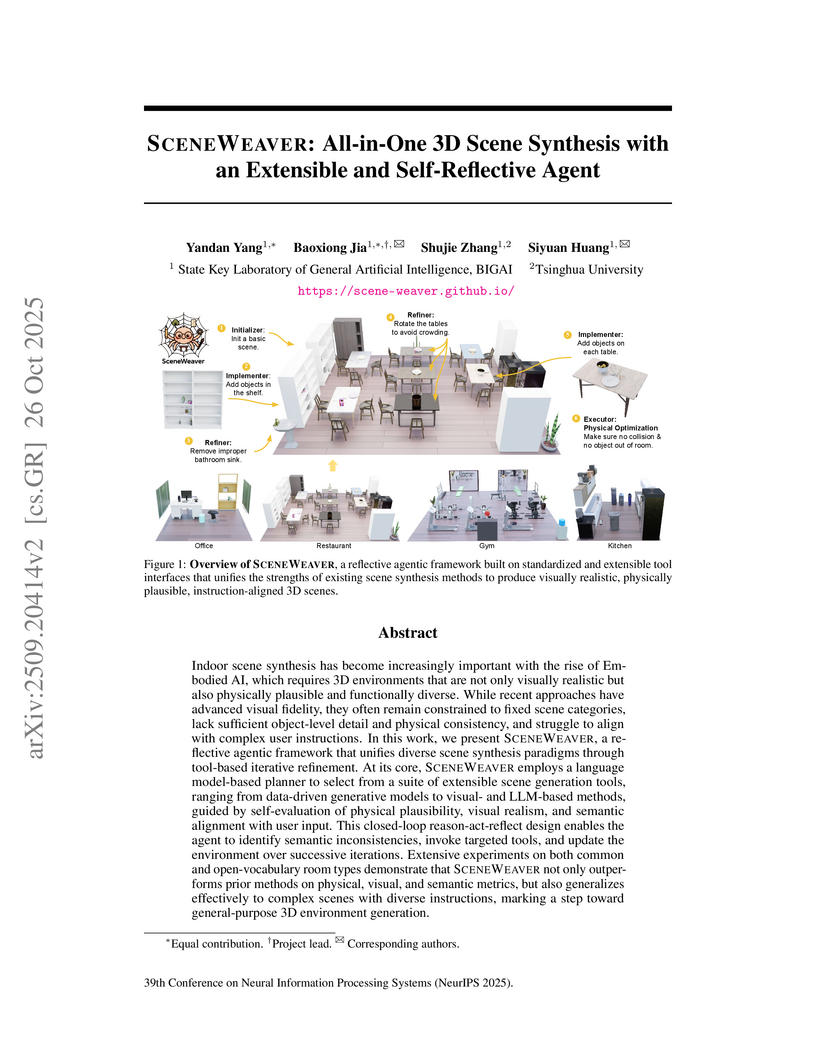

View blogSCENEWEAVER is a reflective agentic framework that utilizes Multimodal Large Language Models (MLLMs) for feedback-guided, all-in-one 3D scene synthesis. It unifies diverse synthesis methods through a standardized tool interface and a "reason-act-reflect" paradigm, achieving state-of-the-art results in visual realism, physical plausibility, and semantic alignment across various scene types.

View blogResearchers from BIGAI and Tsinghua University introduce MANIPTRANS, a two-stage framework that enables efficient transfer of human bimanual manipulation skills to robotic hands through combined trajectory imitation and residual learning, while generating DEXMANIPNET - a dataset of 3.3K manipulation episodes demonstrating generalization across multiple robotic hand designs.

View blogResearchers developed COLA, a framework enabling humanoid robots to compliantly and coordinately carry objects with humans, leveraging a proprioception-only policy learned through a three-step training process. The system achieved a 24.7% reduction in human effort and demonstrated stable performance across diverse objects and terrains in real-world scenarios.

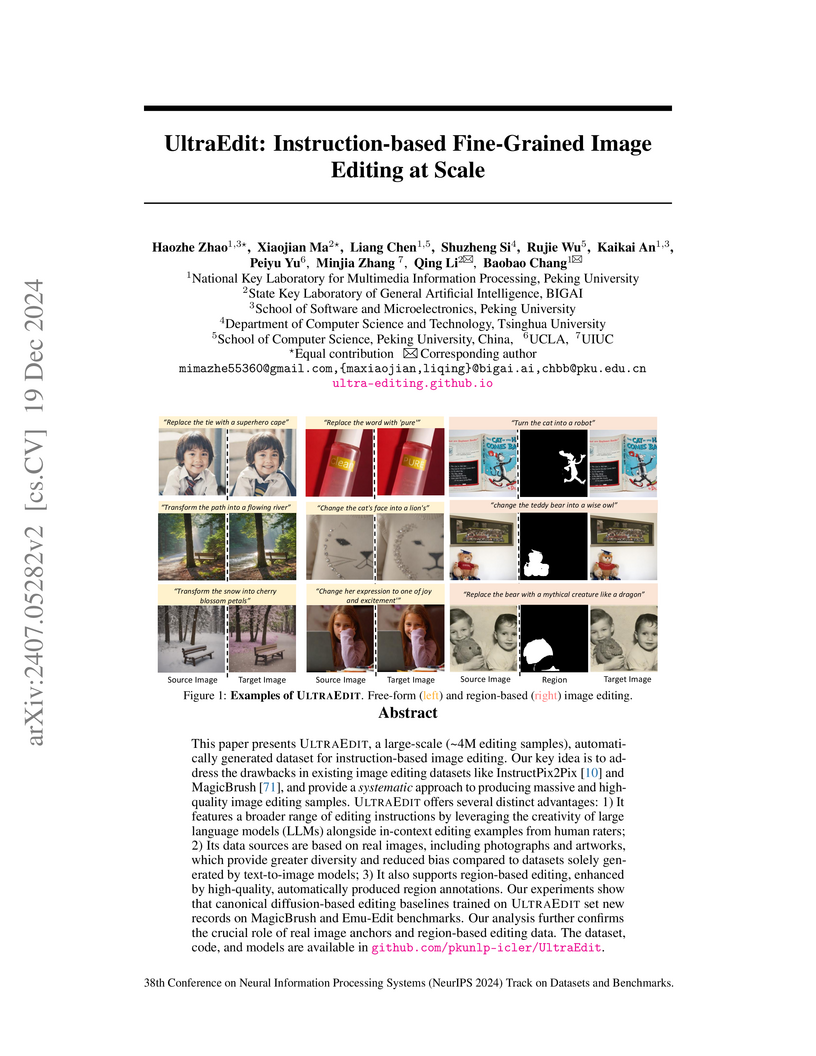

View blogUltraEdit introduces a large-scale (over 4 million samples), high-quality dataset for instruction-based image editing, designed to mitigate common biases and enhance fine-grained control. Models trained on UltraEdit demonstrate improved performance on established benchmarks like MagicBrush and Emu Edit, particularly for region-based and multi-turn editing tasks.

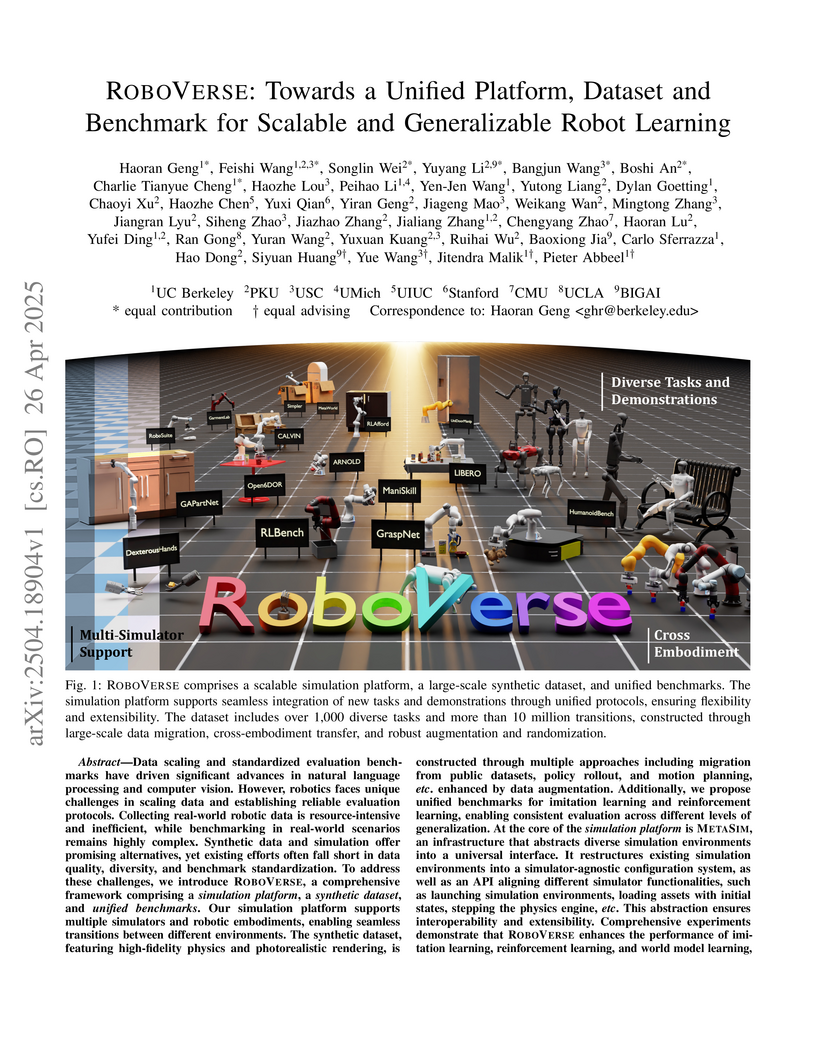

View blogRoboVerse introduces a unified robotics platform combining high-fidelity simulation environments, a large-scale synthetic dataset, and standardized benchmarks for imitation and reinforcement learning, enabling cross-simulator integration and improved sim-to-real transfer through its METASIM infrastructure and diverse data generation approaches.

View blogResearchers developed an automated pipeline to generate a large-scale multi-modal tool-usage dataset (MM-Traj), which was then used to fine-tune Vision-Language Models (VLMs) as agents, resulting in enhanced multi-step reasoning and tool-usage capabilities across various multi-modal tasks. The T3-Agent, trained on MM-Traj, demonstrated improved accuracy and tool utilization on challenging multi-modal benchmarks like GTA and GAIA, closing the performance gap with larger, closed-source models.

View blogResearchers developed SPORT, an iterative framework enabling multimodal agents to autonomously enhance tool usage without human-annotated data. This method employs step-wise AI feedback and direct preference optimization, achieving up to 6.41% higher answer accuracy on the GTA benchmark and 3.64% higher answer accuracy on the GAIA benchmark compared to strong baselines.

View blogPoliCon introduces a benchmark to assess large language models' capabilities in facilitating and achieving political consensus under diverse real-world objectives. Experiments revealed that while models like Gemini-2.5-Flash perform well on straightforward consensus tasks, all tested LLMs struggle with complex goals such as Rawlsianism and exhibit inherent partisan biases, highlighting limitations in sophisticated coalition-building.

View blogCLONE introduces a closed-loop system for whole-body humanoid teleoperation that achieves precise control for long-horizon tasks, virtually eliminating positional drift using only head and hand tracking. The system demonstrated a mean tracking error of 5.1 cm over 8.9 meters on a Unitree G1 robot, effectively enabling robust and coordinated execution of diverse dynamic skills.

View blogJARVIS-VLA, a Vision Language Action model, is introduced for playing visual games in Minecraft using keyboards and mouse, leveraging ActVLP, a three-stage post-training paradigm that improves world knowledge and visual grounding before action tuning. This enabled JARVIS-VLA-Qwen2-VL to achieve state-of-the-art performance on over 1,000 diverse atomic tasks in Minecraft, demonstrating a 40% improvement over prior baselines.

View blogThe paper "Keeping Yourself is Important in Downstream Tuning Multimodal Large Language Model" provides a systematic review and unified benchmark for tuning MLLMs, classifying methods into Selective, Additive, and Reparameterization paradigms. It empirically analyzes the trade-offs between task-expert specialization and open-world stabilization, offering practical guidelines for MLLM deployment.

View blogEmbodied VideoAgent develops a multimodal agent that uses persistent object memory from egocentric videos and embodied sensors to understand dynamic 3D scenes. The system achieved an 85.37% success rate for 3D object localization on Ego4D-VQ3D and showed improved performance on embodied question answering benchmarks compared to existing models.

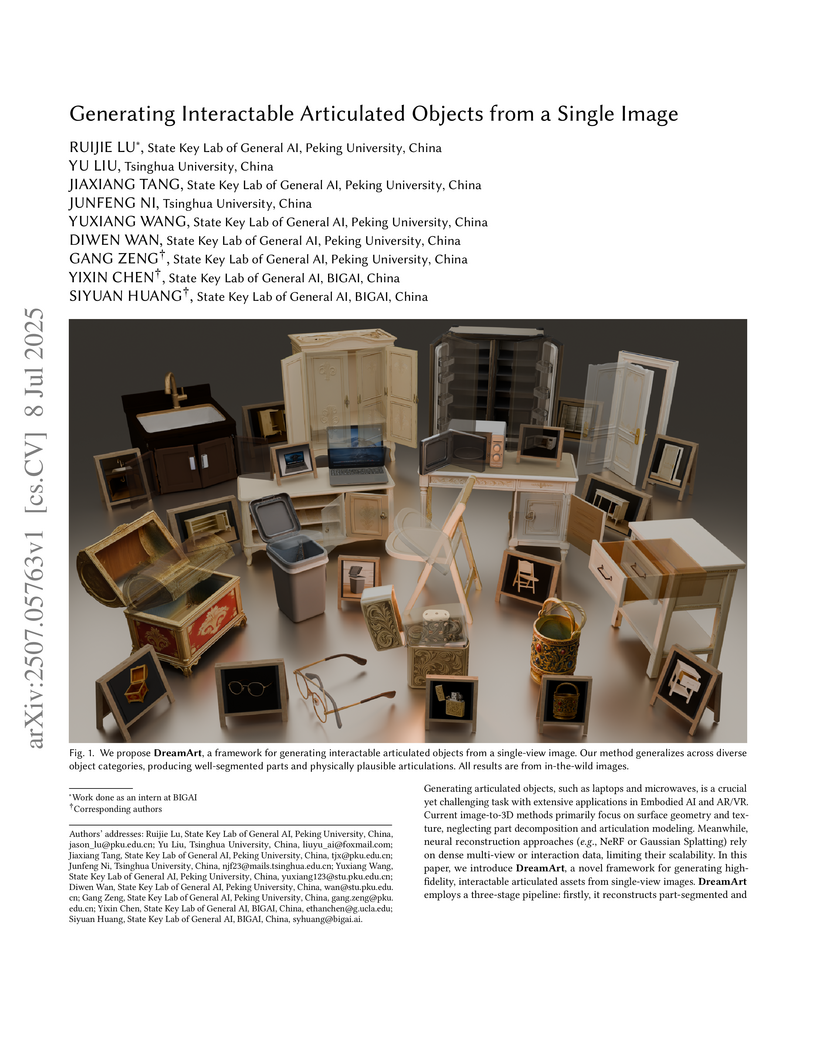

View blogDreamArt generates high-fidelity, interactable articulated 3D objects from a single image by integrating part-aware 3D reconstruction, conditional video diffusion with novel prompts, and physics-informed articulation optimization. The system demonstrated superior visual quality and articulation plausibility compared to existing methods, achieving higher PSNR, SSIM, and FVD for video synthesis, and significantly better user study scores for overall asset quality.

View blog Tsinghua University

Tsinghua University Peking University

Peking University

The University of Hong Kong

The University of Hong Kong

University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign UCLA

UCLA

Shanghai Jiao Tong University

Shanghai Jiao Tong University

Zhejiang University

Zhejiang University

Chinese Academy of Sciences

Chinese Academy of Sciences National University of Singapore

National University of Singapore

University of Science and Technology of China

University of Science and Technology of China