Tokyo University of Agriculture and Technology

Current deep learning models for electroencephalography (EEG) are often task-specific and depend on large labeled datasets, limiting their adaptability. Although emerging foundation models aim for broader applicability, their rigid dependence on fixed, high-density multi-channel montages restricts their use across heterogeneous datasets and in missing-channel or practical low-channel settings. To address these limitations, we introduce SingLEM, a self-supervised foundation model that learns robust, general-purpose representations from single-channel EEG, making it inherently hardware agnostic. The model employs a hybrid encoder architecture that combines convolutional layers to extract local features with a hierarchical transformer to model both short- and long-range temporal dependencies. SingLEM is pretrained on 71 public datasets comprising over 9,200 subjects and 357,000 single-channel hours of EEG. When evaluated as a fixed feature extractor across six motor imagery and cognitive tasks, aggregated single-channel representations consistently outperformed leading multi-channel foundation models and handcrafted baselines. These results demonstrate that a single-channel approach can achieve state-of-the-art generalization while enabling fine-grained neurophysiological analysis and enhancing interpretability. The source code and pretrained models are available at this https URL.

01 Sep 2025

This research investigates how supervised versus unsupervised loss functions influence the performance of deep unfolded ISTA and IHT algorithms for sparse signal recovery, revealing that problem convexity significantly modulates the impact of the loss choice. For convex problems, supervised learning yields superior signal recovery (lower MSE), while unsupervised learning accelerates convergence to the original problem's objective minimum; for nonconvex problems, both approaches lead to improved and similar performance over traditional methods.

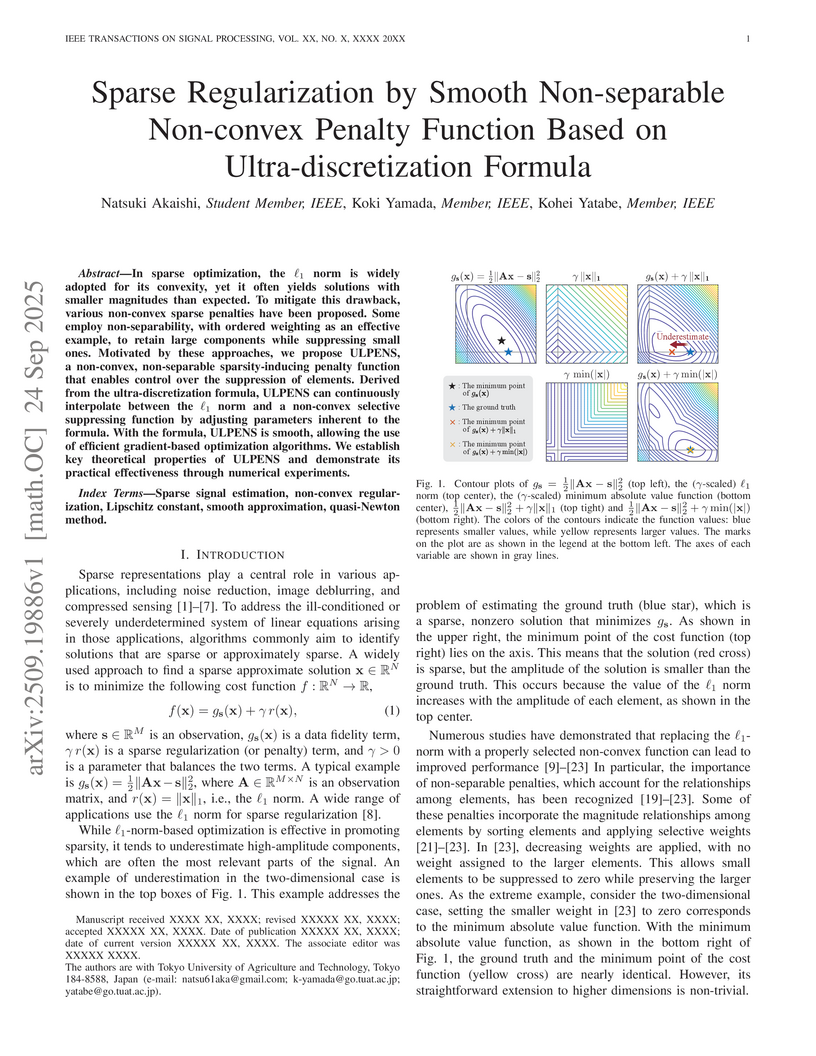

In sparse optimization, the ℓ1 norm is widely adopted for its convexity, yet it often yields solutions with smaller magnitudes than expected. To mitigate this drawback, various non-convex sparse penalties have been proposed. Some employ non-separability, with ordered weighting as an effective example, to retain large components while suppressing small ones. Motivated by these approaches, we propose ULPENS, a non-convex, non-separable sparsity-inducing penalty function that enables control over the suppression of elements. Derived from the ultra-discretization formula, ULPENS can continuously interpolate between the ℓ1 norm and a non-convex selective suppressing function by adjusting parameters inherent to the formula. With the formula, ULPENS is smooth, allowing the use of efficient gradient-based optimization algorithms. We establish key theoretical properties of ULPENS and demonstrate its practical effectiveness through numerical experiments.

Blood oxygen saturation (SpO2) is a crucial vital sign routinely monitored in

medical settings. Traditional methods require dedicated contact sensors,

limiting accessibility and comfort. This study presents a deep learning

framework for contactless SpO2 measurement using an off-the-shelf camera,

addressing challenges related to lighting variations and skin tone diversity.

We conducted two large-scale studies with diverse participants and evaluated

our method against traditional signal processing approaches in intra- and

inter-dataset scenarios. Our approach demonstrated consistent accuracy across

demographic groups, highlighting the feasibility of camera-based SpO2

monitoring as a scalable and non-invasive tool for remote health assessment.

01 Jul 2024

In this paper, we propose a deep learning based system for the task of deepfake audio detection. In particular, the draw input audio is first transformed into various spectrograms using three transformation methods of Short-time Fourier Transform (STFT), Constant-Q Transform (CQT), Wavelet Transform (WT) combined with different auditory-based filters of Mel, Gammatone, linear filters (LF), and discrete cosine transform (DCT). Given the spectrograms, we evaluate a wide range of classification models based on three deep learning approaches. The first approach is to train directly the spectrograms using our proposed baseline models of CNN-based model (CNN-baseline), RNN-based model (RNN-baseline), C-RNN model (C-RNN baseline). Meanwhile, the second approach is transfer learning from computer vision models such as ResNet-18, MobileNet-V3, EfficientNet-B0, DenseNet-121, SuffleNet-V2, Swint, Convnext-Tiny, GoogLeNet, MNASsnet, RegNet. In the third approach, we leverage the state-of-the-art audio pre-trained models of Whisper, Seamless, Speechbrain, and Pyannote to extract audio embeddings from the input spectrograms. Then, the audio embeddings are explored by a Multilayer perceptron (MLP) model to detect the fake or real audio samples. Finally, high-performance deep learning models from these approaches are fused to achieve the best performance. We evaluated our proposed models on ASVspoof 2019 benchmark dataset. Our best ensemble model achieved an Equal Error Rate (EER) of 0.03, which is highly competitive to top-performing systems in the ASVspoofing 2019 challenge. Experimental results also highlight the potential of selective spectrograms and deep learning approaches to enhance the task of audio deepfake detection.

When applying offline reinforcement learning (RL) in healthcare scenarios,

the out-of-distribution (OOD) issues pose significant risks, as inappropriate

generalization beyond clinical expertise can result in potentially harmful

recommendations. While existing methods like conservative Q-learning (CQL)

attempt to address the OOD issue, their effectiveness is limited by only

constraining action selection by suppressing uncertain actions. This

action-only regularization imitates clinician actions that prioritize

short-term rewards, but it fails to regulate downstream state trajectories,

thereby limiting the discovery of improved long-term treatment strategies. To

safely improve policy beyond clinician recommendations while ensuring that

state-action trajectories remain in-distribution, we propose \textit{Offline

Guarded Safe Reinforcement Learning} (OGSRL), a theoretically

grounded model-based offline RL framework. OGSRL introduces a novel

dual constraint mechanism for improving policy with reliability and safety.

First, the OOD guardian is established to specify clinically validated regions

for safe policy exploration. By constraining optimization within these regions,

it enables the reliable exploration of treatment strategies that outperform

clinician behavior by leveraging the full patient state history, without

drifting into unsupported state-action trajectories. Second, we introduce a

safety cost constraint that encodes medical knowledge about physiological

safety boundaries, providing domain-specific safeguards even in areas where

training data might contain potentially unsafe interventions. Notably, we

provide theoretical guarantees on safety and near-optimality: policies that

satisfy these constraints remain in safe and reliable regions and achieve

performance close to the best possible policy supported by the data.

The deep neural networks are known to be vulnerable to well-designed adversarial attacks. The most successful defense technique based on adversarial training (AT) can achieve optimal robustness against particular attacks but cannot generalize well to unseen attacks. Another effective defense technique based on adversarial purification (AP) can enhance generalization but cannot achieve optimal robustness. Meanwhile, both methods share one common limitation on the degraded standard accuracy. To mitigate these issues, we propose a novel pipeline to acquire the robust purifier model, named Adversarial Training on Purification (AToP), which comprises two components: perturbation destruction by random transforms (RT) and purifier model fine-tuned (FT) by adversarial loss. RT is essential to avoid overlearning to known attacks, resulting in the robustness generalization to unseen attacks, and FT is essential for the improvement of robustness. To evaluate our method in an efficient and scalable way, we conduct extensive experiments on CIFAR-10, CIFAR-100, and ImageNette to demonstrate that our method achieves optimal robustness and exhibits generalization ability against unseen attacks.

14 Oct 2025

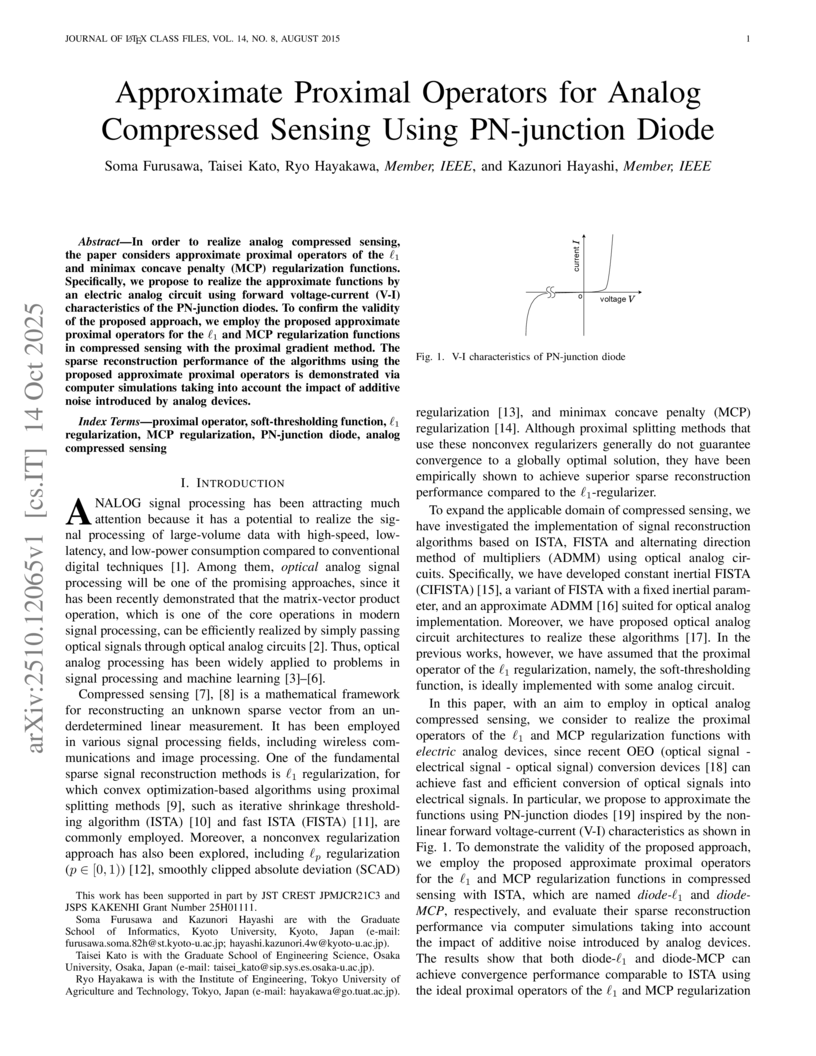

In order to realize analog compressed sensing, the paper considers approximate proximal operators of the ℓ1 and minimax concave penalty (MCP) regularization functions. Specifically, we propose to realize the approximate functions by an electric analog circuit using forward voltage-current (V-I) characteristics of the PN-junction diodes. To confirm the validity of the proposed approach, we employ the proposed approximate proximal operators for the ℓ1 and MCP regularization functions in compressed sensing with the proximal gradient method. The sparse reconstruction performance of the algorithms using the proposed approximate proximal operators is demonstrated via computer simulations taking into account the impact of additive noise introduced by analog devices.

Thanks to advancements in deep learning, speech generation systems now power

a variety of real-world applications, such as text-to-speech for individuals

with speech disorders, voice chatbots in call centers, cross-linguistic speech

translation, etc. While these systems can autonomously generate human-like

speech and replicate specific voices, they also pose risks when misused for

malicious purposes. This motivates the research community to develop models for

detecting synthesized speech (e.g., fake speech) generated by

deep-learning-based models, referred to as the Deepfake Speech Detection task.

As the Deepfake Speech Detection task has emerged in recent years, there are

not many survey papers proposed for this task. Additionally, existing surveys

for the Deepfake Speech Detection task tend to summarize techniques used to

construct a Deepfake Speech Detection system rather than providing a thorough

analysis. This gap motivated us to conduct a comprehensive survey, providing a

critical analysis of the challenges and developments in Deepfake Speech

Detection. Our survey is innovatively structured, offering an in-depth analysis

of current challenge competitions, public datasets, and the deep-learning

techniques that provide enhanced solutions to address existing challenges in

the field. From our analysis, we propose hypotheses on leveraging and combining

specific deep learning techniques to improve the effectiveness of Deepfake

Speech Detection systems. Beyond conducting a survey, we perform extensive

experiments to validate these hypotheses and propose a highly competitive model

for the task of Deepfake Speech Detection. Given the analysis and the

experimental results, we finally indicate potential and promising research

directions for the Deepfake Speech Detection task.

Researchers from Tokyo University of Agriculture and Technology developed AOAD-MAT, a Transformer-based multi-agent deep reinforcement learning model that dynamically learns the optimal order of agent action decisions. This approach achieved 100% median win rates across all tested SMAC scenarios and an approximately 10% improvement in median reward on the MA-MuJoCo HalfCheetah task, demonstrating enhanced performance and stability.

Diffusion model (DM) based adversarial purification (AP) has proven to be a

powerful defense method that can remove adversarial perturbations and generate

a purified example without threats. In principle, the pre-trained DMs can only

ensure that purified examples conform to the same distribution of the training

data, but it may inadvertently compromise the semantic information of input

examples, leading to misclassification of purified examples. Recent

advancements introduce guided diffusion techniques to preserve semantic

information while removing the perturbations. However, these guidances often

rely on distance measures between purified examples and diffused examples,

which can also preserve perturbations in purified examples. To further unleash

the robustness power of DM-based AP, we propose an adversarial guided diffusion

model (AGDM) by introducing a novel adversarial guidance that contains

sufficient semantic information but does not explicitly involve adversarial

perturbations. The guidance is modeled by an auxiliary neural network obtained

with adversarial training, considering the distance in the latent

representations rather than at the pixel-level values. Extensive experiments

are conducted on CIFAR-10, CIFAR-100 and ImageNet to demonstrate that our

method is effective for simultaneously maintaining semantic information and

removing the adversarial perturbations. In addition, comprehensive comparisons

show that our method significantly enhances the robustness of existing DM-based

AP, with an average robust accuracy improved by up to 7.30% on CIFAR-10.

California Institute of TechnologyNational Astronomical Observatory of Japan

California Institute of TechnologyNational Astronomical Observatory of Japan Chinese Academy of Sciences

Chinese Academy of Sciences Shanghai Jiao Tong University

Shanghai Jiao Tong University the University of Tokyo

the University of Tokyo Space Telescope Science Institute

Space Telescope Science Institute Johns Hopkins University

Johns Hopkins University Stockholm UniversityNational Astronomical ObservatoriesTokyo University of Agriculture and TechnologyThe Graduate University for Advanced Studies (SOKENDAI)The University of Western OntarioInstitute of Science TokyoJet Propulsion LaboratoryInstituto de Astrofísica de CanariasIbaraki UniversityUniversity of Hawai’iTsung-Dao Lee InstituteNational Institutes of Natural SciencesCalifornia State University, NorthridgeAstrobiology CenterNASA Exoplanet Science InstituteSubaru TelescopeInfrared Processing and Analysis CenterKomaba Institute for ScienceInstitute for AstronomyCenter for Computational AstrophysicsOita UniversityNiigata Institute of TechnologyCAS Key Laboratory of Optical AstronomyMax Planck Institut für AstronomieSofia University ","St. Kliment Ohridski"

Stockholm UniversityNational Astronomical ObservatoriesTokyo University of Agriculture and TechnologyThe Graduate University for Advanced Studies (SOKENDAI)The University of Western OntarioInstitute of Science TokyoJet Propulsion LaboratoryInstituto de Astrofísica de CanariasIbaraki UniversityUniversity of Hawai’iTsung-Dao Lee InstituteNational Institutes of Natural SciencesCalifornia State University, NorthridgeAstrobiology CenterNASA Exoplanet Science InstituteSubaru TelescopeInfrared Processing and Analysis CenterKomaba Institute for ScienceInstitute for AstronomyCenter for Computational AstrophysicsOita UniversityNiigata Institute of TechnologyCAS Key Laboratory of Optical AstronomyMax Planck Institut für AstronomieSofia University ","St. Kliment Ohridski"We report the discovery of a new directly-imaged brown dwarf companion with Keck/NIRC2+pyWFS around a nearby mid-type M~dwarf LSPM~J1446+4633 (hereafter J1446). The L′-band contrast (4.5×10−3) is consistent with a ∼20−60 MJup object at 1--10~Gyr and our two-epoch NIRC2 data suggest a ∼30% (∼3.1σ) variability in its L′-band flux. We incorporated Gaia DR3 non-single-star catalog into the orbital fitting by combining the Subaru/IRD RV monitoring results, NIRC2 direct imaging results, and Gaia proper motion acceleration. As a result, we derive 59.8−1.4+1.5 MJup and ≈4.3 au for the dynamical mass and the semi-major axis of the companion J1446B, respectively. J1446B is one of the intriguing late-T~dwarfs showing variability at L′-band for future atmospheric studies with the constrained dynamical mass. Because the J1446 system is accessible with various observation techniques such as astrometry, direct imaging, and high-resolution spectroscopy including radial velocity measurement, it has a potential as a great benchmark system to improve our understanding for cool dwarfs.

Researchers from RIKEN and Tokyo University of Agriculture and Technology developed LLAMOS, an LLM-based adversarial purification method that acts as a pre-processing module to enhance LLM robustness. The approach effectively reduces attack success rates by up to 45.59% and significantly improves robust accuracy across various text classification tasks and LLMs by leveraging an LLM as an intelligent defense agent capable of in-context learning.

Handwritten mathematical expressions (HMEs) contain ambiguities in their interpretations, even for humans sometimes. Several math symbols are very similar in the writing style, such as dot and comma or 0, O, and o, which is a challenge for HME recognition systems to handle without using contextual information. To address this problem, this paper presents a Transformer-based Math Language Model (TMLM). Based on the self-attention mechanism, the high-level representation of an input token in a sequence of tokens is computed by how it is related to the previous tokens. Thus, TMLM can capture long dependencies and correlations among symbols and relations in a mathematical expression (ME). We trained the proposed language model using a corpus of approximately 70,000 LaTeX sequences provided in CROHME 2016. TMLM achieved the perplexity of 4.42, which outperformed the previous math language models, i.e., the N-gram and recurrent neural network-based language models. In addition, we combine TMLM into a stochastic context-free grammar-based HME recognition system using a weighting parameter to re-rank the top-10 best candidates. The expression rates on the testing sets of CROHME 2016 and CROHME 2019 were improved by 2.97 and 0.83 percentage points, respectively.

This paper introduces a broad class of Mirror Descent (MD) and Generalized Exponentiated Gradient (GEG) algorithms derived from trace-form entropies defined via deformed logarithms. Leveraging these generalized entropies yields MD \& GEG algorithms with improved convergence behavior, robustness to vanishing and exploding gradients, and inherent adaptability to non-Euclidean geometries through mirror maps. We establish deep connections between these methods and Amari's natural gradient, revealing a unified geometric foundation for additive, multiplicative, and natural gradient updates. Focusing on the Tsallis, Kaniadakis, Sharma--Taneja--Mittal, and Kaniadakis--Lissia--Scarfone entropy families, we show that each entropy induces a distinct Riemannian metric on the parameter space, leading to GEG algorithms that preserve the natural statistical geometry. The tunable parameters of deformed logarithms enable adaptive geometric selection, providing enhanced robustness and convergence over classical Euclidean optimization. Overall, our framework unifies key first-order MD optimization methods under a single information-geometric perspective based on generalized Bregman divergences, where the choice of entropy determines the underlying metric and dual geometric structure.

Physics-Informed Neural Networks (PINNs) have emerged as a powerful, mesh-free paradigm for solving partial differential equations (PDEs). However, they notoriously struggle with stiff, multi-scale, and nonlinear systems due to the inherent spectral bias of standard multilayer perceptron (MLP) architectures, which prevents them from adequately representing high-frequency components. In this work, we introduce the Adaptive Spectral Physics-Enabled Network (ASPEN), a novel architecture designed to overcome this critical limitation. ASPEN integrates an adaptive spectral layer with learnable Fourier features directly into the network's input stage. This mechanism allows the model to dynamically tune its own spectral basis during training, enabling it to efficiently learn and represent the precise frequency content required by the solution. We demonstrate the efficacy of ASPEN by applying it to the complex Ginzburg-Landau equation (CGLE), a canonical and challenging benchmark for nonlinear, stiff spatio-temporal dynamics. Our results show that a standard PINN architecture catastrophically fails on this problem, diverging into non-physical oscillations. In contrast, ASPEN successfully solves the CGLE with exceptional accuracy. The predicted solution is visually indistinguishable from the high-resolution ground truth, achieving a low median physics residual of 5.10 x 10^-3. Furthermore, we validate that ASPEN's solution is not only pointwise accurate but also physically consistent, correctly capturing emergent physical properties, including the rapid free energy relaxation and the long-term stability of the domain wall front. This work demonstrates that by incorporating an adaptive spectral basis, our framework provides a robust and physically-consistent solver for complex dynamical systems where standard PINNs fail, opening new options for machine learning in challenging physical domains.

Automated diagnosis with artificial intelligence has emerged as a promising area in the realm of medical imaging, while the interpretability of the introduced deep neural networks still remains an urgent concern. Although contemporary works, such as XProtoNet and MProtoNet, has sought to design interpretable prediction models for the issue, the localization precision of their resulting attribution maps can be further improved. To this end, we propose a Multi-scale Attentive Prototypical part Network, termed MAProtoNet, to provide more precise maps for attribution. Specifically, we introduce a concise multi-scale module to merge attentive features from quadruplet attention layers, and produces attribution maps. The proposed quadruplet attention layers can enhance the existing online class activation mapping loss via capturing interactions between the spatial and channel dimension, while the multi-scale module then fuses both fine-grained and coarse-grained information for precise maps generation. We also apply a novel multi-scale mapping loss for supervision on the proposed multi-scale module. Compared to existing interpretable prototypical part networks in medical imaging, MAProtoNet can achieve state-of-the-art performance in localization on brain tumor segmentation (BraTS) datasets, resulting in approximately 4% overall improvement on activation precision score (with a best score of 85.8%), without using additional annotated labels of segmentation. Our code will be released in this https URL.

30 Apr 2025

We consider the oblivious transfer (OT) capacities of noisy channels against the passive adversary; this problem has not been solved even for the binary symmetric channel (BSC). In the literature, the general construction of OT has been known only for generalized erasure channels (GECs); for the BSC, we convert the channel to the binary symmetric erasure channel (BSEC), which is a special instance of the GEC, via alphabet extension and erasure emulation. In a previous paper by the authors, we derived an improved lower bound on the OT capacity of BSC by proposing a method to recursively emulate BSEC via interactive communication. In this paper, we introduce two new ideas of OT construction: (i) via ``polarization" and interactive communication, we recursively emulate GECs that are not necessarily a BSEC; (ii) in addition to the GEC emulation part, we also utilize interactive communication in the key agreement part of OT protocol. By these methods, we derive lower bounds on the OT capacity of BSC that are superior to the previous one for a certain range of crossover probabilities of the BSC. Via our new lower bound, we show that, at the crossover probability being zero, the slope of tangent of the OT capacity is unbounded.

In this paper, we propose a depth-aided color image inpainting method in the

quaternion domain, called depth-aided low-rank quaternion matrix completion

(D-LRQMC). In conventional quaternion-based inpainting techniques, the color

image is expressed as a quaternion matrix by using the three imaginary parts as

the color channels, whereas the real part is set to zero and has no

information. Our approach incorporates depth information as the real part of

the quaternion representations, leveraging the correlation between color and

depth to improve the result of inpainting. In the proposed method, we first

restore the observed image with the conventional LRQMC and estimate the depth

of the restored result. We then incorporate the estimated depth into the real

part of the observed image and perform LRQMC again. Simulation results

demonstrate that the proposed D-LRQMC can improve restoration accuracy and

visual quality for various images compared to the conventional LRQMC. These

results suggest the effectiveness of the depth information for color image

processing in quaternion domain.

Exoplanets in their infancy are ideal targets to probe the formation and

evolution history of planetary systems, including the planet migration and

atmospheric evolution and dissipation. In this paper, we present spectroscopic

observations and analyses of two planetary transits of K2-33b, which is known

to be one of the youngest transiting planets (age ≈8−11 Myr) around a

pre-main-sequence M-type star. Analysing K2-33's near-infrared spectra obtained

by the IRD instrument on Subaru, we investigate the spin-orbit angle and

transit-induced excess absorption for K2-33b. We attempt both classical

modelling of the Rossiter-McLaughlin (RM) effect and Doppler-shadow analyses

for the measurements of the projected stellar obliquity, finding a low angle of

λ=−6−58+61 deg (for RM analysis) and λ=−10−24+22

deg (for Doppler-shadow analysis). In the modelling of the RM effect, we allow

the planet-to-star radius ratio to float freely to take into account the

possible smaller radius in the near infrared, but the constraint we obtain

(Rp/Rs=0.037−0.017+0.013) is inconclusive due to the low

radial-velocity precision. Comparison spectra of K2-33 of the 1083 nm triplet

of metastable ortho-He I obtained in and out of the 2021 transit reveal excess

absorption that could be due to an escaping He-rich atmosphere. Under certain

conditions on planet mass and stellar XUV emission, the implied escape rate is

sufficient to remove an Earth-mass H/He in ∼1 Gyr, transforming this

object from a Neptune to a super-Earth.

There are no more papers matching your filters at the moment.