Universidade da Coruña

In this paper we extend the equal division and the equal surplus division

values for transferable utility cooperative games to the more general setup of

transferable utility cooperative games with level structures. In the case of

the equal surplus division value we propose three possible extensions, one of

which has already been described in the literature. We provide axiomatic

characterizations of the values considered, apply them to a particular cost

sharing problem and compare them in the framework of such an application.

31 Jan 2024

Gamification has been applied in software engineering to improve quality and

results by increasing people's motivation and engagement. A systematic mapping

has identified research gaps in the field, one of them being the difficulty of

creating an integrated gamified environment comprising all the tools of an

organization, since most existing gamified tools are custom developments or

prototypes. In this paper, we propose a gamification software architecture that

allows us to transform the work environment of a software organization into an

integrated gamified environment, i.e., the organization can maintain its tools,

and the rewards obtained by the users for their actions in different tools will

mount up. We developed a gamification engine based on our proposal, and we

carried out a case study in which we applied it in a real software development

company. The case study shows that the gamification engine has allowed the

company to create a gamified workplace by integrating custom developed tools

and off-the-shelf tools such as Redmine, TestLink, or JUnit, with the

gamification engine. Two main advantages can be highlighted: (i) our solution

allows the organization to maintain its current tools, and (ii) the rewards for

actions in any tool accumulate in a centralized gamified environment.

21 Aug 2024

The MARLIN kernel provides an efficient method for batched inference of 4-bit quantized Large Language Models (LLMs). It achieves near-optimal speedups across various batch sizes by effectively managing memory bandwidth, dequantization, and Tensor Core utilization.

07 Jun 2024

A lot of recent machine learning research papers have ``open-ended learning'' in their title. But very few of them attempt to define what they mean when using the term. Even worse, when looking more closely there seems to be no consensus on what distinguishes open-ended learning from related concepts such as continual learning, lifelong learning or autotelic learning. In this paper, we contribute to fixing this situation. After illustrating the genealogy of the concept and more recent perspectives about what it truly means, we outline that open-ended learning is generally conceived as a composite notion encompassing a set of diverse properties. In contrast with previous approaches, we propose to isolate a key elementary property of open-ended processes, which is to produce elements from time to time (e.g., observations, options, reward functions, and goals), over an infinite horizon, that are considered novel from an observer's perspective. From there, we build the notion of open-ended learning problems and focus in particular on the subset of open-ended goal-conditioned reinforcement learning problems in which agents can learn a growing repertoire of goal-driven skills. Finally, we highlight the work that remains to be performed to fill the gap between our elementary definition and the more involved notions of open-ended learning that developmental AI researchers may have in mind.

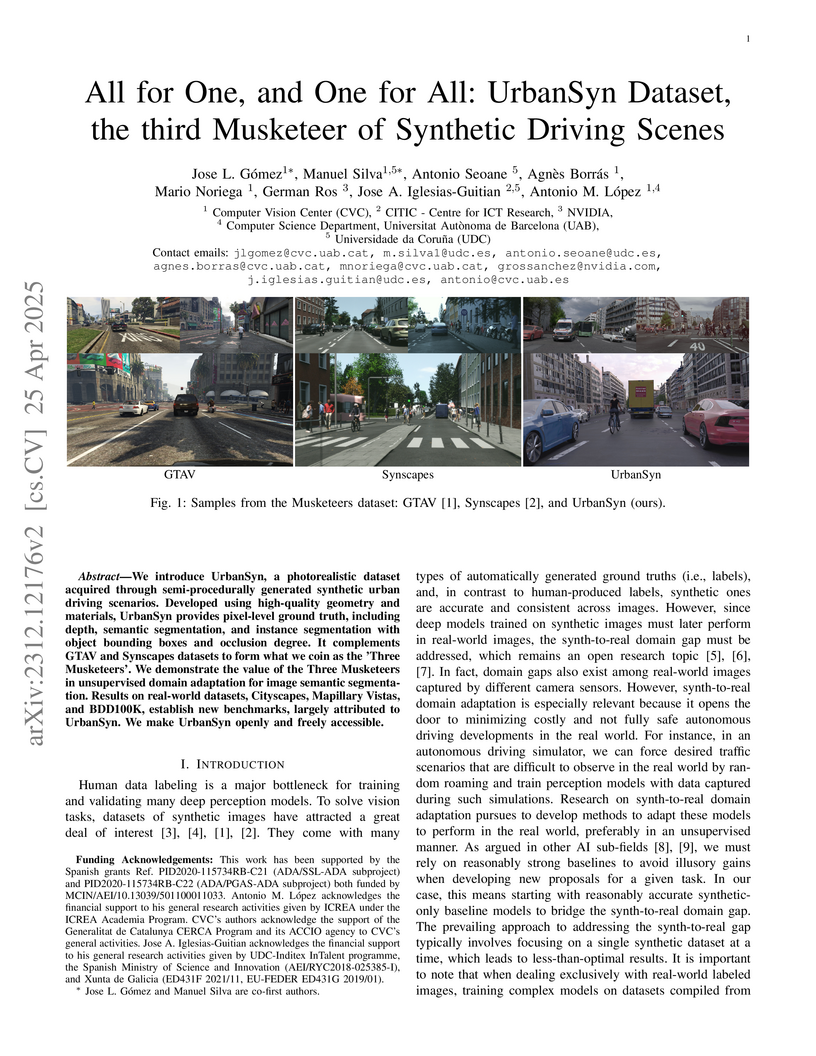

We introduce UrbanSyn, a photorealistic dataset acquired through

semi-procedurally generated synthetic urban driving scenarios. Developed using

high-quality geometry and materials, UrbanSyn provides pixel-level ground

truth, including depth, semantic segmentation, and instance segmentation with

object bounding boxes and occlusion degree. It complements GTAV and Synscapes

datasets to form what we coin as the 'Three Musketeers'. We demonstrate the

value of the Three Musketeers in unsupervised domain adaptation for image

semantic segmentation. Results on real-world datasets, Cityscapes, Mapillary

Vistas, and BDD100K, establish new benchmarks, largely attributed to UrbanSyn.

We make UrbanSyn openly and freely accessible (www.urbansyn.org).

13 May 2020

Robots are still limited to controlled conditions, that the robot designer knows with enough details to endow the robot with the appropriate models or behaviors. Learning algorithms add some flexibility with the ability to discover the appropriate behavior given either some demonstrations or a reward to guide its exploration with a reinforcement learning algorithm. Reinforcement learning algorithms rely on the definition of state and action spaces that define reachable behaviors. Their adaptation capability critically depends on the representations of these spaces: small and discrete spaces result in fast learning while large and continuous spaces are challenging and either require a long training period or prevent the robot from converging to an appropriate behavior. Beside the operational cycle of policy execution and the learning cycle, which works at a slower time scale to acquire new policies, we introduce the redescription cycle, a third cycle working at an even slower time scale to generate or adapt the required representations to the robot, its environment and the task. We introduce the challenges raised by this cycle and we present DREAM (Deferred Restructuring of Experience in Autonomous Machines), a developmental cognitive architecture to bootstrap this redescription process stage by stage, build new state representations with appropriate motivations, and transfer the acquired knowledge across domains or tasks or even across robots. We describe results obtained so far with this approach and end up with a discussion of the questions it raises in Neuroscience.

The RNASA-IMEDIR and CITIC research groups at Universidade da Coruña demonstrated the application of Tabular Transformer architectures for operating system (OS) fingerprinting, particularly the FT-Transformer. This model consistently outperformed classical machine learning methods and prior state-of-the-art techniques, achieving higher accuracy across diverse datasets and classification granularities.

Pretrained Transformer encoders are the dominant approach to sequence labeling. While some alternative architectures-such as xLSTMs, structured state-space models, diffusion models, and adversarial learning-have shown promise in language modeling, few have been applied to sequence labeling, and mostly on flat or simplified tasks. We study how these architectures adapt across tagging tasks that vary in structural complexity, label space, and token dependencies, with evaluation spanning multiple languages. We find that the strong performance previously observed in simpler settings does not always generalize well across languages or datasets, nor does it extend to more complex structured tasks.

We cast nested named entity recognition (NNER) as a sequence labeling task by leveraging prior work that linearizes constituency structures, effectively reducing the complexity of this structured prediction problem to straightforward token classification. By combining these constituency linearizations with pretrained encoders, our method captures nested entities while performing exactly n tagging actions. Our approach achieves competitive performance compared to less efficient systems, and it can be trained using any off-the-shelf sequence labeling library.

01 Sep 2025

Online harms are a growing problem in digital spaces, putting user safety at risk and reducing trust in social media platforms. One of the most persistent forms of harm is hate speech. To address this, we need tools that combine the speed and scale of automated systems with the judgment and insight of human moderators. These tools should not only find harmful content but also explain their decisions clearly, helping to build trust and understanding. In this paper, we present WATCHED, a chatbot designed to support content moderators in tackling hate speech. The chatbot is built as an Artificial Intelligence Agent system that uses Large Language Models along with several specialised tools. It compares new posts with real examples of hate speech and neutral content, uses a BERT-based classifier to help flag harmful messages, looks up slang and informal language using sources like Urban Dictionary, generates chain-of-thought reasoning, and checks platform guidelines to explain and support its decisions. This combination allows the chatbot not only to detect hate speech but to explain why content is considered harmful, grounded in both precedent and policy. Experimental results show that our proposed method surpasses existing state-of-the-art methods, reaching a macro F1 score of 0.91. Designed for moderators, safety teams, and researchers, the tool helps reduce online harms by supporting collaboration between AI and human oversight.

Retinal image registration, particularly for color fundus images, is a

challenging yet essential task with diverse clinical applications. Existing

registration methods for color fundus images typically rely on keypoints and

descriptors for alignment; however, a significant limitation is their reliance

on labeled data, which is particularly scarce in the medical domain.

In this work, we present a novel unsupervised registration pipeline that

entirely eliminates the need for labeled data. Our approach is based on the

principle that locations with distinctive descriptors constitute reliable

keypoints. This fully inverts the conventional state-of-the-art approach,

conditioning the detector on the descriptor rather than the opposite.

First, we propose an innovative descriptor learning method that operates

without keypoint detection or any labels, generating descriptors for arbitrary

locations in retinal images. Next, we introduce a novel, label-free keypoint

detector network which works by estimating descriptor performance directly from

the input image.

We validate our method through a comprehensive evaluation on four hold-out

datasets, demonstrating that our unsupervised descriptor outperforms

state-of-the-art supervised descriptors and that our unsupervised detector

significantly outperforms existing unsupervised detection methods. Finally, our

full registration pipeline achieves performance comparable to the leading

supervised methods, while not employing any labeled data. Additionally, the

label-free nature and design of our method enable direct adaptation to other

domains and modalities.

The rapid advancement of artificial intelligence is enabling the development of increasingly autonomous robots capable of operating beyond engineered factory settings and into the unstructured environments of human life. This shift raises a critical autonomy-alignment problem: how to ensure that a robot's autonomous learning focuses on acquiring knowledge and behaviours that serve human practical objectives while remaining aligned with broader human values (e.g., safety and ethics). This problem remains largely underexplored and lacks a unifying conceptual and formal framework. Here, we address one of its most challenging instances of the problem: open-ended learning (OEL) robots, which autonomously acquire new knowledge and skills through interaction with the environment, guided by intrinsic motivations and self-generated goals. We propose a computational framework, introduced qualitatively and then formalised, to guide the design of OEL architectures that balance autonomy with human control. At its core is the novel concept of purpose, which specifies what humans (designers or users) want the robot to learn, do, or avoid, independently of specific task domains. The framework decomposes the autonomy-alignment problem into four tractable sub-problems: the alignment of robot purposes (hardwired or learnt) with human purposes; the arbitration between multiple purposes; the grounding of abstract purposes into domain-specific goals; and the acquisition of competence to achieve those goals. The framework supports formal definitions of alignment across multiple cases and proofs of necessary and sufficient conditions under which alignment holds. Illustrative hypothetical scenarios showcase the applicability of the framework for guiding the development of purpose-aligned autonomous robots.

Depression is a pervasive mental health condition that affects hundreds of millions of individuals worldwide, yet many cases remain undiagnosed due to barriers in traditional clinical access and pervasive stigma. Social media platforms, and Reddit in particular, offer rich, user-generated narratives that can reveal early signs of depressive symptomatology. However, existing computational approaches often label entire posts simply as depressed or not depressed, without linking language to specific criteria from the DSM-5, the standard clinical framework for diagnosing depression. This limits both clinical relevance and interpretability. To address this gap, we introduce ReDSM5, a novel Reddit corpus comprising 1484 long-form posts, each exhaustively annotated at the sentence level by a licensed psychologist for the nine DSM-5 depression symptoms. For each label, the annotator also provides a concise clinical rationale grounded in DSM-5 methodology. We conduct an exploratory analysis of the collection, examining lexical, syntactic, and emotional patterns that characterize symptom expression in social media narratives. Compared to prior resources, ReDSM5 uniquely combines symptom-specific supervision with expert explanations, facilitating the development of models that not only detect depression but also generate human-interpretable reasoning. We establish baseline benchmarks for both multi-label symptom classification and explanation generation, providing reference results for future research on detection and interpretability.

Latent representations are critical for the performance and robustness of machine learning models, as they encode the essential features of data in a compact and informative manner. However, in vision tasks, these representations are often affected by noisy or irrelevant features, which can degrade the model's performance and generalization capabilities. This paper presents a novel approach for enhancing latent representations using unsupervised Dynamic Feature Selection (DFS). For each instance, the proposed method identifies and removes misleading or redundant information in images, ensuring that only the most relevant features contribute to the latent space. By leveraging an unsupervised framework, our approach avoids reliance on labeled data, making it broadly applicable across various domains and datasets. Experiments conducted on image datasets demonstrate that models equipped with unsupervised DFS achieve significant improvements in generalization performance across various tasks, including clustering and image generation, while incurring a minimal increase in the computational cost.

06 Oct 2025

University of Cincinnati University of AmsterdamUniversity of VictoriaHeidelberg University

University of AmsterdamUniversity of VictoriaHeidelberg University University of Notre Dame

University of Notre Dame University of California, IrvineCSIC

University of California, IrvineCSIC University of Texas at AustinUniversidade de LisboaWeizmann Institute of Science

University of Texas at AustinUniversidade de LisboaWeizmann Institute of Science Sorbonne UniversitéDeutsches Elektronen-Synchrotron DESY

Sorbonne UniversitéDeutsches Elektronen-Synchrotron DESY MIT

MIT Chalmers University of TechnologyUniversitat de ValènciaLomonosov Moscow State UniversityUniversity of BirminghamUniversidade de São PauloEUROPEAN ORGANIZATION FOR NUCLEAR RESEARCH (CERN)Ecole Polytechnique Fédérale de Lausanne (EPFL)Max-Planck-Institut für PhysikTaras Shevchenko National University of KyivUniversidade de Santiago de CompostelaUniversidade da CoruñaInstitute for Nuclear Research of the Russian Academy of SciencesNikhef, National Institute for Subatomic PhysicsKarlsruher Institut für Technologie (KIT)Laboratori Nazionali di Frascati dell’INFNUniversidad Cardenal Herrera-CEUCNRS/INP

Chalmers University of TechnologyUniversitat de ValènciaLomonosov Moscow State UniversityUniversity of BirminghamUniversidade de São PauloEUROPEAN ORGANIZATION FOR NUCLEAR RESEARCH (CERN)Ecole Polytechnique Fédérale de Lausanne (EPFL)Max-Planck-Institut für PhysikTaras Shevchenko National University of KyivUniversidade de Santiago de CompostelaUniversidade da CoruñaInstitute for Nuclear Research of the Russian Academy of SciencesNikhef, National Institute for Subatomic PhysicsKarlsruher Institut für Technologie (KIT)Laboratori Nazionali di Frascati dell’INFNUniversidad Cardenal Herrera-CEUCNRS/INP

University of AmsterdamUniversity of VictoriaHeidelberg University

University of AmsterdamUniversity of VictoriaHeidelberg University University of Notre Dame

University of Notre Dame University of California, IrvineCSIC

University of California, IrvineCSIC University of Texas at AustinUniversidade de LisboaWeizmann Institute of Science

University of Texas at AustinUniversidade de LisboaWeizmann Institute of Science Sorbonne UniversitéDeutsches Elektronen-Synchrotron DESY

Sorbonne UniversitéDeutsches Elektronen-Synchrotron DESY MIT

MIT Chalmers University of TechnologyUniversitat de ValènciaLomonosov Moscow State UniversityUniversity of BirminghamUniversidade de São PauloEUROPEAN ORGANIZATION FOR NUCLEAR RESEARCH (CERN)Ecole Polytechnique Fédérale de Lausanne (EPFL)Max-Planck-Institut für PhysikTaras Shevchenko National University of KyivUniversidade de Santiago de CompostelaUniversidade da CoruñaInstitute for Nuclear Research of the Russian Academy of SciencesNikhef, National Institute for Subatomic PhysicsKarlsruher Institut für Technologie (KIT)Laboratori Nazionali di Frascati dell’INFNUniversidad Cardenal Herrera-CEUCNRS/INP

Chalmers University of TechnologyUniversitat de ValènciaLomonosov Moscow State UniversityUniversity of BirminghamUniversidade de São PauloEUROPEAN ORGANIZATION FOR NUCLEAR RESEARCH (CERN)Ecole Polytechnique Fédérale de Lausanne (EPFL)Max-Planck-Institut für PhysikTaras Shevchenko National University of KyivUniversidade de Santiago de CompostelaUniversidade da CoruñaInstitute for Nuclear Research of the Russian Academy of SciencesNikhef, National Institute for Subatomic PhysicsKarlsruher Institut für Technologie (KIT)Laboratori Nazionali di Frascati dell’INFNUniversidad Cardenal Herrera-CEUCNRS/INPWith the establishment and maturation of the experimental programs searching for new physics with sizeable couplings at the LHC, there is an increasing interest in the broader particle and astrophysics community for exploring the physics of light and feebly-interacting particles as a paradigm complementary to a New Physics sector at the TeV scale and beyond. FIPs@LHCb continues the successful series of the FIPs workshops, FIPs 2020 and FIPs 2022. The main focus of the workshop was to explore the LHCb potential to search for FIPs thanks to the new software trigger deployed during the recent upgrade. Equally important goals of the workshop were to update the available parameter space in the commonly used FIPs benchmarks by including recent results from the high energy physics community and to discuss recent theory progress necessary for a more accurate definition of observables related to FIP benchmarks. This document presents the summary of the talks presented at the workshops and the outcome of subsequent discussions.

Automatic detection of hate and abusive language is essential to combat its online spread. Moreover, recognising and explaining hate speech serves to educate people about its negative effects. However, most current detection models operate as black boxes, lacking interpretability and explainability. In this context, Large Language Models (LLMs) have proven effective for hate speech detection and to promote interpretability. Nevertheless, they are computationally costly to run. In this work, we propose distilling big language models by using Chain-of-Thought to extract explanations that support the hate speech classification task. Having small language models for these tasks will contribute to their use in operational settings. In this paper, we demonstrate that distilled models deliver explanations of the same quality as larger models while surpassing them in classification performance. This dual capability, classifying and explaining, advances hate speech detection making it more affordable, understandable and actionable.

ETH Zurich

ETH Zurich CNRS

CNRS University of Cambridge

University of Cambridge Tel Aviv University

Tel Aviv University University College LondonUniversity of EdinburghUniversidade de LisboaTechnische Universität Dresden

University College LondonUniversity of EdinburghUniversidade de LisboaTechnische Universität Dresden KU LeuvenRadboud UniversityUniversität HeidelbergUniversity of HelsinkiUppsala University

KU LeuvenRadboud UniversityUniversität HeidelbergUniversity of HelsinkiUppsala University University of Arizona

University of Arizona Sorbonne Université

Sorbonne Université Leiden UniversityUniversity of GenevaUniversity of ViennaUniversitat de BarcelonaUniversity of LeicesterObservatoire de ParisUniversité de LiègeINAF - Osservatorio Astrofisico di TorinoUniversité Côte d’Azur

Leiden UniversityUniversity of GenevaUniversity of ViennaUniversitat de BarcelonaUniversity of LeicesterObservatoire de ParisUniversité de LiègeINAF - Osservatorio Astrofisico di TorinoUniversité Côte d’Azur University of GroningenClemson UniversityLund UniversityUniversidad Nacional Autónoma de MéxicoSwinburne University of TechnologyUniversität HamburgThales Alenia Space

University of GroningenClemson UniversityLund UniversityUniversidad Nacional Autónoma de MéxicoSwinburne University of TechnologyUniversität HamburgThales Alenia Space European Southern ObservatoryLaboratoire d’Astrophysique de BordeauxSISSACNESUniversity of CalgaryUniversidad de La LagunaIMT AtlantiqueObservatoire de la Côte d’AzurEuropean Space Astronomy Centre (ESAC)Kapteyn Astronomical InstituteObservatoire astronomique de StrasbourgNational Observatory of AthensQueen's University BelfastUniversidade de Santiago de CompostelaINAF – Osservatorio Astronomico di RomaInstituto de Astrofísica de Canarias (IAC)Universidade da CoruñaINAF – Osservatorio Astronomico d’AbruzzoSRON Netherlands Institute for Space ResearchINAF - Osservatorio Astrofisico di CataniaUniversidade de VigoRoyal Observatory of BelgiumINAF- Osservatorio Astronomico di CagliariLeibniz-Institut für Astrophysik Potsdam (AIP)F.R.S.-FNRSTelespazio FRANCEAirbus Defence and SpaceInstituto Galego de Física de Altas Enerxías (IGFAE)Universitat Politècnica de Catalunya-BarcelonaTechSTAR InstituteEuropean Space Agency (ESA)Lund ObservatoryGeneva University HospitalLeiden ObservatoryFinnish Geospatial Research Institute FGICGIAgenzia Spaziale Italiana (ASI)Mullard Space Science LaboratoryInstitut de Ciències del Cosmos (ICCUB)Aurora TechnologyCentro de Supercomputación de Galicia (CESGA)Institut UTINAMGEPISERCOInstitut d’Astronomie et d’AstrophysiqueGMV Innovating Solutions S.L.Space Science Data Center (SSDC)Wallonia Space Centre (CSW)Indra Sistemas S.A.Universit PSL* National and Kapodistrian University of AthensUniversit

de ToulouseUniversit

Bourgogne Franche-ComtUniversit

Libre de BruxellesIstituto Nazionale di Fisica Nucleare INFNMax Planck Institut fr AstronomieUniversit

de LorraineUniversit

de BordeauxUniversit

de StrasbourgUniversit

di PadovaINAF

Osservatorio Astrofisico di ArcetriINAF

Osservatorio Astronomico di PadovaAstronomisches Rechen–InstitutINAF Osservatorio di Astrofisica e Scienza dello Spazio di Bologna

European Southern ObservatoryLaboratoire d’Astrophysique de BordeauxSISSACNESUniversity of CalgaryUniversidad de La LagunaIMT AtlantiqueObservatoire de la Côte d’AzurEuropean Space Astronomy Centre (ESAC)Kapteyn Astronomical InstituteObservatoire astronomique de StrasbourgNational Observatory of AthensQueen's University BelfastUniversidade de Santiago de CompostelaINAF – Osservatorio Astronomico di RomaInstituto de Astrofísica de Canarias (IAC)Universidade da CoruñaINAF – Osservatorio Astronomico d’AbruzzoSRON Netherlands Institute for Space ResearchINAF - Osservatorio Astrofisico di CataniaUniversidade de VigoRoyal Observatory of BelgiumINAF- Osservatorio Astronomico di CagliariLeibniz-Institut für Astrophysik Potsdam (AIP)F.R.S.-FNRSTelespazio FRANCEAirbus Defence and SpaceInstituto Galego de Física de Altas Enerxías (IGFAE)Universitat Politècnica de Catalunya-BarcelonaTechSTAR InstituteEuropean Space Agency (ESA)Lund ObservatoryGeneva University HospitalLeiden ObservatoryFinnish Geospatial Research Institute FGICGIAgenzia Spaziale Italiana (ASI)Mullard Space Science LaboratoryInstitut de Ciències del Cosmos (ICCUB)Aurora TechnologyCentro de Supercomputación de Galicia (CESGA)Institut UTINAMGEPISERCOInstitut d’Astronomie et d’AstrophysiqueGMV Innovating Solutions S.L.Space Science Data Center (SSDC)Wallonia Space Centre (CSW)Indra Sistemas S.A.Universit PSL* National and Kapodistrian University of AthensUniversit

de ToulouseUniversit

Bourgogne Franche-ComtUniversit

Libre de BruxellesIstituto Nazionale di Fisica Nucleare INFNMax Planck Institut fr AstronomieUniversit

de LorraineUniversit

de BordeauxUniversit

de StrasbourgUniversit

di PadovaINAF

Osservatorio Astrofisico di ArcetriINAF

Osservatorio Astronomico di PadovaAstronomisches Rechen–InstitutINAF Osservatorio di Astrofisica e Scienza dello Spazio di BolognaWe produce a clean and well-characterised catalogue of objects within 100\,pc

of the Sun from the \G\ Early Data Release 3. We characterise the catalogue

through comparisons to the full data release, external catalogues, and

simulations. We carry out a first analysis of the science that is possible with

this sample to demonstrate its potential and best practices for its use.

The selection of objects within 100\,pc from the full catalogue used selected

training sets, machine-learning procedures, astrometric quantities, and

solution quality indicators to determine a probability that the astrometric

solution is reliable. The training set construction exploited the astrometric

data, quality flags, and external photometry. For all candidates we calculated

distance posterior probability densities using Bayesian procedures and mock

catalogues to define priors. Any object with reliable astrometry and a non-zero

probability of being within 100\,pc is included in the catalogue.

We have produced a catalogue of \NFINAL\ objects that we estimate contains at

least 92\% of stars of stellar type M9 within 100\,pc of the Sun. We estimate

that 9\% of the stars in this catalogue probably lie outside 100\,pc, but when

the distance probability function is used, a correct treatment of this

contamination is possible. We produced luminosity functions with a high

signal-to-noise ratio for the main-sequence stars, giants, and white dwarfs. We

examined in detail the Hyades cluster, the white dwarf population, and

wide-binary systems and produced candidate lists for all three samples. We

detected local manifestations of several streams, superclusters, and halo

objects, in which we identified 12 members of \G\ Enceladus. We present the

first direct parallaxes of five objects in multiple systems within 10\,pc of

the Sun.

We conduct a quantitative analysis contrasting human-written English news text with comparable large language model (LLM) output from six different LLMs that cover three different families and four sizes in total. Our analysis spans several measurable linguistic dimensions, including morphological, syntactic, psychometric, and sociolinguistic aspects. The results reveal various measurable differences between human and AI-generated texts. Human texts exhibit more scattered sentence length distributions, more variety of vocabulary, a distinct use of dependency and constituent types, shorter constituents, and more optimized dependency distances. Humans tend to exhibit stronger negative emotions (such as fear and disgust) and less joy compared to text generated by LLMs, with the toxicity of these models increasing as their size grows. LLM outputs use more numbers, symbols and auxiliaries (suggesting objective language) than human texts, as well as more pronouns. The sexist bias prevalent in human text is also expressed by LLMs, and even magnified in all of them but one. Differences between LLMs and humans are larger than between LLMs.

Visual explanations based on user-uploaded images are an effective and

self-contained approach to provide transparency to Recommender Systems (RS),

but intrinsic limitations of data used in this explainability paradigm cause

existing approaches to use bad quality training data that is highly sparse and

suffers from labelling noise. Popular training enrichment approaches like model

enlargement or massive data gathering are expensive and environmentally

unsustainable, thus we seek to provide better visual explanations to RS

aligning with the principles of Responsible AI. In this work, we research the

intersection of effective and sustainable training enrichment strategies for

visual-based RS explainability models by developing three novel strategies that

focus on training Data Quality: 1) selection of reliable negative training

examples using Positive-unlabelled Learning, 2) transform-based data

augmentation, and 3) text-to-image generative-based data augmentation. The

integration of these strategies in three state-of-the-art explainability models

increases 5% the performance in relevant ranking metrics of these visual-based

RS explainability models without penalizing their practical long-term

sustainability, as tested in multiple real-world restaurant recommendation

explanation datasets.

We present HEAD-QA, a multi-choice question answering testbed to encourage research on complex reasoning. The questions come from exams to access a specialized position in the Spanish healthcare system, and are challenging even for highly specialized humans. We then consider monolingual (Spanish) and cross-lingual (to English) experiments with information retrieval and neural techniques. We show that: (i) HEAD-QA challenges current methods, and (ii) the results lag well behind human performance, demonstrating its usefulness as a benchmark for future work.

There are no more papers matching your filters at the moment.