University of Antwerp

Large Language Models (LLMs) are increasingly proposed as near-autonomous artificial intelligence (AI) agents capable of making everyday decisions on behalf of humans. Although LLMs perform well on many technical tasks, their behaviour in personal decision-making remains less understood. Previous studies have assessed their rationality and moral alignment with human decisions. However, the behaviour of AI assistants in scenarios where financial rewards are at odds with user comfort has not yet been thoroughly explored. In this paper, we tackle this problem by quantifying the prices assigned by multiple LLMs to a series of user discomforts: additional walking, waiting, hunger and pain. We uncover several key concerns that strongly question the prospect of using current LLMs as decision-making assistants: (1) a large variance in responses between LLMs, (2) within a single LLM, responses show fragility to minor variations in prompt phrasing (e.g., reformulating the question in the first person can considerably alter the decision), (3) LLMs can accept unreasonably low rewards for major inconveniences (e.g., 1 Euro to wait 10 hours), and (4) LLMs can reject monetary gains where no discomfort is imposed (e.g., 1,000 Euro to wait 0 minutes). These findings emphasize the need for scrutiny of how LLMs value human inconvenience, particularly as we move toward applications where such cash-versus-comfort trade-offs are made on users' behalf.

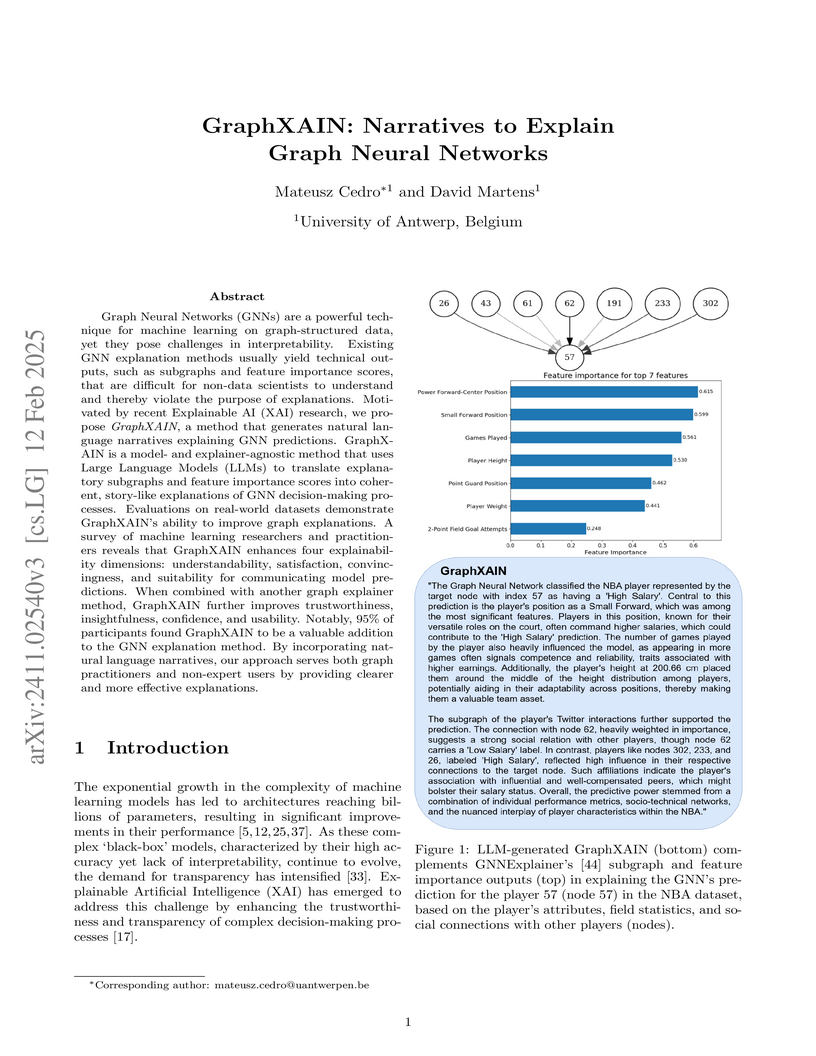

GraphXAIN, developed at the University of Antwerp, provides a general framework for generating natural language narratives to explain Graph Neural Network predictions. It translates technical GNN explainer outputs into coherent, story-like explanations, enhancing the understandability, trustworthiness, and communicability of GNN models for both technical and non-technical users.

University of Toronto

University of Toronto University of AlbertaTufts University

University of AlbertaTufts University Aalto UniversityNational Institute of Standards and TechnologyUniversity of JohannesburgCzech Institute of Informatics, Robotics and Cybernetics, Czech Technical UniversityUniversity of AntwerpFriedrich-Schiller-University JenaWageningen University & ResearchInstitute of Organic Chemistry and Biochemistry of the Czech Academy of SciencesAlberta Machine Intelligence InstituteUniversity of Applied Sciences, DüsseldorfEawag: Swiss Federal Institute of Aquatic Science and TechnologyBright Giant GmbH

Aalto UniversityNational Institute of Standards and TechnologyUniversity of JohannesburgCzech Institute of Informatics, Robotics and Cybernetics, Czech Technical UniversityUniversity of AntwerpFriedrich-Schiller-University JenaWageningen University & ResearchInstitute of Organic Chemistry and Biochemistry of the Czech Academy of SciencesAlberta Machine Intelligence InstituteUniversity of Applied Sciences, DüsseldorfEawag: Swiss Federal Institute of Aquatic Science and TechnologyBright Giant GmbHThe discovery and identification of molecules in biological and environmental

samples is crucial for advancing biomedical and chemical sciences. Tandem mass

spectrometry (MS/MS) is the leading technique for high-throughput elucidation

of molecular structures. However, decoding a molecular structure from its mass

spectrum is exceptionally challenging, even when performed by human experts. As

a result, the vast majority of acquired MS/MS spectra remain uninterpreted,

thereby limiting our understanding of the underlying (bio)chemical processes.

Despite decades of progress in machine learning applications for predicting

molecular structures from MS/MS spectra, the development of new methods is

severely hindered by the lack of standard datasets and evaluation protocols. To

address this problem, we propose MassSpecGym -- the first comprehensive

benchmark for the discovery and identification of molecules from MS/MS data.

Our benchmark comprises the largest publicly available collection of

high-quality labeled MS/MS spectra and defines three MS/MS annotation

challenges: de novo molecular structure generation, molecule retrieval, and

spectrum simulation. It includes new evaluation metrics and a

generalization-demanding data split, therefore standardizing the MS/MS

annotation tasks and rendering the problem accessible to the broad machine

learning community. MassSpecGym is publicly available at

this https URL

Researchers at ETH Zürich and collaborators introduce SimpleStories, a parameterized synthetic text generation framework and dataset that improves upon TinyStories, yielding more diverse and labeled data for interpretability studies. Models trained on SimpleStories with a custom tokenizer achieve better performance on tasks like coherence and grammar with fewer parameters than prior benchmarks.

Recently, many works studied the expressive power of graph neural networks (GNNs) by linking it to the 1-dimensional Weisfeiler--Leman algorithm (1-WL). Here, the 1-WL is a well-studied heuristic for the graph isomorphism problem, which iteratively colors or partitions a graph's vertex set. While this connection has led to significant advances in understanding and enhancing GNNs' expressive power, it does not provide insights into their generalization performance, i.e., their ability to make meaningful predictions beyond the training set. In this paper, we study GNNs' generalization ability through the lens of Vapnik--Chervonenkis (VC) dimension theory in two settings, focusing on graph-level predictions. First, when no upper bound on the graphs' order is known, we show that the bitlength of GNNs' weights tightly bounds their VC dimension. Further, we derive an upper bound for GNNs' VC dimension using the number of colors produced by the 1-WL. Secondly, when an upper bound on the graphs' order is known, we show a tight connection between the number of graphs distinguishable by the 1-WL and GNNs' VC dimension. Our empirical study confirms the validity of our theoretical findings.

Persistent homology (PH) is one of the most popular methods in Topological

Data Analysis. Even though PH has been used in many different types of

applications, the reasons behind its success remain elusive; in particular, it

is not known for which classes of problems it is most effective, or to what

extent it can detect geometric or topological features. The goal of this work

is to identify some types of problems where PH performs well or even better

than other methods in data analysis. We consider three fundamental shape

analysis tasks: the detection of the number of holes, curvature and convexity

from 2D and 3D point clouds sampled from shapes. Experiments demonstrate that

PH is successful in these tasks, outperforming several baselines, including

PointNet, an architecture inspired precisely by the properties of point clouds.

In addition, we observe that PH remains effective for limited computational

resources and limited training data, as well as out-of-distribution test data,

including various data transformations and noise. For convexity detection, we

provide a theoretical guarantee that PH is effective for this task in

Rd, and demonstrate the detection of a convexity measure on the

FLAVIA data set of plant leaf images. Due to the crucial role of shape

classification in understanding mathematical and physical structures and

objects, and in many applications, the findings of this work will provide some

knowledge about the types of problems that are appropriate for PH, so that it

can - to borrow the words from Wigner 1960 - ``remain valid in future research,

and extend, to our pleasure", but to our lesser bafflement, to a variety of

applications.

In many AI applications today, the predominance of black-box machine learning models, due to their typically higher accuracy, amplifies the need for Explainable AI (XAI). Existing XAI approaches, such as the widely used SHAP values or counterfactual (CF) explanations, are arguably often too technical for users to understand and act upon. To enhance comprehension of explanations of AI decisions and the overall user experience, we introduce XAIstories, which leverage Large Language Models to provide narratives about how AI predictions are made: SHAPstories do so based on SHAP explanations, while CFstories do so for CF explanations. We study the impact of our approach on users' experience and understanding of AI predictions. Our results are striking: over 90% of the surveyed general audience finds the narratives generated by SHAPstories convincing. Data scientists primarily see the value of SHAPstories in communicating explanations to a general audience, with 83% of data scientists indicating they are likely to use SHAPstories for this purpose. In an image classification setting, CFstories are considered more or equally convincing as the users' own crafted stories by more than 75% of the participants. CFstories additionally bring a tenfold speed gain in creating a narrative. We also find that SHAPstories help users to more accurately summarize and understand AI decisions, in a credit scoring setting we test, correctly answering comprehension questions significantly more often than they do when only SHAP values are provided. The results thereby suggest that XAIstories may significantly help explaining and understanding AI predictions, ultimately supporting better decision-making in various applications.

The new SysMLv2 adds mechanisms for the built-in specification of domain-specific concepts and language extensions. This feature promises to facilitate the creation of Domain-Specific Languages (DSLs) and interfacing with existing system descriptions and technical designs. In this paper, we review these features and evaluate SysMLv2's capabilities using concrete use cases. We develop DarTwin DSL, a DSL that formalizes the existing DarTwin notation for Digital Twin (DT) evolution, through SysMLv2, thereby supposedly enabling the wide application of DarTwin's evolution templates using any SysMLv2 tool. We demonstrate DarTwin DSL, but also point out limitations in the currently available tooling of SysMLv2 in terms of graphical notation capabilities. This work contributes to the growing field of Model-Driven Engineering (MDE) for DTs and combines it with the release of SysMLv2, thus integrating a systematic approach with DT evolution management in systems engineering.

Researchers at the University of Antwerp developed WINOWHAT, a paraphrased version of the WinoGrande benchmark for common sense reasoning, revealing that large language models consistently exhibit reduced performance on linguistically varied inputs. This indicates that current common sense capabilities in LLMs are likely overestimated due to their reliance on surface-level patterns or dataset artifacts rather than robust generalization.

A mechanistic understanding of how MLPs do computation in deep neural networks remains elusive. Current interpretability work can extract features from hidden activations over an input dataset but generally cannot explain how MLP weights construct features. One challenge is that element-wise nonlinearities introduce higher-order interactions and make it difficult to trace computations through the MLP layer. In this paper, we analyze bilinear MLPs, a type of Gated Linear Unit (GLU) without any element-wise nonlinearity that nevertheless achieves competitive performance. Bilinear MLPs can be fully expressed in terms of linear operations using a third-order tensor, allowing flexible analysis of the weights. Analyzing the spectra of bilinear MLP weights using eigendecomposition reveals interpretable low-rank structure across toy tasks, image classification, and language modeling. We use this understanding to craft adversarial examples, uncover overfitting, and identify small language model circuits directly from the weights alone. Our results demonstrate that bilinear layers serve as an interpretable drop-in replacement for current activation functions and that weight-based interpretability is viable for understanding deep-learning models.

This paper resolves the expressivity-generalization paradox in Graph Neural Networks by proposing a theoretical framework that connects generalization to the variance in graph structures. It reveals that increased expressivity can improve or worsen generalization depending on whether it primarily enhances inter-class separation or increases intra-class variance.

24 Sep 2024

University of Oslo University of CambridgeUniversity of Victoria

University of CambridgeUniversity of Victoria University of ManchesterUniversity of Zurich

University of ManchesterUniversity of Zurich University of Southern California

University of Southern California University College London

University College London University of Oxford

University of Oxford University of Science and Technology of China

University of Science and Technology of China University of California, Irvine

University of California, Irvine University of CopenhagenUniversity of MelbourneUniversity of Edinburgh

University of CopenhagenUniversity of MelbourneUniversity of Edinburgh INFNUniversity of Warsaw

INFNUniversity of Warsaw ETH Zürich

ETH Zürich Texas A&M University

Texas A&M University University of British Columbia

University of British Columbia University of Texas at Austin

University of Texas at Austin Johns Hopkins University

Johns Hopkins University Université Paris-Saclay

Université Paris-Saclay Stockholm University

Stockholm University University of ArizonaUniversité de GenèveUniversity of Massachusetts Amherst

University of ArizonaUniversité de GenèveUniversity of Massachusetts Amherst University of SydneyUniversity of ViennaUniversity of PortsmouthUniversity of IcelandUniversity of SussexObservatoire de ParisUniversità di Trieste

University of SydneyUniversity of ViennaUniversity of PortsmouthUniversity of IcelandUniversity of SussexObservatoire de ParisUniversità di Trieste University of GroningenInstituto de Astrofísica e Ciências do EspaçoINAFNiels Bohr InstituteUniversity of JyväskyläJet Propulsion LaboratoryUniversidade Federal do Rio Grande do NorteUniversity of LiègeInstituto de Astrofísica de CanariasUniversity of NottinghamSISSAPontificia Universidad Católica de ChileUniversità di Napoli Federico IIUniversity of AntwerpUniversity of Hawai’iUniversity of KwaZulu-NatalLudwig-Maximilians-UniversitätUniversidad de Los AndesUniversity of the Western CapeLaboratoire d’Astrophysique de MarseilleUniversidad de AtacamaMax-Planck Institut für extraterrestrische PhysikInstitut d’Estudis Espacials de Catalunya (IEEC)University of DurhamUniversità di RomaNational Astronomical Observatories of ChinaUniversity of CardiffUniversit

Grenoble AlpesUniversit

de ToulouseUniversit

de BordeauxUniversit

Lyon 1Universit

Paris CitUniversit

di PisaUniversit

di PadovaUniversit

de MontpellierUniversit

Di Bologna

University of GroningenInstituto de Astrofísica e Ciências do EspaçoINAFNiels Bohr InstituteUniversity of JyväskyläJet Propulsion LaboratoryUniversidade Federal do Rio Grande do NorteUniversity of LiègeInstituto de Astrofísica de CanariasUniversity of NottinghamSISSAPontificia Universidad Católica de ChileUniversità di Napoli Federico IIUniversity of AntwerpUniversity of Hawai’iUniversity of KwaZulu-NatalLudwig-Maximilians-UniversitätUniversidad de Los AndesUniversity of the Western CapeLaboratoire d’Astrophysique de MarseilleUniversidad de AtacamaMax-Planck Institut für extraterrestrische PhysikInstitut d’Estudis Espacials de Catalunya (IEEC)University of DurhamUniversità di RomaNational Astronomical Observatories of ChinaUniversity of CardiffUniversit

Grenoble AlpesUniversit

de ToulouseUniversit

de BordeauxUniversit

Lyon 1Universit

Paris CitUniversit

di PisaUniversit

di PadovaUniversit

de MontpellierUniversit

Di Bologna

University of CambridgeUniversity of Victoria

University of CambridgeUniversity of Victoria University of ManchesterUniversity of Zurich

University of ManchesterUniversity of Zurich University of Southern California

University of Southern California University College London

University College London University of Oxford

University of Oxford University of Science and Technology of China

University of Science and Technology of China University of California, Irvine

University of California, Irvine University of CopenhagenUniversity of MelbourneUniversity of Edinburgh

University of CopenhagenUniversity of MelbourneUniversity of Edinburgh INFNUniversity of Warsaw

INFNUniversity of Warsaw ETH Zürich

ETH Zürich Texas A&M University

Texas A&M University University of British Columbia

University of British Columbia University of Texas at Austin

University of Texas at Austin Johns Hopkins University

Johns Hopkins University Université Paris-Saclay

Université Paris-Saclay Stockholm University

Stockholm University University of ArizonaUniversité de GenèveUniversity of Massachusetts Amherst

University of ArizonaUniversité de GenèveUniversity of Massachusetts Amherst University of SydneyUniversity of ViennaUniversity of PortsmouthUniversity of IcelandUniversity of SussexObservatoire de ParisUniversità di Trieste

University of SydneyUniversity of ViennaUniversity of PortsmouthUniversity of IcelandUniversity of SussexObservatoire de ParisUniversità di Trieste University of GroningenInstituto de Astrofísica e Ciências do EspaçoINAFNiels Bohr InstituteUniversity of JyväskyläJet Propulsion LaboratoryUniversidade Federal do Rio Grande do NorteUniversity of LiègeInstituto de Astrofísica de CanariasUniversity of NottinghamSISSAPontificia Universidad Católica de ChileUniversità di Napoli Federico IIUniversity of AntwerpUniversity of Hawai’iUniversity of KwaZulu-NatalLudwig-Maximilians-UniversitätUniversidad de Los AndesUniversity of the Western CapeLaboratoire d’Astrophysique de MarseilleUniversidad de AtacamaMax-Planck Institut für extraterrestrische PhysikInstitut d’Estudis Espacials de Catalunya (IEEC)University of DurhamUniversità di RomaNational Astronomical Observatories of ChinaUniversity of CardiffUniversit

Grenoble AlpesUniversit

de ToulouseUniversit

de BordeauxUniversit

Lyon 1Universit

Paris CitUniversit

di PisaUniversit

di PadovaUniversit

de MontpellierUniversit

Di Bologna

University of GroningenInstituto de Astrofísica e Ciências do EspaçoINAFNiels Bohr InstituteUniversity of JyväskyläJet Propulsion LaboratoryUniversidade Federal do Rio Grande do NorteUniversity of LiègeInstituto de Astrofísica de CanariasUniversity of NottinghamSISSAPontificia Universidad Católica de ChileUniversità di Napoli Federico IIUniversity of AntwerpUniversity of Hawai’iUniversity of KwaZulu-NatalLudwig-Maximilians-UniversitätUniversidad de Los AndesUniversity of the Western CapeLaboratoire d’Astrophysique de MarseilleUniversidad de AtacamaMax-Planck Institut für extraterrestrische PhysikInstitut d’Estudis Espacials de Catalunya (IEEC)University of DurhamUniversità di RomaNational Astronomical Observatories of ChinaUniversity of CardiffUniversit

Grenoble AlpesUniversit

de ToulouseUniversit

de BordeauxUniversit

Lyon 1Universit

Paris CitUniversit

di PisaUniversit

di PadovaUniversit

de MontpellierUniversit

Di BolognaThe current standard model of cosmology successfully describes a variety of measurements, but the nature of its main ingredients, dark matter and dark energy, remains unknown. Euclid is a medium-class mission in the Cosmic Vision 2015-2025 programme of the European Space Agency (ESA) that will provide high-resolution optical imaging, as well as near-infrared imaging and spectroscopy, over about 14,000 deg^2 of extragalactic sky. In addition to accurate weak lensing and clustering measurements that probe structure formation over half of the age of the Universe, its primary probes for cosmology, these exquisite data will enable a wide range of science. This paper provides a high-level overview of the mission, summarising the survey characteristics, the various data-processing steps, and data products. We also highlight the main science objectives and expected performance.

10 Aug 2023

Within academia and industry, there has been a need for expansive simulation frameworks that include model-based simulation of sensors, mobile vehicles, and the environment around them. To this end, the modular, real-time, and open-source AirSim framework has been a popular community-built system that fulfills some of those needs. However, the framework required adding systems to serve some complex industrial applications, including designing and testing new sensor modalities, Simultaneous Localization And Mapping (SLAM), autonomous navigation algorithms, and transfer learning with machine learning models. In this work, we discuss the modification and additions to our open-source version of the AirSim simulation framework, including new sensor modalities, vehicle types, and methods to generate realistic environments with changeable objects procedurally. Furthermore, we show the various applications and use cases the framework can serve.

22 Oct 2025

Abrikosov vortices, where the superconducting gap is completely suppressed in the core, are dissipative, semi-classical entities that impact applications from high-current-density wires to superconducting quantum devices. In contrast, we present evidence that vortices trapped in granular superconducting films can behave as two-level systems, exhibiting microsecond-range quantum coherence and energy relaxation times that reach fractions of a millisecond. These findings support recent theoretical modeling of superconductors with granularity on the scale of the coherence length as tunnel junction networks, resulting in gapped vortices. Using the tools of circuit quantum electrodynamics, we perform coherent manipulation and quantum non-demolition readout of vortex states in granular aluminum microwave resonators, heralding new directions for quantum information processing, materials characterization, and sensing.

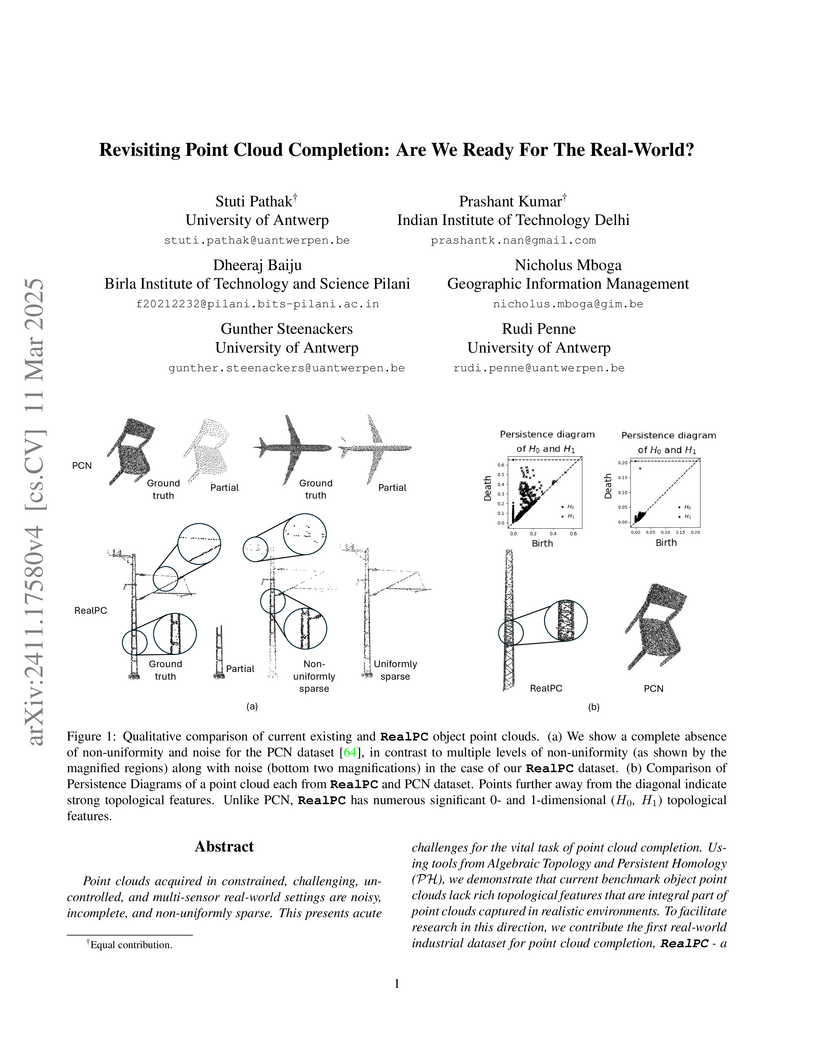

Researchers introduced RealPC, the first paired industrial point cloud completion dataset, revealing that real-world data possesses distinct topological features and noise characteristics compared to synthetic benchmarks. Their work integrates Topological Data Analysis and proposes BOSHNet, a network that leverages sampled ground truth topological backbones to improve completion accuracy on complex real-world point clouds.

Deep learning dominates image classification tasks, yet understanding how models arrive at predictions remains a challenge. Much research focuses on local explanations of individual predictions, such as saliency maps, which visualise the influence of specific pixels on a model's prediction. However, reviewing many of these explanations to identify recurring patterns is infeasible, while global methods often oversimplify and miss important local behaviours. To address this, we propose Segment Attribution Tables (SATs), a method for summarising local saliency explanations into (semi-)global insights. SATs take image segments (such as "eyes" in Chihuahuas) and leverage saliency maps to quantify their influence. These segments highlight concepts the model relies on across instances and reveal spurious correlations, such as reliance on backgrounds or watermarks, even when out-of-distribution test performance sees little change. SATs can explain any classifier for which a form of saliency map can be produced, using segmentation maps that provide named segments. SATs bridge the gap between oversimplified global summaries and overly detailed local explanations, offering a practical tool for analysing and debugging image classifiers.

Leipzig University New York UniversityLMU MunichTU Dortmund University

New York UniversityLMU MunichTU Dortmund University KU LeuvenHumboldt-Universität zu Berlin

KU LeuvenHumboldt-Universität zu Berlin Karlsruhe Institute of TechnologyUniversity of RegensburgNational Tsing-Hua UniversityUniversity of AntwerpINSEADUniversity of BayreuthCopenhagen Business SchoolErasmus UniversityGoethe-University, Frankfurt

Karlsruhe Institute of TechnologyUniversity of RegensburgNational Tsing-Hua UniversityUniversity of AntwerpINSEADUniversity of BayreuthCopenhagen Business SchoolErasmus UniversityGoethe-University, Frankfurt

New York UniversityLMU MunichTU Dortmund University

New York UniversityLMU MunichTU Dortmund University KU LeuvenHumboldt-Universität zu Berlin

KU LeuvenHumboldt-Universität zu Berlin Karlsruhe Institute of TechnologyUniversity of RegensburgNational Tsing-Hua UniversityUniversity of AntwerpINSEADUniversity of BayreuthCopenhagen Business SchoolErasmus UniversityGoethe-University, Frankfurt

Karlsruhe Institute of TechnologyUniversity of RegensburgNational Tsing-Hua UniversityUniversity of AntwerpINSEADUniversity of BayreuthCopenhagen Business SchoolErasmus UniversityGoethe-University, FrankfurtUnderstanding the decisions made and actions taken by increasingly complex AI

system remains a key challenge. This has led to an expanding field of research

in explainable artificial intelligence (XAI), highlighting the potential of

explanations to enhance trust, support adoption, and meet regulatory standards.

However, the question of what constitutes a "good" explanation is dependent on

the goals, stakeholders, and context. At a high level, psychological insights

such as the concept of mental model alignment can offer guidance, but success

in practice is challenging due to social and technical factors. As a result of

this ill-defined nature of the problem, explanations can be of poor quality

(e.g. unfaithful, irrelevant, or incoherent), potentially leading to

substantial risks. Instead of fostering trust and safety, poorly designed

explanations can actually cause harm, including wrong decisions, privacy

violations, manipulation, and even reduced AI adoption. Therefore, we caution

stakeholders to beware of explanations of AI: while they can be vital, they are

not automatically a remedy for transparency or responsible AI adoption, and

their misuse or limitations can exacerbate harm. Attention to these caveats can

help guide future research to improve the quality and impact of AI

explanations.

Group-VI transition metal dichalcogenides (TMDs), MoS2 and MoSe2, have emerged as prototypical low-dimensional systems with distinctive phononic and electronic properties, making them attractive for applications in nanoelectronics, optoelectronics, and thermoelectrics. Yet, their reported lattice thermal conductivities (κ) remain highly inconsistent, with experimental values and theoretical predictions differing by more than an order of magnitude. These discrepancies stem from uncertainties in measurement techniques, variations in computational protocols, and ambiguities in the treatment of higher-order anharmonic processes. In this study, we critically review these inconsistencies, first by mapping the spread of experimental and modeling results, and then by identifying the methodological origins of divergence. To this end, we bridge first-principles calculations, molecular dynamics simulations, and state-of-the-art machine learning force fields (MLFFs) including recently developed foundation models. %MACE-OMAT-0, UMA, and NEP89. We train and benchmark GAP, MACE, NEP, and \textsc{HIPHIVE} against density functional theory (DFT) and rigorously evaluate the impact of third- and fourth-order phonon scattering processes on κ. The computational efficiency of MLFFs enables us to extend convergence tests beyond conventional limits and to validate predictions through homogeneous nonequilibrium molecular dynamics as well. Our analysis demonstrates that, contrary to some recent claims, fully converged four-phonon processes contribute negligibly to the intrinsic thermal conductivity of both MoS2 and MoSe2. These findings not only refine the intrinsic transport limits of 2D TMDs but also establish MLFF-based approaches as a robust and scalable framework for predictive modeling of phonon-mediated thermal transport in low-dimensional materials.

Generating continuous surfaces from discrete point cloud data is a fundamental task in several 3D vision applications. Real-world point clouds are inherently noisy due to various technical and environmental factors. Existing data-driven surface reconstruction algorithms rely heavily on ground truth normals or compute approximate normals as an intermediate step. This dependency makes them extremely unreliable for noisy point cloud datasets, even if the availability of ground truth training data is ensured, which is not always the case. B-spline reconstruction techniques provide compact surface representations of point clouds and are especially known for their smoothening properties. However, the complexity of the surfaces approximated using B-splines is directly influenced by the number and location of the spline control points. Existing spline-based modeling methods predict the locations of a fixed number of control points for a given point cloud, which makes it very difficult to match the complexity of its underlying surface. In this work, we develop a Dictionary-Guided Graph Convolutional Network-based surface reconstruction strategy where we simultaneously predict both the location and the number of control points for noisy point cloud data to generate smooth surfaces without the use of any point normals. We compare our reconstruction method with several well-known as well as recent baselines by employing widely-used evaluation metrics, and demonstrate that our method outperforms all of them both qualitatively and quantitatively.

18 Sep 2025

Cohen's and Fleiss' kappa are well-known measures of inter-rater agreement, but they restrict each rater to selecting only one category per subject. This limitation is consequential in contexts where subjects may belong to multiple categories, such as psychiatric diagnoses involving multiple disorders or classifying interview snippets into multiple codes of a codebook. We propose a generalized version of Fleiss' kappa, which accommodates multiple raters assigning subjects to one or more nominal categories. Our proposed κ statistic can incorporate category weights based on their importance and account for hierarchical category structures, such as primary disorders with sub-disorders. The new κ statistic can also manage missing data and variations in the number of raters per subject or category. We review existing methods that allow for multiple category assignments and detail the derivation of our measure, proving its equivalence to Fleiss' kappa when raters select a single category per subject. The paper discusses the assumptions, premises, and potential paradoxes of the new measure, as well as the range of possible values and guidelines for interpretation. The measure was developed to investigate the reliability of a new mathematics assessment method, of which an example is elaborated. The paper concludes with a worked-out example of psychiatrists diagnosing patients with multiple disorders. All calculations are provided as R script and an Excel sheet to facilitate access to the new κ tatistic.

There are no more papers matching your filters at the moment.