University of Glasgow

01 Feb 2024

California Institute of Technology

California Institute of Technology Northwestern UniversityLouisiana State University

Northwestern UniversityLouisiana State University University of Wisconsin-Madison

University of Wisconsin-Madison MITUniversity of GlasgowSyracuse UniversityThe University of ChicagoCalifornia State University, FullertonKavli Institute for Cosmological PhysicsChristopher Newport UniversityLIGO LaboratoryEnrico Fermi InstituteLIGO Hanford ObservatoryCenter for Interdisciplinary Exploration and Research in Astrophysics (CIERA)Sorbonne Paris Nord UniversityZooniverse, The Adler PlanetariumThe Nicholas and Lee Begovich Center for Gravitational-Wave Physics and AstronomyConSol Software GmbH

MITUniversity of GlasgowSyracuse UniversityThe University of ChicagoCalifornia State University, FullertonKavli Institute for Cosmological PhysicsChristopher Newport UniversityLIGO LaboratoryEnrico Fermi InstituteLIGO Hanford ObservatoryCenter for Interdisciplinary Exploration and Research in Astrophysics (CIERA)Sorbonne Paris Nord UniversityZooniverse, The Adler PlanetariumThe Nicholas and Lee Begovich Center for Gravitational-Wave Physics and AstronomyConSol Software GmbHThe Gravity Spy project aims to uncover the origins of glitches, transient

bursts of noise that hamper analysis of gravitational-wave data. By using both

the work of citizen-science volunteers and machine-learning algorithms, the

Gravity Spy project enables reliable classification of glitches. Citizen

science and machine learning are intrinsically coupled within the Gravity Spy

framework, with machine-learning classifications providing a rapid first-pass

classification of the dataset and enabling tiered volunteer training, and

volunteer-based classifications verifying the machine classifications,

bolstering the machine-learning training set and identifying new morphological

classes of glitches. These classifications are now routinely used in studies

characterizing the performance of the LIGO gravitational-wave detectors.

Providing the volunteers with a training framework that teaches them to

classify a wide range of glitches, as well as additional tools to aid their

investigations of interesting glitches, empowers them to make discoveries of

new classes of glitches. This demonstrates that, when giving suitable support,

volunteers can go beyond simple classification tasks to identify new features

in data at a level comparable to domain experts. The Gravity Spy project is now

providing volunteers with more complicated data that includes auxiliary

monitors of the detector to identify the root cause of glitches.

This study comprehensively assessed the extent to which individual biological characteristics can be predicted from multimodal neuroimaging data, utilizing an unprecedented scale of data and computational resources. It found a significant disparity in predictability, with constitutional traits (e.g., sex, age) being highly predictable, while complex psychological and many chronic disease characteristics remained largely unresolved by current neuroimaging paradigms.

As the gravitational wave detector network is upgraded and the sensitivity of the detectors improves, novel scientific avenues open for exploration. For example, tests of general relativity will become more accurate as smaller deviations can be probed. Additionally, the detection of lensed gravitational waves becomes more likely. However, these new avenues could also interact with each other, and a gravitational wave event presenting deviations from general relativity could be mistaken for a lensed one. Here, we explore how phenomenological deviations from general relativity or binaries of exotic compact objects could impact those lensing searches focusing on a single event. We consider strong lensing, millilensing, and microlensing and find that certain phenomenological deviations from general relativity may be mistaken for all of these types of lensing. Therefore, our study shows that future candidate lensing events would need to be carefully examined to avoid a false claim of lensing where instead a deviation from general relativity has been seen.

31 Aug 2025

This survey paper defines and systematically reviews the emerging paradigm of self-evolving AI agents, which bridge static foundation models with dynamic lifelong adaptability. It introduces a unified conceptual framework and a comprehensive taxonomy of evolution techniques, mapping the progression towards continuous self-improvement in AI systems.

The paper scrutinizes the long-standing belief in unbounded Large Language Model (LLM) scaling, establishing a proof-informed framework that identifies intrinsic theoretical limits on their capabilities. It synthesizes empirical failures like hallucination and reasoning degradation with foundational concepts from computability theory, information theory, and statistical learning, showing that these issues are inherent rather than transient engineering challenges.

23 Sep 2025

EvoAgentX introduces an automated open-source framework for generating, executing, and evolutionarily optimizing multi-agent workflows. It integrates diverse state-of-the-art optimization algorithms to refine agent prompts and workflow topologies, achieving substantial performance gains up to 10% on reasoning and code generation benchmarks, and up to 20% overall accuracy on the real-world GAIA multi-agent task.

Addressing the critical issue of medical hallucinations in radiology MLLMs, researchers at the University of Glasgow introduced Clinical Contrastive Decoding (CCD), an inference-time framework that leverages expert models to ensure image-grounded report generation. The method improved clinical fidelity substantially, yielding up to a 17.13% increase in RadGraph-F1 and a 67.60% increase in CheXbert-F1 (Top 5 pathologies) on the MIMIC-CXR dataset for report generation.

Libra is a novel multimodal large language model designed for radiology report generation that explicitly leverages temporal imaging information from prior studies. The model introduces a Temporal Alignment Connector to integrate high-granularity features and temporal differences, achieving new state-of-the-art results on the MIMIC-CXR dataset by generating more accurate and clinically relevant reports with reduced temporal hallucinations.

24 May 2025

The Self-Evolving Agentic Workflows (SEW) framework introduces an automated approach for generating and optimizing multi-agent systems for code generation, using evolutionary algorithms to adapt both workflow structures and agent prompts. This framework achieved a 33.9% performance improvement on the LiveCodeBench dataset with GPT-4o mini, reaching a pass@1 score of 50.9%.

Researchers from the University of Glasgow and University of Aberdeen systematically evaluated how different memory structures and retrieval methods influence LLM agent performance across various tasks. They found that a mixed memory approach consistently provided balanced performance and high robustness to noise, while iterative retrieval generally excelled for complex reasoning tasks.

Researchers introduced FOLLOWIR, a new benchmark and metric to evaluate how well information retrieval models adhere to complex, real-world instructions. They demonstrated that most existing models fail this task and successfully fine-tuned a 7B parameter model, FOLLOWIR-7B, which showed a 20.8% relative improvement in instruction following.

Video generation assessment is essential for ensuring that generative models

produce visually realistic, high-quality videos while aligning with human

expectations. Current video generation benchmarks fall into two main

categories: traditional benchmarks, which use metrics and embeddings to

evaluate generated video quality across multiple dimensions but often lack

alignment with human judgments; and large language model (LLM)-based

benchmarks, though capable of human-like reasoning, are constrained by a

limited understanding of video quality metrics and cross-modal consistency. To

address these challenges and establish a benchmark that better aligns with

human preferences, this paper introduces Video-Bench, a comprehensive benchmark

featuring a rich prompt suite and extensive evaluation dimensions. This

benchmark represents the first attempt to systematically leverage MLLMs across

all dimensions relevant to video generation assessment in generative models. By

incorporating few-shot scoring and chain-of-query techniques, Video-Bench

provides a structured, scalable approach to generated video evaluation.

Experiments on advanced models including Sora demonstrate that Video-Bench

achieves superior alignment with human preferences across all dimensions.

Moreover, in instances where our framework's assessments diverge from human

evaluations, it consistently offers more objective and accurate insights,

suggesting an even greater potential advantage over traditional human judgment.

20 Apr 2025

A comprehensive survey examines the integration of Multi-Agent Reinforcement Learning (MARL) with Large Language Models to develop meta-thinking capabilities, synthesizing approaches across reward mechanisms, self-play architectures, and meta-learning strategies while highlighting key challenges in scalability and stability for building more introspective AI systems.

We introduce the first continuous-time score-based generative model that leverages fractional diffusion processes for its underlying dynamics. Although diffusion models have excelled at capturing data distributions, they still suffer from various limitations such as slow convergence, mode-collapse on imbalanced data, and lack of diversity. These issues are partially linked to the use of light-tailed Brownian motion (BM) with independent increments. In this paper, we replace BM with an approximation of its non-Markovian counterpart, fractional Brownian motion (fBM), characterized by correlated increments and Hurst index H∈(0,1), where H=0.5 recovers the classical BM. To ensure tractable inference and learning, we employ a recently popularized Markov approximation of fBM (MA-fBM) and derive its reverse-time model, resulting in generative fractional diffusion models (GFDM). We characterize the forward dynamics using a continuous reparameterization trick and propose augmented score matching to efficiently learn the score function, which is partly known in closed form, at minimal added cost. The ability to drive our diffusion model via MA-fBM offers flexibility and control. H≤0.5 enters the regime of rough paths whereas H>0.5 regularizes diffusion paths and invokes long-term memory. The Markov approximation allows added control by varying the number of Markov processes linearly combined to approximate fBM. Our evaluations on real image datasets demonstrate that GFDM achieves greater pixel-wise diversity and enhanced image quality, as indicated by a lower FID, offering a promising alternative to traditional diffusion models

Researchers from L3S Research Center, TU Delft, and the University of Glasgow present a comprehensive survey classifying adaptive retrieval and ranking mechanisms that leverage test-time corpus feedback in Retrieval-Augmented Generation (RAG) systems. The work offers a structured overview of techniques that dynamically refine information retrieval during inference, moving beyond static retrieval to enhance RAG performance for complex tasks.

Geospatial Foundation Models (GFMs) have emerged as powerful tools for

extracting representations from Earth observation data, but their evaluation

remains inconsistent and narrow. Existing works often evaluate on suboptimal

downstream datasets and tasks, that are often too easy or too narrow, limiting

the usefulness of the evaluations to assess the real-world applicability of

GFMs. Additionally, there is a distinct lack of diversity in current evaluation

protocols, which fail to account for the multiplicity of image resolutions,

sensor types, and temporalities, which further complicates the assessment of

GFM performance. In particular, most existing benchmarks are geographically

biased towards North America and Europe, questioning the global applicability

of GFMs. To overcome these challenges, we introduce PANGAEA, a standardized

evaluation protocol that covers a diverse set of datasets, tasks, resolutions,

sensor modalities, and temporalities. It establishes a robust and widely

applicable benchmark for GFMs. We evaluate the most popular GFMs openly

available on this benchmark and analyze their performance across several

domains. In particular, we compare these models to supervised baselines (e.g.

UNet and vanilla ViT), and assess their effectiveness when faced with limited

labeled data. Our findings highlight the limitations of GFMs, under different

scenarios, showing that they do not consistently outperform supervised models.

PANGAEA is designed to be highly extensible, allowing for the seamless

inclusion of new datasets, models, and tasks in future research. By releasing

the evaluation code and benchmark, we aim to enable other researchers to

replicate our experiments and build upon our work, fostering a more principled

evaluation protocol for large pre-trained geospatial models. The code is

available at this https URL

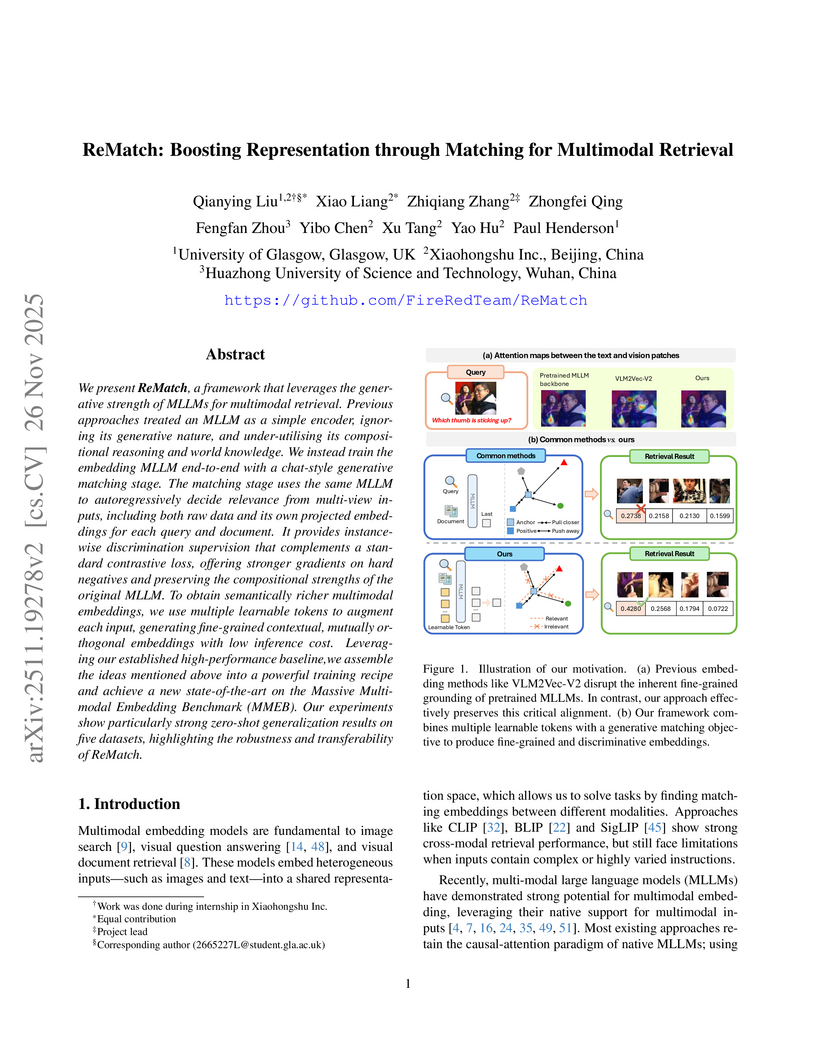

ReMatch enhances multimodal retrieval by unifying generative and discriminative representations within Multimodal Large Language Models. This framework achieves state-of-the-art performance on the MMEB benchmark, showing up to a 27.3% gain on compositional retrieval tasks while preserving the MLLM's generative capabilities.

We introduce RadEval, a unified, open-source framework for evaluating radiology texts. RadEval consolidates a diverse range of metrics, from classic n-gram overlap (BLEU, ROUGE) and contextual measures (BERTScore) to clinical concept-based scores (F1CheXbert, F1RadGraph, RaTEScore, SRR-BERT, TemporalEntityF1) and advanced LLM-based evaluators (GREEN). We refine and standardize implementations, extend GREEN to support multiple imaging modalities with a more lightweight model, and pretrain a domain-specific radiology encoder, demonstrating strong zero-shot retrieval performance. We also release a richly annotated expert dataset with over 450 clinically significant error labels and show how different metrics correlate with radiologist judgment. Finally, RadEval provides statistical testing tools and baseline model evaluations across multiple publicly available datasets, facilitating reproducibility and robust benchmarking in radiology report generation.

Open-Vocabulary semantic segmentation (OVSS) and domain generalization in semantic segmentation (DGSS) highlight a subtle complementarity that motivates Open-Vocabulary Domain-Generalized Semantic Segmentation (OV-DGSS). OV-DGSS aims to generate pixel-level masks for unseen categories while maintaining robustness across unseen domains, a critical capability for real-world scenarios such as autonomous driving in adverse conditions. We introduce Vireo, a novel single-stage framework for OV-DGSS that unifies the strengths of OVSS and DGSS for the first time. Vireo builds upon the frozen Visual Foundation Models (VFMs) and incorporates scene geometry via Depth VFMs to extract domain-invariant structural features. To bridge the gap between visual and textual modalities under domain shift, we propose three key components: (1) GeoText Prompts, which align geometric features with language cues and progressively refine VFM encoder representations; (2) Coarse Mask Prior Embedding (CMPE) for enhancing gradient flow for faster convergence and stronger textual influence; and (3) the Domain-Open-Vocabulary Vector Embedding Head (DOV-VEH), which fuses refined structural and semantic features for robust prediction. Comprehensive evaluation on these components demonstrates the effectiveness of our designs. Our proposed Vireo achieves the state-of-the-art performance and surpasses existing methods by a large margin in both domain generalization and open-vocabulary recognition, offering a unified and scalable solution for robust visual understanding in diverse and dynamic environments. Code is available at this https URL.

WorkBench, a new benchmark dataset from Mindsdb and universities including Glasgow and Warwick, evaluates autonomous AI agents in realistic workplace settings. It introduces an "outcome-centric evaluation" methodology, which revealed that even state-of-the-art models like GPT-4 achieve only 43% accuracy on complex tasks and frequently produce undesirable "side effects" in the simulated environment.

There are no more papers matching your filters at the moment.