University of Maine

ProtoViT, developed by researchers from Dartmouth College, Duke University, and the University of Maine, integrates Vision Transformers with prototype-based reasoning to create an interpretable image classification model. It achieves superior classification accuracy while providing transparent, human-understandable explanations for its decisions by showing which parts of an input image are similar to learned prototypes.

Cross-view geo-localization has garnered notable attention in the realm of computer vision, spurred by the widespread availability of copious geotagged datasets and the advancements in machine learning techniques. This paper provides a thorough survey of cutting-edge methodologies, techniques, and associated challenges that are integral to this domain, with a focus on feature-based and deep learning strategies. Feature-based methods capitalize on unique features to establish correspondences across disparate viewpoints, whereas deep learning-based methodologies deploy convolutional neural networks to embed view-invariant attributes. This work also delineates the multifaceted challenges encountered in cross-view geo-localization, such as variations in viewpoints and illumination, the occurrence of occlusions, and it elucidates innovative solutions that have been formulated to tackle these issues. Furthermore, we delineate benchmark datasets and relevant evaluation metrics, and also perform a comparative analysis of state-of-the-art techniques. Finally, we conclude the paper with a discussion on prospective avenues for future research and the burgeoning applications of cross-view geo-localization in an intricately interconnected global landscape.

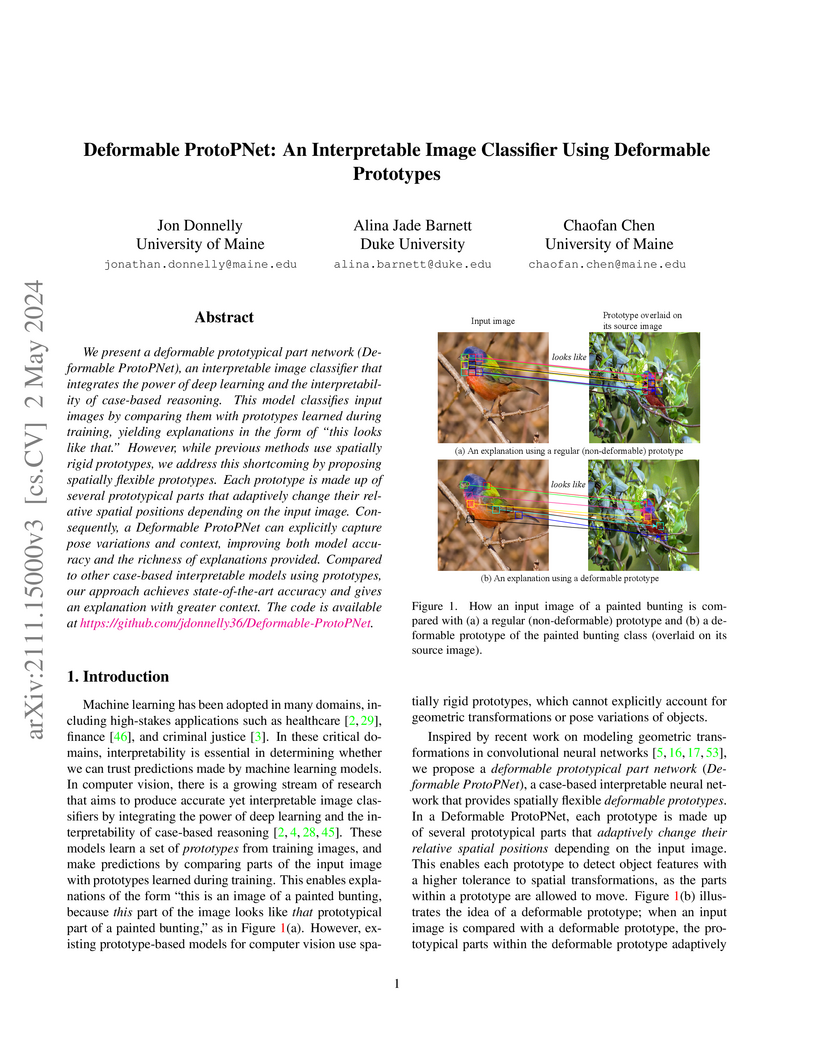

Deformable ProtoPNet introduces an interpretable image classifier that uses spatially flexible prototypes to enhance explanations and achieves competitive accuracy on fine-grained recognition tasks. The model integrates deformable convolutions, a novel L2 norm-preserving interpolation, and angular margin losses to adapt prototypes to diverse object poses and contexts.

We study image segmentation in the biological domain, particularly trait segmentation from specimen images (e.g., butterfly wing stripes, beetle elytra). This fine-grained task is crucial for understanding the biology of organisms, but it traditionally requires manually annotating segmentation masks for hundreds of images per species, making it highly labor-intensive. To address this challenge, we propose a label-efficient approach, Static Segmentation by Tracking (SST), based on a key insight: while specimens of the same species exhibit natural variation, the traits of interest show up consistently. This motivates us to concatenate specimen images into a ``pseudo-video'' and reframe trait segmentation as a tracking problem. Specifically, SST generates masks for unlabeled images by propagating annotated or predicted masks from the ``pseudo-preceding'' images. Built upon recent video segmentation models, such as Segment Anything Model 2, SST achieves high-quality trait segmentation with only one labeled image per species, marking a breakthrough in specimen image analysis. To further enhance segmentation quality, we introduce a cycle-consistent loss for fine-tuning, again requiring only one labeled image. Additionally, we demonstrate the broader potential of SST, including one-shot instance segmentation in natural images and trait-based image retrieval.

Numerous techniques have been proposed for generating adversarial examples in white-box settings under strict Lp-norm constraints. However, such norm-bounded examples often fail to align well with human perception, and only recently have a few methods begun specifically exploring perceptually aligned adversarial examples. Moreover, it remains unclear whether insights from Lp-constrained attacks can be effectively leveraged to improve perceptual efficacy. In this paper, we introduce DAASH, a fully differentiable meta-attack framework that generates effective and perceptually aligned adversarial examples by strategically composing existing Lp-based attack methods. DAASH operates in a multi-stage fashion: at each stage, it aggregates candidate adversarial examples from multiple base attacks using learned, adaptive weights and propagates the result to the next stage. A novel meta-loss function guides this process by jointly minimizing misclassification loss and perceptual distortion, enabling the framework to dynamically modulate the contribution of each base attack throughout the stages. We evaluate DAASH on adversarially trained models across CIFAR-10, CIFAR-100, and ImageNet. Despite relying solely on Lp-constrained based methods, DAASH significantly outperforms state-of-the-art perceptual attacks such as AdvAD -- achieving higher attack success rates (e.g., 20.63\% improvement) and superior visual quality, as measured by SSIM, LPIPS, and FID (improvements ≈ of 11, 0.015, and 5.7, respectively). Furthermore, DAASH generalizes well to unseen defenses, making it a practical and strong baseline for evaluating robustness without requiring handcrafted adaptive attacks for each new defense.

Magnetically controlled states in quantum materials are central to their unique electronic and magnetic properties. However, direct momentum-resolved visualization of these states via angle-resolved photoemission spectroscopy (ARPES) has been hindered by the disruptive effect of magnetic fields on photoelectron trajectories. Here, we introduce an \textit{in-situ} method that is, in principle, capable of applying magnetic fields up to 1 T. This method uses substrates composed of nanomagnetic metamaterial arrays with alternating polarity. Such substrates can generate strong, homogeneous, and spatially confined fields applicable to samples with thicknesses up to the micron scale, enabling ARPES measurements under magnetic fields with minimal photoelectron trajectory distortion. We demonstrate this minimal distortion with ARPES data taken on monolayer graphene. Our method paves the way for probing magnetic field-dependent electronic structures and studying field-tunable quantum phases with state-of-the-art energy-momentum resolutions.

Artificial Intelligence (AI) agents capable of autonomous learning and independent decision-making hold great promise for addressing complex challenges across various critical infrastructure domains, including transportation, energy systems, and manufacturing. However, the surge in the design and deployment of AI systems, driven by various stakeholders with distinct and unaligned objectives, introduces a crucial challenge: How can uncoordinated AI systems coexist and evolve harmoniously in shared environments without creating chaos or compromising safety? To address this, we advocate for a fundamental rethinking of existing multi-agent frameworks, such as multi-agent systems and game theory, which are largely limited to predefined rules and static objective structures. We posit that AI agents should be empowered to adjust their objectives dynamically, make compromises, form coalitions, and safely compete or cooperate through evolving relationships and social feedback. Through two case studies in critical infrastructure applications, we call for a shift toward the emergent, self-organizing, and context-aware nature of these multi-agentic AI systems.

The paper "Weather-Aware Object Detection Transformer for Domain Adaptation" introduces three distinct approaches to enhance the robustness of RT-DETR models in foggy conditions. The proposed perceptual loss-based adaptation (PL-RT-DETR) showed consistent, albeit modest, performance improvements on foggy datasets compared to baseline RT-DETR and YOLOv8.

Understanding a plant's root system architecture (RSA) is crucial for a variety of plant science problem domains including sustainability and climate adaptation. Minirhizotron (MR) technology is a widely-used approach for phenotyping RSA non-destructively by capturing root imagery over time. Precisely segmenting roots from the soil in MR imagery is a critical step in studying RSA features. In this paper, we introduce a large-scale dataset of plant root images captured by MR technology. In total, there are over 72K RGB root images across six different species including cotton, papaya, peanut, sesame, sunflower, and switchgrass in the dataset. The images span a variety of conditions including varied root age, root structures, soil types, and depths under the soil surface. All of the images have been annotated with weak image-level labels indicating whether each image contains roots or not. The image-level labels can be used to support weakly supervised learning in plant root segmentation tasks. In addition, 63K images have been manually annotated to generate pixel-level binary masks indicating whether each pixel corresponds to root or not. These pixel-level binary masks can be used as ground truth for supervised learning in semantic segmentation tasks. By introducing this dataset, we aim to facilitate the automatic segmentation of roots and the research of RSA with deep learning and other image analysis algorithms.

27 Sep 2023

Privacy policies outline the data practices of Online Social Networks (OSN)

to comply with privacy regulations such as the EU-GDPR and CCPA. Several

ontologies for modeling privacy regulations, policies, and compliance have

emerged in recent years. However, they are limited in various ways: (1) they

specifically model what is required of privacy policies according to one

specific privacy regulation such as GDPR; (2) they provide taxonomies of

concepts but are not sufficiently axiomatized to afford automated reasoning

with them; and (3) they do not model data practices of privacy policies in

sufficient detail to allow assessing the transparency of policies. This paper

presents an OWL Ontology for Privacy Policies of OSNs, OPPO, that aims to fill

these gaps by formalizing detailed data practices from OSNS' privacy policies.

OPPO is grounded in BFO, IAO, OMRSE, and OBI, and its design is guided by the

use case of representing and reasoning over the content of OSNs' privacy

policies and evaluating policies' transparency in greater detail.

10 Aug 2024

Detecting falls among the elderly and alerting their community responders can save countless lives. We design and develop a low-cost mobile robot that periodically searches the house for the person being monitored and sends an email to a set of designated responders if a fall is detected. In this project, we make three novel design decisions and contributions. First, our custom-designed low-cost robot has advanced features like omnidirectional wheels, the ability to run deep learning models, and autonomous wireless charging. Second, we improve the accuracy of fall detection for the YOLOv8-Pose-nano object detection network by 6% and YOLOv8-Pose-large by 12%. We do so by transforming the images captured from the robot viewpoint (camera height 0.15m from the ground) to a typical human viewpoint (1.5m above the ground) using a principally computed Homography matrix. This improves network accuracy because the training dataset MS-COCO on which YOLOv8-Pose is trained is captured from a human-height viewpoint. Lastly, we improve the robot controller by learning a model that predicts the robot velocity from the input signal to the motor controller.

Despite the impressive performance of deep neural networks (DNNs), their

computational complexity and storage space consumption have led to the concept

of network compression. While DNN compression techniques such as pruning and

low-rank decomposition have been extensively studied, there has been

insufficient attention paid to their theoretical explanation. In this paper, we

propose a novel theoretical framework that leverages a probabilistic latent

space of DNN weights and explains the optimal network sparsity by using the

information-theoretic divergence measures. We introduce new analogous projected

patterns (AP2) and analogous-in-probability projected patterns (AP3) notions

for DNNs and prove that there exists a relationship between AP3/AP2 property of

layers in the network and its performance. Further, we provide a theoretical

analysis that explains the training process of the compressed network. The

theoretical results are empirically validated through experiments conducted on

standard pre-trained benchmarks, including AlexNet, ResNet50, and VGG16, using

CIFAR10 and CIFAR100 datasets. Through our experiments, we highlight the

relationship of AP3 and AP2 properties with fine-tuning pruned DNNs and

sparsity levels.

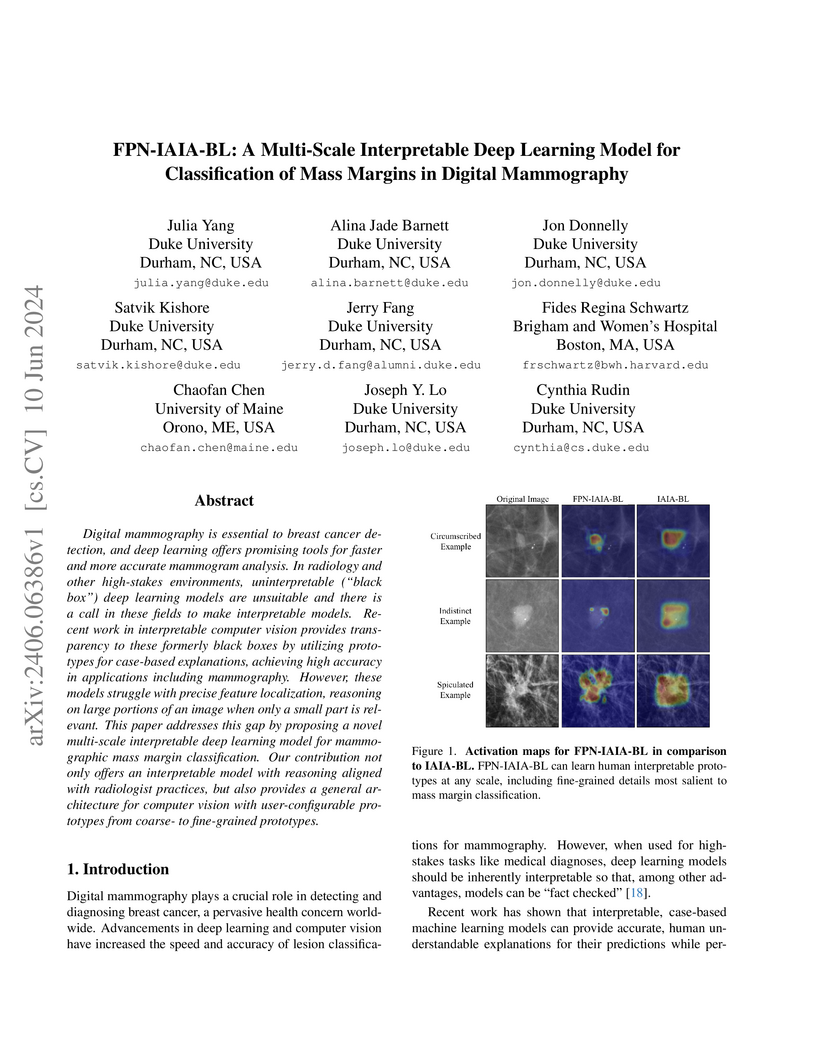

Digital mammography is essential to breast cancer detection, and deep

learning offers promising tools for faster and more accurate mammogram

analysis. In radiology and other high-stakes environments, uninterpretable

("black box") deep learning models are unsuitable and there is a call in these

fields to make interpretable models. Recent work in interpretable computer

vision provides transparency to these formerly black boxes by utilizing

prototypes for case-based explanations, achieving high accuracy in applications

including mammography. However, these models struggle with precise feature

localization, reasoning on large portions of an image when only a small part is

relevant. This paper addresses this gap by proposing a novel multi-scale

interpretable deep learning model for mammographic mass margin classification.

Our contribution not only offers an interpretable model with reasoning aligned

with radiologist practices, but also provides a general architecture for

computer vision with user-configurable prototypes from coarse- to fine-grained

prototypes.

29 Jul 2025

Microelectronic systems are widely used in many sensitive applications (e.g., manufacturing, energy, defense). These systems increasingly handle sensitive data (e.g., encryption key) and are vulnerable to diverse threats, such as, power side-channel attacks, which infer sensitive data through dynamic power profile. In this paper, we present a novel framework, POLARIS for mitigating power side channel leakage using an Explainable Artificial Intelligence (XAI) guided masking approach. POLARIS uses an unsupervised process to automatically build a tailored training dataset and utilize it to train a masking this http URL POLARIS framework outperforms state-of-the-art mitigation solutions (e.g., VALIANT) in terms of leakage reduction, execution time, and overhead across large designs.

Graph neural networks (GNNs) have attracted significant attention for their

outstanding performance in graph learning and node classification tasks.

However, their vulnerability to adversarial attacks, particularly through

susceptible nodes, poses a challenge in decision-making. The need for robust

graph summarization is evident in adversarial challenges resulting from the

propagation of attacks throughout the entire graph. In this paper, we address

both performance and adversarial robustness in graph input by introducing the

novel technique SHERD (Subgraph Learning Hale through Early Training

Representation Distances). SHERD leverages information from layers of a

partially trained graph convolutional network (GCN) to detect susceptible nodes

during adversarial attacks using standard distance metrics. The method

identifies "vulnerable (bad)" nodes and removes such nodes to form a robust

subgraph while maintaining node classification performance. Through our

experiments, we demonstrate the increased performance of SHERD in enhancing

robustness by comparing the network's performance on original and subgraph

inputs against various baselines alongside existing adversarial attacks. Our

experiments across multiple datasets, including citation datasets such as Cora,

Citeseer, and Pubmed, as well as microanatomical tissue structures of cell

graphs in the placenta, highlight that SHERD not only achieves substantial

improvement in robust performance but also outperforms several baselines in

terms of node classification accuracy and computational complexity.

13 Dec 2023

The complex interplay between human societies and their environments has long

been a subject of fascination for social scientists. Utilizing agent-based

modeling, this study delves into the profound implications of seemingly

inconsequential variations in social dynamics. Focusing on the nexus between

food distribution, residential patterns, and population dynamics, our research

provides an analysis of the long-term implications of minute societal changes

over the span of 300 years.

Through a meticulous exploration of two scenarios, we uncover the profound

impact of the butterfly effect on the evolution of human societies, revealing

the fact that even the slightest perturbations in the distribution of resources

can catalyze monumental shifts in residential patterns and population

trajectories. This research sheds light on the inherent complexity of social

systems and underscores the sensitivity of these systems to subtle changes,

emphasizing the unpredictable nature of long-term societal trajectories. The

implications of our findings extend far beyond the realm of social science,

carrying profound significance for policy-making, sustainable development, and

the preservation of societal equilibrium in an ever-changing world.

In this paper, we present FogGuard, a novel fog-aware object detection network designed to address the challenges posed by foggy weather conditions. Autonomous driving systems heavily rely on accurate object detection algorithms, but adverse weather conditions can significantly impact the reliability of deep neural networks (DNNs).

Existing approaches include image enhancement techniques like IA-YOLO and domain adaptation methods. While image enhancement aims to generate clear images from foggy ones, which is more challenging than object detection in foggy images, domain adaptation does not require labeled data in the target domain. Our approach involves fine-tuning on a specific dataset to address these challenges efficiently.

FogGuard compensates for foggy conditions in the scene, ensuring robust performance by incorporating YOLOv3 as the baseline algorithm and introducing a unique Teacher-Student Perceptual loss for accurate object detection in foggy environments. Through comprehensive evaluations on standard datasets like PASCAL VOC and RTTS, our network significantly improves performance, achieving a 69.43\% mAP compared to YOLOv3's 57.78\% on the RTTS dataset. Additionally, we demonstrate that while our training method slightly increases time complexity, it doesn't add overhead during inference compared to the regular YOLO network.

Artificial intelligence (AI) is widely used in various fields including healthcare, autonomous vehicles, robotics, traffic monitoring, and agriculture. Many modern AI applications in these fields are multi-tasking in nature (i.e. perform multiple analysis on same data) and are deployed on resource-constrained edge devices requiring the AI models to be efficient across different metrics such as power, frame rate, and size. For these specific use-cases, in this work, we propose a new paradigm of neural network architecture (ILASH) that leverages a layer sharing concept for minimizing power utilization, increasing frame rate, and reducing model size. Additionally, we propose a novel neural network architecture search framework (ILASH-NAS) for efficient construction of these neural network models for a given set of tasks and device constraints. The proposed NAS framework utilizes a data-driven intelligent approach to make the search efficient in terms of energy, time, and CO2 emission. We perform extensive evaluations of the proposed layer shared architecture paradigm (ILASH) and the ILASH-NAS framework using four open-source datasets (UTKFace, MTFL, CelebA, and Taskonomy). We compare ILASH-NAS with AutoKeras and observe significant improvement in terms of both the generated model performance and neural search efficiency with up to 16x less energy utilization, CO2 emission, and training/search time.

In this paper, we document a novel machine learning based bottom-up approach for static and dynamic portfolio optimization on, potentially, a large number of assets. The methodology applies to general constrained optimization problems and overcomes many major difficulties arising in current optimization schemes. Taking mean-variance optimization as an example, we no longer need to compute the covariance matrix and its inverse, therefore the method is immune from the estimation error on this quantity. Moreover, no explicit calls of optimization routines are needed. Applications to equity portfolio management in U.S. and China equity markets are studied and we document significant excess returns to the selected benchmarks.

19 Feb 2025

Hardware Trojans are malicious modifications in digital designs that can be

inserted by untrusted supply chain entities. Hardware Trojans can give rise to

diverse attack vectors such as information leakage (e.g. MOLES Trojan) and

denial-of-service (rarely triggered bit flip). Such an attack in critical

systems (e.g. healthcare and aviation) can endanger human lives and lead to

catastrophic financial loss. Several techniques have been developed to detect

such malicious modifications in digital designs, particularly for designs

sourced from third-party intellectual property (IP) vendors. However, most

techniques have scalability concerns (due to unsound assumptions during

evaluation) and lead to large number of false positive detections (false

alerts). Our framework (SALTY) mitigates these concerns through the use of a

novel Graph Neural Network architecture (using Jumping-Knowledge mechanism) for

generating initial predictions and an Explainable Artificial Intelligence (XAI)

approach for fine tuning the outcomes (post-processing). Experiments show 98%

True Positive Rate (TPR) and True Negative Rate (TNR), significantly

outperforming state-of-the-art techniques across a large set of standard

benchmarks.

There are no more papers matching your filters at the moment.