University of Rennes

The explosion of data volumes generated by an increasing number of

applications is strongly impacting the evolution of distributed digital

infrastructures for data analytics and machine learning (ML). While data

analytics used to be mainly performed on cloud infrastructures, the rapid

development of IoT infrastructures and the requirements for low-latency, secure

processing has motivated the development of edge analytics. Today, to balance

various trade-offs, ML-based analytics tends to increasingly leverage an

interconnected ecosystem that allows complex applications to be executed on

hybrid infrastructures where IoT Edge devices are interconnected to Cloud/HPC

systems in what is called the Computing Continuum, the Digital Continuum, or

the Transcontinuum.Enabling learning-based analytics on such complex

infrastructures is challenging. The large scale and optimized deployment of

learning-based workflows across the Edge-to-Cloud Continuum requires extensive

and reproducible experimental analysis of the application execution on

representative testbeds. This is necessary to help understand the performance

trade-offs that result from combining a variety of learning paradigms and

supportive frameworks. A thorough experimental analysis requires the assessment

of the impact of multiple factors, such as: model accuracy, training time,

network overhead, energy consumption, processing latency, among others.This

review aims at providing a comprehensive vision of the main state-of-the-art

libraries and frameworks for machine learning and data analytics available

today. It describes the main learning paradigms enabling learning-based

analytics on the Edge-to-Cloud Continuum. The main simulation, emulation,

deployment systems, and testbeds for experimental research on the Edge-to-Cloud

Continuum available today are also surveyed. Furthermore, we analyze how the

selected systems provide support for experiment reproducibility. We conclude

our review with a detailed discussion of relevant open research challenges and

of future directions in this domain such as: holistic understanding of

performance; performance optimization of applications;efficient deployment of

Artificial Intelligence (AI) workflows on highly heterogeneous infrastructures;

and reproducible analysis of experiments on the Computing Continuum.

Large Language Models (LLMs) are nowadays prompted for a wide variety of

tasks. In this article, we investigate their ability in reciting and generating

graphs. We first study the ability of LLMs to regurgitate well known graphs

from the literature (e.g. Karate club or the graph atlas)4. Secondly, we

question the generative capabilities of LLMs by asking for Erdos-Renyi random

graphs. As opposed to the possibility that they could memorize some Erdos-Renyi

graphs included in their scraped training set, this second investigation aims

at studying a possible emergent property of LLMs. For both tasks, we propose a

metric to assess their errors with the lens of hallucination (i.e. incorrect

information returned as facts). We most notably find that the amplitude of

graph hallucinations can characterize the superiority of some LLMs. Indeed, for

the recitation task, we observe that graph hallucinations correlate with the

Hallucination Leaderboard, a hallucination rank that leverages 10, 000 times

more prompts to obtain its ranking. For the generation task, we find

surprisingly good and reproducible results in most of LLMs. We believe this to

constitute a starting point for more in-depth studies of this emergent

capability and a challenging benchmark for their improvements. Altogether,

these two aspects of LLMs capabilities bridge a gap between the network science

and machine learning communities.

This perspective offers a viewpoint on how the challenges of molecular scattering investigations of astrophysical interest have evolved in recent years. Computational progress has steadily expanded collisional databases and provided essential tools for modeling non-LTE astronomical regions. However, the observational leap enabled by the JWST and new observational facilities has revealed critical gaps in these databases. In this framework, two major frontiers emerge: the characterization of collisional processes involving heavy projectiles, and the treatment of ro-vibrational excitation. The significant computational effort of these investigations emphasizes the need to test and develop robust theoretical methods and approximations, capable of extending the census of collisional coefficients required for reliable astrophysical modeling. Recent developments in these directions are outlined, with particular attention to their application and their potential to broaden the coverage of molecular systems and physical environments.

Deep learning (DL) has shown great success in many human-related tasks, which

has led to its adoption in many computer vision based applications, such as

security surveillance systems, autonomous vehicles and healthcare. Such

safety-critical applications have to draw their path to success deployment once

they have the capability to overcome safety-critical challenges. Among these

challenges are the defense against or/and the detection of the adversarial

examples (AEs). Adversaries can carefully craft small, often imperceptible,

noise called perturbations to be added to the clean image to generate the AE.

The aim of AE is to fool the DL model which makes it a potential risk for DL

applications. Many test-time evasion attacks and countermeasures,i.e., defense

or detection methods, are proposed in the literature. Moreover, few reviews and

surveys were published and theoretically showed the taxonomy of the threats and

the countermeasure methods with little focus in AE detection methods. In this

paper, we focus on image classification task and attempt to provide a survey

for detection methods of test-time evasion attacks on neural network

classifiers. A detailed discussion for such methods is provided with

experimental results for eight state-of-the-art detectors under different

scenarios on four datasets. We also provide potential challenges and future

perspectives for this research direction.

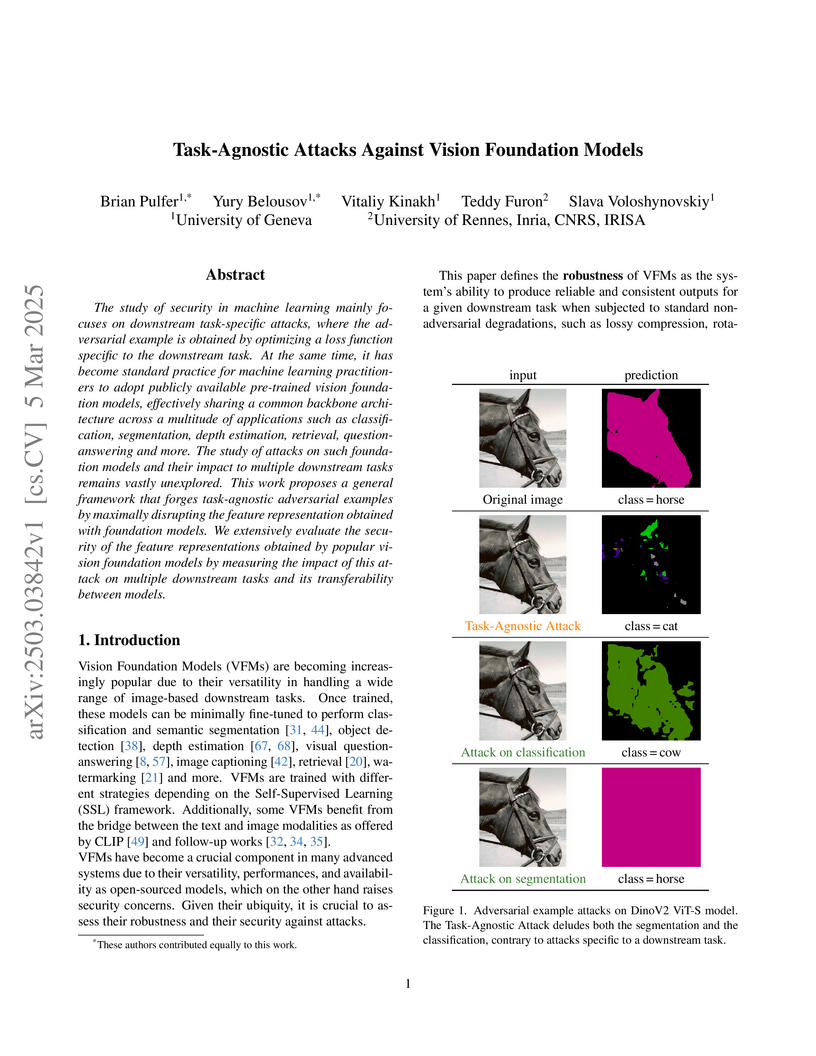

Researchers developed a framework for task-agnostic adversarial attacks against Vision Foundation Models (VFMs), which directly disrupt their learned feature representations. These attacks, even with imperceptible perturbations, successfully compromise performance across multiple downstream computer vision tasks simultaneously, including classification, segmentation, and image retrieval.

24 Sep 2025

Interfacial interactions significantly alter the fundamental properties of water confined in mesoporous structures, with crucial implications for geological, physicochemical, and biological processes. Herein, we focused on the effect of changing the surface ionic charge of nanopores with comparable pore size (3.5-3.8 nm) on the dynamics of confined liquid water. The control of the pore surface ionicity was achieved by using two periodic mesoporous organosilicas (PMOs) containing either neutral or charged forms of a chemically similar bridging unit. The effect on the dynamics of water at the nanoscale was investigated in the temperature range of 245 -300 K, encompassing the glass transition by incoherent quasielastic neutron scattering (QENS), For both types of PMOs, the water dynamics revealed two distinct types of molecular motions: rapid local movements and translational jump diffusion. While the neutral PMO induces a moderate confinement effect, we show that the charged PMO drastically slows down water dynamics, reducing translational diffusion by a factor of four and increasing residence time by an order of magnitude. Notably, by changing the pore filling values, we demonstrate that for charged pore this effect extends beyond the interfacial layer of surface-bound water molecules to encompass the entire pore volume. Thus, our observation indicates a dramatic change in the long-range character of the interaction of water confined in nanopores with surface ionic charge compared to a simple change in hydrophilicity. This is relevant for the understanding of a broad variety of applications in (nano)technological phenomena and processes, such as nanofiltration and membrane design.

26 Aug 2024

Modern software-based systems operate under rapidly changing conditions and

face ever-increasing uncertainty. In response, systems are increasingly

adaptive and reliant on artificial-intelligence methods. In addition to the

ubiquity of software with respect to users and application areas (e.g.,

transportation, smart grids, medicine, etc.), these high-impact software

systems necessarily draw from many disciplines for foundational principles,

domain expertise, and workflows. Recent progress with lowering the barrier to

entry for coding has led to a broader community of developers, who are not

necessarily software engineers. As such, the field of software engineering

needs to adapt accordingly and offer new methods to systematically develop

high-quality software systems by a broad range of experts and non-experts. This

paper looks at these new challenges and proposes to address them through the

lens of Abstraction. Abstraction is already used across many disciplines

involved in software development -- from the time-honored classical deductive

reasoning and formal modeling to the inductive reasoning employed by modern

data science. The software engineering of the future requires Abstraction

Engineering -- a systematic approach to abstraction across the inductive and

deductive spaces. We discuss the foundations of Abstraction Engineering,

identify key challenges, highlight the research questions that help address

these challenges, and create a roadmap for future research.

24 Jun 2025

The Ethereum Virtual Machine (EVM) is a decentralized computing engine. It enables the Ethereum blockchain to execute smart contracts and decentralized applications (dApps). The increasing adoption of Ethereum sparked the rise of phishing activities. Phishing attacks often target users through deceptive means, e.g., fake websites, wallet scams, or malicious smart contracts, aiming to steal sensitive information or funds. A timely detection of phishing activities in the EVM is therefore crucial to preserve the user trust and network integrity. Some state-of-the art approaches to phishing detection in smart contracts rely on the online analysis of transactions and their traces. However, replaying transactions often exposes sensitive user data and interactions, with several security concerns. In this work, we present PhishingHook, a framework that applies machine learning techniques to detect phishing activities in smart contracts by directly analyzing the contract's bytecode and its constituent opcodes. We evaluate the efficacy of such techniques in identifying malicious patterns, suspicious function calls, or anomalous behaviors within the contract's code itself before it is deployed or interacted with. We experimentally compare 16 techniques, belonging to four main categories (Histogram Similarity Classifiers, Vision Models, Language Models and Vulnerability Detection Models), using 7,000 real-world malware smart contracts. Our results demonstrate the efficiency of PhishingHook in performing phishing classification systems, with about 90% average accuracy among all the models. We support experimental reproducibility, and we release our code and datasets to the research community.

14 Oct 2025

Thermal phonons are a major source of decoherence in quantum mechanical systems. Operating in the quantum ground state is therefore often an experimental prerequisite. Additionally to passive cooling in a cryogenic environment, active laser cooling enables the reduction of phonons at specific acoustic frequencies. Brillouin cooling has been used to show efficient reduction of the thermal phonon population in waveguides at GHz frequencies down to 74 K. In this letter, we demonstrate cooling of a 7.608 GHz acoustic mode by combining Brillouin active cooling with precooling from 77 K using liquid nitrogen. We show a 69 % reduction in the phonon population, resulting in a final temperature of 24.3 K, 50 K lower than previously reported.

02 Sep 2025

We discuss the Witsenhausen counterexample from the perspective of varying power budgets and propose a low-power estimation (LoPE) strategy. Specifically, our LoPE approach designs the first decision-maker (DM) a quantization step function of the Gaussian source state, making the target system state a piecewise linear function of the source with slope one. This approach contrasts with Witsenhausen's original two-point strategy, which instead designs the system state itself to be a binary step. While the two-point strategy can outperform the linear strategy in estimation cost, it, along with its multi-step extensions, typically requires a substantial power budget. Analogous to Binary Phase Shift Keying (BPSK) communication for Gaussian channels, we show that the binary LoPE strategy attains first-order optimality in the low-power regime, matching the performance of the linear strategy as the power budget increases from zero. Our analysis also provides an interpretation of the previously observed near-optimal sloped step function ("sawtooth") structure to the Witsenhausen counterexample: In the low-power regime, power saving is prioritized, in which case the LoPE strategy dominates, making the system state a piecewise linear function with slope close to one. Conversely, in the high-power regime, setting the system state as a step function with the slope approaching zero facilitates accurate estimation. Hence, the sawtooth solution can be seen as a combination of both strategies.

Floods are among the most common and devastating natural hazards, imposing immense costs on our society and economy due to their disastrous consequences. Recent progress in weather prediction and spaceborne flood mapping demonstrated the feasibility of anticipating extreme events and reliably detecting their catastrophic effects afterwards. However, these efforts are rarely linked to one another and there is a critical lack of datasets and benchmarks to enable the direct forecasting of flood extent. To resolve this issue, we curate a novel dataset enabling a timely prediction of flood extent. Furthermore, we provide a representative evaluation of state-of-the-art methods, structured into two benchmark tracks for forecasting flood inundation maps i) in general and ii) focused on coastal regions. Altogether, our dataset and benchmark provide a comprehensive platform for evaluating flood forecasts, enabling future solutions for this critical challenge. Data, code & models are shared at this https URL under a CC0 license.

12 Sep 2025

We investigate the self-locking of the bowline knot through numerical simulations, experiments, and theoretical analysis. Specifically, we perform two complementary types of simulations using the 3D finite-element method (FEM) and a reduced-order model based on the discrete-element method (DEM). For the FEM simulations, we develop a novel mapping technique that automatically transforms the centerline of the rod into the required knot topology prior to loading. In parallel, we conduct experiments using a nearly inextensible elastic rod tied into a bowline around a rigid cylinder. One end of the rod is pulled to load the knot while the other is left free. The measured force-displacement response serves to validate both the FEM and DEM simulations. Leveraging these validated computational frameworks, we analyze the internal tension profile along the rod's centerline, revealing that a sharp drop in tension concentrates around a strategic locking region, whose geometry resembles that observed in other knot types. By considering the coupling of tension, bending, and friction, we formulate a theoretical model inspired by the classic capstan problem to predict the stability conditions of the bowline, finding excellent agreement with our FEM and DEM simulations. Our methodology and findings offer new tools and insights for future studies on the performance and reliability of other complex knots.

We study mean-field particle approximations of normalized Feynman-Kac semi-groups, usually called Fleming-Viot or Feynman-Kac particle systems. Assuming various large time stability properties of the semi-group uniformly in the initial condition, we provide explicit time-uniform Lp and exponential bounds (a new result) with the expected rate in terms of sample size. This work is based on a stochastic backward error analysis (similar to the classical concept of numerical analysis) of the measure-valued Markov particle estimator, an approach that simplifies methods previously used for time-uniform Lp estimates.

27 Feb 2025

PolyDebug is a framework that provides seamless debugging across multiple programming languages by coordinating existing language-specific debuggers via the Debug Adapter Protocol. The prototype demonstrates a reduction in engineering effort for polyglot debugging while enabling features like cross-language breakpoints and variable inspection.

28 Aug 2025

Mechanomyography (MMG) is a promising tool for measuring muscle activity in the field but its sensitivity to motion artifacts limits its application. In this study, we proposed an adaptative filtering method for MMG accelerometers based on the complete ensemble empirical mode decomposition, with adaptative noise and spectral fuzzy entropy, to isolate motions artefacts from the MMG signal in dynamic conditions. We compared our method with the traditional band-pass filtering technique, demonstrating better results concerning motion recomposition for deltoid and erector spinae muscles (R2 = 0.907 and 0.842). Thus, this innovative method allows the filtering of motion artifacts dynamically in the 5-20 Hz bandwidth, which is not achievable with traditional method. However, the interpretation of accelerometric MMG signals from the trunk and lower-limb muscles during walking or running should be approached with great caution as impact-related accelerations are still present, though their exact quantity still needs to be quantified.

This chapter explores three key questions in blockchain ethics. First, it

situates blockchain ethics within the broader field of technology ethics,

outlining its goals and guiding principles. Second, it examines the unique

ethical challenges of blockchain applications, including permissionless

systems, incentive mechanisms, and privacy concerns. Key obstacles, such as

conceptual modeling and information asymmetries, are identified as critical

issues. Finally, the chapter argues that blockchain ethics should be approached

as an engineering discipline, emphasizing the analysis and design of trade-offs

in complex systems.

08 Dec 2022

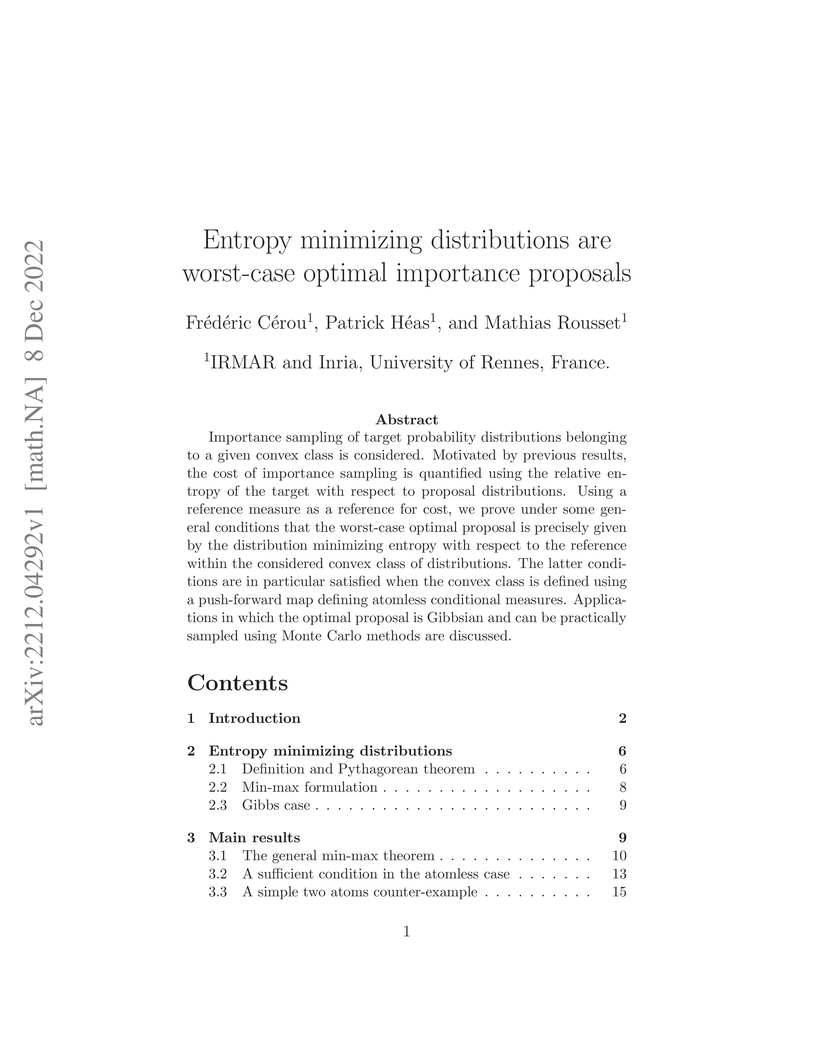

Importance sampling of target probability distributions belonging to a given

convex class is considered. Motivated by previous results, the cost of

importance sampling is quantified using the relative entropy of the target with

respect to proposal distributions. Using a reference measure as a reference for

cost, we prove under some general conditions that the worst-case optimal

proposal is precisely given by the distribution minimizing entropy with respect

to the reference within the considered convex class of distributions. The

latter conditions are in particular satisfied when the convex class is defined

using a push-forward map defining atomless conditional measures. Applications

in which the optimal proposal is Gibbsian and can be practically sampled using

Monte Carlo methods are discussed.

Deep learning has emerged as a powerful method for extracting valuable

information from large volumes of data. However, when new training data arrives

continuously (i.e., is not fully available from the beginning), incremental

training suffers from catastrophic forgetting (i.e., new patterns are

reinforced at the expense of previously acquired knowledge). Training from

scratch each time new training data becomes available would result in extremely

long training times and massive data accumulation. Rehearsal-based continual

learning has shown promise for addressing the catastrophic forgetting

challenge, but research to date has not addressed performance and scalability.

To fill this gap, we propose an approach based on a distributed rehearsal

buffer that efficiently complements data-parallel training on multiple GPUs,

allowing us to achieve short runtime and scalability while retaining high

accuracy. It leverages a set of buffers (local to each GPU) and uses several

asynchronous techniques for updating these local buffers in an embarrassingly

parallel fashion, all while handling the communication overheads necessary to

augment input mini-batches (groups of training samples fed to the model) using

unbiased, global sampling. In this paper we explore the benefits of this

approach for classification models. We run extensive experiments on up to 128

GPUs of the ThetaGPU supercomputer to compare our approach with baselines

representative of training-from-scratch (the upper bound in terms of accuracy)

and incremental training (the lower bound). Results show that rehearsal-based

continual learning achieves a top-5 classification accuracy close to the upper

bound, while simultaneously exhibiting a runtime close to the lower bound.

23 Apr 2024

We define and explain the quasistatic approximation (QSA) as applied to field

modeling for electrical and magnetic stimulation. Neuromodulation analysis

pipelines include discrete stages, and QSA is applied specifically when

calculating the electric and magnetic fields generated in tissues by a given

stimulation dose. QSA simplifies the modeling equations to support tractable

analysis, enhanced understanding, and computational efficiency. The application

of QSA in neuro-modulation is based on four underlying assumptions: (A1) no

wave propagation or self-induction in tissue, (A2) linear tissue properties,

(A3) purely resistive tissue, and (A4) non-dispersive tissue. As a consequence

of these assumptions, each tissue is assigned a fixed conductivity, and the

simplified equations (e.g., Laplace's equation) are solved for the spatial

distribution of the field, which is separated from the field's temporal

waveform. Recognizing that electrical tissue properties may be more complex, we

explain how QSA can be embedded in parallel or iterative pipelines to model

frequency dependence or nonlinearity of conductivity. We survey the history and

validity of QSA across specific applications, such as microstimulation, deep

brain stimulation, spinal cord stimulation, transcranial electrical

stimulation, and transcranial magnetic stimulation. The precise definition and

explanation of QSA in neuromodulation are essential for rigor when using QSA

models or testing their limits.

The analysis of electrophysiological data is crucial for certain surgical

procedures such as deep brain stimulation, which has been adopted for the

treatment of a variety of neurological disorders. During the procedure,

auditory analysis of these signals helps the clinical team to infer the

neuroanatomical location of the stimulation electrode and thus optimize

clinical outcomes. This task is complex, and requires an expert who in turn

requires significant training. In this paper, we propose a generative neural

network, called MerGen, capable of simulating de novo electrophysiological

recordings, with a view to providing a realistic learning tool for clinicians

trainees for identifying these signals. We demonstrate that the generated

signals are perceptually indistinguishable from real signals by experts in the

field, and that it is even possible to condition the generation efficiently to

provide a didactic simulator adapted to a particular surgical scenario. The

efficacy of this conditioning is demonstrated, comparing it to intra-observer

and inter-observer variability amongst experts. We also demonstrate the use of

this network for data augmentation for automatic signal classification which

can play a role in decision-making support in the operating theatre.

There are no more papers matching your filters at the moment.