University of Tübingen; Tübingen AI Center

28 Sep 2025

Researchers from institutions including the Max Planck Institute for Intelligent Systems demonstrate that marginal gains in LLM single-step accuracy lead to exponential improvements in long-horizon task completion, challenging the perception of diminishing returns from scaling. The study identifies 'self-conditioning' as a distinct execution failure mode and shows that sequential test-time compute significantly extends reliable task execution.
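The compounding effect can be illustrated with a toy model. This is a minimal sketch, not necessarily the paper's formulation: it assumes every step succeeds independently with the same accuracy `p`, so a task of `H` steps succeeds with probability `p**H`. The function name `horizon_length` and the 50% success threshold are hypothetical choices made for illustration.

```python
import math


def horizon_length(step_accuracy: float, target_success: float = 0.5) -> float:
    """Task length H at which p**H equals target_success.

    Assumes independent steps with constant accuracy p (a simplification).
    Solving p**H = s for H gives H = ln(s) / ln(p).
    """
    if not 0.0 < step_accuracy < 1.0:
        raise ValueError("step_accuracy must lie strictly between 0 and 1")
    if not 0.0 < target_success < 1.0:
        raise ValueError("target_success must lie strictly between 0 and 1")
    return math.log(target_success) / math.log(step_accuracy)


# Small gains in per-step accuracy translate into much longer horizons.
for p in (0.90, 0.99, 0.999):
    print(f"step accuracy {p}: horizon ~ {horizon_length(p):.1f} steps")
```

Under these assumptions, moving from 99% to 99.9% step accuracy lengthens the horizon roughly tenfold, even though the accuracy gain itself looks marginal.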

Researchers from the University of Tübingen and the Tübingen AI Center developed an axiomatic framework for loss aggregation in online learning, identifying quasi-sums as the fundamental class of aggregation functions. They adapted Vovk's Aggregating Algorithm to work with these generalized aggregations, showing how the choice of aggregation reflects a learner's risk attitude while preserving strong theoretical regret guarantees.
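To make the idea of a quasi-sum concrete, the sketch below assumes the common form `u_inv(sum(u(x_i)))` for a strictly monotone generator `u`; the summary above does not spell out the definition, so this form, the function name `quasi_sum`, and the choice of generators are assumptions for illustration rather than the paper's exact construction.

```python
import math
from typing import Callable, Sequence


def quasi_sum(
    losses: Sequence[float],
    u: Callable[[float], float],
    u_inv: Callable[[float], float],
) -> float:
    """Aggregate losses as u_inv(sum(u(loss))) for a monotone generator u."""
    return u_inv(sum(u(x) for x in losses))


losses = [0.1, 0.2, 2.0]

# Identity generator: recovers the ordinary sum of losses.
plain = quasi_sum(losses, lambda x: x, lambda y: y)

# Exponential generator (rate lam is a hypothetical parameter): the result
# is dominated by the largest loss, which can be read as more risk-averse.
lam = 2.0
exp_agg = quasi_sum(
    losses,
    lambda x: math.exp(lam * x),
    lambda y: math.log(y) / lam,
)

print(f"plain sum: {plain:.3f}, exponential quasi-sum: {exp_agg:.3f}")
```

With the exponential generator, the two small losses barely move the aggregate above the largest single loss, whereas the plain sum counts all three equally.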

TTT3R improves the length generalization of recurrent 3D reconstruction models by integrating a test-time training (TTT) approach with a confidence-aware state update rule. This method maintains constant memory and real-time inference, achieving a 2x improvement in global pose estimation accuracy for long sequences on datasets like ScanNet and TUM-D compared to prior RNN-based baselines.

KAIST; University of Washington; University of Toronto; Carnegie Mellon University; Université de Montréal; New York University; University of Chicago; UC Berkeley; University of Oxford; Stanford University; University of Michigan; Cornell University; Nanyang Technological University; Vector Institute; LG AI Research; MIT; HKUST; University of Tübingen; Hong Kong Baptist University; University of California, Santa Cruz; Center for AI Safety; Gray Swan AI; Beneficial AI Research; Conjecture; LawZero; University of Wisconsin–Madison; Morph Labs; Institute for Applied Psychometrics; CSER

The lack of a concrete definition for Artificial General Intelligence (AGI) obscures the gap between today's specialized AI and human-level cognition. This paper introduces a quantifiable framework to address this, defining AGI as matching the cognitive versatility and proficiency of a well-educated adult. To operationalize this, we ground our methodology in Cattell-Horn-Carroll theory, the most empirically validated model of human cognition. The framework dissects general intelligence into ten core cognitive domains (including reasoning, memory, and perception) and adapts established human psychometric batteries to evaluate AI systems. Application of this framework reveals a highly "jagged" cognitive profile in contemporary models. While proficient in knowledge-intensive domains, current AI systems have critical deficits in foundational cognitive machinery, particularly long-term memory storage. The resulting AGI scores (e.g., GPT-4 at 27%, GPT-5 at 57%) concretely quantify both rapid progress and the substantial gap remaining before AGI.

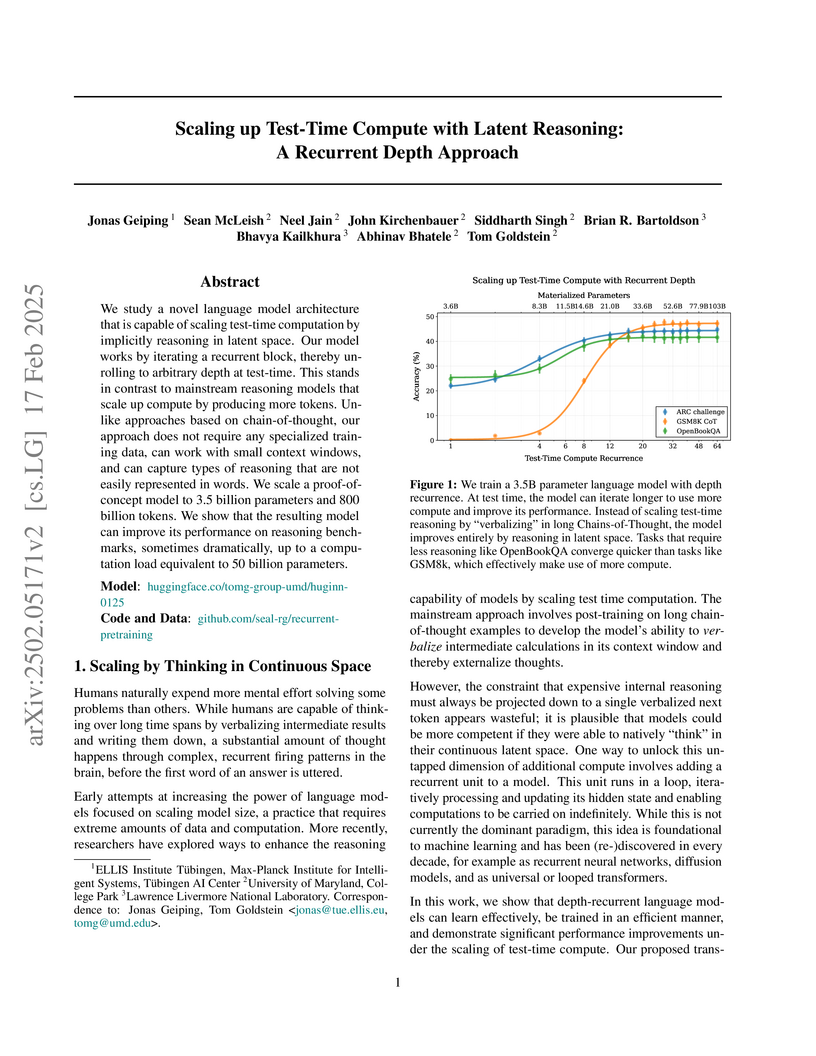

Researchers from ELLIS Institute Tübingen, University of Maryland, and Lawrence Livermore National Laboratory introduce a recurrent depth transformer architecture that scales reasoning abilities by implicitly processing information in a continuous latent space. The 3.5 billion parameter model, Huginn-0125, trained on the Frontier supercomputer, demonstrates significant performance gains on reasoning benchmarks with increased test-time iterations, sometimes matching or exceeding larger models without requiring specialized Chain-of-Thought training data.

Although multilingual language models exhibit impressive cross-lingual transfer capabilities on unseen languages, the performance on downstream tasks is impacted when there is a script disparity with the languages used in the multilingual model's pre-training data. Using transliteration offers a straightforward yet effective means to align the script of a resource-rich language with a target language, thereby enhancing cross-lingual transfer capabilities. However, for mixed languages, this approach is suboptimal, since only a subset of the language benefits from the cross-lingual transfer while the remainder is impeded. In this work, we focus on Maltese, a Semitic language with substantial influences from Arabic, Italian, and English, and notably written in Latin script. We present a novel dataset annotated with word-level etymology. We use this dataset to train a classifier that enables us to make informed decisions regarding the appropriate processing of each token in the Maltese language. We contrast indiscriminate transliteration or translation with mixed processing pipelines that only transliterate words of Arabic origin, thereby resulting in text with a mixture of scripts. We fine-tune on the processed data for four downstream tasks and show that conditional transliteration based on word etymology yields the best results, surpassing fine-tuning with raw Maltese or Maltese processed with non-selective pipelines.

HUMAN3R presents a unified, feed-forward framework for online 4D human-scene reconstruction from monocular video. The system jointly estimates multi-person global human motions, dense 3D scene geometry, and camera parameters in real-time at 15 FPS, outperforming or matching prior methods on various reconstruction benchmarks while consuming only 8 GB of GPU memory.

ETH Zurich; KAIST; University of Washington; Rensselaer Polytechnic Institute; Google DeepMind; University of Amsterdam; University of Illinois at Urbana-Champaign; University of Cambridge; Heidelberg University; University of Waterloo; Facebook; Carnegie Mellon University; University of Southern California; Google; New York University; University of Stuttgart; UC Berkeley; National University of Singapore; University College London; University of Oxford; LMU Munich; Shanghai Jiao Tong University; University of California, Irvine; Tsinghua University; Stanford University; University of Michigan; University of Copenhagen; The Chinese University of Hong Kong; University of Melbourne; Meta; University of Edinburgh; OpenAI; The University of Texas at Austin; Cornell University; University of California, San Diego; Yonsei University; McGill University; Boston University; University of Bamberg; Nanyang Technological University; Microsoft; KU Leuven; Columbia University; UC Santa Barbara; Allen Institute for AI; German Research Center for Artificial Intelligence (DFKI); University of Pennsylvania; Johns Hopkins University; Arizona State University; University of Maryland; University of Tokyo; University of North Carolina at Chapel Hill; Hebrew University of Jerusalem; Amazon; Tilburg University; University of Massachusetts Amherst; University of Rochester; University of Duisburg-Essen; Sapienza University of Rome; University of Sheffield; Princeton University; HKUST; University of Tübingen; TU Berlin; Saarland University; Technical University of Darmstadt; University of Haifa; University of Trento; University of Montreal; Bilkent University; University of Cape Town; Bar Ilan University; IBM; University of Mannheim; ServiceNow; Potsdam University; Polish-Japanese Academy of Information Technology; Salesforce; ASAPP; AI21 Labs; Valencia Polytechnic University; University of Trento, Italy

A large-scale and diverse benchmark, BIG-bench, was introduced to rigorously evaluate the capabilities and limitations of large language models across 204 tasks. The evaluation revealed that even state-of-the-art models currently achieve aggregate scores below 20 (on a 0-100 normalized scale), indicating significantly lower performance compared to human experts.

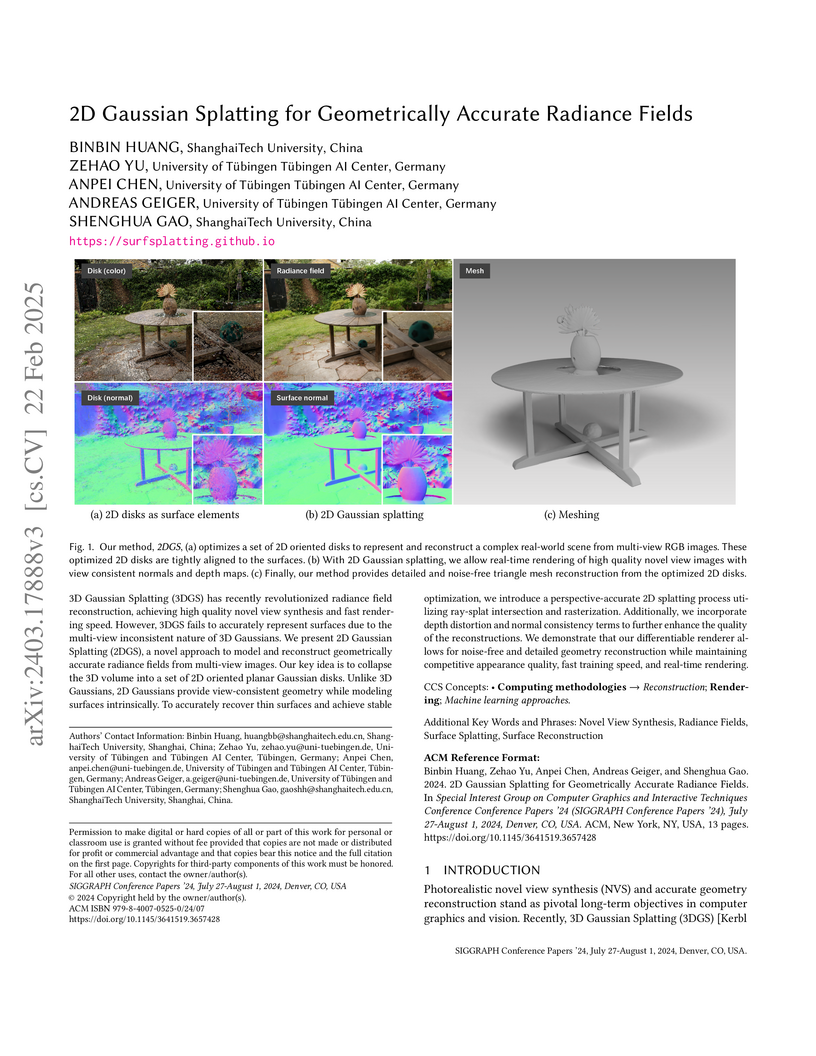

Researchers from ShanghaiTech University and the University of Tübingen introduce 2D Gaussian Splatting (2DGS), a method that represents scenes with oriented planar Gaussian disks to achieve geometrically accurate 3D reconstruction and high-quality novel view synthesis. 2DGS produces detailed and noise-free explicit meshes up to 100 times faster than prior implicit methods while maintaining competitive visual quality.

Vista introduces a driving world model that generates high-fidelity, long-horizon future predictions at 10 Hz and 576x1024 resolution, capable of cross-dataset generalization and versatile control via various action modalities. The model also functions as a generalizable reward function by leveraging its prediction uncertainty to evaluate actions.

NAVSIM offers a data-driven non-reactive simulation and benchmarking framework for autonomous vehicles, designed to evaluate end-to-end driving policies against challenging real-world scenarios. It introduces a comprehensive scoring function, the PDM Score, which demonstrates better alignment with full closed-loop simulation compared to conventional open-loop metrics, and reveals that simpler models can achieve competitive performance against more complex architectures.

DR.LLM introduces a retrofittable framework for Large Language Models that dynamically adjusts computational depth, achieving a mean accuracy gain of +2.25 percentage points and 5.0 fewer layers executed on average on in-domain tasks, while robustly generalizing to out-of-domain benchmarks with minimal accuracy drop.

DriveLM introduces Graph Visual Question Answering (GVQA) as a principled framework for autonomous driving, mimicking human multi-step reasoning. This approach enhances generalization capabilities across unseen sensor configurations and novel objects while also providing explainable, language-based decision processes for end-to-end driving.

Large language model (LLM) developers aim for their models to be honest, helpful, and harmless. However, when faced with malicious requests, models are trained to refuse, sacrificing helpfulness. We show that frontier LLMs can develop a preference for dishonesty as a new strategy, even when other options are available. Affected models respond to harmful requests with outputs that sound harmful but are crafted to be subtly incorrect or otherwise harmless in practice. This behavior emerges with hard-to-predict variations even within models from the same model family. We find no apparent cause for the propensity to deceive, but show that more capable models are better at executing this strategy. Strategic dishonesty already has a practical impact on safety evaluations, as we show that dishonest responses fool all output-based monitors used to detect jailbreaks that we test, rendering benchmark scores unreliable. Further, strategic dishonesty can act like a honeypot against malicious users, which noticeably obfuscates prior jailbreak attacks. While output monitors fail, we show that linear probes on internal activations can be used to reliably detect strategic dishonesty. We validate probes on datasets with verifiable outcomes and by using them as steering vectors. Overall, we consider strategic dishonesty as a concrete example of a broader concern that alignment of LLMs is hard to control, especially when helpfulness and harmlessness conflict.

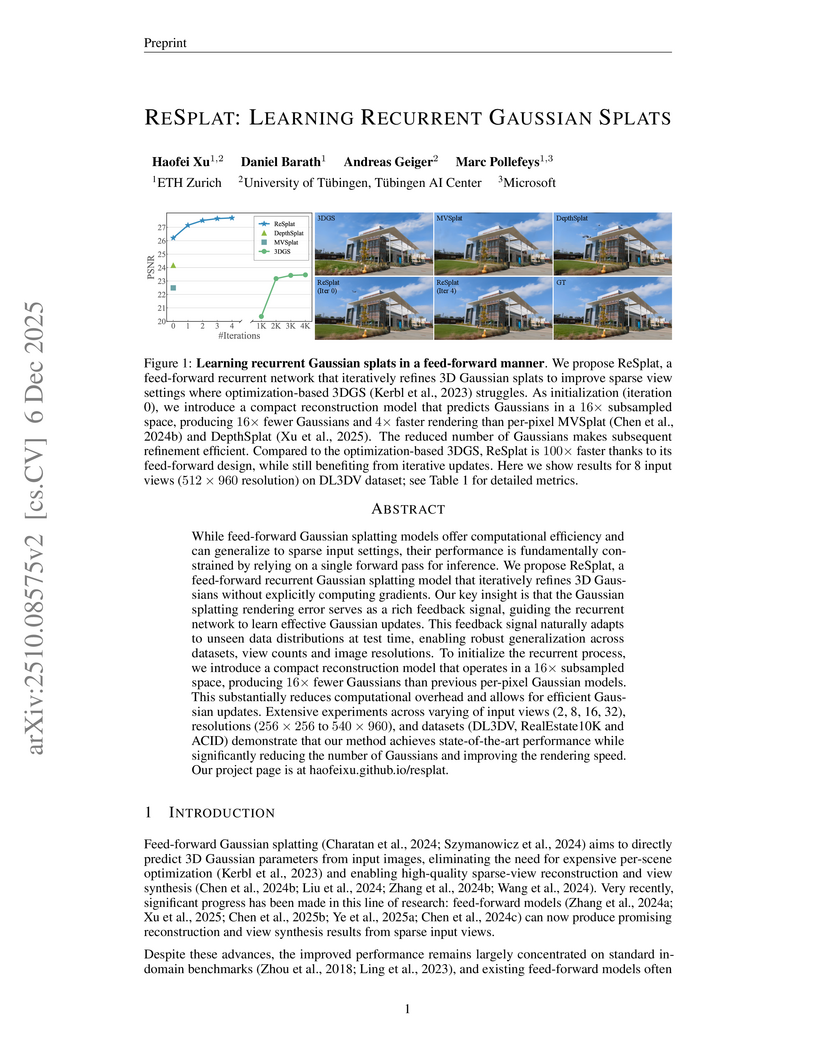

While feed-forward Gaussian splatting models offer computational efficiency and can generalize to sparse input settings, their performance is fundamentally constrained by relying on a single forward pass for inference. We propose ReSplat, a feed-forward recurrent Gaussian splatting model that iteratively refines 3D Gaussians without explicitly computing gradients. Our key insight is that the Gaussian splatting rendering error serves as a rich feedback signal, guiding the recurrent network to learn effective Gaussian updates. This feedback signal naturally adapts to unseen data distributions at test time, enabling robust generalization across datasets, view counts and image resolutions. To initialize the recurrent process, we introduce a compact reconstruction model that operates in a 16× subsampled space, producing 16× fewer Gaussians than previous per-pixel Gaussian models. This substantially reduces computational overhead and allows for efficient Gaussian updates. Extensive experiments across varying numbers of input views (2, 8, 16, 32), resolutions (256×256 to 540×960), and datasets (DL3DV, RealEstate10K and ACID) demonstrate that our method achieves state-of-the-art performance while significantly reducing the number of Gaussians and improving the rendering speed. Our project page is at this https URL.

A new evaluation paradigm for autonomous vehicles, pseudo-simulation, combines the realism of real-world data with the efficiency of offline processing, demonstrating a Pearson correlation of r = 0.89 with closed-loop simulation while reducing environment interactions by 6x.

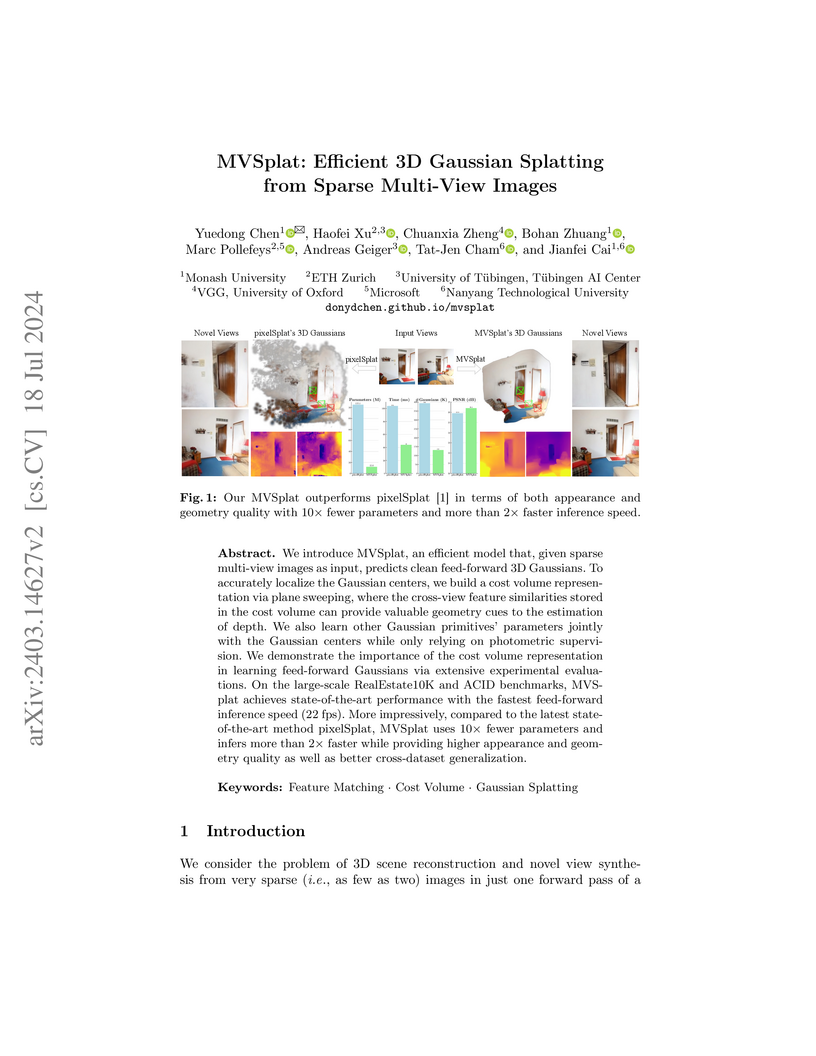

MVSplat presents an efficient, generalizable feed-forward model that generates high-quality 3D Gaussian Splatting representations from sparse multi-view images. It achieves state-of-the-art visual quality with over 2x faster inference (22 fps) and a 10x smaller model size (12M parameters) than prior methods by integrating multi-view stereo cost volumes for robust 3D geometry estimation.

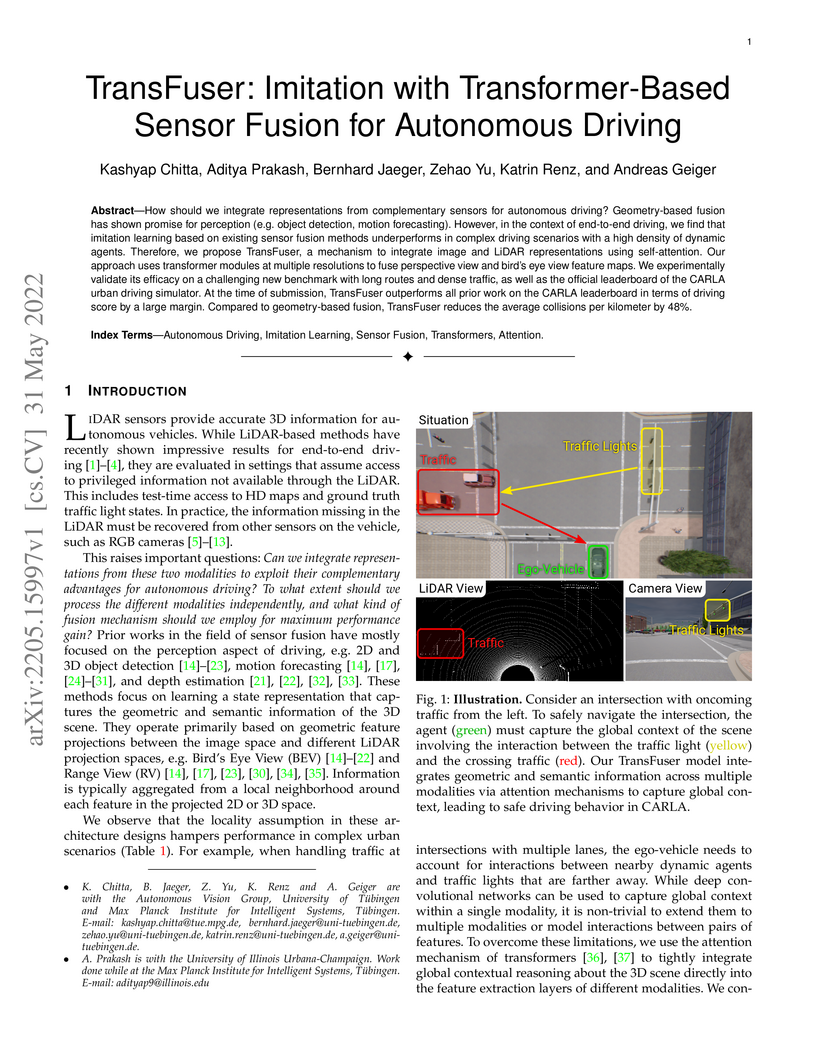

TransFuser presents a transformer-based sensor fusion approach for end-to-end autonomous driving, leveraging self-attention mechanisms to integrate camera and LiDAR data and capture global contextual relationships. This method achieves superior driving scores and a 48% reduction in collision rates on a challenging urban benchmark, demonstrating improved safety and robustness in complex environments.

Orientation-rich images, such as fingerprints and textures, often exhibit coherent angular directional patterns that are challenging to model using standard generative approaches based on isotropic Euclidean diffusion. Motivated by the role of phase synchronization in biological systems, we propose a score-based generative model built on periodic domains by leveraging stochastic Kuramoto dynamics in the diffusion process. In neural and physical systems, Kuramoto models capture synchronization phenomena across coupled oscillators, a behavior that we re-purpose here as an inductive bias for structured image generation. In our framework, the forward process performs *synchronization* among phase variables through globally or locally coupled oscillator interactions and attraction to a global reference phase, gradually collapsing the data into a low-entropy von Mises distribution. The reverse process then performs *desynchronization*, generating diverse patterns by reversing the dynamics with a learned score function. This approach enables structured destruction during forward diffusion and a hierarchical generation process that progressively refines global coherence into fine-scale details. We implement wrapped Gaussian transition kernels and periodicity-aware networks to account for the circular geometry. Our method achieves competitive results on general image benchmarks and significantly improves generation quality on orientation-dense datasets like fingerprints and textures. Ultimately, this work demonstrates the promise of biologically inspired synchronization dynamics as structured priors in generative modeling.
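The synchronizing forward process described above can be sketched with a small Euler-Maruyama simulation. This is an illustrative toy under stated assumptions, not the authors' implementation: it uses global mean-field coupling only, and the drift form, the function names, and all constants (`coupling`, `ref_strength`, `noise`, `dt`, `steps`) are hypothetical choices. The order parameter `r` measures coherence, from 0 (incoherent phases) to 1 (fully synchronized).

```python
import cmath
import math
import random


def order_parameter(thetas):
    """Return (r, phi) such that r * exp(i * phi) is the mean of exp(i * theta)."""
    z = sum(cmath.exp(1j * t) for t in thetas) / len(thetas)
    return abs(z), cmath.phase(z)


def kuramoto_forward(thetas, coupling=2.0, ref_strength=1.0, ref_phase=0.0,
                     noise=0.3, dt=0.01, steps=1000, seed=0):
    """Simulate noisy, globally coupled phases attracted to a reference phase."""
    rng = random.Random(seed)
    thetas = list(thetas)
    for _ in range(steps):
        # Mean-field identity: (1/N) * sum_j sin(theta_j - theta_i)
        # equals r * sin(phi - theta_i).
        r, phi = order_parameter(thetas)
        updated = []
        for t in thetas:
            drift = (coupling * r * math.sin(phi - t)
                     + ref_strength * math.sin(ref_phase - t))
            step = drift * dt + noise * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            # Wrap back onto the circle [0, 2*pi).
            updated.append((t + step) % (2.0 * math.pi))
        thetas = updated
    return thetas


init_rng = random.Random(1)
initial = [init_rng.uniform(0.0, 2.0 * math.pi) for _ in range(200)]
final = kuramoto_forward(initial)

r_start, _ = order_parameter(initial)
r_end, _ = order_parameter(final)
print(f"coherence before: {r_start:.2f}, after: {r_end:.2f}")
```

Starting from roughly uniform phases, the coherence rises as the phases collapse toward the reference, which mirrors the structured destruction of information in the forward direction; a generative model would then learn to reverse this drift.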

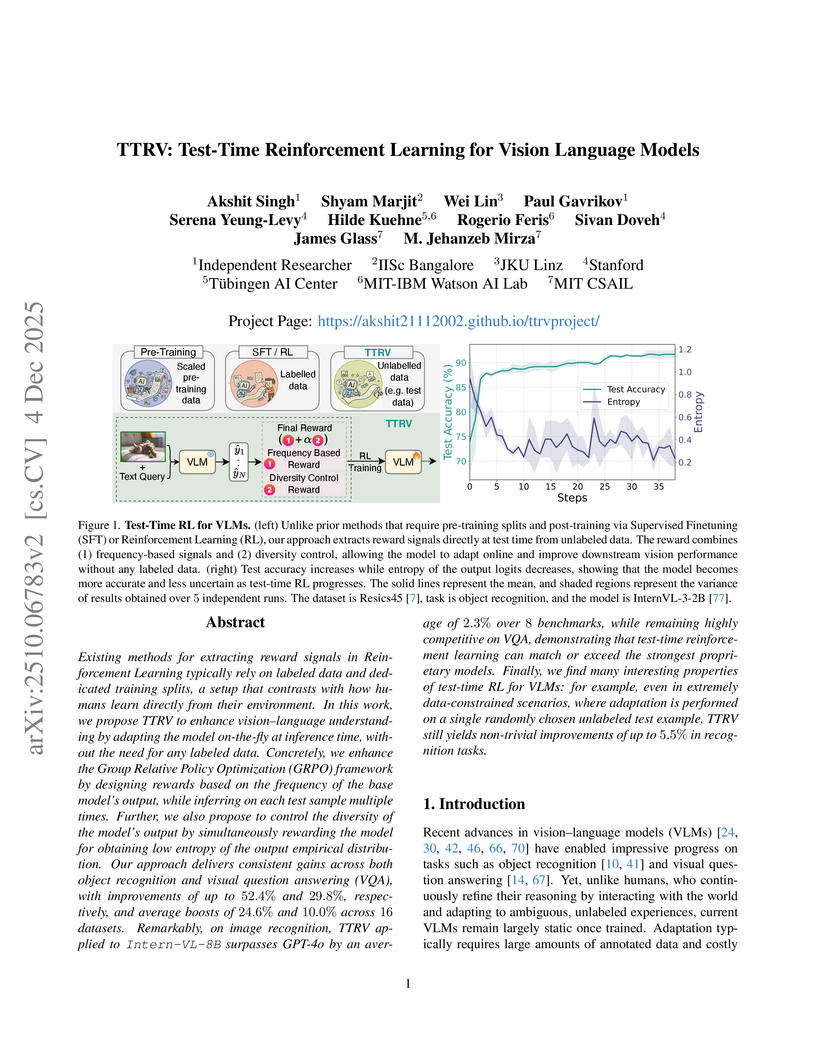

Existing methods for extracting reward signals in Reinforcement Learning typically rely on labeled data and dedicated training splits, a setup that contrasts with how humans learn directly from their environment. In this work, we propose TTRV to enhance vision language understanding by adapting the model on the fly at inference time, without the need for any labeled data. Concretely, we enhance the Group Relative Policy Optimization (GRPO) framework by designing rewards based on the frequency of the base model's output, while inferring on each test sample multiple times. Further, we also propose to control the diversity of the model's output by simultaneously rewarding the model for obtaining low entropy of the output empirical distribution. Our approach delivers consistent gains across both object recognition and visual question answering (VQA), with improvements of up to 52.4% and 29.8%, respectively, and average boosts of 24.6% and 10.0% across 16 datasets. Remarkably, on image recognition, TTRV applied to InternVL 8B surpasses GPT-4o by an average of 2.3% over 8 benchmarks, while remaining highly competitive on VQA, demonstrating that test-time reinforcement learning can match or exceed the strongest proprietary models. Finally, we find many interesting properties of test-time RL for VLMs: for example, even in extremely data-constrained scenarios, where adaptation is performed on a single randomly chosen unlabeled test example, TTRV still yields non-trivial improvements of up to 5.5% in recognition tasks.
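The two reward signals described above can be sketched as follows. This is a simplified illustration, not the authors' implementation: it assumes each test sample has been answered several times, rewards each answer by its empirical frequency, and subtracts a penalty proportional to the entropy of the empirical answer distribution. The function names and the `entropy_weight` parameter are hypothetical, and the GRPO policy update itself is omitted.

```python
import math
from collections import Counter


def frequency_rewards(samples):
    """Reward each sampled answer by how often it occurs among the samples."""
    counts = Counter(samples)
    n = len(samples)
    return [counts[s] / n for s in samples]


def empirical_entropy(samples):
    """Shannon entropy (in nats) of the empirical answer distribution."""
    counts = Counter(samples)
    n = len(samples)
    return -sum((c / n) * math.log(c / n) for c in counts.values())


def combined_rewards(samples, entropy_weight=0.5):
    """Frequency reward minus an entropy penalty shared by all samples."""
    penalty = entropy_weight * empirical_entropy(samples)
    return [f - penalty for f in frequency_rewards(samples)]


# Four hypothetical answers sampled for one unlabeled test image.
answers = ["cat", "cat", "dog", "cat"]
print(frequency_rewards(answers))
print(round(empirical_entropy(answers), 4))
print([round(x, 4) for x in combined_rewards(answers)])
```

In this sketch the majority answer receives the highest reward, and the entropy term pushes the sampled outputs toward agreement; how the two terms are actually weighted and combined in TTRV is not specified in the abstract.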
