University of Utah

Researchers developed a three-stage pipeline to assess how well foundation models transfer to precision-medicine applications involving physiological signals. The pipeline uses BioGears to generate synthetic data and then evaluates embedding quality. Applied first to the Moirai model, it revealed limitations in zero-shot transfer: spurious correlations, poor signal reconstruction, and distorted temporal dynamics in the physiological embeddings.

KBASS introduces a robust framework for discovering governing equations from data, combining kernel learning with Bayesian spike-and-slab priors and efficient tensor algebra. This approach consistently recovers ground-truth equations from sparse and noisy data, outperforming state-of-the-art methods like SINDy, PINN-SR, and BSL while providing principled uncertainty quantification and improved computational efficiency.
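In sparse equation discovery, a spike-and-slab prior is typically used to decide which candidate library terms belong in the governing equation. The sketch below illustrates only that selection idea. It assumes a two-component Gaussian form: a narrow "spike" around zero and a wide "slab". For each candidate term, it computes the posterior probability that the term's coefficient came from the slab. The term names, coefficient values, and the parameters `pi_slab`, `spike_sd`, and `slab_sd` are hypothetical. The sketch also leaves out KBASS's kernel learning and tensor-algebra machinery entirely.

```python
import math

def log_normal_pdf(x: float, sd: float) -> float:
    """Log density of a zero-mean Gaussian with standard deviation sd."""
    return -0.5 * (x / sd) ** 2 - math.log(sd * math.sqrt(2.0 * math.pi))

def inclusion_probability(w: float, pi_slab: float = 0.5,
                          spike_sd: float = 0.01, slab_sd: float = 1.0) -> float:
    """Posterior probability that coefficient w was drawn from the slab,
    i.e. that the candidate term belongs in the equation (hypothetical
    two-component Gaussian spike-and-slab prior)."""
    log_slab = math.log(pi_slab) + log_normal_pdf(w, slab_sd)
    log_spike = math.log(1.0 - pi_slab) + log_normal_pdf(w, spike_sd)
    # Numerically stable logistic of the log-odds (avoids under/overflow).
    z = log_slab - log_spike
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Candidate library terms with hypothetical fitted coefficients.
coeffs = {"u": 0.98, "u_x": 0.003, "u_xx": 0.12, "u^2": -0.001}
selected = [name for name, w in coeffs.items() if inclusion_probability(w) > 0.5]
print(selected)
```

With these toy settings, the near-zero coefficients are attributed to the spike and only `u` and `u_xx` are kept. Working in log space keeps the probability well defined even for very large coefficients.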

University of Toronto; Max Planck Institute for Intelligent Systems; University of Utah; UCLA; University of Manchester; National University of Singapore; University of Oxford; Tsinghua University; Zhejiang University; The Chinese University of Hong Kong; Westlake University; University of Electronic Science and Technology of China; University of California, San Diego; Peking University; Columbia University; University of Sydney; Università degli Studi di Genova; Istituto Italiano di Tecnologia; University of Birmingham

Researchers at the University of Toronto, Westlake University, and the University of Electronic Science and Technology of China, along with a global consortium, developed aiXiv, an open-access ecosystem designed for AI-generated scientific content and human-AI collaboration. This platform, featuring a multi-agent review system and iterative refinement, raised the acceptance rate of AI-generated proposals from 0% to 45.2% and papers from 10% to 70% in multi-AI voting, demonstrating enhanced quality and trustworthiness.

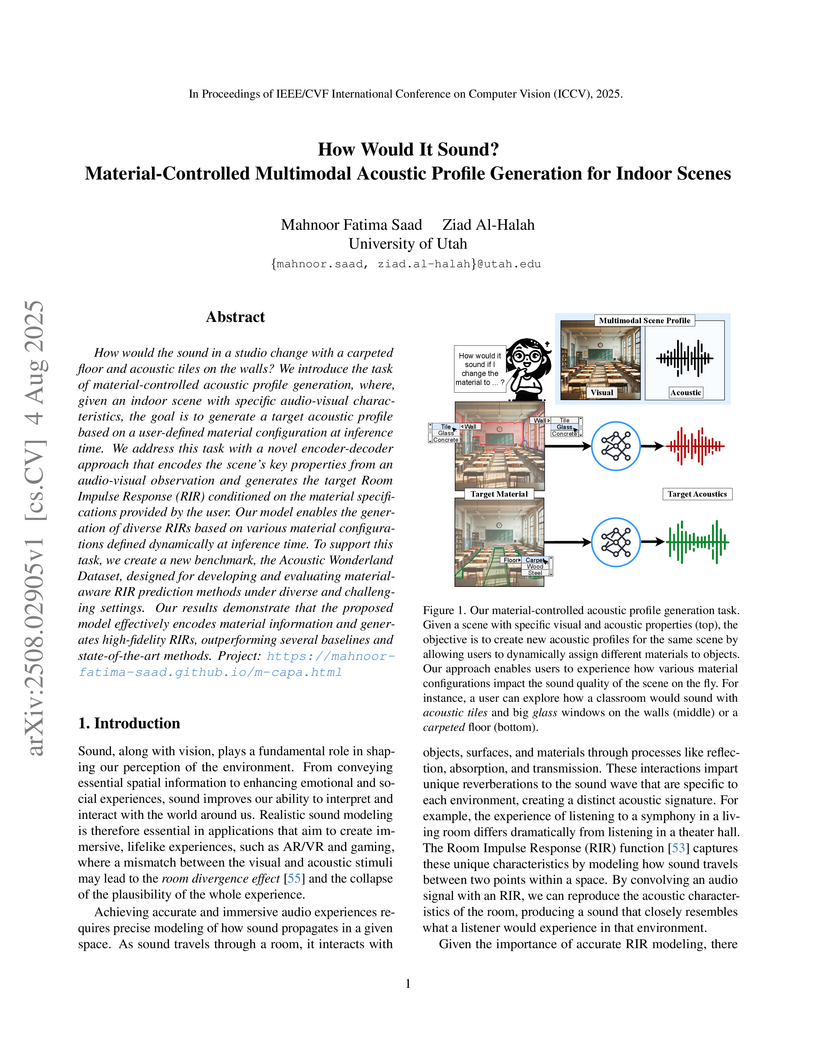

How would the sound in a studio change with a carpeted floor and acoustic tiles on the walls? We introduce the task of material-controlled acoustic profile generation, where, given an indoor scene with specific audio-visual characteristics, the goal is to generate a target acoustic profile based on a user-defined material configuration at inference time. We address this task with a novel encoder-decoder approach that encodes the scene's key properties from an audio-visual observation and generates the target Room Impulse Response (RIR) conditioned on the material specifications provided by the user. Our model enables the generation of diverse RIRs based on various material configurations defined dynamically at inference time. To support this task, we create a new benchmark, the Acoustic Wonderland Dataset, designed for developing and evaluating material-aware RIR prediction methods under diverse and challenging settings. Our results demonstrate that the proposed model effectively encodes material information and generates high-fidelity RIRs, outperforming several baselines and state-of-the-art methods.
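An RIR fully describes a linear, time-invariant room response. Rendering how a dry (anechoic) recording would sound in that room therefore amounts to convolving the recording with the RIR. The NumPy sketch below illustrates that rendering step only, under several simplifying assumptions:

- The RIR is exponentially decaying noise.
- The helper names `toy_rir` and `render` are hypothetical.
- A shorter decay time `rt60` stands in for more absorptive materials such as carpet or acoustic tiles.

None of this is the encoder-decoder model described above.

```python
import numpy as np

def toy_rir(rt60: float, sr: int = 16000, seed: int = 0) -> np.ndarray:
    """Toy RIR: white noise under an exponential envelope that falls by
    60 dB (amplitude factor 1e-3) after rt60 seconds. Hypothetical:
    a shorter rt60 mimics more absorptive surface materials."""
    rng = np.random.default_rng(seed)
    n = int(rt60 * sr)
    t = np.arange(n) / sr
    envelope = 10.0 ** (-3.0 * t / rt60)
    h = rng.standard_normal(n) * envelope
    h[0] = 1.0  # direct-path impulse
    return h

def render(dry: np.ndarray, rir: np.ndarray) -> np.ndarray:
    """Reverberant signal = dry signal convolved with the RIR."""
    return np.convolve(dry, rir)

sr = 16000
dry = np.zeros(sr // 10)
dry[0] = 1.0  # a single click
reflective = render(dry, toy_rir(rt60=0.8, sr=sr))  # e.g. bare, hard surfaces
absorptive = render(dry, toy_rir(rt60=0.2, sr=sr))  # e.g. carpet + acoustic tiles
print(len(reflective), len(absorptive))
```

In this toy setup, the absorptive configuration yields a shorter reverberant tail. A learned model would instead predict the RIR from the audio-visual observation and the user's material specification.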

WonderPlay introduces a hybrid generative simulator capable of creating action-conditioned dynamic 3D scenes from a single 2D image, integrating physics solvers with a video diffusion model to depict realistic interactions across diverse materials. The system demonstrates superior physical plausibility and visual quality compared to existing methods, earning 70% to 85% preference in user studies.

MAIN-RAG is a training-free, multi-agent LLM framework designed to filter noisy documents in Retrieval-Augmented Generation (RAG) systems. It consistently outperforms training-free baselines and achieves competitive performance with training-based RAG models by using an adaptive filtering mechanism that quantifies document relevance based on LLM judgments.
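The adaptive filtering step can be sketched as a query-specific threshold over per-document relevance scores. For illustration, the sketch below assumes two things:

- Each score is an LLM judge's log-probability margin of "Yes" over "No".
- The cut-off is the mean score shifted down by `n` standard deviations.

The function name `adaptive_filter`, the knob `n`, and the example scores are hypothetical, not taken from the MAIN-RAG implementation.

```python
import statistics

def adaptive_filter(docs: list[str], scores: list[float], n: float = 0.0) -> list[str]:
    """Keep documents whose relevance score clears a query-specific bar.

    scores: one relevance score per document, e.g. an LLM judge's
            log P("Yes") - log P("No") (an assumption for this sketch).
    n:      hypothetical knob; the bar is mean(scores) - n * stdev(scores),
            so a larger n keeps more documents.
    Kept documents are returned in descending score order.
    """
    mean = statistics.fmean(scores)
    std = statistics.pstdev(scores)
    bar = mean - n * std
    kept = [(s, d) for s, d in zip(scores, docs) if s >= bar]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in kept]

docs = ["doc_a", "doc_b", "doc_c", "doc_d"]
scores = [2.1, -1.5, 0.7, -3.0]
print(adaptive_filter(docs, scores))         # strict bar at the mean
print(adaptive_filter(docs, scores, n=1.0))  # looser bar
```

The bar is recomputed for every query from that query's own score distribution. The filter therefore adapts to how noisy each retrieved set is, rather than relying on a single global cut-off.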

University of Utah; University of Notre Dame; UC Berkeley; Stanford University; University of Michigan; Texas A&M University; NVIDIA; University of Texas at Austin; Columbia University; Lehigh University; University of Florida; Johns Hopkins University; Arizona State University; University of Wisconsin-Madison; Purdue University; University of California, Merced; Technische Universität München; Bosch Research North America; Bosch Center for Artificial Intelligence (BCAI); University of California Riverside; Adobe; Cleveland State University; Texas A&M Transportation Institute

A comprehensive survey examines how generative AI technologies (GANs, VAEs, Diffusion Models, LLMs) are being applied across the autonomous driving stack, mapping current applications while analyzing challenges in safety, evaluation, and deployment through a collaborative effort spanning 20+ institutions including Texas A&M, Stanford, and NVIDIA.

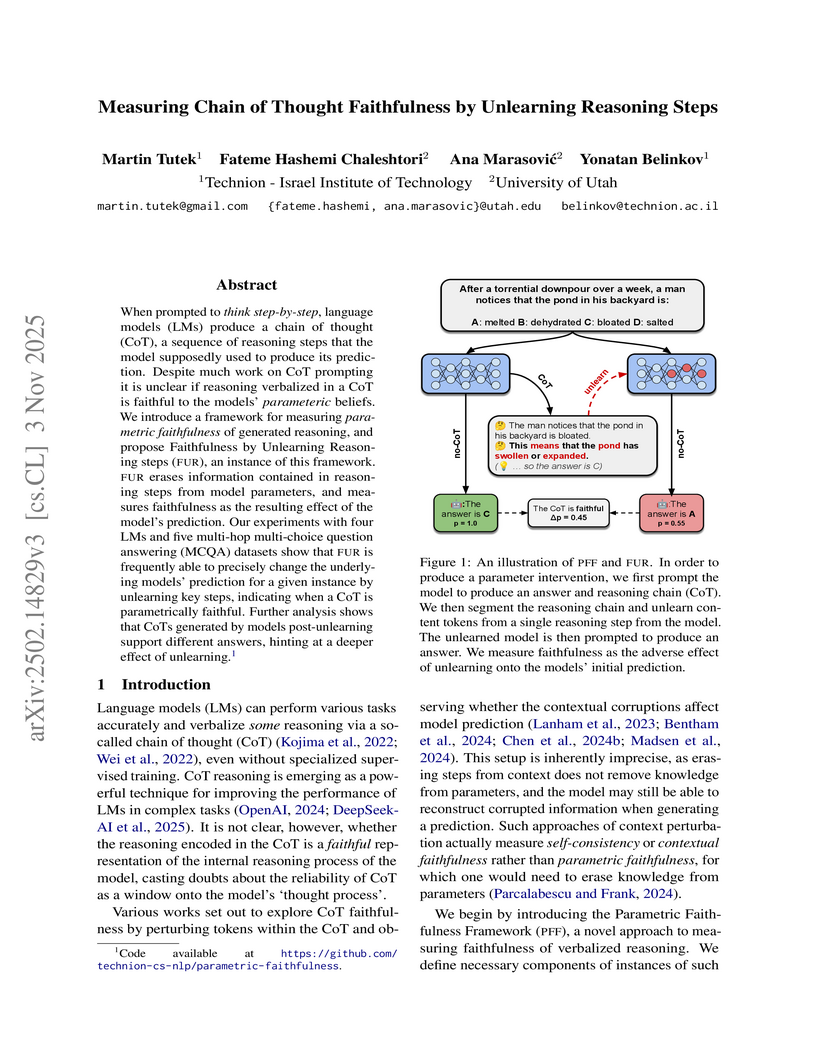

Researchers introduced the Parametric Faithfulness Framework (PFF) and Faithfulness by Unlearning Reasoning steps (FUR) to assess whether a large language model's Chain of Thought truly reflects its internal computations. The study found that unlearning specific reasoning steps predictably changes model predictions and subsequent verbalized reasoning, but it also uncovered a weak correlation between parametrically faithful steps and human judgments of plausibility.

Reinforcement Mid-Training (RMT) formalizes a critical third stage in large language model development, applying reinforcement learning on unlabeled pre-training data to systematically enhance complex reasoning capabilities. The method achieves up to 64.91% higher language modeling accuracy than prior RL-based mid-training approaches while reducing reasoning response length by up to 79%.

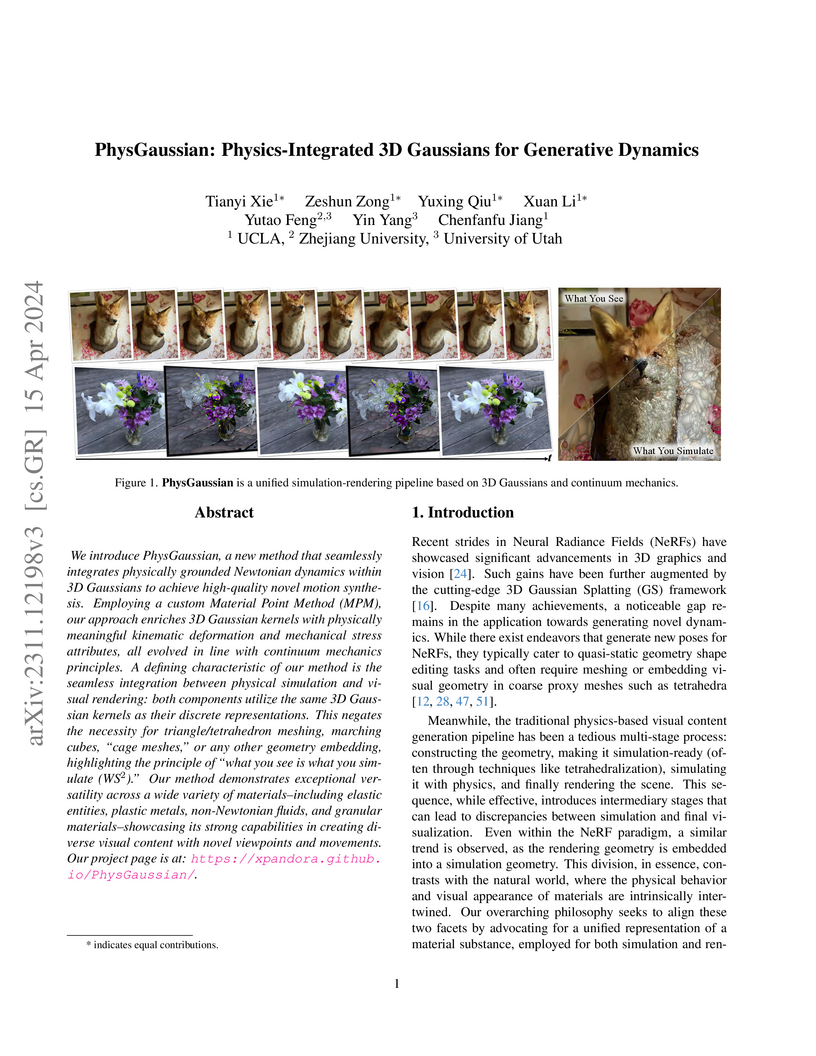

PhysGaussian introduces a unified framework where 3D Gaussian kernels serve as both rendering primitives and discrete elements for physical simulation, enabling the generation of photo-realistic and physically plausible dynamics across diverse material types. This approach eliminates intermediate geometric representations, achieving a "what you see is what you simulate" paradigm for dynamic 3D content.

15 Oct 2024

A system for robust, fast, and safe dexterous robotic grasping is presented, leveraging the integration of reinforcement learning, geometric fabrics, and teacher-student distillation to control an arm-hand system directly from depth images for zero-shot sim-to-real transfer. The approach achieves high success rates on diverse novel objects in real-world bin-picking tasks while ensuring hardware safety.

Researchers systematically compared prompting and reinforcement learning for query augmentation, demonstrating that a simple prompting approach is surprisingly competitive across various retrieval tasks. They introduced On-policy Pseudo-document Query Expansion (OPQE), a hybrid method that combines pseudo-document generation with reinforcement learning, achieving new state-of-the-art results for dense retrieval by optimizing pseudo-document content.

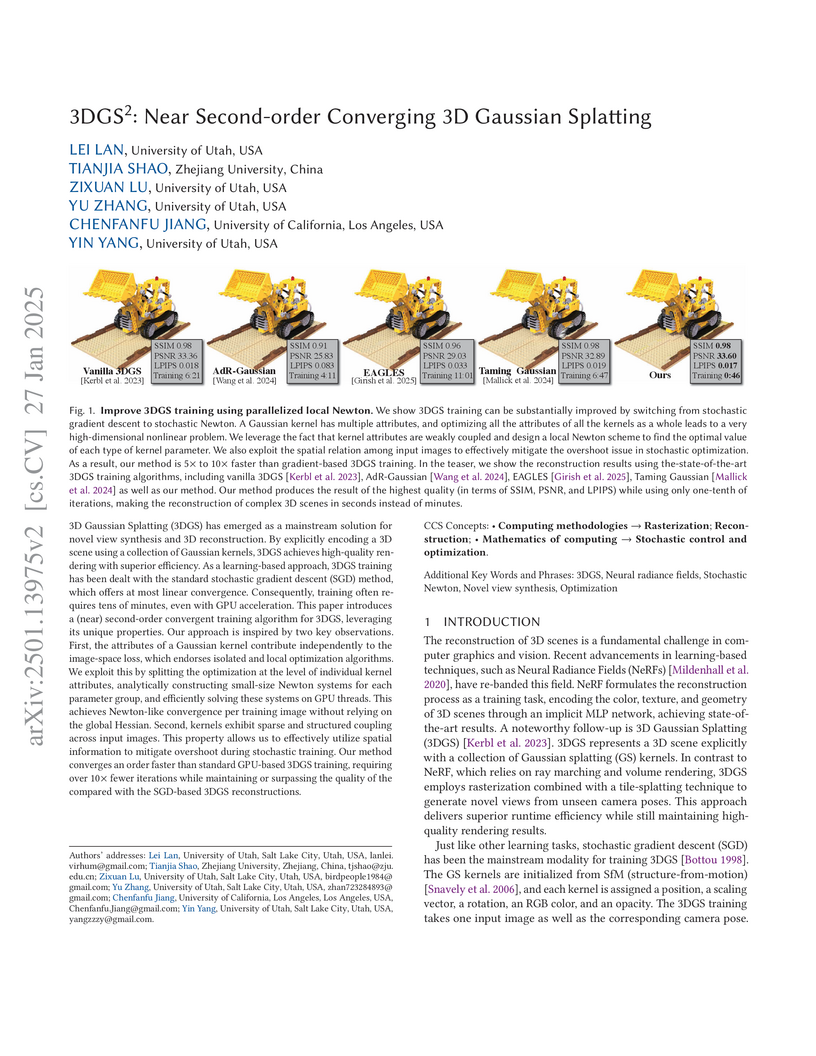

An optimized training algorithm for 3D Gaussian Splatting achieves 5x to 10x faster training, reducing times from minutes to seconds, without compromising reconstruction quality. This is accomplished through a near second-order stochastic local Newton's method that exploits weak coupling among Gaussian attributes and uses K-Nearest Neighbor camera poses to prevent optimization overshoot.
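The weak-coupling argument is what makes a local Newton step cheap. If cross-terms between attribute groups are ignored, the Hessian becomes block-diagonal, so each small block can be solved independently instead of inverting one large matrix. The NumPy sketch below shows only that idea, on a toy quadratic. The block sizes, the `damping` constant, and the quadratic objective are hypothetical. The sketch does not reproduce the paper's stochastic sampling or its KNN-based camera-pose selection.

```python
import numpy as np

def block_newton_step(x, grad_fn, hess_blocks_fn, blocks, damping=1e-6):
    """One Newton update that treats each parameter block independently.

    x:              flat parameter vector
    grad_fn:        x -> full gradient
    hess_blocks_fn: x -> list of per-block Hessians (cross-block terms dropped)
    blocks:         list of index arrays, one per block
    """
    g = grad_fn(x)
    x_new = x.copy()
    for idx, H in zip(blocks, hess_blocks_fn(x)):
        H_reg = H + damping * np.eye(len(idx))  # keep each small solve well-posed
        x_new[idx] -= np.linalg.solve(H_reg, g[idx])
    return x_new

# Toy objective f(x) = 0.5 x^T A x - b^T x with a block-diagonal A,
# standing in for two hypothetical attribute groups of sizes 3 and 2.
A1 = np.array([[4.0, 1.0, 0.0], [1.0, 3.0, 0.5], [0.0, 0.5, 2.0]])
A2 = np.array([[5.0, 2.0], [2.0, 3.0]])
A = np.zeros((5, 5))
A[:3, :3] = A1
A[3:, 3:] = A2
b = np.array([1.0, -2.0, 0.5, 3.0, 1.0])
blocks = [np.arange(0, 3), np.arange(3, 5)]

x = block_newton_step(np.zeros(5), lambda v: A @ v - b, lambda v: [A1, A2], blocks)
print(np.allclose(x, np.linalg.solve(A, b), atol=1e-5))
```

Because this toy problem is quadratic and truly block-diagonal, a single step lands on the minimizer. On a real, weakly coupled objective, the dropped cross-terms mean the step is only approximate, which is where safeguards against overshoot become important.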

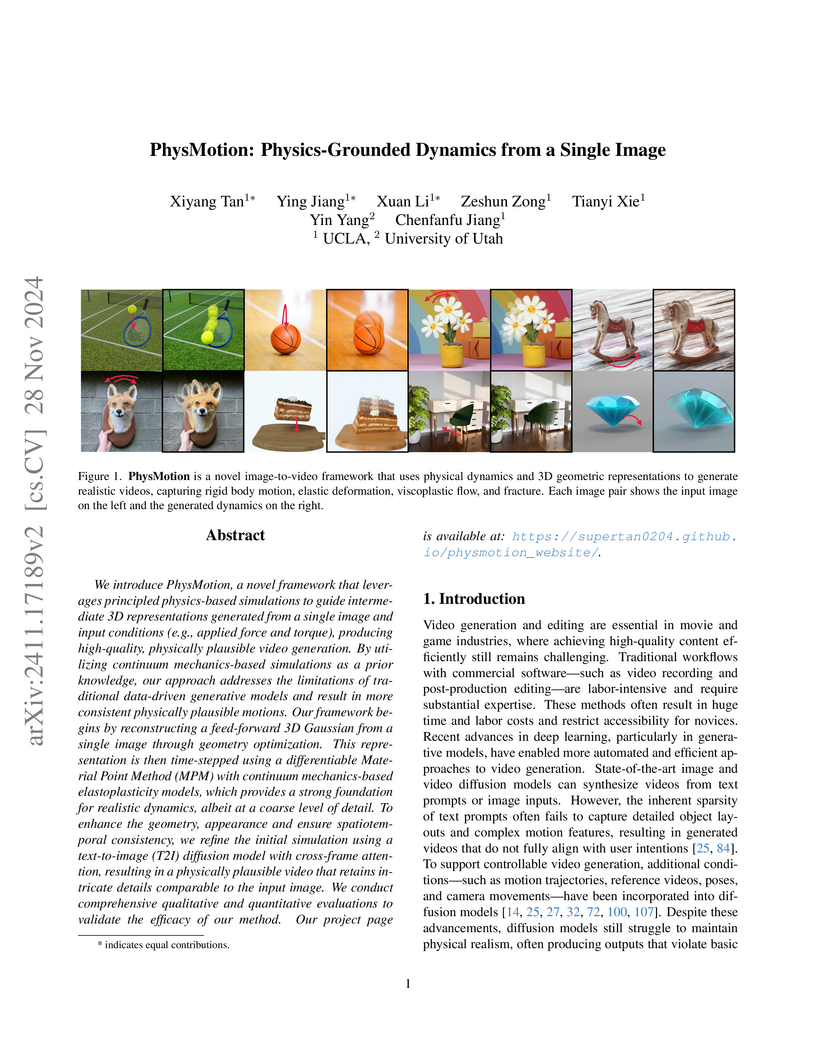

PhysMotion introduces a framework that generates physically plausible 3D dynamics from a single input image by integrating 3D Gaussian Splatting, a differentiable Material Point Method simulator, and diffusion models. It produces realistic object motions across various material types and quantitatively outperforms baselines on physical-commonsense and semantic-adherence scores.

University of Washington; Harvard University; University of Utah; Stanford University; McGill University; Harvard Medical School; Massachusetts General Hospital; University of Tübingen; The Ohio State University; Dana-Farber Cancer Institute; Agency for Science Technology and Research (A*STAR); Brigham and Women’s Hospital; Helmholtz Center Munich; Oregon Health & Science University; Broad Institute of Harvard and MIT; Harvard-MIT; University of Rochester Medical Center; ARUP Institute for Clinical and Experimental Pathology; Beth-Israel Deaconess Medical Center

KRONOS introduces the first foundation model specifically designed for spatial proteomics, leveraging a massive dataset of 47 million image patches to learn generalizable representations. This model enables superior cell phenotyping, facilitates robust segmentation-free analysis, and improves patient stratification and image retrieval across diverse experimental conditions and tissue types.

26 Aug 2025

The period from 2019 to the present has represented one of the biggest paradigm shifts in information retrieval (IR) and natural language processing (NLP), culminating in the emergence of powerful large language models (LLMs) from 2022 onward. Methods leveraging pretrained encoder-only models (e.g., BERT) and LLMs have outperformed many previous approaches, particularly excelling in zero-shot scenarios and complex reasoning tasks. This work surveys the evolution of model architectures in IR, focusing on two key aspects: backbone models for feature extraction and end-to-end system architectures for relevance estimation. The review intentionally separates architectural considerations from training methodologies to provide a focused analysis of structural innovations in IR systems. We trace the development from traditional term-based methods to modern neural approaches, particularly highlighting the impact of transformer-based models and subsequent LLMs. We conclude with a forward-looking discussion of emerging challenges and future directions, including architectural optimizations for performance and scalability, handling of multimodal and multilingual data, and adaptation to novel application domains, such as autonomous search agents, that go beyond traditional search paradigms.
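The sketch below makes the contrast between traditional term-based methods and neural approaches concrete. It scores documents two ways:

- **BM25**, a classic term-based ranking function, here in the non-negative (Lucene-style) IDF variant.
- **A dense-retrieval stand-in** that ranks documents by the dot product of embedding vectors.

The parameters `k1=1.2` and `b=0.75` are common defaults. The hand-made two-dimensional vectors are hypothetical placeholders for the output of an encoder such as BERT. Neither scorer is an architecture from the survey itself.

```python
import math
from collections import Counter

def bm25_scores(query: str, docs: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    """Okapi BM25 with the non-negative (Lucene-style) IDF."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    df = Counter(term for t in tokenized for term in set(t))  # document frequency
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        s = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue  # exact term match is required: no semantic matching
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            norm = tf[term] + k1 * (1.0 - b + b * len(toks) / avgdl)
            s += idf * tf[term] * (k1 + 1.0) / norm
        scores.append(s)
    return scores

def dense_scores(query_vec: list[float], doc_vecs: list[list[float]]) -> list[float]:
    """Dense-retrieval stand-in: relevance = dot product of embeddings."""
    return [sum(q * d for q, d in zip(query_vec, v)) for v in doc_vecs]

docs = ["neural ranking with transformers",
        "term weighting for ranking",
        "cooking pasta at home"]
print(bm25_scores("neural ranking", docs))
print(dense_scores([0.9, 0.1], [[0.8, 0.2], [0.6, 0.3], [0.0, 1.0]]))
```

BM25 gives zero credit to documents that share no exact query terms. A learned encoder can place related texts close together in embedding space even without lexical overlap.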

This paper unifies geometric approaches to Effective Field Theories (EFTs) by demonstrating how on-shell covariant building blocks for scattering amplitudes can be constructed under general field redefinitions. It introduces an unambiguous metric for the functional manifold by using a "Warsaw-like basis," allowing a consistent reduction to existing field space geometry results.

01 Oct 2025

We introduce the concept of *orbital altermagnetism*, a symmetry-protected magnetic order of pure orbital degrees of freedom. It is characterized by ordered anti-parallel orbital magnetic moments in real space but momentum-dependent orbital band splittings, analogous to spin altermagnetism. Using a minimal tight-binding model with complex hoppings on a square-kagome lattice, we show that such order inherently arises from staggered loop currents, producing a d-wave-like orbital-momentum locking. First-principles calculations show that orbital altermagnetism emerges independently of spin ordering in the in-plane ferromagnets CuBr2 and VS2, so that it can be unambiguously identified experimentally. On the other hand, it may also coexist with spin altermagnetism, as in monolayer MoO and CrO. Orbital altermagnetism offers an alternative platform for symmetry-driven magnetotransport and orbital-based spintronics, as exemplified by a large nonlinear current-induced orbital magnetization.

Researchers characterized how Physics-Informed Neural Networks (PINNs) often fail to learn solutions for moderately complex partial differential equations due to optimization difficulties. They introduced curriculum regularization and a sequence-to-sequence learning approach, which reduced prediction errors by up to two orders of magnitude in challenging cases.

Researchers at CERN, EPFL, University of Oregon, UCSD, and University of Utah developed a functional geometry framework for scalar Effective Field Theories, which accounts for derivative-dependent field redefinitions. This framework introduces geometrized vertices that ensure scattering amplitudes are manifestly on-shell covariant and generalizes the geometry-kinematics duality to a broader range of theories.
