University of Vienna

In biochemical networks, complex dynamical features such as superlinear growth and oscillations are classically considered a consequence of autocatalysis. For the large class of parameter-rich kinetic models, which includes Generalized Mass Action kinetics and Michaelis-Menten kinetics, we show that certain submatrices of the stoichiometric matrix, so-called unstable cores, are sufficient for a reaction network to admit instability and potentially give rise to such complex dynamical behavior. The determinant of the submatrix distinguishes unstable-positive feedbacks, with a single real-positive eigenvalue, and unstable-negative feedbacks without real-positive eigenvalues. Autocatalytic cores turn out to be exactly the unstable-positive feedbacks that are Metzler matrices. Thus there are sources of dynamical instability in chemical networks that are unrelated to autocatalysis. We use such intuition to design non-autocatalytic biochemical networks with superlinear growth and oscillations.

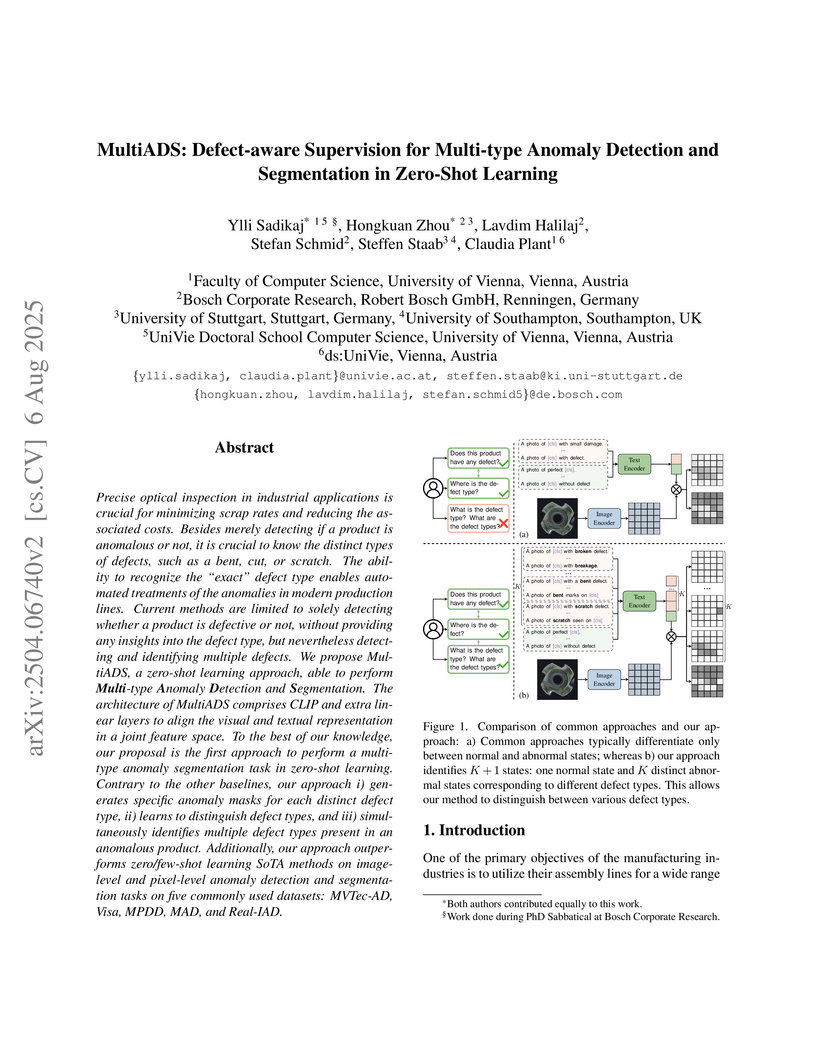

MultiADS introduces a zero-shot learning framework for multi-type anomaly detection and segmentation, enabling the classification of specific defect types within industrial products. The approach leverages defect-aware supervision with Vision-Language Models and outperforms state-of-the-art binary anomaly detection methods while providing fine-grained defect identification.

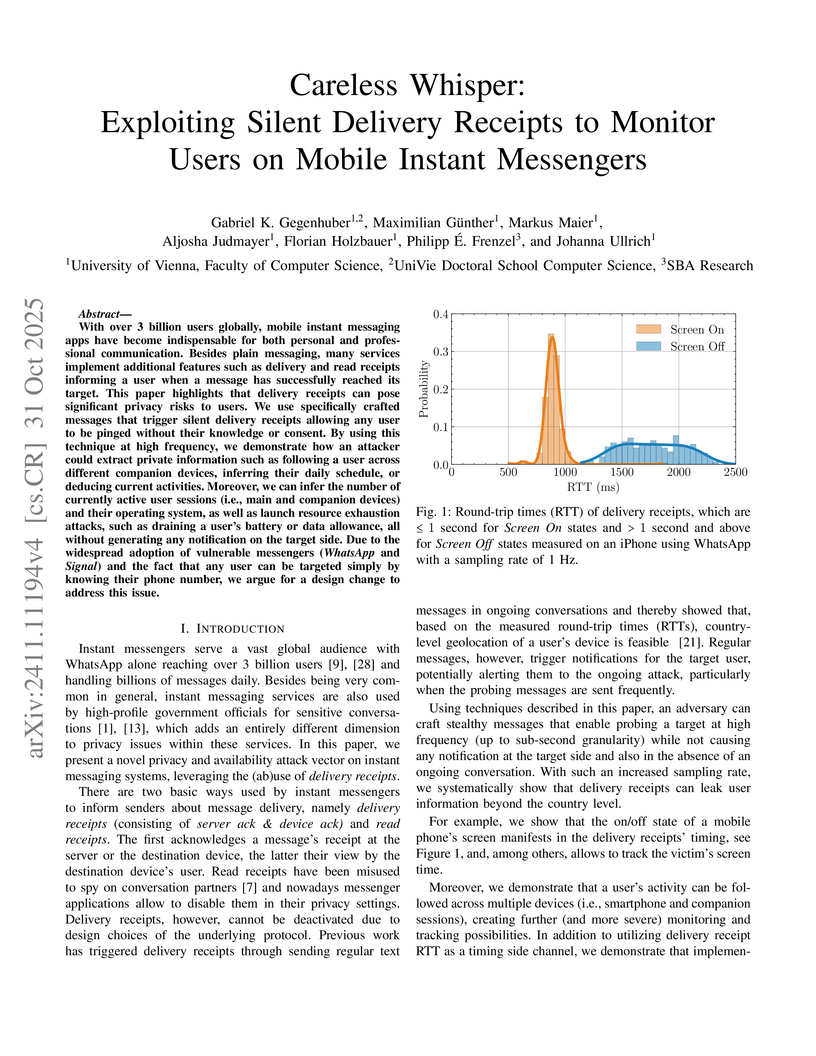

Researchers identified and exploited "silent delivery receipts" in WhatsApp and Signal, enabling covert, high-frequency user monitoring and resource exhaustion attacks. The work demonstrates how an attacker can infer detailed user activity across multiple devices and drain battery or data without detection, impacting billions of users.

27 Feb 2024

CNRS

CNRS University of Southern California

University of Southern California National University of Singapore

National University of Singapore Georgia Institute of Technology

Georgia Institute of Technology Beihang University

Beihang University Osaka University

Osaka University Zhejiang University

Zhejiang University Cornell University

Cornell University Northwestern University

Northwestern University University of Texas at Austin

University of Texas at Austin Nanyang Technological University

Nanyang Technological University Purdue UniversityUniversity of Illinois at ChicagoUniversity of ViennaUniversity of Texas at DallasVirginia Commonwealth UniversityUniversity of California at Los Angeles

Purdue UniversityUniversity of Illinois at ChicagoUniversity of ViennaUniversity of Texas at DallasVirginia Commonwealth UniversityUniversity of California at Los Angeles University of VirginiaUniversity of MessinaPontifical Catholic University of Rio de JaneiroUniversity of South CarolinaKanazawa UniversityIndian Institute of Technology RoorkeeUniversity of GothenburgFederal University of Rio de JaneiroThalesPolitecnico di BariUniversity of California at Santa BarbaraTechnical University DelftUniversity of California at San DiegoToshiba CorporationA* STARUniversity of Duisberg-EssenInteruniversity Microelectronics Center (IMEC)Laboratoire d'Informatique, de Robotique et de Microélectronique de Montpellier

University of VirginiaUniversity of MessinaPontifical Catholic University of Rio de JaneiroUniversity of South CarolinaKanazawa UniversityIndian Institute of Technology RoorkeeUniversity of GothenburgFederal University of Rio de JaneiroThalesPolitecnico di BariUniversity of California at Santa BarbaraTechnical University DelftUniversity of California at San DiegoToshiba CorporationA* STARUniversity of Duisberg-EssenInteruniversity Microelectronics Center (IMEC)Laboratoire d'Informatique, de Robotique et de Microélectronique de MontpellierIn the "Beyond Moore's Law" era, with increasing edge intelligence, domain-specific computing embracing unconventional approaches will become increasingly prevalent. At the same time, adopting a variety of nanotechnologies will offer benefits in energy cost, computational speed, reduced footprint, cyber resilience, and processing power. The time is ripe for a roadmap for unconventional computing with nanotechnologies to guide future research, and this collection aims to fill that need. The authors provide a comprehensive roadmap for neuromorphic computing using electron spins, memristive devices, two-dimensional nanomaterials, nanomagnets, and various dynamical systems. They also address other paradigms such as Ising machines, Bayesian inference engines, probabilistic computing with p-bits, processing in memory, quantum memories and algorithms, computing with skyrmions and spin waves, and brain-inspired computing for incremental learning and problem-solving in severely resource-constrained environments. These approaches have advantages over traditional Boolean computing based on von Neumann architecture. As the computational requirements for artificial intelligence grow 50 times faster than Moore's Law for electronics, more unconventional approaches to computing and signal processing will appear on the horizon, and this roadmap will help identify future needs and challenges. In a very fertile field, experts in the field aim to present some of the dominant and most promising technologies for unconventional computing that will be around for some time to come. Within a holistic approach, the goal is to provide pathways for solidifying the field and guiding future impactful discoveries.

Continuous-time neural processes are performant sequential decision-makers

that are built by differential equations (DE). However, their expressive power

when they are deployed on computers is bottlenecked by numerical DE solvers.

This limitation has significantly slowed down the scaling and understanding of

numerous natural physical phenomena such as the dynamics of nervous systems.

Ideally, we would circumvent this bottleneck by solving the given dynamical

system in closed form. This is known to be intractable in general. Here, we

show it is possible to closely approximate the interaction between neurons and

synapses -- the building blocks of natural and artificial neural networks --

constructed by liquid time-constant networks (LTCs) efficiently in closed-form.

To this end, we compute a tightly-bounded approximation of the solution of an

integral appearing in LTCs' dynamics, that has had no known closed-form

solution so far. This closed-form solution substantially impacts the design of

continuous-time and continuous-depth neural models; for instance, since time

appears explicitly in closed-form, the formulation relaxes the need for complex

numerical solvers. Consequently, we obtain models that are between one and five

orders of magnitude faster in training and inference compared to differential

equation-based counterparts. More importantly, in contrast to ODE-based

continuous networks, closed-form networks can scale remarkably well compared to

other deep learning instances. Lastly, as these models are derived from liquid

networks, they show remarkable performance in time series modeling, compared to

advanced recurrent models.

06 Oct 2025

The mildly non-linear regime of cosmic structure formation holds much of the information that upcoming large-scale structure surveys aim to exploit, making fast and accurate predictions on these scales essential. We present the N-body module of DISCO-DJ (DIfferentiable Simulations for COsmology - Done with Jax), designed to deliver high-fidelity, GPU-accelerated, and differentiable particle-mesh simulations tailored for cosmological inference. Theory-informed time integrators such as the recently introduced BullFrog method allow for accurate predictions already with few time steps (e.g. 6 steps for per-cent-level accuracy in terms of the present-day power spectrum at k≈0.2h/Mpc using N=5123 particles, which takes just a few seconds). To control discreteness effects and achieve high accuracy, the code incorporates a suite of advanced techniques, for example a custom non-uniform FFT implementation for force evaluation. Both forward- and reverse-mode differentiation are supported, with memory requirements independent of the number of time steps; in the reverse case, this is achieved through an adjoint formulation. We extensively study the effect of various numerical parameters on the accuracy. As an application of DISCO-DJ, we perform field-level inference by recovering σ8 and the initial conditions from a noisy Gadget matter density field. Coupled with our recently introduced Einstein--Boltzmann solver, the DISCO-DJ ecosystem provides a self-consistent, fully differentiable pipeline for modelling the large-scale structure of the universe. The code is available at this https URL.

Jakob Steininger and Sergey Yurkevich constructed a convex polyhedron, termed the Noperthedron, and rigorously demonstrated it lacks Rupert's property, thereby disproving the conjecture that all convex polyhedra possess this characteristic. They also identified a 'Ruperthedron' which is Rupert but not locally Rupert, adding a new distinction to the understanding of this geometric phenomenon.

University of PennsylvaniaUniversiteit GentUniversity of Western Australia

University of PennsylvaniaUniversiteit GentUniversity of Western Australia Leiden UniversityUniversity of ViennaLiverpool John Moores UniversityUniversity of PortsmouthUniversity of HullUniversity of NottinghamEcole Polytechnique Fédérale de Lausanne (EPFL)Netherlands Organisation for Applied Scientific Research (TNO)University of DurhamUniversit

degli Studi di Milano-Bicocca

Leiden UniversityUniversity of ViennaLiverpool John Moores UniversityUniversity of PortsmouthUniversity of HullUniversity of NottinghamEcole Polytechnique Fédérale de Lausanne (EPFL)Netherlands Organisation for Applied Scientific Research (TNO)University of DurhamUniversit

degli Studi di Milano-BicoccaWe present the COLIBRE galaxy formation model and the COLIBRE suite of cosmological hydrodynamical simulations. COLIBRE includes new models for radiative cooling, dust grains, star formation, stellar mass loss, turbulent diffusion, pre-supernova stellar feedback, supernova feedback, supermassive black holes and active galactic nucleus (AGN) feedback. The multiphase interstellar medium is explicitly modelled without a pressure floor. Hydrogen and helium are tracked in non-equilibrium, with their contributions to the free electron density included in metal-line cooling calculations. The chemical network is coupled to a dust model that tracks three grain species and two grain sizes. In addition to the fiducial thermally-driven AGN feedback, a subset of simulations uses black hole spin-dependent hybrid jet/thermal AGN feedback. To suppress spurious transfer of energy from dark matter to stars, dark matter is supersampled by a factor 4, yielding similar dark matter and baryonic particle masses. The subgrid feedback model is calibrated to match the observed z≈0 galaxy stellar mass function, galaxy sizes, and black hole masses in massive galaxies. The COLIBRE suite includes three resolutions, with particle masses of ∼105, 106, and 107M⊙ in cubic volumes of up to 50, 200, and 400 cMpc on a side, respectively. The two largest runs use 136 billion (5×30083) particles. We describe the model, assess its strengths and limitations, and present both visual impressions and quantitative results. Comparisons with various low-redshift galaxy observations generally show very good numerical convergence and excellent agreement with the data.

We propose the State Space Neural Operator (SS-NO), a compact architecture for learning solution operators of time-dependent partial differential equations (PDEs). Our formulation extends structured state space models (SSMs) to joint spatiotemporal modeling, introducing two key mechanisms: adaptive damping, which stabilizes learning by localizing receptive fields, and learnable frequency modulation, which enables data-driven spectral selection. These components provide a unified framework for capturing long-range dependencies with parameter efficiency. Theoretically, we establish connections between SSMs and neural operators, proving a universality theorem for convolutional architectures with full field-of-view. Empirically, SS-NO achieves state-of-the-art performance across diverse PDE benchmarks-including 1D Burgers' and Kuramoto-Sivashinsky equations, and 2D Navier-Stokes and compressible Euler flows-while using significantly fewer parameters than competing approaches. A factorized variant of SS-NO further demonstrates scalable performance on challenging 2D problems. Our results highlight the effectiveness of damping and frequency learning in operator modeling, while showing that lightweight factorization provides a complementary path toward efficient large-scale PDE learning.

A nonparametric estimator for path-valued data integrates the Nadaraya-Watson framework with semi-metrics derived from the signature transform. This method achieves Euclidean-type nonparametric convergence rates, demonstrating faster learning from data, and shows superior accuracy and computational efficiency in SDE learning and time series classification tasks.

We study the problem of computing the minimum cut in a weighted distributed message-passing networks (the CONGEST model). Let λ be the minimum cut, n be the number of nodes in the network, and D be the network diameter. Our algorithm can compute λ exactly in O((nlog∗n+D)λ4log2n) time. To the best of our knowledge, this is the first paper that explicitly studies computing the exact minimum cut in the distributed setting. Previously, non-trivial sublinear time algorithms for this problem are known only for unweighted graphs when λ≤3 due to Pritchard and Thurimella's O(D)-time and O(D+n1/2log∗n)-time algorithms for computing 2-edge-connected and 3-edge-connected components.

By using the edge sampling technique of Karger's, we can convert this algorithm into a (1+ϵ)-approximation O((nlog∗n+D)ϵ−5log3n)-time algorithm for any ϵ>0. This improves over the previous (2+ϵ)-approximation O((nlog∗n+D)ϵ−5log2nloglogn)-time algorithm and O(ϵ−1)-approximation O(D+n21+ϵpolylogn)-time algorithm of Ghaffari and Kuhn. Due to the lower bound of Ω(D+n1/2/logn) by Das Sarma et al. which holds for any approximation algorithm, this running time is tight up to a polylogn factor.

To get the stated running time, we developed an approximation algorithm which combines the ideas of Thorup's algorithm and Matula's contraction algorithm. It saves an ϵ−9log7n factor as compared to applying Thorup's tree packing theorem directly. Then, we combine Kutten and Peleg's tree partitioning algorithm and Karger's dynamic programming to achieve an efficient distributed algorithm that finds the minimum cut when we are given a spanning tree that crosses the minimum cut exactly once.

Recently, a series of papers proposed deep learning-based approaches to

sample from target distributions using controlled diffusion processes, being

trained only on the unnormalized target densities without access to samples.

Building on previous work, we identify these approaches as special cases of a

generalized Schr\"odinger bridge problem, seeking a stochastic evolution

between a given prior distribution and the specified target. We further

generalize this framework by introducing a variational formulation based on

divergences between path space measures of time-reversed diffusion processes.

This abstract perspective leads to practical losses that can be optimized by

gradient-based algorithms and includes previous objectives as special cases. At

the same time, it allows us to consider divergences other than the reverse

Kullback-Leibler divergence that is known to suffer from mode collapse. In

particular, we propose the so-called log-variance loss, which exhibits

favorable numerical properties and leads to significantly improved performance

across all considered approaches.

In our derivation of the second law of thermodynamics from the relation of adiabatic accessibility of equilibrium states we stressed the importance of being able to scale a system's size without changing its intrinsic properties. This leaves open the question of defining the entropy of macroscopic, but unscalable systems, such as gravitating bodies or systems where surface effects are important. We show here how the problem can be overcome, in principle, with the aid of an `entropy meter'. An entropy meter can also be used to determine entropy functions for non-equilibrium states and mesoscopic systems.

15 Sep 2025

A magnetic quiver framework is proposed for studying maximal branches of 3d orthosymplectic Chern-Simons matter theories with N≥3 supersymmetry, arising from Type IIB brane setups with O3 planes. These branches are extracted via brane moves, yielding orthosymplectic N=4 magnetic quivers whose Coulomb branches match the moduli spaces of interest. Global gauge group data, inaccessible from brane configurations alone, are determined through supersymmetric indices, Hilbert series, and fugacity maps. The analysis is exploratory in nature and highlights several subtle features. In particular, magnetic quivers are proposed as predictions for the maximal branches in a range of examples. Along the way, dualities and structural puzzles are uncovered, reminiscent of challenges in 3d mirror symmetry with orientifolds.

Akin to neuroplasticity in human brains, the plasticity of deep neural

networks enables their quick adaption to new data. This makes plasticity

particularly crucial for deep Reinforcement Learning (RL) agents: Once

plasticity is lost, an agent's performance will inevitably plateau because it

cannot improve its policy to account for changes in the data distribution,

which are a necessary consequence of its learning process. Thus, developing

well-performing and sample-efficient agents hinges on their ability to remain

plastic during training. Furthermore, the loss of plasticity can be connected

to many other issues plaguing deep RL, such as training instabilities, scaling

failures, overestimation bias, and insufficient exploration. With this survey,

we aim to provide an overview of the emerging research on plasticity loss for

academics and practitioners of deep reinforcement learning. First, we propose a

unified definition of plasticity loss based on recent works, relate it to

definitions from the literature, and discuss metrics for measuring plasticity

loss. Then, we categorize and discuss numerous possible causes of plasticity

loss before reviewing currently employed mitigation strategies. Our taxonomy is

the first systematic overview of the current state of the field. Lastly, we

discuss prevalent issues within the literature, such as a necessity for broader

evaluation, and provide recommendations for future research, like gaining a

better understanding of an agent's neural activity and behavior.

16 Sep 2025

Strassen's theorem asserts that for given marginal probabilities μ,ν there exists a martingale starting in μ and terminating in ν if and only if μ,ν are in convex order. From a financial perspective, it guarantees the existence of market-consistent martingale pricing measures for arbitrage-free prices of European call options and thus plays a fundamental role in robust finance. Arbitrage-free prices of American options demand a stronger version of martingales which are 'biased' in a specific sense. In this paper, we derive an extension of Strassen's theorem that links them to an appropriate strengthening of the convex order. Moreover, we provide a characterization of this order through integrals with respect to compensated Poisson processes.

28 Apr 2025

ETH Zurich

ETH Zurich Harvard University

Harvard University National University of Singapore

National University of Singapore University of OxfordIndiana UniversityScuola Normale Superiore

University of OxfordIndiana UniversityScuola Normale Superiore University of British ColumbiaAustrian Academy of SciencesUniversity of ExeterUniversity of RochesterUniversity of LuxembourgUniversity of ViennaUniversity of PotsdamTU WienUniversity College DublinUniversidad de La LagunaUniversity of YorkUniversity of Maryland Baltimore CountyForschungszentrum Jülich GmbHQueen's University BelfastUniversità di FirenzeThe University of CampinasOIST Graduate UniversityIstituto Nanoscienze

CNRUniversit

de LorraineUniversit

degli Studi di Palermo

University of British ColumbiaAustrian Academy of SciencesUniversity of ExeterUniversity of RochesterUniversity of LuxembourgUniversity of ViennaUniversity of PotsdamTU WienUniversity College DublinUniversidad de La LagunaUniversity of YorkUniversity of Maryland Baltimore CountyForschungszentrum Jülich GmbHQueen's University BelfastUniversità di FirenzeThe University of CampinasOIST Graduate UniversityIstituto Nanoscienze

CNRUniversit

de LorraineUniversit

degli Studi di PalermoThe last two decades has seen quantum thermodynamics become a well

established field of research in its own right. In that time, it has

demonstrated a remarkably broad applicability, ranging from providing

foundational advances in the understanding of how thermodynamic principles

apply at the nano-scale and in the presence of quantum coherence, to providing

a guiding framework for the development of efficient quantum devices. Exquisite

levels of control have allowed state-of-the-art experimental platforms to

explore energetics and thermodynamics at the smallest scales which has in turn

helped to drive theoretical advances. This Roadmap provides an overview of the

recent developments across many of the field's sub-disciplines, assessing the

key challenges and future prospects, providing a guide for its near term

progress.

This work establishes a rigorous theoretical connection between diffusion-based generative models and stochastic optimal control, demonstrating that the time-reversed log-density of diffusion processes satisfies a Hamilton–Jacobi–Bellman equation. Based on this framework, the authors introduce the time-reversed diffusion sampler (DIS), a novel and robust algorithm for sampling from high-dimensional, unnormalized densities that outperforms existing diffusion-based samplers like PIS.

17 Sep 2025

We study Carrollian contractions of WN-algebras from a free-field perspective. Using a contraction of the Miura transformation, we obtain explicit free-field realizations of the resulting Carrollian WN-algebras. At the classical level, they are isomorphic to the Galilean WN-algebras. In the quantum case, we distinguish between two Carrollian constructions: a flipped Carrollian contraction, where the time direction is reversed in one sector, and a symmetric contraction. The flipped construction yields a quantum algebra isomorphic to the Galilean one, whereas the symmetric construction produces a distinct quantum Carrollian WN-algebra whose basic structure constants are identical to those of the classical Carrollian WN-algebra. These algebras provide a natural framework for studying extended symmetries in Carrollian conformal field theories, motivated by recent developments in flat space holography. Our construction provides tools for developing the representation theory of Carrollian (and Galilean) WN-algebras using free-field techniques.

We present an extremely simple polynomial-space exponential-time (1−ε)-approximation algorithm for MAX-k-SAT that is (slightly) faster than the previous known polynomial-space (1−ε)-approximation algorithms by Hirsch (Discrete Applied Mathematics, 2003) and Escoffier, Paschos and Tourniaire (Theoretical Computer Science, 2014). Our algorithm repeatedly samples an assignment uniformly at random until finding an assignment that satisfies a large enough fraction of clauses. Surprisingly, we can show the efficiency of this simpler approach by proving that in any instance of MAX-k-SAT (or more generally any instance of MAXCSP), an exponential number of assignments satisfy a fraction of clauses close to the optimal value.

There are no more papers matching your filters at the moment.