
This empirical study from Tsinghua University and collaborators demonstrates that off-path attackers can remotely identify NAT devices via a PMTUD side channel and launch Denial-of-Service (DoS) attacks by manipulating TCP connections. Over 92% of 180 tested real-world NAT networks, including 4G/5G and public Wi-Fi, were found vulnerable, leading to the assignment of 5 CVE/CNVD identifiers.

Researchers from ICT, Chinese Academy of Sciences, developed FedCache, a knowledge cache-driven federated learning architecture that facilitates personalized edge intelligence. It achieves performance comparable to state-of-the-art methods while reducing communication overhead by more than two orders of magnitude, and it is notably the first sample-grained logits interaction method that requires neither feature transmission nor public datasets.

Peking University and Alibaba Group researchers introduce Omni-MATH, a comprehensive, text-only, Olympiad-level mathematical reasoning benchmark. It features over 4,400 problems meticulously categorized by 33+ sub-domains and 10+ difficulty levels, revealing that state-of-the-art LLMs achieve only up to 60.54% accuracy, indicating significant remaining challenges in complex mathematical reasoning.

A federated learning framework enables privacy-preserving collaborative training of RAG retrieval models across multiple organizations. It combines homomorphic encryption and knowledge distillation to achieve a MAP score of 90.22 on financial domain tasks while keeping sensitive data localized within each client's environment.

The paper "Keeping Yourself is Important in Downstream Tuning Multimodal Large Language Model" provides a systematic review and unified benchmark for tuning MLLMs, classifying methods into Selective, Additive, and Reparameterization paradigms. It empirically analyzes the trade-offs between task-expert specialization and open-world stabilization, offering practical guidelines for MLLM deployment.

A framework named ProverGen, developed by researchers including those at Beihang University and Shanghai AI Laboratory, combines large language models with symbolic provers to automatically generate ProverQA, a challenging and logically sound benchmark for first-order logic reasoning. This generated data effectively enhances LLM reasoning abilities, leading to consistent performance gains across both in-distribution and out-of-distribution logical tasks.

SafeBench introduces a comprehensive framework for evaluating the safety of Multimodal Large Language Models (MLLMs) through LLM-driven generation of high-quality multimodal harmful queries and an automated 'Jury Deliberation Protocol'. It revealed that commercial MLLMs generally exhibit higher safety than their open-source counterparts, identified specific high-risk categories such as Cybersecurity, and demonstrated that multimodal fine-tuning can degrade the safety of the underlying language models.

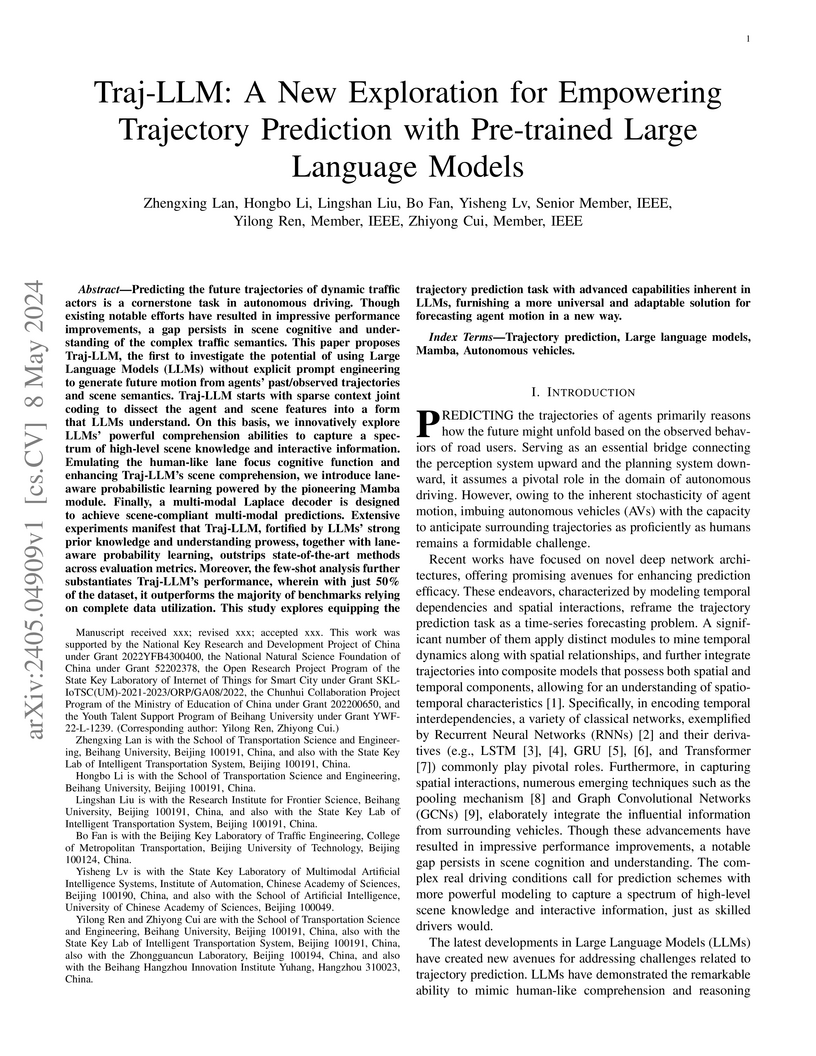

Traj-LLM introduces a framework that integrates pre-trained Large Language Models into trajectory prediction for autonomous driving, processing spatial-temporal features as tokens rather than using explicit prompt engineering. It achieved state-of-the-art performance on the nuScenes dataset and demonstrated robust few-shot learning capabilities while maintaining practical inference times.

A new benchmark, AGENTSAFE, systematically evaluates the safety of embodied vision-language model (VLM) agents against hazardous instructions, revealing vulnerabilities primarily in the planning stage. The research introduces SAFE-AUDIT, a thought-level safety module that improves the task success rate by 2.22% on normal instructions and achieves the lowest planning (3.52%) and task (0.48%) success rates on hazardous tasks.

A divergence-based calibration method, DC-PDD, was developed to enhance the detection of pretraining data in black-box large language models. By calibrating token probabilities, it consistently outperforms existing state-of-the-art approaches across English and Chinese benchmarks, including a new PatentMIA dataset.

SecureWebArena is introduced as the first holistic security evaluation benchmark for LVLM-based web agents, integrating diverse web environments, a broad attack taxonomy, and a multi-layered evaluation protocol. The benchmark reveals consistent vulnerabilities across state-of-the-art models, with pop-up attacks being particularly effective and achieving Payload Delivery Rates (PDR) from 76.67% to 100%.

Tsinghua University

Chinese Academy of Sciences

University of Science and Technology of China

Beihang University

University of Waterloo

Nanyang Technological University

Zhejiang University

Fudan University

National University of Singapore

Peking University

University of Amsterdam