AI Sweden

JQL introduces a systematic, efficient, and open-source framework for curating high-quality multilingual pre-training data for large language models, extending the "LLM-as-a-judge" paradigm across diverse languages. The framework distills powerful LLM annotation capabilities into lightweight annotators, leading to improved downstream LLM performance by up to 7.2% on benchmarks compared to heuristic filtering.

Layer normalization (LN) is an essential component of modern neural networks. While many alternative techniques have been proposed, none of them have succeeded in replacing LN so far. The latest suggestion in this line of research is a dynamic activation function called Dynamic Tanh (DyT). Although it is empirically well-motivated and appealing from a practical point of view, it lacks a theoretical foundation. In this work, we shed light on the mathematical relationship between LN and dynamic activation functions. In particular, we derive DyT from the LN variant RMSNorm, and show that a well-defined decoupling in derivative space as well as an approximation are needed to do so. By applying the same decoupling procedure directly in function space, we are able to omit the approximation and obtain the exact element-wise counterpart of RMSNorm, which we call Dynamic Inverse Square Root Unit (DyISRU). We demonstrate numerically that DyISRU reproduces the normalization effect on outliers more accurately than DyT does.

Recent developments in Large Language Models (LLMs) have shown promise in automating code generation, yet the generated programs lack rigorous correctness guarantees. Formal verification can address this shortcoming, but requires expertise and is time-consuming to apply. Currently, there is no dataset of verified C code paired with formal specifications that enables systematic benchmarking in this space. To fill this gap, we present a curated evaluation dataset of C code paired with formal specifications written in ANSI/ISO C Specification Language (ACSL). We develop a multi-stage filtering process to carefully extract 506 pairs of C code and formal specifications from The Stack 1 and The Stack 2. We first identify C files annotated with formal languages. Then, we ensure that the annotated C files formally verify, and employ LLMs to improve non-verifying files. Furthermore, we post-process the remaining files into pairs of C code and ACSL specifications, where each specification-implementation pair is formally verified using Frama-C. To ensure the quality of the pairs, a manual inspection is conducted to confirm the correctness of every pair. The resulting dataset of C-ACSL specification pairs (CASP) provides a foundation for benchmarking and further research on integrating automated code generation with verified correctness.

We address the challenge of federated learning on graph-structured data

distributed across multiple clients. Specifically, we focus on the prevalent

scenario of interconnected subgraphs, where interconnections between different

clients play a critical role. We present a novel framework for this scenario,

named FedStruct, that harnesses deep structural dependencies. To uphold

privacy, unlike existing methods, FedStruct eliminates the necessity of sharing

or generating sensitive node features or embeddings among clients. Instead, it

leverages explicit global graph structure information to capture inter-node

dependencies. We validate the effectiveness of FedStruct through experimental

results conducted on six datasets for semi-supervised node classification,

showcasing performance close to the centralized approach across various

scenarios, including different data partitioning methods, varying levels of

label availability, and number of clients.

12 Jul 2025

Open source projects have made incredible progress in producing transparent and widely usable machine learning models and systems, but open source alone will face challenges in fully democratizing access to AI. Unlike software, AI models require substantial resources for activation -- compute, post-training, deployment, and oversight -- which only a few actors can currently provide. This paper argues that open source AI must be complemented by public AI: infrastructure and institutions that ensure models are accessible, sustainable, and governed in the public interest. To achieve the full promise of AI models as prosocial public goods, we need to build public infrastructure to power and deliver open source software and models.

Membership inference attacks (MIAs) aim to determine whether specific data were used to train a model. While extensively studied on classification models, their impact on time series forecasting remains largely unexplored. We address this gap by introducing two new attacks: (i) an adaptation of multivariate LiRA, a state-of-the-art MIA originally developed for classification models, to the time-series forecasting setting, and (ii) a novel end-to-end learning approach called Deep Time Series (DTS) attack. We benchmark these methods against adapted versions of other leading attacks from the classification setting.

We evaluate all attacks in realistic settings on the TUH-EEG and ELD datasets, targeting two strong forecasting architectures, LSTM and the state-of-the-art N-HiTS, under both record- and user-level threat models. Our results show that forecasting models are vulnerable, with user-level attacks often achieving perfect detection. The proposed methods achieve the strongest performance in several settings, establishing new baselines for privacy risk assessment in time series forecasting. Furthermore, vulnerability increases with longer prediction horizons and smaller training populations, echoing trends observed in large language models.

This handbook is a hands-on guide on how to approach text annotation tasks. It provides a gentle introduction to the topic, an overview of theoretical concepts as well as practical advice. The topics covered are mostly technical, but business, ethical and regulatory issues are also touched upon. The focus lies on readability and conciseness rather than completeness and scientific rigor. Experience with annotation and knowledge of machine learning are useful but not required. The document may serve as a primer or reference book for a wide range of professions such as team leaders, project managers, IT architects, software developers and machine learning engineers.

Aquatic bodies face numerous environmental threats caused by several marine

anomalies. Marine debris can devastate habitats and endanger marine life

through entanglement, while harmful algal blooms can produce toxins that

negatively affect marine ecosystems. Additionally, ships may discharge oil or

engage in illegal and overfishing activities, causing further harm. These

marine anomalies can be identified by applying trained deep learning (DL)

models on multispectral satellite imagery. Furthermore, the detection of other

anomalies, such as clouds, could be beneficial in filtering out irrelevant

images. However, DL models often require a large volume of labeled data for

training, which can be both costly and time-consuming, particularly for marine

anomaly detection where expert annotation is needed. A potential solution is

the use of semi-supervised learning methods, which can also utilize unlabeled

data. In this project, we implement and study the performance of FixMatch for

Semantic Segmentation, a semi-supervised algorithm for semantic segmentation.

Firstly, we found that semi-supervised models perform best with a high

confidence threshold of 0.9 when there is a limited amount of labeled data.

Secondly, we compare the performance of semi-supervised models with

fully-supervised models under varying amounts of labeled data. Our findings

suggest that semi-supervised models outperform fully-supervised models with

limited labeled data, while fully-supervised models have a slightly better

performance with larger volumes of labeled data. We propose two hypotheses to

explain why fully-supervised models surpass semi-supervised ones when a high

volume of labeled data is used. All of our experiments were conducted using a

U-Net model architecture with a limited number of parameters to ensure

compatibility with space-rated hardware.

AI Sweden introduces SWEb, a 1.01 trillion token dataset for Scandinavian languages (Swedish, Danish, Norwegian, and Icelandic), which significantly addresses the data scarcity for these languages in large language model development. The dataset was created using a three-stage pipeline featuring an innovative transformer-based content extraction model, enabling the collection of substantially more data than previous methods while maintaining quality.

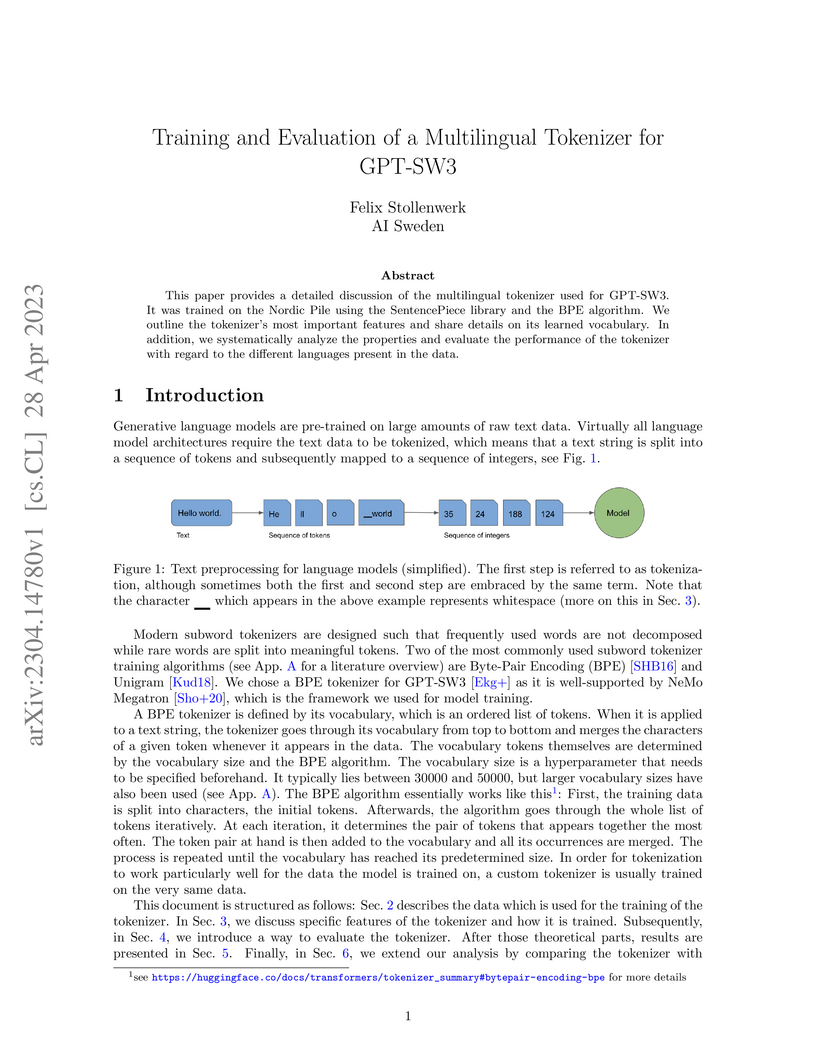

This paper provides a detailed discussion of the multilingual tokenizer used for GPT-SW3. It was trained on the Nordic Pile using the SentencePiece library and the BPE algorithm. We outline the tokenizer's most important features and share details on its learned vocabulary. In addition, we systematically analyze the properties and evaluate the performance of the tokenizer with regard to the different languages present in the data.

Despite their remarkable capabilities, LLMs learn word representations that exhibit the undesirable yet poorly understood feature of anisotropy. In this paper, we argue that the second moment in Adam is a cause of anisotropic embeddings, and suggest a modified optimizer called Coupled Adam to mitigate the problem. Our experiments demonstrate that Coupled Adam significantly improves the quality of embeddings, while also leading to better upstream and downstream performance on large enough datasets.

03 May 2023

Probabilistic programming languages (PPLs) allow users to encode arbitrary inference problems, and PPL implementations provide general-purpose automatic inference for these problems. However, constructing inference implementations that are efficient enough is challenging for many real-world problems. Often, this is due to PPLs not fully exploiting available parallelization and optimization opportunities. For example, handling probabilistic checkpoints in PPLs through continuation-passing style transformations or non-preemptive multitasking -- as is done in many popular PPLs -- often disallows compilation to low-level languages required for high-performance platforms such as GPUs. To solve the checkpoint problem, we introduce the concept of PPL control-flow graphs (PCFGs) -- a simple and efficient approach to checkpoints in low-level languages. We use this approach to implement RootPPL: a low-level PPL built on CUDA and C++ with OpenMP, providing highly efficient and massively parallel SMC inference. We also introduce a general method of compiling universal high-level PPLs to PCFGs and illustrate its application when compiling Miking CorePPL -- a high-level universal PPL -- to RootPPL. The approach is the first to compile a universal PPL to GPUs with SMC inference. We evaluate RootPPL and the CorePPL compiler through a set of real-world experiments in the domains of phylogenetics and epidemiology, demonstrating up to 6x speedups over state-of-the-art PPLs implementing SMC inference.

26 Nov 2024

Segmentation of Earth observation (EO) satellite data is critical for natural hazard analysis and disaster response. However, processing EO data at ground stations introduces delays due to data transmission bottlenecks and communication windows. Using segmentation models capable of near-real-time data analysis onboard satellites can therefore improve response times. This study presents a proof-of-concept using MobileSAM, a lightweight, pre-trained segmentation model, onboard Unibap iX10-100 satellite hardware. We demonstrate the segmentation of water bodies from Sentinel-2 satellite imagery and integrate MobileSAM with PASEOS, an open-source Python module that simulates satellite operations. This integration allows us to evaluate MobileSAM's performance under simulated conditions of a satellite constellation. Our research investigates the potential of fine-tuning MobileSAM in a decentralised way onboard multiple satellites in rapid response to a disaster. Our findings show that MobileSAM can be rapidly fine-tuned and benefits from decentralised learning, considering the constraints imposed by the simulated orbital environment. We observe improvements in segmentation performance with minimal training data and fast fine-tuning when satellites frequently communicate model updates. This study contributes to the field of onboard AI by emphasising the benefits of decentralised learning and fine-tuning pre-trained models for rapid response scenarios. Our work builds on recent related research at a critical time; as extreme weather events increase in frequency and magnitude, rapid response with onboard data analysis is essential.

Predicting the motion of other road agents enables autonomous vehicles to perform safe and efficient path planning. This task is very complex, as the behaviour of road agents depends on many factors and the number of possible future trajectories can be considerable (multi-modal). Most prior approaches proposed to address multi-modal motion prediction are based on complex machine learning systems that have limited interpretability. Moreover, the metrics used in current benchmarks do not evaluate all aspects of the problem, such as the diversity and admissibility of the output. In this work, we aim to advance towards the design of trustworthy motion prediction systems, based on some of the requirements for the design of Trustworthy Artificial Intelligence. We focus on evaluation criteria, robustness, and interpretability of outputs. First, we comprehensively analyse the evaluation metrics, identify the main gaps of current benchmarks, and propose a new holistic evaluation framework. We then introduce a method for the assessment of spatial and temporal robustness by simulating noise in the perception system. To enhance the interpretability of the outputs and generate more balanced results in the proposed evaluation framework, we propose an intent prediction layer that can be attached to multi-modal motion prediction models. The effectiveness of this approach is assessed through a survey that explores different elements in the visualization of the multi-modal trajectories and intentions. The proposed approach and findings make a significant contribution to the development of trustworthy motion prediction systems for autonomous vehicles, advancing the field towards greater safety and reliability.

Federated Learning (FL) is a decentralized learning paradigm, enabling parties to collaboratively train models while keeping their data confidential. Within autonomous driving, it brings the potential of reducing data storage costs, reducing bandwidth requirements, and to accelerate the learning. FL is, however, susceptible to poisoning attacks. In this paper, we introduce two novel poisoning attacks on FL tailored to regression tasks within autonomous driving: FLStealth and Off-Track Attack (OTA). FLStealth, an untargeted attack, aims at providing model updates that deteriorate the global model performance while appearing benign. OTA, on the other hand, is a targeted attack with the objective to change the global model's behavior when exposed to a certain trigger. We demonstrate the effectiveness of our attacks by conducting comprehensive experiments pertaining to the task of vehicle trajectory prediction. In particular, we show that, among five different untargeted attacks, FLStealth is the most successful at bypassing the considered defenses employed by the server. For OTA, we demonstrate the inability of common defense strategies to mitigate the attack, highlighting the critical need for new defensive mechanisms against targeted attacks within FL for autonomous driving.

28 Aug 2024

Local mutual-information privacy (LMIP) is a privacy notion that aims to

quantify the reduction of uncertainty about the input data when the output of a

privacy-preserving mechanism is revealed. We study the relation of LMIP with

local differential privacy (LDP), the de facto standard notion of privacy in

context-independent (CI) scenarios, and with local information privacy (LIP),

the state-of-the-art notion for context-dependent settings. We establish

explicit conversion rules, i.e., bounds on the privacy parameters for an LMIP

mechanism to also satisfy LDP/LIP, and vice versa. We use our bounds to

formally verify that LMIP is a weak privacy notion. We also show that

uncorrelated Gaussian noise is the best-case noise in terms of CI-LMIP if both

the input data and the noise are subject to an average power constraint.

Motion prediction systems aim to capture the future behavior of traffic

scenarios enabling autonomous vehicles to perform safe and efficient planning.

The evolution of these scenarios is highly uncertain and depends on the

interactions of agents with static and dynamic objects in the scene. GNN-based

approaches have recently gained attention as they are well suited to naturally

model these interactions. However, one of the main challenges that remains

unexplored is how to address the complexity and opacity of these models in

order to deal with the transparency requirements for autonomous driving

systems, which includes aspects such as interpretability and explainability. In

this work, we aim to improve the explainability of motion prediction systems by

using different approaches. First, we propose a new Explainable Heterogeneous

Graph-based Policy (XHGP) model based on an heterograph representation of the

traffic scene and lane-graph traversals, which learns interaction behaviors

using object-level and type-level attention. This learned attention provides

information about the most important agents and interactions in the scene.

Second, we explore this same idea with the explanations provided by

GNNExplainer. Third, we apply counterfactual reasoning to provide explanations

of selected individual scenarios by exploring the sensitivity of the trained

model to changes made to the input data, i.e., masking some elements of the

scene, modifying trajectories, and adding or removing dynamic agents. The

explainability analysis provided in this paper is a first step towards more

transparent and reliable motion prediction systems, important from the

perspective of the user, developers and regulatory agencies. The code to

reproduce this work is publicly available at

this https URL

This paper details the process of developing the first native large generative language model for the Nordic languages, GPT-SW3. We cover all parts of the development process, from data collection and processing, training configuration and instruction finetuning, to evaluation and considerations for release strategies. We hope that this paper can serve as a guide and reference for other researchers that undertake the development of large generative models for smaller languages.

We develop practical and theoretically grounded membership inference attacks

(MIAs) against both independent and identically distributed (i.i.d.) data and

graph-structured data. Building on the Bayesian decision-theoretic framework of

Sablayrolles et al., we derive the Bayes-optimal membership inference rule for

node-level MIAs against graph neural networks, addressing key open questions

about optimal query strategies in the graph setting. We introduce BASE and

G-BASE, computationally efficient approximations of the Bayes-optimal attack.

G-BASE achieves superior performance compared to previously proposed

classifier-based node-level MIA attacks. BASE, which is also applicable to

non-graph data, matches or exceeds the performance of prior state-of-the-art

MIAs, such as LiRA and RMIA, at a significantly lower computational cost.

Finally, we show that BASE and RMIA are equivalent under a specific

hyperparameter setting, providing a principled, Bayes-optimal justification for

the RMIA attack.

Federated learning (FL) systems are susceptible to attacks from malicious actors who might attempt to corrupt the training model through various poisoning attacks. FL also poses new challenges in addressing group bias, such as ensuring fair performance for different demographic groups. Traditional methods used to address such biases require centralized access to the data, which FL systems do not have. In this paper, we present a novel approach FedVal for both robustness and fairness that does not require any additional information from clients that could raise privacy concerns and consequently compromise the integrity of the FL system. To this end, we propose an innovative score function based on a server-side validation method that assesses client updates and determines the optimal aggregation balance between locally-trained models. Our research shows that this approach not only provides solid protection against poisoning attacks but can also be used to reduce group bias and subsequently promote fairness while maintaining the system's capability for differential privacy. Extensive experiments on the CIFAR-10, FEMNIST, and PUMS ACSIncome datasets in different configurations demonstrate the effectiveness of our method, resulting in state-of-the-art performances. We have proven robustness in situations where 80% of participating clients are malicious. Additionally, we have shown a significant increase in accuracy for underrepresented labels from 32% to 53%, and increase in recall rate for underrepresented features from 19% to 50%.

There are no more papers matching your filters at the moment.