Amazon.com

19 Feb 2025

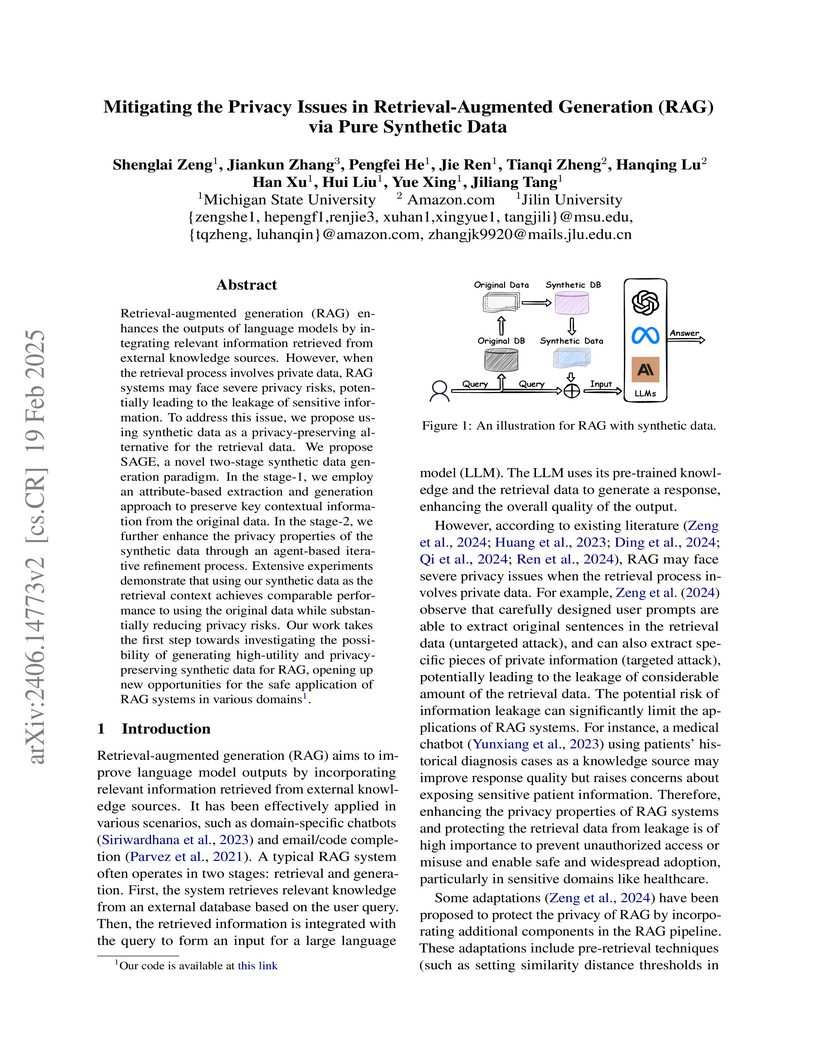

Retrieval-augmented generation (RAG) enhances the outputs of language models

by integrating relevant information retrieved from external knowledge sources.

However, when the retrieval process involves private data, RAG systems may face

severe privacy risks, potentially leading to the leakage of sensitive

information. To address this issue, we propose using synthetic data as a

privacy-preserving alternative for the retrieval data. We propose SAGE, a novel

two-stage synthetic data generation paradigm. In the stage-1, we employ an

attribute-based extraction and generation approach to preserve key contextual

information from the original data. In the stage-2, we further enhance the

privacy properties of the synthetic data through an agent-based iterative

refinement process. Extensive experiments demonstrate that using our synthetic

data as the retrieval context achieves comparable performance to using the

original data while substantially reducing privacy risks. Our work takes the

first step towards investigating the possibility of generating high-utility and

privacy-preserving synthetic data for RAG, opening up new opportunities for the

safe application of RAG systems in various domains.

To achieve successful assistance with long-horizon web-based tasks, AI agents must be able to sequentially follow real-world user instructions over a long period. Unlike existing web-based agent benchmarks, sequential instruction following in the real world poses significant challenges beyond performing a single, clearly defined task. For instance, real-world human instructions can be ambiguous, require different levels of AI assistance, and may evolve over time, reflecting changes in the user's mental state. To address this gap, we introduce RealWebAssist, a novel benchmark designed to evaluate sequential instruction-following in realistic scenarios involving long-horizon interactions with the web, visual GUI grounding, and understanding ambiguous real-world user instructions. RealWebAssist includes a dataset of sequential instructions collected from real-world human users. Each user instructs a web-based assistant to perform a series of tasks on multiple websites. A successful agent must reason about the true intent behind each instruction, keep track of the mental state of the user, understand user-specific routines, and ground the intended tasks to actions on the correct GUI elements. Our experimental results show that state-of-the-art models struggle to understand and ground user instructions, posing critical challenges in following real-world user instructions for long-horizon web assistance.

Researchers at Amazon developed Multimodal Image Matching (MIM) models, integrating vision-language alignment into deep metric learning to enhance the accuracy and semantic relevance of their visual search system. This approach yielded a 4.95% relative increase in image matching Click-Through Rate and enabled a new multimodal search experience with a 1.35% CTR improvement in online A/B tests.

Researchers from Michigan State University and collaborators present LLM-GNN, a framework for label-free node classification on text-attributed graphs by leveraging Large Language Models to annotate a small subset of nodes to train Graph Neural Networks. This approach achieved 74.9% accuracy on the OGBN-PRODUCTS dataset for under one dollar in annotation cost, demonstrating superior cost-effectiveness compared to direct LLM prediction and existing label-free methods.

Amazon.com and academic partners introduce Amazon-M2, a large-scale, multilingual, multi-locale shopping session dataset featuring rich textual product attributes across six locales and languages. This dataset supports next-product recommendation and a novel next-product title generation task, revealing that popularity heuristics often outperform deep learning models and simple last-product title heuristics outperform LLMs for exact title generation.

Researchers developed Shopping MMLU, a comprehensive benchmark with 57 tasks derived from real-world Amazon data, to evaluate Large Language Models' (LLMs) abilities as general shop assistants across four key skills including multi-linguality. Evaluation of over 20 LLMs revealed strong correlations among shopping tasks, effective transfer of general knowledge, and that proprietary models currently outperform open-source models while highlighting challenges in instruction fine-tuning and in-context learning.

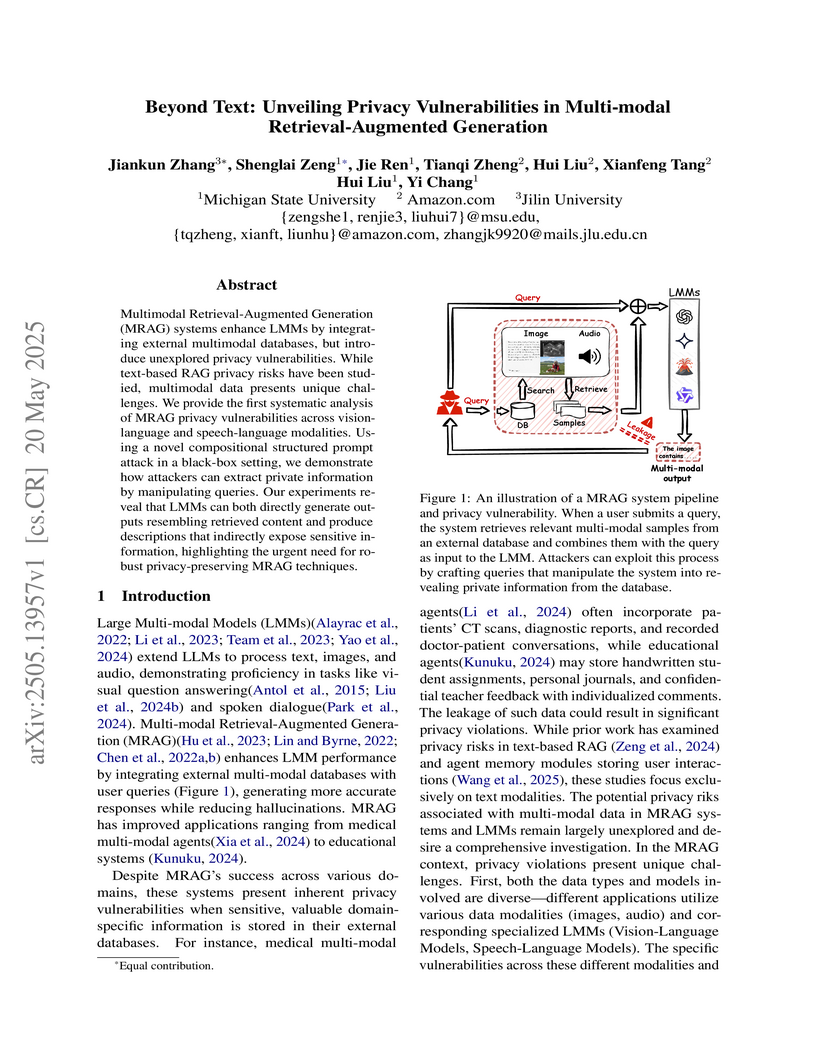

Multimodal Retrieval-Augmented Generation (MRAG) systems enhance LMMs by

integrating external multimodal databases, but introduce unexplored privacy

vulnerabilities. While text-based RAG privacy risks have been studied,

multimodal data presents unique challenges. We provide the first systematic

analysis of MRAG privacy vulnerabilities across vision-language and

speech-language modalities. Using a novel compositional structured prompt

attack in a black-box setting, we demonstrate how attackers can extract private

information by manipulating queries. Our experiments reveal that LMMs can both

directly generate outputs resembling retrieved content and produce descriptions

that indirectly expose sensitive information, highlighting the urgent need for

robust privacy-preserving MRAG techniques.

Retrieval-Augmented Generation (RAG) systems have shown promise in enhancing the performance of Large Language Models (LLMs). However, these systems face challenges in effectively integrating external knowledge with the LLM's internal knowledge, often leading to issues with misleading or unhelpful information. This work aims to provide a systematic study on knowledge checking in RAG systems. We conduct a comprehensive analysis of LLM representation behaviors and demonstrate the significance of using representations in knowledge checking. Motivated by the findings, we further develop representation-based classifiers for knowledge filtering. We show substantial improvements in RAG performance, even when dealing with noisy knowledge databases. Our study provides new insights into leveraging LLM representations for enhancing the reliability and effectiveness of RAG systems.

Researchers from Xiaomi Inc. and Amazon.com, Inc. developed X-ARES, a comprehensive framework to evaluate audio encoder performance across speech, environmental sound, and music domains using 22 tasks and two evaluation paradigms (MLP and k-NN). Their evaluation of state-of-the-art encoders revealed performance trade-offs between domain-specific and general models, with k-NN shedding light on the intrinsic quality of representations.

Ensembles of Low-Rank Expert Adapters (ELREA) introduces a framework for fine-tuning Large Language Models by clustering training data based on instruction gradient similarity and training specialized LoRA experts on these clusters. This approach addresses conflicting gradient directions during optimization, leading to performance improvements of up to 9.67% over standard LoRA on mathematical reasoning tasks and demonstrating robust generalization on diverse language understanding benchmarks.

In real-world NLP applications, Large Language Models (LLMs) offer promising

solutions due to their extensive training on vast datasets. However, the large

size and high computation demands of LLMs limit their practicality in many

applications, especially when further fine-tuning is required. To address these

limitations, smaller models are typically preferred for deployment. However,

their training is hindered by the scarcity of labeled data. In contrast,

unlabeled data is often readily which can be leveraged by using LLMs to

generate pseudo-labels for training smaller models. This enables the smaller

models (student) to acquire knowledge from LLMs(teacher) while reducing

computational costs. This process introduces challenges, such as potential

noisy pseudo-labels. Selecting high-quality and informative data is therefore

critical to enhance model performance while improving the efficiency of data

utilization. To address this, we propose LLKD that enables Learning with Less

computational resources and less data for Knowledge Distillation from LLMs.

LLKD is an adaptive sample selection method that incorporates signals from both

the teacher and student. Specifically, it prioritizes samples where the teacher

demonstrates high confidence in its labeling, indicating reliable labels, and

where the student exhibits a high information need, identifying challenging

samples that require further learning. Our comprehensive experiments show that

LLKD achieves superior performance across various datasets with higher data

efficiency.

The rapid advancement of conversational search systems revolutionizes how information is accessed by enabling the multi-turn interaction between the user and the system. Existing conversational search systems are usually built with two different models. This separation restricts the system from leveraging the intrinsic knowledge of the models simultaneously, which cannot ensure the effectiveness of retrieval benefiting the generation. The existing studies for developing unified models cannot fully address the aspects of understanding conversational context, managing retrieval independently, and generating responses. In this paper, we explore how to unify dense retrieval and response generation for large language models in conversation. We conduct joint fine-tuning with different objectives and design two mechanisms to reduce the inconsistency risks while mitigating data discrepancy. The evaluations on five conversational search datasets demonstrate that our unified model can mutually improve both tasks and outperform the existing baselines.

Reinforcement learning with verifiable rewards (RLVR) can elicit strong reasoning in large language models (LLMs), while their performance after RLVR varies dramatically across different base models. This raises a fundamental question: what microscopic property of pre-trained models leads to this variation? To investigate, we formalize reasoning as chains of Horn clauses ("if-then" rules) built from features extracted from the LLM's latent space via cross-layer sparse autoencoders (SAEs). We estimate the transition probabilities between its features, and further categorize each rule by its semantic soundness level (e.g., strict, plausible, noisy) with an LLM. Our key discovery is that high-potential models are inherently soundness-aware: their internal probability distributions systematically shift across rules' soundness levels, becoming highly distinct for "strict" versus "noisy" rules. In contrast, weaker models are soundness-agnostic, collapsing to one distribution regardless of soundness levels. To quantify this, we introduce the Soundness-Aware Level (SAL), a microscopic metric using the Jensen-Shannon Divergence to measure the separation between these distributions. We show that SAL's predictions of post-RLVR reasoning performance follow a precise empirical law (R^2=0.87) across diverse model families (Qwen, Mistral, Llama, DeepSeek) and scales (0.5B-14B). This reveals that a model's reasoning potential is tied to its intrinsic, pre-trained ability to distinguish sound knowledge from unsound ones. These findings underscore the critical role of model pre-training in shaping reasoning and offer a practical metric grounded in the model's internal mechanisms for selecting/designing stronger base models.

Multi-task learning (MTL) has been widely adopted for its ability to simultaneously learn multiple tasks. While existing gradient manipulation methods often yield more balanced solutions than simple scalarization-based approaches, they typically incur a significant computational overhead of O(K) in both time and memory, where K is the number of tasks. In this paper, we propose LDC-MTL, a simple and scalable loss discrepancy control approach for MTL, formulated from a bilevel optimization perspective. Our method incorporates two key components: (i) a bilevel formulation for fine-grained loss discrepancy control, and (ii) a scalable first-order bilevel algorithm that requires only O(1) time and memory. Theoretically, we prove that LDC-MTL guarantees convergence not only to a stationary point of the bilevel problem with loss discrepancy control but also to an ϵ-accurate Pareto stationary point for all K loss functions under mild conditions. Extensive experiments on diverse multi-task datasets demonstrate the superior performance of LDC-MTL in both accuracy and efficiency.

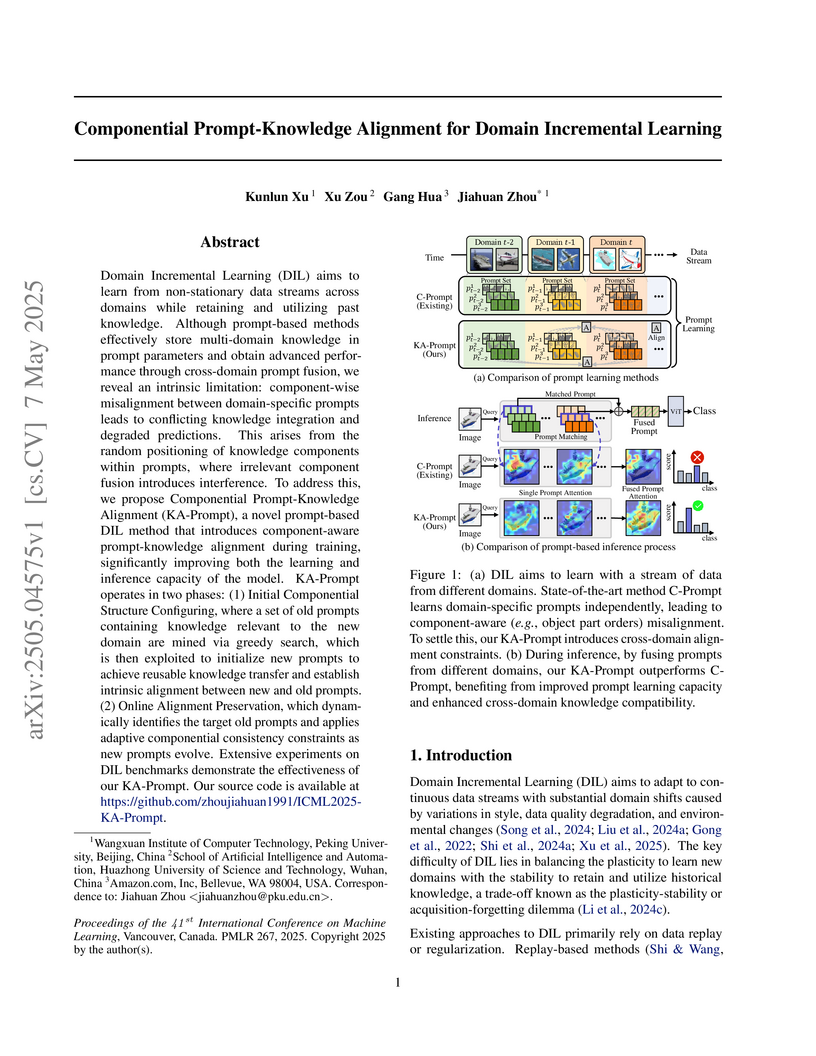

Domain Incremental Learning (DIL) aims to learn from non-stationary data

streams across domains while retaining and utilizing past knowledge. Although

prompt-based methods effectively store multi-domain knowledge in prompt

parameters and obtain advanced performance through cross-domain prompt fusion,

we reveal an intrinsic limitation: component-wise misalignment between

domain-specific prompts leads to conflicting knowledge integration and degraded

predictions. This arises from the random positioning of knowledge components

within prompts, where irrelevant component fusion introduces interference.To

address this, we propose Componential Prompt-Knowledge Alignment (KA-Prompt), a

novel prompt-based DIL method that introduces component-aware prompt-knowledge

alignment during training, significantly improving both the learning and

inference capacity of the model. KA-Prompt operates in two phases: (1) Initial

Componential Structure Configuring, where a set of old prompts containing

knowledge relevant to the new domain are mined via greedy search, which is then

exploited to initialize new prompts to achieve reusable knowledge transfer and

establish intrinsic alignment between new and old prompts. (2) Online Alignment

Preservation, which dynamically identifies the target old prompts and applies

adaptive componential consistency constraints as new prompts evolve. Extensive

experiments on DIL benchmarks demonstrate the effectiveness of our KA-Prompt.

Our source code is available at

this https URL

23 Jul 2019

Estimation of the accuracy of a large-scale knowledge graph (KG) often

requires humans to annotate samples from the graph. How to obtain statistically

meaningful estimates for accuracy evaluation while keeping human annotation

costs low is a problem critical to the development cycle of a KG and its

practical applications. Surprisingly, this challenging problem has largely been

ignored in prior research. To address the problem, this paper proposes an

efficient sampling and evaluation framework, which aims to provide quality

accuracy evaluation with strong statistical guarantee while minimizing human

efforts. Motivated by the properties of the annotation cost function observed

in practice, we propose the use of cluster sampling to reduce the overall cost.

We further apply weighted and two-stage sampling as well as stratification for

better sampling designs. We also extend our framework to enable efficient

incremental evaluation on evolving KG, introducing two solutions based on

stratified sampling and a weighted variant of reservoir sampling. Extensive

experiments on real-world datasets demonstrate the effectiveness and efficiency

of our proposed solution. Compared to baseline approaches, our best solutions

can provide up to 60% cost reduction on static KG evaluation and up to 80% cost

reduction on evolving KG evaluation, without loss of evaluation quality.

As one of the successful Transformer-based models in computer vision tasks, SegFormer demonstrates superior performance in semantic segmentation. Nevertheless, the high computational cost greatly challenges the deployment of SegFormer on edge devices. In this paper, we seek to design a lightweight SegFormer for efficient semantic segmentation. Based on the observation that neurons in SegFormer layers exhibit large variances across different images, we propose a dynamic gated linear layer, which prunes the most uninformative set of neurons based on the input instance. To improve the dynamically pruned SegFormer, we also introduce two-stage knowledge distillation to transfer the knowledge within the original teacher to the pruned student network. Experimental results show that our method can significantly reduce the computation overhead of SegFormer without an apparent performance drop. For instance, we can achieve 36.9% mIoU with only 3.3G FLOPs on ADE20K, saving more than 60% computation with the drop of only 0.5% in mIoU

HTML documents are an important medium for disseminating information on the Web for human consumption. An HTML document presents information in multiple text formats including unstructured text, structured key-value pairs, and tables. Effective representation of these documents is essential for machine understanding to enable a wide range of applications, such as Question Answering, Web Search, and Personalization. Existing work has either represented these documents using visual features extracted by rendering them in a browser, which is typically computationally expensive, or has simply treated them as plain text documents, thereby failing to capture useful information presented in their HTML structure. We argue that the text and HTML structure together convey important semantics of the content and therefore warrant a special treatment for their representation learning. In this paper, we introduce a novel representation learning approach for web pages, dubbed DOM-LM, which addresses the limitations of existing approaches by encoding both text and DOM tree structure with a transformer-based encoder and learning generalizable representations for HTML documents via self-supervised pre-training. We evaluate DOM-LM on a variety of webpage understanding tasks, including Attribute Extraction, Open Information Extraction, and Question Answering. Our extensive experiments show that DOM-LM consistently outperforms all baselines designed for these tasks. In particular, DOM-LM demonstrates better generalization performance both in few-shot and zero-shot settings, making it attractive for making it suitable for real-world application settings with limited labeled data.

18 Feb 2019

Sentiment analysis is a task that may suffer from a lack of data in certain cases, as the datasets are often generated and annotated by humans. In cases where data is inadequate for training discriminative models, generate models may aid training via data augmentation. Generative Adversarial Networks (GANs) are one such model that has advanced the state of the art in several tasks, including as image and text generation. In this paper, I train GAN models on low resource datasets, then use them for the purpose of data augmentation towards improving sentiment classifier generalization. Given the constraints of limited data, I explore various techniques to train the GAN models. I also present an analysis of the quality of generated GAN data as more training data for the GAN is made available. In this analysis, the generated data is evaluated as a test set (against a model trained on real data points) as well as a training set to train classification models. Finally, I also conduct a visual analysis by projecting the generated and the real data into a two-dimensional space using the t-Distributed Stochastic Neighbor Embedding (t-SNE) method.

Explainable deep learning models are advantageous in many situations. Prior

work mostly provide unimodal explanations through post-hoc approaches not part

of the original system design. Explanation mechanisms also ignore useful

textual information present in images. In this paper, we propose MTXNet, an

end-to-end trainable multimodal architecture to generate multimodal

explanations, which focuses on the text in the image. We curate a novel dataset

TextVQA-X, containing ground truth visual and multi-reference textual

explanations that can be leveraged during both training and evaluation. We then

quantitatively show that training with multimodal explanations complements

model performance and surpasses unimodal baselines by up to 7% in CIDEr scores

and 2% in IoU. More importantly, we demonstrate that the multimodal

explanations are consistent with human interpretations, help justify the

models' decision, and provide useful insights to help diagnose an incorrect

prediction. Finally, we describe a real-world e-commerce application for using

the generated multimodal explanations.

There are no more papers matching your filters at the moment.