CAIDAUC San Diego

M+ extends the MemoryLLM architecture, enabling Large Language Models to retain and recall information over sequence lengths exceeding 160,000 tokens, a substantial improvement over MemoryLLM's previous 20,000 token limit. This is achieved through a scalable long-term memory mechanism and a co-trained retriever that efficiently retrieves relevant hidden states, while maintaining competitive GPU memory usage.

Researchers from UCSD, UCLA, and Amazon developed MEMORYLLM, a Large Language Model architecture designed for continuous self-updatability by integrating a fixed-size memory pool directly into the transformer's latent space. The model efficiently absorbs new knowledge, retains previously learned information, and maintains performance integrity over extensive update cycles, demonstrating superior capabilities in model editing and long-context understanding while remaining robust after nearly a million updates.

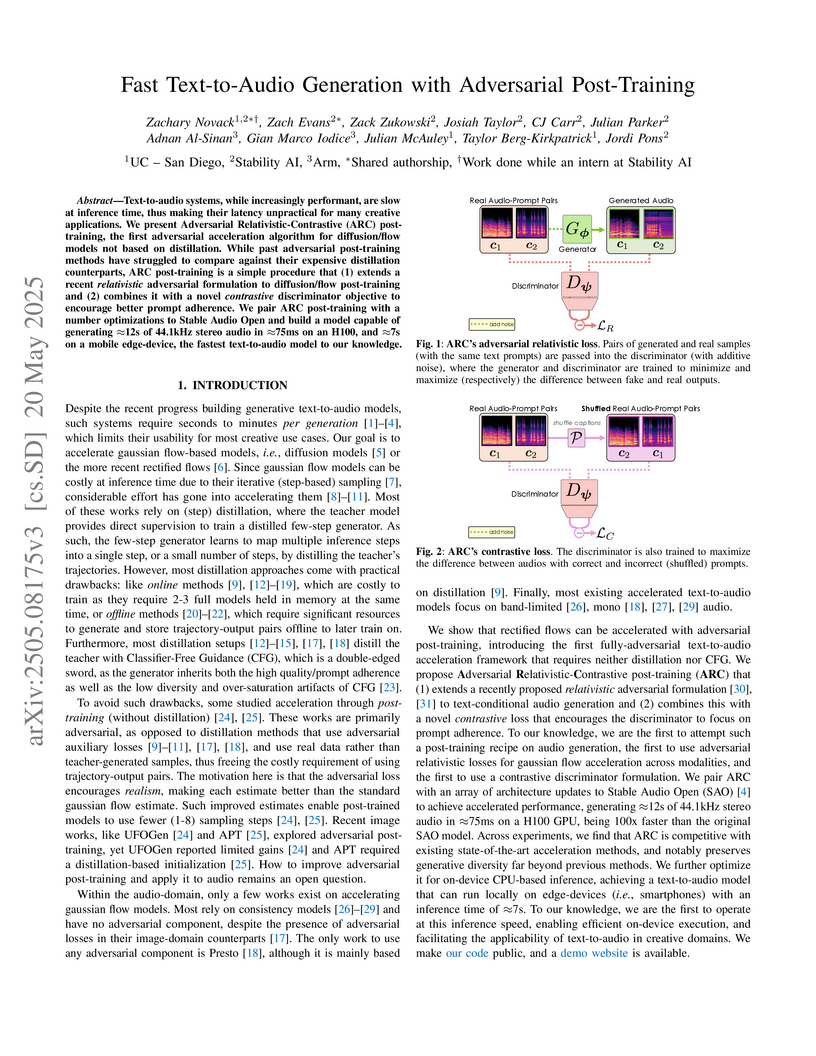

Text-to-audio systems, while increasingly performant, are slow at inference

time, thus making their latency unpractical for many creative applications. We

present Adversarial Relativistic-Contrastive (ARC) post-training, the first

adversarial acceleration algorithm for diffusion/flow models not based on

distillation. While past adversarial post-training methods have struggled to

compare against their expensive distillation counterparts, ARC post-training is

a simple procedure that (1) extends a recent relativistic adversarial

formulation to diffusion/flow post-training and (2) combines it with a novel

contrastive discriminator objective to encourage better prompt adherence. We

pair ARC post-training with a number optimizations to Stable Audio Open and

build a model capable of generating ≈12s of 44.1kHz stereo audio in

≈75ms on an H100, and ≈7s on a mobile edge-device, the fastest

text-to-audio model to our knowledge.

Researchers at University College Cork and Bar-Ilan University quantitatively mapped the efficiency and timescales of population inversion formation in relativistic plasmas through nonresonant interactions with Alfv´en waves. This study, crucial for synchrotron maser emission (SME) in Fast Radio Bursts (FRBs), finds that up to 30% of particle energy can be funneled into the inversion, particularly in highly magnetized plasmas, supporting the generation of FRB signals at GHz frequencies.

Despite advances in diffusion-based text-to-music (TTM) methods, efficient,

high-quality generation remains a challenge. We introduce Presto!, an approach

to inference acceleration for score-based diffusion transformers via reducing

both sampling steps and cost per step. To reduce steps, we develop a new

score-based distribution matching distillation (DMD) method for the EDM-family

of diffusion models, the first GAN-based distillation method for TTM. To reduce

the cost per step, we develop a simple, but powerful improvement to a recent

layer distillation method that improves learning via better preserving hidden

state variance. Finally, we combine our step and layer distillation methods

together for a dual-faceted approach. We evaluate our step and layer

distillation methods independently and show each yield best-in-class

performance. Our combined distillation method can generate high-quality outputs

with improved diversity, accelerating our base model by 10-18x (230/435ms

latency for 32 second mono/stereo 44.1kHz, 15x faster than comparable SOTA) --

the fastest high-quality TTM to our knowledge. Sound examples can be found at

this https URL

Researchers from UC San Diego and collaborators introduced WIKIDYK, a real-world, expert-curated benchmark for evaluating Large Language Models' (LLMs) ability to acquire and retain new facts. The study found that Bidirectional Language Models (BiLMs) outperform Causal Language Models (CLMs) in knowledge memorization, leading to a proposed modular ensemble framework for efficient knowledge injection.

28 Apr 2022

Shape restrictions have played a central role in economics as both testable

implications of theory and sufficient conditions for obtaining informative

counterfactual predictions. In this paper we provide a general procedure for

inference under shape restrictions in identified and partially identified

models defined by conditional moment restrictions. Our test statistics and

proposed inference methods are based on the minimum of the generalized method

of moments (GMM) objective function with and without shape restrictions.

Uniformly valid critical values are obtained through a bootstrap procedure that

approximates a subset of the true local parameter space. In an empirical

analysis of the effect of childbearing on female labor supply, we show that

employing shape restrictions in linear instrumental variables (IV) models can

lead to shorter confidence regions for both local and average treatment

effects. Other applications we discuss include inference for the variability of

quantile IV treatment effects and for bounds on average equivalent variation in

a demand model with general heterogeneity. We find in Monte Carlo examples that

the critical values are conservatively accurate and that tests about objects of

interest have good power relative to unrestricted GMM.

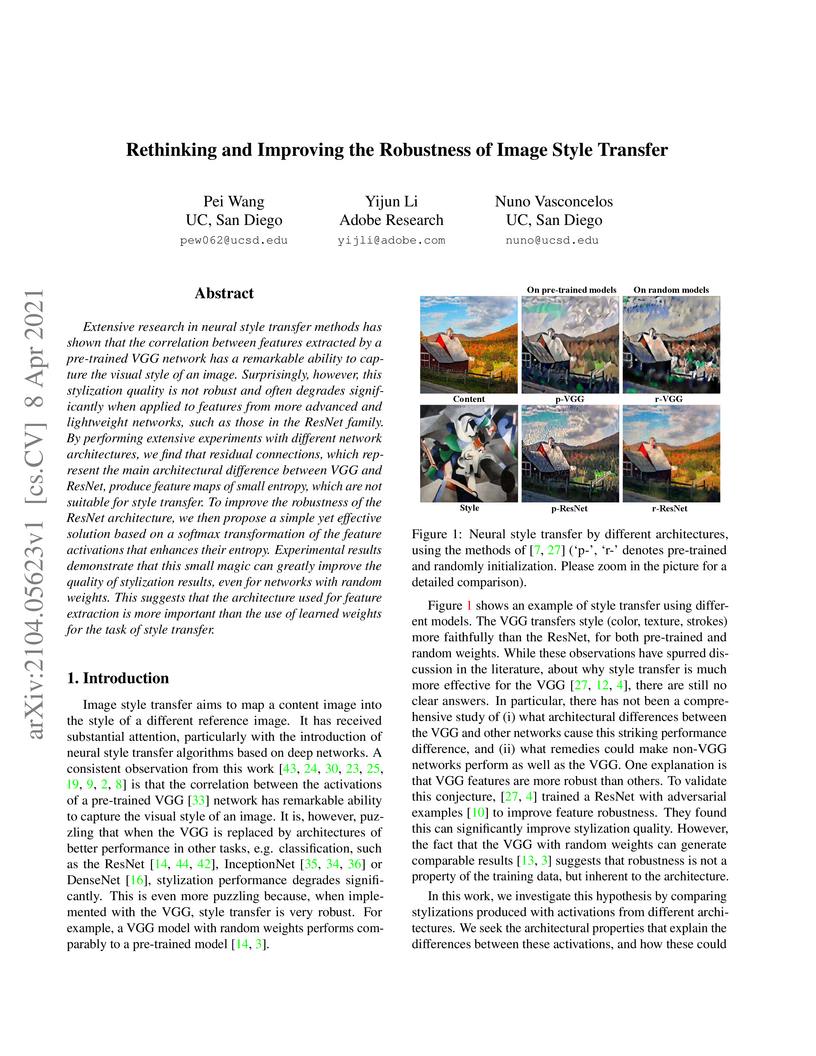

This research explains why VGG networks excel at neural style transfer while modern architectures struggle, attributing the issue to the low-entropy activation distributions caused by residual connections. The proposed solution, Stylization With Activation smoothinG (SWAG), applies a softmax transformation to feature activations during loss calculation, enabling modern networks like ResNet to achieve visual quality that matches or exceeds VGG’s performance in style transfer tasks.

07 Jul 2025

Threat hunting is an operational security process where an expert analyzes traffic, applying knowledge and lightweight tools on unlabeled data in order to identify and classify previously unknown phenomena. In this paper, we examine threat hunting metrics and practice by studying the detection of Crackonosh, a cryptojacking malware package, has on various metrics for identifying its behavior. Using a metric for discoverability, we model the ability of defenders to measure Crackonosh traffic as the malware population decreases, evaluate the strength of various detection methods, and demonstrate how different darkspace sizes affect both the ability to track the malware, but enable emergent behaviors by exploiting attacker mistakes.

04 Dec 2024

This research from Kim, Diagne, and Krstić at UC San Diego develops a robust control strategy that guarantees safety for high relative degree systems operating in the presence of unknown moving obstacles. The method combines Robust Control Barrier Functions (RCBFs) with CBF backstepping, introducing a novel smooth formulation (sRCBF) to overcome non-smoothness issues, and successfully avoids collisions in simulations where standard methods fail.

The rapid deployment of new Internet protocols over the last few years and the COVID-19 pandemic more recently (2020) has resulted in a change in the Internet traffic composition. Consequently, an updated microscopic view of traffic shares is needed to understand how the Internet is evolving to capture both such shorter- and longer-term events. Toward this end, we observe traffic composition at a research network in Japan and a Tier-1 ISP in the USA. We analyze the traffic traces passively captured at two inter-domain links: MAWI (Japan) and CAIDA (New York-Sao Paulo), which cover 100GB of data for MAWI traces and 4TB of data for CAIDA traces in total. We begin by studying the impact of COVID-19 on the MAWI link: We find a substantial increase in the traffic volume of OpenVPN and rsync, as well as increases in traffic volume from cloud storage and video conferencing services, which shows that clients shift to remote work during the pandemic. For traffic traces between March 2018 to December 2018, we find that the use of IPv6 is increasing quickly on the CAIDA monitor: The IPv6 traffic volume increases from 1.1% in March 2018 to 6.1% in December 2018, while the IPv6 traffic share remains stable in the MAWI dataset at around 9% of the traffic volume. Among other protocols at the application layer, 60%-70% of IPv4 traffic on the CAIDA link is HTTP(S) traffic, out of which two-thirds are encrypted; for the MAWI link, more than 90% of the traffic is Web, of which nearly 75% is encrypted. Compared to previous studies, this depicts a larger increase in encrypted Web traffic of up to a 3-to-1 ratio of HTTPS to HTTP. As such, our observations in this study further reconfirm that traffic shares change with time and can vary greatly depending on the vantage point studied despite the use of the same generalized methodology and analyses, which can also be applied to other traffic monitoring datasets.

02 May 2024

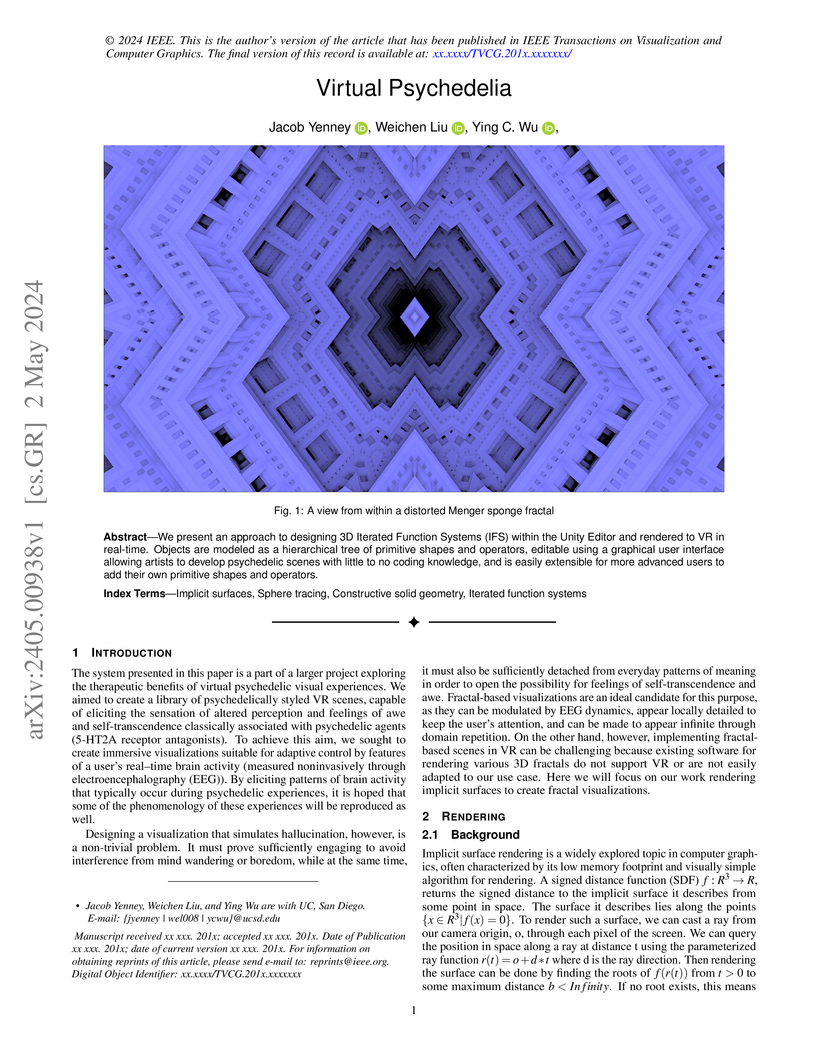

We present an approach to designing 3D Iterated Function Systems (IFS) within the Unity Editor and rendered to VR in real-time. Objects are modeled as a hierarchical tree of primitive shapes and operators, editable using a graphical user interface allowing artists to develop psychedelic scenes with little to no coding knowledge, and is easily extensible for more advanced users to add their own primitive shapes and operators.

Federated Learning (FL) has been a pivotal paradigm for collaborative training of machine learning models across distributed datasets. In heterogeneous settings, it has been observed that a single shared FL model can lead to low local accuracy, motivating personalized FL algorithms. In parallel, fair FL algorithms have been proposed to enforce group fairness on the global models. Again, in heterogeneous settings, global and local fairness do not necessarily align, motivating the recent literature on locally fair FL. In this paper, we propose new FL algorithms for heterogeneous settings, spanning the space between personalized and locally fair FL. Building on existing clustering-based personalized FL methods, we incorporate a new fairness metric into cluster assignment, enabling a tunable balance between local accuracy and fairness. Our methods match or exceed the performance of existing locally fair FL approaches, without explicit fairness intervention. We further demonstrate (numerically and analytically) that personalization alone can improve local fairness and that our methods exploit this alignment when present.

15 Oct 2024

Motivated by the impressive but diffuse scope of DDoS research and reporting, we undertake a multistakeholder (joint industry-academic) analysis to seek convergence across the best available macroscopic views of the relative trends in two dominant classes of attacks - direct-path attacks and reflection-amplification attacks. We first analyze 24 industry reports to extract trends and (in)consistencies across observations by commercial stakeholders in 2022. We then analyze ten data sets spanning industry and academic sources, across four years (2019-2023), to find and explain discrepancies based on data sources, vantage points, methods, and parameters. Our method includes a new approach: we share an aggregated list of DDoS targets with industry players who return the results of joining this list with their proprietary data sources to reveal gaps in visibility of the academic data sources. We use academic data sources to explore an industry-reported relative drop in spoofed reflection-amplification attacks in 2021-2022. Our study illustrates the value, but also the challenge, in independent validation of security-related properties of Internet infrastructure. Finally, we reflect on opportunities to facilitate greater common understanding of the DDoS landscape. We hope our results inform not only future academic and industry pursuits but also emerging policy efforts to reduce systemic Internet security vulnerabilities.

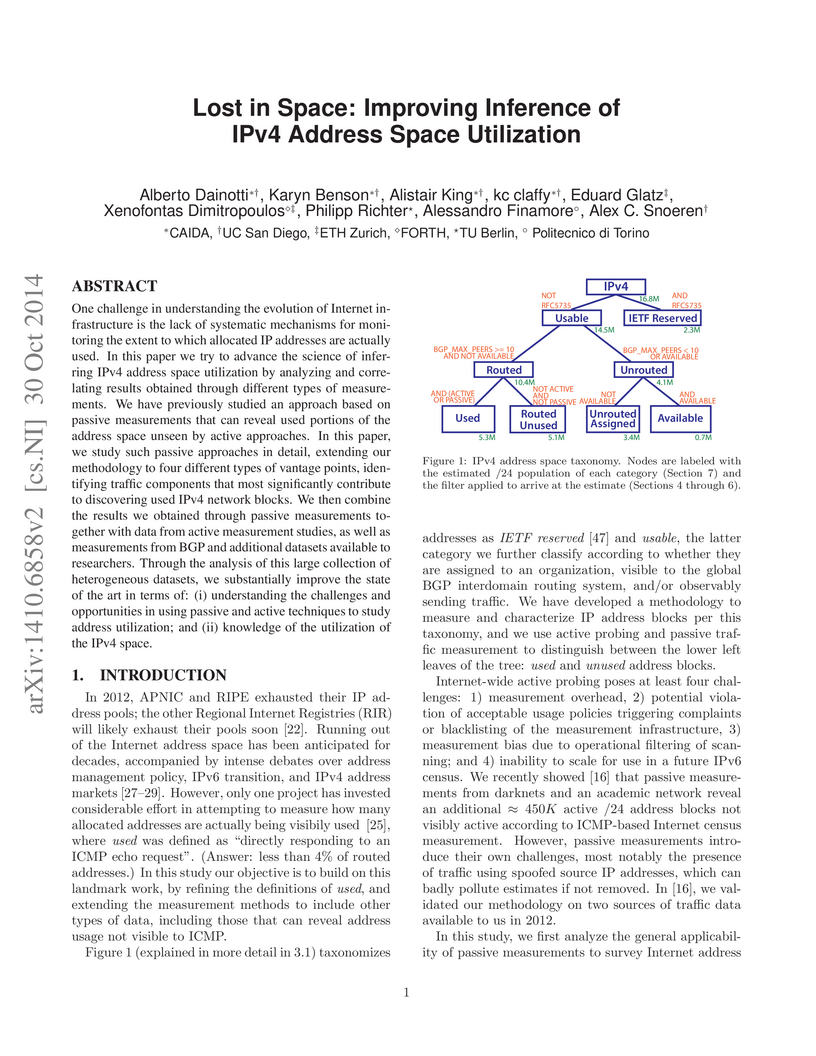

One challenge in understanding the evolution of Internet infrastructure is

the lack of systematic mechanisms for monitoring the extent to which allocated

IP addresses are actually used. In this paper we try to advance the science of

inferring IPv4 address space utilization by analyzing and correlating results

obtained through different types of measurements. We have previously studied an

approach based on passive measurements that can reveal used portions of the

address space unseen by active approaches. In this paper, we study such passive

approaches in detail, extending our methodology to four different types of

vantage points, identifying traffic components that most significantly

contribute to discovering used IPv4 network blocks. We then combine the results

we obtained through passive measurements together with data from active

measurement studies, as well as measurements from BGP and additional datasets

available to researchers. Through the analysis of this large collection of

heterogeneous datasets, we substantially improve the state of the art in terms

of: (i) understanding the challenges and opportunities in using passive and

active techniques to study address utilization; and (ii) knowledge of the

utilization of the IPv4 space.

The current framework of Internet interconnections, based on transit and

settlement-free peering relations, has systemic problems that often cause

peering disputes. We propose a new techno-economic interconnection framework

called Nash-Peering, which is based on the principles of Nash Bargaining in

game theory and economics. Nash-Peering constitutes a radical departure from

current interconnection practices, providing a broader and more economically

efficient set of interdomain relations. In particular, the direction of payment

is not determined by the direction of traffic or by rigid customer-provider

relationships but based on which AS benefits more from the interconnection. We

argue that Nash-Peering can address the root cause of various types of peering

disputes.

The Internet is a complex ecosystem composed of thousands of Autonomous Systems (ASs) operated by independent organizations; each AS having a very limited view outside its own network. These complexities and limitations impede network operators to finely pinpoint the causes of service degradation or disruption when the problem lies outside of their network. In this paper, we present Chocolatine, a solution to detect remote connectivity loss using Internet Background Radiation (IBR) through a simple and efficient method. IBR is unidirectional unsolicited Internet traffic, which is easily observed by monitoring unused address space. IBR features two remarkable properties: it is originated worldwide, across diverse ASs, and it is incessant. We show that the number of IP addresses observed from an AS or a geographical area follows a periodic pattern. Then, using Seasonal ARIMA to statistically model IBR data, we predict the number of IPs for the next time window. Significant deviations from these predictions indicate an outage. We evaluated Chocolatine using data from the UCSD Network Telescope, operated by CAIDA, with a set of documented outages. Our experiments show that the proposed methodology achieves a good trade-off between true-positive rate (90%) and false-positive rate (2%) and largely outperforms CAIDA's own IBR-based detection method. Furthermore, performing a comparison against other methods, i.e., with BGP monitoring and active probing, we observe that Chocolatine shares a large common set of outages with them in addition to many specific outages that would otherwise go undetected.

We introduce new tools and vantage points to develop and integrate proactive techniques to attract IPv6 scan traffic, thus enabling its analysis. By deploying the largest-ever IPv6 proactive telescope in a production ISP network, we collected over 600M packets of unsolicited traffic from 1.9k Autonomous Systems in 10 months. We characterized the sources of unsolicited traffic, evaluated the effectiveness of five major features across the network stack, and inferred scanners' sources of target addresses and their strategies.

21 Aug 2022

Generative Adversarial Networks (GANs) are a widely-used tool for generative

modeling of complex data. Despite their empirical success, the training of GANs

is not fully understood due to the min-max optimization of the generator and

discriminator. This paper analyzes these joint dynamics when the true samples,

as well as the generated samples, are discrete, finite sets, and the

discriminator is kernel-based. A simple yet expressive framework for analyzing

training called the Isolated Points Model is introduced. In the

proposed model, the distance between true samples greatly exceeds the kernel

width, so each generated point is influenced by at most one true point. Our

model enables precise characterization of the conditions for convergence, both

to good and bad minima. In particular, the analysis explains two common failure

modes: (i) an approximate mode collapse and (ii) divergence. Numerical

simulations are provided that predictably replicate these behaviors.

Domain adaptation (DA) is a technique that transfers predictive models

trained on a labeled source domain to an unlabeled target domain, with the core

difficulty of resolving distributional shift between domains. Currently, most

popular DA algorithms are based on distributional matching (DM). However in

practice, realistic domain shifts (RDS) may violate their basic assumptions and

as a result these methods will fail. In this paper, in order to devise robust

DA algorithms, we first systematically analyze the limitations of DM based

methods, and then build new benchmarks with more realistic domain shifts to

evaluate the well-accepted DM methods. We further propose InstaPBM, a novel

Instance-based Predictive Behavior Matching method for robust DA. Extensive

experiments on both conventional and RDS benchmarks demonstrate both the

limitations of DM methods and the efficacy of InstaPBM: Compared with the best

baselines, InstaPBM improves the classification accuracy respectively by

4.5%, 3.9% on Digits5, VisDA2017, and 2.2%, 2.9%, 3.6% on

DomainNet-LDS, DomainNet-ILDS, ID-TwO. We hope our intuitive yet effective

method will serve as a useful new direction and increase the robustness of DA

in real scenarios. Code will be available at anonymous link:

this https URL

There are no more papers matching your filters at the moment.