CSIRO’s Data61

University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign Monash University

Monash University Carnegie Mellon University

Carnegie Mellon University University of Notre Dame

University of Notre Dame UC Berkeley

UC Berkeley University College London

University College London Cornell UniversityCSIRO’s Data61

Cornell UniversityCSIRO’s Data61 Hugging FaceTU Darmstadt

Hugging FaceTU Darmstadt InriaSingapore Management UniversitySea AI Lab

InriaSingapore Management UniversitySea AI Lab MITIntelAWS AI Labs

MITIntelAWS AI Labs Shanghai Jiaotong University

Shanghai Jiaotong University Queen Mary University of London

Queen Mary University of London University of VirginiaUNC-Chapel Hill

University of VirginiaUNC-Chapel Hill ServiceNowContextual AIDetomo Inc

ServiceNowContextual AIDetomo IncBigCodeBench is a new benchmark that evaluates Large Language Models on their ability to generate Python code requiring diverse function calls and complex instructions, revealing that current models like GPT-4o achieve a maximum of 60% accuracy on these challenging tasks, significantly lagging human performance.

University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign Monash UniversityLeipzig University

Monash UniversityLeipzig University Northeastern University

Northeastern University University of Notre Dame

University of Notre Dame UC Berkeley

UC Berkeley University College London

University College London Cohere

Cohere Cornell University

Cornell University University of California, San Diego

University of California, San Diego University of British ColumbiaCSIRO’s Data61

University of British ColumbiaCSIRO’s Data61 NVIDIAIBM Research

NVIDIAIBM Research Hugging Face

Hugging Face Johns Hopkins University

Johns Hopkins University Technical University of MunichSea AI Lab

Technical University of MunichSea AI Lab MIT

MIT Princeton UniversityTechnical University of DarmstadtBaidu

Princeton UniversityTechnical University of DarmstadtBaidu ServiceNowKaggleWellesley CollegeContextual AIRobloxSalesforceIndependentMazzumaTechnion

Israel Institute of Technology

ServiceNowKaggleWellesley CollegeContextual AIRobloxSalesforceIndependentMazzumaTechnion

Israel Institute of TechnologyThe BigCode project releases StarCoder 2 models and The Stack v2 dataset, setting a new standard for open and ethically sourced Code LLM development. StarCoder 2 models, particularly the 15B variant, demonstrate competitive performance across code generation, completion, and reasoning tasks, often outperforming larger, closed-source alternatives, by prioritizing data quality and efficient architecture over sheer data quantity.

Monash UniversityLeipzig University

Monash UniversityLeipzig University Northeastern University

Northeastern University Carnegie Mellon University

Carnegie Mellon University New York University

New York University Stanford University

Stanford University McGill University

McGill University University of British ColumbiaCSIRO’s Data61IBM Research

University of British ColumbiaCSIRO’s Data61IBM Research Columbia UniversityScaDS.AI

Columbia UniversityScaDS.AI Hugging Face

Hugging Face Johns Hopkins UniversityWeizmann Institute of ScienceThe Alan Turing InstituteSea AI Lab

Johns Hopkins UniversityWeizmann Institute of ScienceThe Alan Turing InstituteSea AI Lab MIT

MIT Queen Mary University of LondonUniversity of VermontSAP

Queen Mary University of LondonUniversity of VermontSAP ServiceNowIsrael Institute of TechnologyWellesley CollegeEleuther AIRobloxUniversity ofTelefonica I+DTechnical University ofNotre DameMunichDiscover Dollar Pvt LtdUnfoldMLAllahabadTechnion –Saama AI Research LabTolokaForschungszentrum J",

ServiceNowIsrael Institute of TechnologyWellesley CollegeEleuther AIRobloxUniversity ofTelefonica I+DTechnical University ofNotre DameMunichDiscover Dollar Pvt LtdUnfoldMLAllahabadTechnion –Saama AI Research LabTolokaForschungszentrum J",StarCoder and StarCoderBase are large language models for code developed by The BigCode community, demonstrating state-of-the-art performance among open-access models on Python code generation, achieving 33.6% pass@1 on HumanEval, and strong multi-language capabilities, all while integrating responsible AI practices.

20 Mar 2025

A comprehensive framework introduces LLM-based Agentic Recommender Systems (LLM-ARS), combining multimodal large language models with autonomous capabilities to enable proactive, adaptive recommendation experiences while identifying core challenges in safety, efficiency, and personalization across recommendation domains.

Researchers from NEC Labs Europe, the University of Stuttgart, and CSIRO's Data61 introduce PDEBENCH, a comprehensive benchmark for scientific machine learning featuring 35 datasets derived from 11 diverse partial differential equations. This benchmark standardizes evaluation with physics-informed metrics, demonstrates that ML models like FNO can be orders of magnitude faster for inference than numerical solvers, and identifies challenges in modeling discontinuities and complex 3D systems.

This survey paper by Junda Wu and colleagues systematically categorizes and examines visual prompting techniques for Multimodal Large Language Models (MLLMs). It outlines how visual cues, such as bounding boxes and pixel-level masks, enhance MLLMs' abilities in visual grounding, object referring, and compositional reasoning by providing direct control over model attention.

19 May 2025

EFFIBENCH-X introduces the first multi-language benchmark designed to measure the execution time and memory efficiency of code generated by large language models. The evaluation of 26 state-of-the-art LLMs reveals a consistent efficiency gap compared to human-expert solutions, with top models achieving around 62% of human execution time efficiency and varying performance across different programming languages and problem types.

ETH Zurich

ETH Zurich University of Washington

University of Washington University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign Monash University

Monash University University of Notre Dame

University of Notre Dame Google

Google University of OxfordNUS

University of OxfordNUS University of California, San DiegoCSIRO’s Data61

University of California, San DiegoCSIRO’s Data61 NVIDIATencent AI Lab

NVIDIATencent AI Lab Hugging Face

Hugging Face Purdue UniversitySingapore Management UniversityIBMInstitute of Automation, CAS

Purdue UniversitySingapore Management UniversityIBMInstitute of Automation, CAS ServiceNowComenius University in BratislavaHKUST GuangzhouCiscoTano LabsU.Va.UberCNRS, FranceNevsky CollectiveDetomo Inc

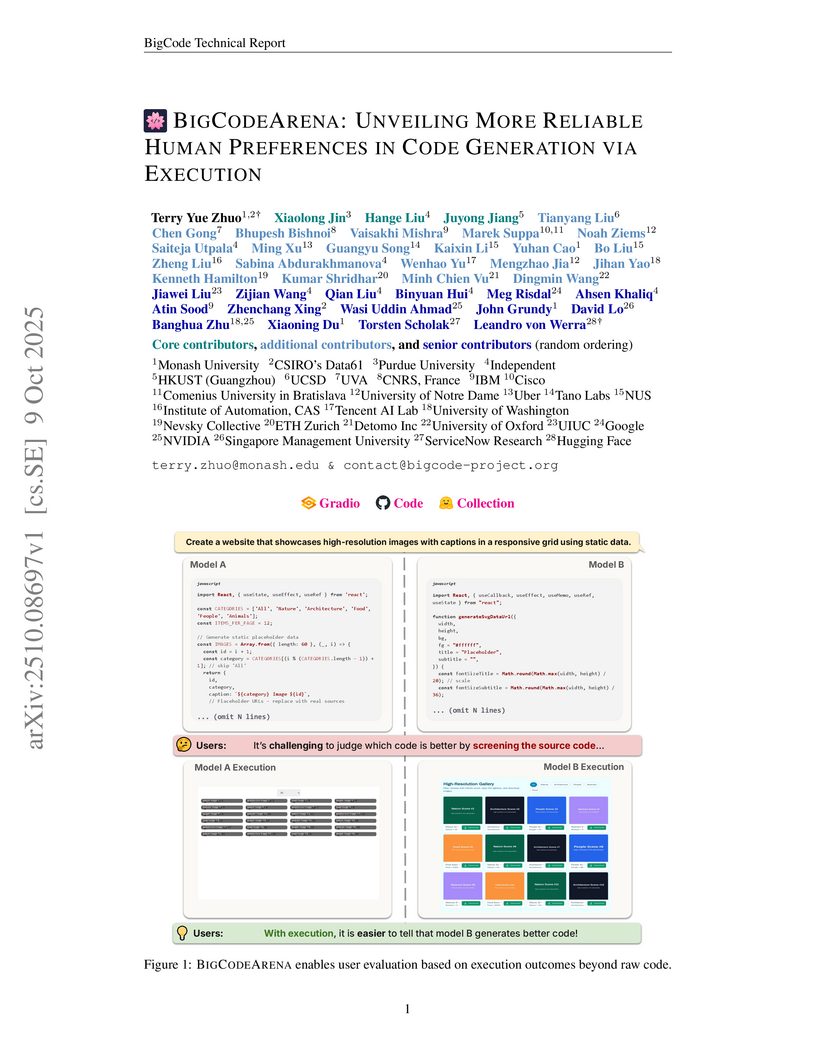

ServiceNowComenius University in BratislavaHKUST GuangzhouCiscoTano LabsU.Va.UberCNRS, FranceNevsky CollectiveDetomo IncBIGCODEARENA introduces an open, execution-backed human evaluation platform for large language model (LLM) generated code, collecting human preference data to form benchmarks for evaluating code LLMs and reward models. This approach demonstrates that execution feedback improves the reliability of evaluations and reveals detailed performance differences among models across various programming languages and environments.

20 Aug 2025

The SAND framework enables large language model (LLM) agents to self-teach explicit action deliberation through an iterative self-learning process. This approach helps agents proactively evaluate and compare multiple potential actions before committing, resulting in over 20% average performance improvement and enhanced generalization on interactive tasks like ALFWorld and ScienceWorld.

The Self-Expansion of Modularized Adaptation (SEMA) framework enables efficient and effective continual learning for pre-trained models by dynamically expanding its capacity with modular adapters only when new patterns are detected. It achieves state-of-the-art performance in rehearsal-free class-incremental learning while maintaining a sub-linear growth rate of model parameters.

Data61, CSIRO researchers introduced AgentOps, a specialized DevOps paradigm and comprehensive taxonomy for Large Language Model (LLM) agents, to enhance observability. This framework details critical artifacts and their relationships, offering a structured approach to tracing agent operations that addresses AI safety concerns and surpasses the capabilities of existing MLOps tools.

National University of Singapore

National University of Singapore Tsinghua UniversityCSIRO’s Data61

Tsinghua UniversityCSIRO’s Data61 The University of Hong KongThe University of AdelaideBeijing University of Posts and TelecommunicationsShenzhen International Graduate School, Tsinghua UniversityCSIRO

’s Data61Responsible AI Research (RAIR) Centre, The University of Adelaide

The University of Hong KongThe University of AdelaideBeijing University of Posts and TelecommunicationsShenzhen International Graduate School, Tsinghua UniversityCSIRO

’s Data61Responsible AI Research (RAIR) Centre, The University of AdelaideE2E-VGuard introduces a proactive defense framework against unauthorized voice cloning and malicious speech synthesis in production LLM-based and ASR-driven end-to-end systems. This framework integrates imperceptible perturbations to disrupt both speaker identity and pronunciation, significantly outperforming existing baselines and demonstrating robustness across diverse synthesizers, commercial APIs, and real-world scenarios.

An end-to-end Text-to-Speech framework called ParaStyleTTS provides expressive paralinguistic style control using text prompts, outperforming LLM-based systems in style accuracy and robustness while dramatically reducing computational overhead. The framework achieves 30x faster inference, an 8x smaller model size, and 2.5x less memory usage compared to state-of-the-art LLM-based models like CosyVoice.

Researchers at UNSW and CSIRO's Data61 developed CoDyRA, a continual learning framework for vision-language models like CLIP, utilizing dynamic rank-selective LoRA to adaptively balance new knowledge acquisition with the retention of previously learned information. This method achieves state-of-the-art performance on various benchmarks and enhances generalization to unseen data without incurring additional inference overhead.

28 Oct 2024

LLM-based code generation tools are essential to help developers in the

software development process. Existing tools often disconnect with the working

context, i.e., the code repository, causing the generated code to be not

similar to human developers. In this paper, we propose a novel code generation

framework, dubbed A^3-CodGen, to harness information within the code repository

to generate code with fewer potential logical errors, code redundancy, and

library-induced compatibility issues. We identify three types of representative

information for the code repository: local-aware information from the current

code file, global-aware information from other code files, and

third-party-library information. Results demonstrate that by adopting the

A^3-CodGen framework, we successfully extract, fuse, and feed code repository

information into the LLM, generating more accurate, efficient, and highly

reusable code. The effectiveness of our framework is further underscored by

generating code with a higher reuse rate, compared to human developers. This

research contributes significantly to the field of code generation, providing

developers with a more powerful tool to address the evolving demands in

software development in practice.

As data continues to grow in volume and complexity across domains such as

finance, manufacturing, and healthcare, effective anomaly detection is

essential for identifying irregular patterns that may signal critical issues.

Recently, foundation models (FMs) have emerged as a powerful tool for advancing

anomaly detection. They have demonstrated unprecedented capabilities in

enhancing anomaly identification, generating detailed data descriptions, and

providing visual explanations. This survey presents the first comprehensive

review of recent advancements in FM-based anomaly detection. We propose a novel

taxonomy that classifies FMs into three categories based on their roles in

anomaly detection tasks, i.e., as encoders, detectors, or interpreters. We

provide a systematic analysis of state-of-the-art methods and discuss key

challenges in leveraging FMs for improved anomaly detection. We also outline

future research directions in this rapidly evolving field.

11 Aug 2025

Researchers from CSIRO's Data61 and UNSW developed SHIELDA, a framework that provides a systematic and structured approach for handling exceptions in LLM-driven agentic workflows. It introduces a comprehensive taxonomy of 36 exception types and a triadic model for organizing 48 handler patterns, demonstrating effective multi-stage, phase-aware recovery in an empirical case study.

Learning deep discrete latent presentations offers a promise of better

symbolic and summarized abstractions that are more useful to subsequent

downstream tasks. Inspired by the seminal Vector Quantized Variational

Auto-Encoder (VQ-VAE), most of work in learning deep discrete representations

has mainly focused on improving the original VQ-VAE form and none of them has

studied learning deep discrete representations from the generative viewpoint.

In this work, we study learning deep discrete representations from the

generative viewpoint. Specifically, we endow discrete distributions over

sequences of codewords and learn a deterministic decoder that transports the

distribution over the sequences of codewords to the data distribution via

minimizing a WS distance between them. We develop further theories to connect

it with the clustering viewpoint of WS distance, allowing us to have a better

and more controllable clustering solution. Finally, we empirically evaluate our

method on several well-known benchmarks, where it achieves better qualitative

and quantitative performances than the other VQ-VAE variants in terms of the

codebook utilization and image reconstruction/generation.

03 Jun 2025

This paper introduces RAGOps, a comprehensive framework extending LLMOps to manage the continuous data lifecycle of Retrieval-Augmented Generation (RAG) systems. It addresses the operational challenges of dynamic external knowledge sources, enabling enhanced adaptability, monitorability, observability, traceability, and reliability for production RAG deployments.

14 Oct 2025

Web applications are prime targets for cyberattacks as gateways to critical services and sensitive data. Traditional penetration testing is costly and expertise-intensive, making it difficult to scale with the growing web ecosystem. While language model agents show promise in cybersecurity, modern web applications demand visual understanding, dynamic content handling, and multi-step interactions that only computer-use agents (CUAs) can perform. Yet, their ability to discover and exploit vulnerabilities through graphical interfaces remains largely unexplored. We present HackWorld, the first framework for systematically evaluating CUAs' capabilities to exploit web application vulnerabilities via visual interaction. Unlike sanitized benchmarks, HackWorld includes 36 real-world applications across 11 frameworks and 7 languages, featuring realistic flaws such as injection vulnerabilities, authentication bypasses, and unsafe input handling. Using a Capture-the-Flag (CTF) setup, it tests CUAs' capacity to identify and exploit these weaknesses while navigating complex web interfaces. Evaluation of state-of-the-art CUAs reveals concerning trends: exploitation rates below 12% and low cybersecurity awareness. CUAs often fail at multi-step attack planning and misuse security tools. These results expose the current limitations of CUAs in web security contexts and highlight opportunities for developing more security-aware agents capable of effective vulnerability detection and exploitation.

There are no more papers matching your filters at the moment.