Center for Quantum Information and Quantum Biology, The University of Osaka

24 Sep 2025

Quantum compilers that reduce the number of T gates are essential for minimizing the overhead of fault-tolerant quantum computation. Effective T-count reduction requires identifying equivalent circuit transformation rules that existing tools do not yet exploit. In this paper, we rewrite any given Clifford+T circuit as a Clifford block followed by a sequential Pauli-based computation, and introduce a nontrivial, ancilla-free equivalent transformation rule, the multi-product commutation relation (MCR). This rule constructs gate sequences based on specific commutation properties among multi-Pauli operators, yielding instances that appear non-commuting but can in fact be commuted. To evaluate whether existing compilers account for this commutation rule, we create a benchmark circuit dataset using quantum circuit unoptimization. This technique intentionally adds redundancy to a circuit while preserving its equivalence. Because the structure of the original circuit before unoptimization is known, this method enables a quantitative evaluation of compiler performance by measuring how closely the optimized circuit matches the original one. Our numerical experiments reveal that the transformation rule based on MCR is not yet incorporated into current compilers. This finding suggests untapped potential for further T-count reduction by integrating MCR-aware transformations, paving the way for improved quantum compilers.
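The MCR rule itself is not spelled out in this abstract. As background only, the sketch below shows the standard pairwise test that any reasoning about commuting multi-qubit Pauli operators builds on: in a Pauli-based computation each T gate becomes a π/8 rotation about some Pauli operator, and two such rotations can be reordered freely when their Paulis commute. The function name `paulis_commute` is hypothetical, and this is not the MCR transformation.

```python
def paulis_commute(p: str, q: str) -> bool:
    """Return True if Pauli strings p and q (e.g. "XIZ", "ZYI") commute.

    Single-qubit Paulis anticommute exactly when both are non-identity
    and different; the tensor products commute iff the number of such
    anticommuting positions is even.
    """
    if len(p) != len(q):
        raise ValueError("Pauli strings must act on the same number of qubits")
    valid = set("IXYZ")
    if not (set(p) <= valid and set(q) <= valid):
        raise ValueError("Pauli strings may only contain I, X, Y, Z")
    anticommuting = sum(1 for a, b in zip(p, q) if a != "I" and b != "I" and a != b)
    return anticommuting % 2 == 0


# Usage: pairwise checks on a few multi-qubit Paulis.
print(paulis_commute("XX", "ZZ"))    # True: two anticommuting positions
print(paulis_commute("XI", "ZI"))    # False: one anticommuting position
print(paulis_commute("XYZ", "ZYX"))  # True: two anticommuting positions
```

Pairwise commutation is only the starting point; the abstract's point is that MCR captures cases this pairwise test alone would classify as non-commuting.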

A deterministic discrete denoising algorithm, employing a time-dependent herding method, significantly improves the efficiency and sample quality of discrete-state diffusion models. This "drop-in replacement" achieves up to a 10x improvement in perplexity for text generation and better FID/IS for images, often with fewer inference steps and without requiring model retraining.
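The abstract does not give the time-dependent herding rule. As a hedged illustration of the underlying idea only, this sketch implements standard (time-independent) herding for a single categorical distribution: a weight vector replaces random sampling, so the empirical frequencies track the target probabilities deterministically. The name `herd_categorical` is hypothetical, and this is not the paper's denoising algorithm.

```python
def herd_categorical(probs, n_samples):
    """Deterministic herding for a categorical distribution.

    With one-hot features, the herding weights w track the gap between
    the target probabilities and the empirical frequencies: at each step
    pick argmax_i w[i], then add probs to w and subtract 1 from the
    chosen entry (w <- w + probs - onehot(choice)).
    """
    w = list(probs)  # w_0 = target moments
    samples = []
    for _ in range(n_samples):
        i = max(range(len(w)), key=lambda j: w[j])  # first maximal index on ties
        samples.append(i)
        w = [wj + pj for wj, pj in zip(w, probs)]
        w[i] -= 1.0
    return samples


# Usage: counts match n * p with no randomness involved.
seq = herd_categorical([0.5, 0.25, 0.25], 8)
print(seq)  # [0, 1, 2, 0, 0, 1, 2, 0]
```

In a discrete diffusion sampler, a step like this would stand in for drawing each token from its predicted categorical distribution; how the paper makes the weights time-dependent across denoising steps is not reproduced here.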

21 Oct 2025

An architecture for measurement-based fault-tolerant quantum computation is introduced, designed for high-connectivity devices to enable megaquop to gigaquop scale operations using moderate physical qubit counts. It leverages Knill's error-correcting teleportation with self-dual CSS codes, offering a resource-efficient path to early fault-tolerant quantum computing.

A unified approach from Osaka University enables accurate reconstruction of both near and far objects in unbounded 3D scenes by representing Gaussian splatting in homogeneous coordinates, achieving state-of-the-art novel view synthesis while maintaining real-time rendering capabilities without requiring scene segmentation or pre-processing steps.
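A minimal sketch of why homogeneous coordinates suit unbounded scenes, assuming the usual convention that (x, y, z, w) maps to (x/w, y/w, z/w): a bounded, normalized 4-vector can represent arbitrarily distant points as w approaches 0, and w = 0 encodes a point at infinity. The helper names are hypothetical, and this is not the paper's Gaussian-splatting code.

```python
import math


def to_cartesian(point_h):
    """Map homogeneous (x, y, z, w) to Cartesian (x/w, y/w, z/w).

    Returns None when w == 0, i.e. a point at infinity in direction (x, y, z).
    """
    x, y, z, w = point_h
    if w == 0:
        return None
    return (x / w, y / w, z / w)


def normalize(point_h):
    """Scale a homogeneous vector to unit length; the Cartesian point is unchanged."""
    norm = math.sqrt(sum(c * c for c in point_h))
    return tuple(c / norm for c in point_h)


# Usage: shrinking w moves the point farther away along +x,
# while the normalized homogeneous vector stays bounded.
for w in (1.0, 0.1, 0.001):
    print(to_cartesian((1.0, 0.0, 0.0, w)), normalize((1.0, 0.0, 0.0, w)))
```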

The Wavy Transformer introduces a novel architecture that re-conceptualizes transformer attention dynamics as second-order wave propagation, moving away from dissipative diffusion to mitigate over-smoothing. This approach enhances model performance across diverse tasks, achieving a +1.99 macro average score gain on the GLUE benchmark and a +0.92 Top-1 accuracy gain for DeiT-Ti models on ImageNet, while preserving feature diversity in deeper layers.
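As a toy contrast only (not the Wavy Transformer architecture; all names are hypothetical), the sketch below compares first-order diffusion dx/dt = (P − I)x with second-order wave dynamics d²x/dt² = (P − I)x on a small graph. Here P stands in for a row-stochastic attention matrix; using a fixed, row-normalized adjacency is an assumption for illustration. Diffusion drives the node features to a constant (over-smoothing), while the wave system keeps oscillating and preserves feature variance.

```python
def graph_operator(adj):
    """Return a function x -> (P x - x), with P the row-normalized adjacency."""
    deg = [sum(row) for row in adj]

    def apply(x):
        return [sum(a * xj for a, xj in zip(row, x)) / d - xi
                for row, d, xi in zip(adj, deg, x)]
    return apply


def variance(x):
    m = sum(x) / len(x)
    return sum((xi - m) ** 2 for xi in x) / len(x)


def diffuse(adj, x, dt, steps):
    """First-order dynamics dx/dt = (P - I) x, explicit Euler; returns variance history."""
    op = graph_operator(adj)
    history = [variance(x)]
    for _ in range(steps):
        x = [xi + dt * di for xi, di in zip(x, op(x))]
        history.append(variance(x))
    return history


def wave(adj, x, dt, steps):
    """Second-order dynamics d2x/dt2 = (P - I) x, symplectic Euler, zero initial velocity."""
    op = graph_operator(adj)
    v = [0.0] * len(x)
    history = [variance(x)]
    for _ in range(steps):
        v = [vi + dt * ai for vi, ai in zip(v, op(x))]
        x = [xi + dt * vi for xi, vi in zip(x, v)]
        history.append(variance(x))
    return history


# 4-node cycle graph with an alternating (high-frequency) initial signal.
cycle4 = [[0, 1, 0, 1],
          [1, 0, 1, 0],
          [0, 1, 0, 1],
          [1, 0, 1, 0]]
x0 = [1.0, -1.0, 1.0, -1.0]
d = diffuse(cycle4, x0, dt=0.1, steps=200)
w = wave(cycle4, x0, dt=0.1, steps=200)
print(d[-1])         # essentially 0: features collapse to a constant
print(max(w[100:]))  # stays close to 1: the signal keeps oscillating
```

The symplectic update is chosen so the toy wave system neither gains nor loses energy spuriously; with plain explicit Euler the oscillation would slowly grow.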

11 Oct 2025

Reinforcement Learning has emerged as a dominant post-training approach to elicit agentic RAG behaviors such as search and planning from language models. Despite its success with larger models, applying RL to compact models (e.g., 0.5–1B parameters) presents unique challenges. The compact models exhibit poor initial performance, resulting in sparse rewards and unstable training. To overcome these difficulties, we propose Distillation-Guided Policy Optimization (DGPO), which employs cold-start initialization from teacher demonstrations and continuous teacher guidance during policy optimization. To understand how compact models preserve agentic behavior, we introduce Agentic RAG Capabilities (ARC), a fine-grained metric analyzing reasoning, search coordination, and response synthesis. Comprehensive experiments demonstrate that DGPO enables compact models to achieve sophisticated agentic search behaviors, even outperforming the larger teacher model in some cases. DGPO makes agentic RAG feasible in computing resource-constrained environments.

14 Nov 2025

To simulate plasma phenomena, large-scale computational resources have been employed to develop high-precision, high-resolution plasma simulations. A main obstacle in plasma simulation is that the required computational resources scale polynomially with the number of spatial grid points, which poses a significant challenge for large-scale modeling. To address this issue, this study presents a quantum algorithm for simulating the nonlinear electromagnetic fluid dynamics that govern space plasmas. We map the dynamics to the Schrödinger equation by applying Koopman-von Neumann linearization and evolve the system using Hamiltonian simulation via quantum singular value transformation. Our algorithm has time complexity $O(s N_x \operatorname{polylog}(N_x) T)$, where $s$, $N_x$, and $T$ are the spatial dimension, the number of spatial grid points per dimension, and the evolution time, respectively. Compared with the $O(s N_x^s (T^{5/4} + T N_x))$ scaling of the classical finite-volume method, this algorithm achieves a polynomial speedup in $N_x$. The space complexity is exponentially reduced from $O(s N_x^s)$ to $O(s \operatorname{polylog}(N_x))$. Numerical experiments show that accurate solutions are attainable with smaller $m$ than theoretically anticipated and with practical values of $m$ and $R$, supporting the feasibility of the approach. As a practical demonstration, the method accurately reproduces the Kelvin-Helmholtz instability, underscoring its capability to tackle more intricate nonlinear dynamics. These results suggest that quantum computing can offer a viable pathway to overcome the computational barriers of multiscale plasma modeling.
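Taking the stated complexities at face value (constants and the dependence on $m$ and $R$ suppressed), the ratio of classical to quantum time makes the polynomial speedup in $N_x$ explicit, and the memory ratio shows the exponential space reduction:

```latex
\frac{s\,N_x^{s}\,\bigl(T^{5/4} + T N_x\bigr)}{s\,N_x \operatorname{polylog}(N_x)\,T}
  = \frac{N_x^{s-1}\bigl(T^{1/4} + N_x\bigr)}{\operatorname{polylog}(N_x)}
  \;\ge\; \frac{N_x^{s}}{\operatorname{polylog}(N_x)},
\qquad
\frac{s\,N_x^{s}}{s \operatorname{polylog}(N_x)} = \frac{N_x^{s}}{\operatorname{polylog}(N_x)}.
```

The inequality uses only $T^{1/4} + N_x \ge N_x$; because the quantum memory is polylogarithmic in $N_x$, the memory ratio is exponential in the number of qubits.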

28 Oct 2025

Researchers developed a method for efficient magic state distillation, replacing complex code transformations in Magic State Cultivation (MSC) with lattice surgery. This approach reduces spacetime overhead by over 50% while maintaining comparable logical error rates, and introduces a lookup table for a further 15% reduction through early rejection.

This research introduces KnowMT-Bench, a new benchmark for evaluating large language models in multi-turn, knowledge-intensive long-form question answering across medical, financial, and legal domains. It quantitatively demonstrates a decline in model factuality and efficiency caused by self-generated conversational noise and shows that Retrieval-Augmented Generation (RAG) effectively reverses this performance degradation.

This work introduces a decentralized multi-agent world model that integrates Collective Predictive Coding with temporal dynamics, enabling two agents to develop shared symbolic communication for coordination in partially observable environments. The research demonstrates that decentralized learning, without direct access to other agents' internal states, can lead to the emergence of more meaningful, environment-reflective symbolic representations that facilitate improved coordination.

Why and when is deep better than shallow? We answer this question in a framework that is agnostic to network implementation. We formulate a deep model as an abstract state-transition semigroup acting on a general metric space, and separate the implementation (e.g., ReLU nets, transformers, and chain-of-thought) from the abstract state transition. We prove a bias-variance decomposition in which the variance depends only on the abstract depth-$k$ network and not on the implementation (Theorem 1). We further split the bounds into output and hidden parts to tie the depth dependence of the variance to the metric entropy of the state-transition semigroup (Theorem 2). We then investigate implementation-free conditions under which the variance grows polynomially or logarithmically with depth (Section 4). Combining these with exponential or polynomial bias decay identifies four canonical bias-variance trade-off regimes (EL/EP/PL/PP) and produces explicit optimal depths $k^*$. Across regimes, $k^* > 1$ typically holds, giving a rigorous form of depth supremacy. The lowest generalization error bound is achieved in the EL regime (exponentially decaying bias and logarithmically growing variance), explaining why and when deep is better, especially for iterative or hierarchical concept classes such as neural ODEs, diffusion/score models, and chain-of-thought reasoning.
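To make the four regimes concrete, this sketch grid-searches $k^*$ for a bound of the form bias(k) + variance(k). The functional forms and constants below are hypothetical stand-ins chosen only to match the regime names (E/P for exponential/polynomial bias decay, L/P for logarithmic/polynomial variance growth); the paper's actual bounds from Theorems 1 and 2 differ.

```python
import math

# Hypothetical stand-ins for the bias and variance terms (not the paper's bounds).
BIAS = {
    "E": lambda k, b, a: b * math.exp(-a * k),  # exponential decay
    "P": lambda k, b, a: b * k ** (-a),         # polynomial decay
}
VARIANCE = {
    "L": lambda k, v, p: v * math.log(k + 1),   # logarithmic growth
    "P": lambda k, v, p: v * k ** p,            # polynomial growth
}


def optimal_depth(regime, b=1.0, a=1.0, v=0.01, p=1.0, k_max=1000):
    """Grid-search k* = argmin_k bias(k) + variance(k) over k = 1..k_max.

    regime is a two-letter code: first letter = bias decay, second = variance growth.
    """
    bias, var = BIAS[regime[0]], VARIANCE[regime[1]]
    return min(range(1, k_max + 1), key=lambda k: bias(k, b, a) + var(k, v, p))


depths = {regime: optimal_depth(regime) for regime in ("EL", "EP", "PL", "PP")}
print(depths)
```

With these illustrative constants every regime gives $k^* > 1$, mirroring the abstract's claim that depth typically helps; slow (logarithmic) variance growth lets the optimum move deeper.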

Dynamic GNNs, which integrate temporal and spatial features in electroencephalography (EEG) data, have shown great potential in automating seizure detection. However, fully capturing the underlying dynamics needed to represent brain states, such as seizure and non-seizure, remains non-trivial and presents two fundamental challenges. First, most existing dynamic GNN methods are built on temporally fixed static graphs, which fail to reflect the evolving nature of brain connectivity during seizure progression. Second, current efforts to jointly model temporal signals and graph structures and, more importantly, their interactions remain nascent, often resulting in inconsistent performance. To address these challenges, we present the first theoretical analysis of these two problems, demonstrating the effectiveness and necessity of explicit dynamic modeling and of the time-then-graph dynamic GNN approach. Building on these insights, we propose EvoBrain, a novel seizure detection model that integrates a two-stream Mamba architecture with a GCN enhanced by Laplacian Positional Encoding, following neurological insights. Moreover, EvoBrain incorporates explicitly dynamic graph structures, allowing both nodes and edges to evolve over time. Our contributions include (a) a theoretical analysis proving the expressivity advantage of explicit dynamic modeling and time-then-graph over other approaches, (b) a novel and efficient model that significantly improves AUROC by 23% and F1 score by 30% compared with the dynamic GNN baseline, and (c) broad evaluations of our method on challenging early seizure prediction tasks.

Entanglement asymmetry is a measure that quantifies the degree of symmetry breaking at the level of a subsystem. In this work, we investigate the entanglement asymmetry in the $\mathfrak{su}(N)_k$ Wess-Zumino-Witten model and discuss the quantum Mpemba effect for SU(N) symmetry, the phenomenon in which the more the symmetry is initially broken, the faster it is restored. Due to the Coleman-Mermin-Wagner theorem, spontaneous breaking of continuous global symmetries is forbidden in 1+1 dimensions. To circumvent this no-go theorem, we consider excited initial states that explicitly break the non-Abelian global symmetry. We focus in particular on initial states built from primary operators in the fundamental and adjoint representations. In both cases, we study the real-time dynamics of the Rényi entanglement asymmetry and provide clear evidence of the quantum Mpemba effect for SU(N) symmetry. Furthermore, we find a new type of quantum Mpemba effect for the primary operator in the fundamental representation: increasing the rank N leads to stronger initial symmetry breaking but faster symmetry restoration. Also, increasing the level k leads to weaker initial symmetry breaking but slower symmetry restoration. In contrast, no such behavior is observed for the adjoint case, which may suggest that this new type of quantum Mpemba effect is not universal.
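The abstract defines entanglement asymmetry only in words. For the simpler Abelian U(1) case, a standard definition dephases the reduced density matrix into charge sectors, ρ_{A,Q} = Σ_q Π_q ρ_A Π_q, and measures ΔS_n = S_n(ρ_{A,Q}) − S_n(ρ_A). The sketch below (hypothetical function names) computes the n = 2 case for a small matrix that is assumed to already be ρ_A, written in a basis where the charge is diagonal. The SU(N) analysis in the abstract instead requires averaging over the non-Abelian group, which this toy does not attempt.

```python
import math


def purity(rho):
    """Tr(rho^2) for a Hermitian density matrix: sum_ij |rho_ij|^2."""
    return sum(abs(x) ** 2 for row in rho for x in row)


def renyi2_asymmetry_u1(rho, charges):
    """Rényi-2 entanglement asymmetry for a U(1) charge diagonal in rho's basis.

    Dephasing onto charge sectors keeps only entries rho_ij with
    charges[i] == charges[j]; then
    Delta S_2 = S_2(rho_Q) - S_2(rho) = log Tr(rho^2) - log Tr(rho_Q^2).
    """
    n = len(rho)
    dephased = sum(abs(rho[i][j]) ** 2
                   for i in range(n) for j in range(n)
                   if charges[i] == charges[j])
    return math.log(purity(rho)) - math.log(dephased)


# Single qubit with charge 0 for |0> and 1 for |1>.
plus = [[0.5, 0.5], [0.5, 0.5]]  # |+><+|: breaks the U(1) symmetry
zero = [[1.0, 0.0], [0.0, 0.0]]  # |0><0|: symmetric
print(renyi2_asymmetry_u1(plus, [0, 1]))  # log 2, about 0.693
print(renyi2_asymmetry_u1(zero, [0, 1]))  # 0.0
```

A symmetric state has no coherences between charge sectors, so dephasing leaves it unchanged and the asymmetry vanishes; the quantum Mpemba effect concerns how quickly this quantity decays in time.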

Keisuke Fujii introduces Out-of-Time-Order Correlator (OTOC) spectroscopy, an algorithmic interpretation of higher-order OTOCs using Quantum Signal Processing (QSP). This framework establishes OTOCs as measurements of specific Fourier components of singular value distributions, enabling frequency-selective probing of quantum scrambling and diverse many-body dynamics.

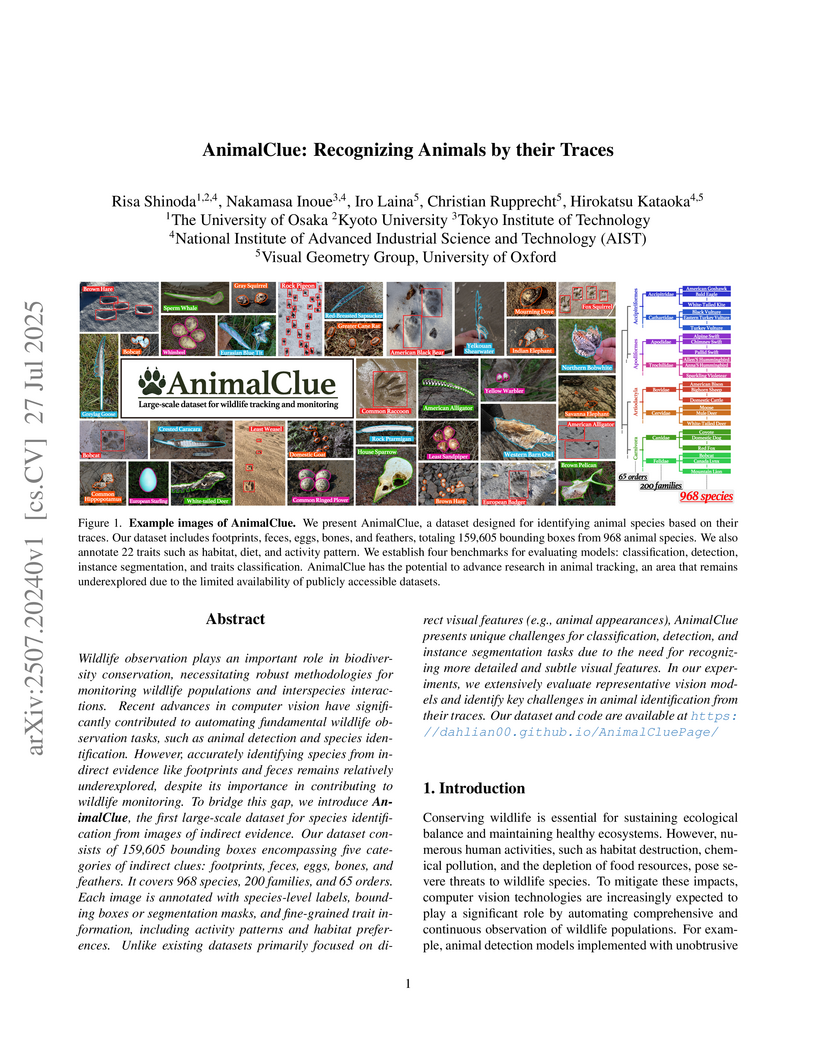

AnimalClue is introduced as the first large-scale, multi-modal dataset specifically designed for automated species identification from indirect biological evidence. It comprises 159,605 bounding boxes and 141,314 segmentation masks for 968 species across five trace types (footprints, feces, eggs, bones, feathers), and includes 22 fine-grained ecological trait annotations, setting new benchmarks for wildlife monitoring.

A tenet of contemporary physics is that new physics beyond the Standard Model lurks at a scale related to the Planck length. Developing and validating a unified framework that merges general relativity and quantum physics is contingent on observing Planck-scale physics. Here, we present a fully quantum model for measuring the nonstationary dynamics of a ng-mass mechanical resonator, whose dynamics deviate slightly from the predictions of standard quantum mechanics owing to modified commutation relations associated with quantum gravity effects at low energy scales. The deformed commutator is quantified by the deviation of the oscillation frequency, which is amplified by the nonlinear mechanism of the detection field. The measurement resolution is optimized to a precision level 15 orders of magnitude below the electroweak scale.

Fast radio bursts (FRBs) constitute a unique probe of various astrophysical and cosmological environments via their characteristic dispersion measures (DM) and rotation measures (RM), which encode information about the ionized gas traversed by the FRB sightlines. In this work, we analyse the observed RMs of 14 localized FRBs at $0.05 \lesssim z \lesssim 0.5$ to infer total magnetic fields in various galactic environments. Additionally, we calculate $f_{\rm gas}$, the average fraction of halo baryons in the ionized CGM. We build a spectroscopic dataset of FRB foreground galaxy halos, acquired with VLT/MUSE and the FLIMFLAM survey. We develop a novel Bayesian algorithm and use it to correlate the individual intervening halos with the observed RM. This approach allows us to disentangle the magnetic fields present in the various environments traversed by the FRB. Our analysis yields the first direct FRB constraints on the strength of magnetic fields in the ISM and halos of FRB host galaxies, as well as in the halos of foreground galaxies. We find that the average magnetic field in the ISM of FRB hosts is $B_{\rm host}^{\rm local} = 5.44^{+1.13}_{-0.87}\,\mu{\rm G}$. Additionally, we place upper limits on the average magnetic field in FRB host halos, $B_{\rm host}^{\rm halo} < 4.81\,\mu{\rm G}$, and in foreground intervening halos, $B_{\rm f/g}^{\rm halo} < 4.31\,\mu{\rm G}$. Moreover, we estimate the average fraction of cosmic baryons inside halos with $10 \lesssim \log_{10}(M_{\rm halo}/M_\odot) \lesssim 13.1$ to be $f_{\rm gas} = 0.45^{+0.21}_{-0.19}$. The magnetic fields inferred in this work are in good agreement with previous measurements. In contrast to previous studies that analysed FRB RMs without considering contributions from the halos of foreground and/or FRB host galaxies, we show that these halos can contribute a non-negligible amount of RM and must be taken into account when analysing future FRB samples.
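The paper's Bayesian halo-by-halo algorithm is not reproduced here. This sketch only encodes the standard textbook relations that underlie any such analysis, with hypothetical helper names: RM = 0.812 ∫ n_e B_∥ dl (rad m⁻², with n_e in cm⁻³, B_∥ in μG, dl in pc), diluted by (1 + z)⁻² when the screen sits at redshift z, and the DM-weighted mean field ⟨B_∥⟩ ≈ 1.232 RM/DM μG. The uniform-slab geometry and the sample numbers are assumptions for illustration.

```python
RM_COEFF = 0.812  # rad m^-2 per (cm^-3 * microgauss * pc)


def rm_from_slab(n_e, b_par, path_pc, z=0.0):
    """Observer-frame RM (rad m^-2) of a uniform slab at redshift z.

    n_e in cm^-3 and b_par (line-of-sight field, microgauss) are rest-frame
    values; path_pc is the rest-frame path length in pc. The (1 + z)^-2
    factor converts the rest-frame RM to the observer frame.
    """
    return RM_COEFF * n_e * b_par * path_pc / (1.0 + z) ** 2


def mean_b_parallel(rm, dm):
    """Electron-density-weighted mean B_parallel (microgauss) from RM and DM.

    rm in rad m^-2 and dm in pc cm^-3 must refer to the same (rest) frame.
    1 / 0.812 is about 1.232.
    """
    return rm / (RM_COEFF * dm)


# A halo-like slab: n_e = 1e-3 cm^-3, B = 1 microgauss, 100 kpc path.
rm_local = rm_from_slab(1e-3, 1.0, 1e5)         # about 81.2 rad m^-2
rm_at_z1 = rm_from_slab(1e-3, 1.0, 1e5, z=1.0)  # about 20.3 rad m^-2 as observed
dm_local = 1e-3 * 1e5                           # DM = n_e * L = 100 pc cm^-3
print(rm_local, rm_at_z1, mean_b_parallel(rm_local, dm_local))
```

The (1 + z)⁻² dilution is one reason distant intervening halos contribute less observed RM than nearby ones with the same field, which is why a halo-by-halo accounting is needed.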

Assessing image-text alignment models such as CLIP is crucial for bridging visual and linguistic representations. Yet existing benchmarks rely on rule-based perturbations or short captions, limiting their ability to measure fine-grained alignment. We introduce AlignBench, a benchmark that provides a new indicator of image-text alignment by evaluating detailed image-caption pairs generated by diverse image-to-text and text-to-image models. Each sentence is annotated for correctness, enabling direct assessment of VLMs as alignment evaluators. Benchmarking a wide range of decoder-based VLMs reveals three key findings: (i) CLIP-based models, even those tailored for compositional reasoning, remain nearly blind; (ii) detectors systematically over-score early sentences; and (iii) they show strong self-preference, favoring their own outputs and harming detection performance. Our project page will be available at this https URL.

Chinese Academy of Sciences, RIKEN Interdisciplinary Theoretical and Mathematical Sciences (iTHEMS), Hiroshima University, Chuo University, The University of Osaka, Kavli Institute for Theoretical Sciences, International Institute for Sustainability with Knotted Chiral Meta Matter (WPI-SKCM2), Sanyo-Onoda City University

In this paper, we numerically investigate whether quantum thermalization occurs during the time evolution induced by a non-local Hamiltonian whose spectrum exhibits integrability. This non-local, integrable Hamiltonian is constructed by combining two types of integrable Hamiltonians. From the time dependence of entanglement entropy and mutual information, we find that non-locality can evolve the system into a typical state. On the other hand, the time dependence of logarithmic negativity shows that non-locality can destroy quantum correlations. These findings suggest that quantum thermalization induced by a non-local Hamiltonian does not require the system to be quantum chaotic.

07 Oct 2025

We propose two Clifford+T synthesis algorithms that are optimal with respect to T-count. The first algorithm, called deterministic synthesis, approximates any single-qubit unitary by a single-qubit Clifford+T circuit with the minimum T-count. The second algorithm, called probabilistic synthesis, approximates any single-qubit unitary by a probabilistic mixture of single-qubit Clifford+T circuits with the minimum T-count. For most single-qubit unitaries, the runtimes of deterministic synthesis and probabilistic synthesis are $\varepsilon^{-1/2-o(1)}$ and $\varepsilon^{-1/4-o(1)}$, respectively, for an approximation error $\varepsilon$. Although this complexity is exponential in the input size, we demonstrate that our algorithms run in practical time at $\varepsilon \approx 10^{-15}$ and $\varepsilon \approx 10^{-22}$, respectively. Furthermore, we show that, for most single-qubit unitaries, the deterministic synthesis algorithm requires at most $3\log_2(1/\varepsilon) + o(\log_2(1/\varepsilon))$ T gates, and the probabilistic synthesis algorithm requires at most $1.5\log_2(1/\varepsilon) + o(\log_2(1/\varepsilon))$ T gates. Remarkably, the complexity analyses in this work do not rely on any numerical or number-theoretic conjectures.
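For a feel of the numbers, this sketch evaluates only the leading terms of the stated bounds at the two quoted precisions. It drops the o(·) corrections, which are not negligible in general, so the results are order-of-magnitude figures rather than the T-counts or runtimes of the authors' implementation; the runtime is a dimensionless count with unspecified units. The helper names are hypothetical.

```python
import math


def leading_t_count(eps, coeff):
    """Leading term coeff * log2(1/eps) of the T-count bound; o(log2(1/eps)) is dropped."""
    return coeff * math.log2(1.0 / eps)


def leading_runtime(eps, exponent):
    """Leading term eps**(-exponent) of the runtime; the o(1) in the exponent is dropped."""
    return eps ** (-exponent)


det = (leading_t_count(1e-15, 3.0), leading_runtime(1e-15, 0.5))
prob = (leading_t_count(1e-22, 1.5), leading_runtime(1e-22, 0.25))
print(det)   # roughly (149.5, 3.2e7)
print(prob)  # roughly (109.6, 3.2e5)
```

The comparison shows why the probabilistic variant can target a much smaller ε: halving the runtime exponent keeps the leading runtime term smaller even at ε ≈ 10⁻²².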
