Dakota State University

06 Jan 2025

The Dead Internet Theory (DIT) suggests that much of today's internet, particularly social media, is dominated by non-human activity, AI-generated content, and corporate agendas, leading to a decline in authentic human interaction. This study explores the origins, core claims, and implications of DIT, emphasizing its relevance in the context of social media platforms. The theory emerged as a response to the perceived homogenization of online spaces, highlighting issues like the proliferation of bots, algorithmically generated content, and the prioritization of engagement metrics over genuine user interaction. AI technologies play a central role in this phenomenon, as social media platforms increasingly use algorithms and machine learning to curate content, drive engagement, and maximize advertising revenue. While these tools enhance scalability and personalization, they also prioritize virality and consumption over authentic communication, contributing to the erosion of trust, the loss of content diversity, and a dehumanized internet experience. This study redefines DIT in the context of social media, proposing that the commodification of content consumption for revenue has taken precedence over meaningful human connectivity. By focusing on engagement metrics, platforms foster a sense of artificiality and disconnection, underscoring the need for human-centric approaches to revive authentic online interaction and community building.

A survey extensively categorizes and analyzes existing Temporal Knowledge Graph Completion (TKGC) methods, providing a structured overview of interpolation and extrapolation techniques, benchmark datasets, and evaluation protocols. It identifies current challenges and proposes future research directions, serving as a foundational resource for the field.

Insider threats are a growing organizational problem due to the complexity of identifying their technical and behavioral elements. A large body of research is dedicated to the study of insider threats from technological, psychological, and educational perspectives. However, research in this domain has generally depended on datasets that are static and limited in access, which restricts the development of adaptive detection models. This study introduces a novel, ethically grounded approach that uses the large language model (LLM) Claude Sonnet 3.7 to dynamically synthesize syslog messages, some of which contain indicators of insider threat scenarios. The messages reflect real-world data distributions by being highly imbalanced (1% insider threats). The syslogs were analyzed for insider threats by both Sonnet 3.7 and GPT-4o, with their performance evaluated through statistical metrics including accuracy, precision, recall, F1, specificity, FAR, MCC, and ROC AUC. Sonnet 3.7 consistently outperformed GPT-4o across nearly all metrics, particularly in reducing false alarms and improving detection accuracy. The results show strong promise for the use of LLMs in synthetic dataset generation and insider threat detection.
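As a rough illustration of the threshold-based metrics named above, the following sketch derives them from confusion-matrix counts. The function name `binary_metrics` and the example counts are hypothetical, and FAR is assumed here to mean the false alarm rate FP / (FP + TN); the study's own definitions and data are not given in this abstract. ROC AUC is omitted because it needs ranked scores rather than counts.

```python
import math

def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute common binary-classification metrics from confusion-matrix counts."""
    def safe_div(num: float, den: float) -> float:
        # Return 0.0 instead of raising when a denominator is empty.
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": safe_div(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
        "specificity": safe_div(tn, tn + fp),
        # Assumption: FAR is the false alarm (false positive) rate.
        "far": safe_div(fp, fp + tn),
        "mcc": safe_div(tp * tn - fp * fn, mcc_den),
    }

# Hypothetical counts for 1,000 log lines with a 1% positive rate.
metrics = binary_metrics(tp=8, fp=20, tn=970, fn=2)
```

With counts this imbalanced, accuracy stays high even when precision is low, which is one reason a study of this kind would report MCC and FAR alongside accuracy.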

Influence maximization (IM) is formulated as selecting a set of initial users from a social network to maximize the expected number of influenced users. Researchers have made great progress in designing various traditional methods, and their theoretical design and performance gain are close to a limit. In the past few years, learning-based IM methods have emerged to achieve stronger generalization ability to unknown graphs than traditional ones. However, the development of learning-based IM methods is still limited by fundamental obstacles, including 1) the difficulty of effectively solving the objective function; 2) the difficulty of characterizing the diversified underlying diffusion patterns; and 3) the difficulty of adapting the solution under various node-centrality-constrained IM variants. To cope with the above challenges, we design a novel framework DeepIM to generatively characterize the latent representation of seed sets, and we propose to learn the diversified information diffusion pattern in a data-driven and end-to-end manner. Finally, we design a novel objective function to infer optimal seed sets under flexible node-centrality-based budget constraints. Extensive analyses are conducted over both synthetic and real-world datasets to demonstrate the overall performance of DeepIM. The code and data are available at: this https URL
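For readers new to the problem, the sketch below shows the kind of traditional baseline the abstract contrasts with: greedy seed selection with Monte Carlo estimates of spread under an independent cascade model. It is not DeepIM. The diffusion model, the function names, and the toy graph are all assumptions made for illustration.

```python
import random

def simulate_ic(graph, seeds, prob, rng):
    """Run one independent-cascade simulation; return the number of activated nodes."""
    active = set(seeds)
    frontier = list(seeds)
    while frontier:
        next_frontier = []
        for node in frontier:
            for neighbor in graph.get(node, []):
                # Each newly active node gets one chance to activate each neighbor.
                if neighbor not in active and rng.random() < prob:
                    active.add(neighbor)
                    next_frontier.append(neighbor)
        frontier = next_frontier
    return len(active)

def expected_spread(graph, seeds, prob, runs, rng):
    """Average activated-node count over several simulations."""
    return sum(simulate_ic(graph, seeds, prob, rng) for _ in range(runs)) / runs

def greedy_seed_selection(graph, budget, prob=0.1, runs=200, seed=0):
    """Pick `budget` seeds, each time adding the node with the best estimated spread."""
    rng = random.Random(seed)
    nodes = set(graph) | {v for nbrs in graph.values() for v in nbrs}
    chosen = []
    for _ in range(budget):
        best_node, best_spread = None, -1.0
        for candidate in sorted(nodes - set(chosen)):
            spread = expected_spread(graph, chosen + [candidate], prob, runs, rng)
            if spread > best_spread:
                best_node, best_spread = candidate, spread
        chosen.append(best_node)
    return chosen

# Hypothetical directed graph as an adjacency list.
toy_graph = {"a": ["b", "c"], "b": ["d"], "c": [], "d": [], "e": ["f"], "f": []}
# prob=1.0 makes the cascade deterministic, so one run per estimate is enough.
seeds = greedy_seed_selection(toy_graph, budget=2, prob=1.0, runs=1)
```

Every greedy step re-simulates the cascade for each candidate node, which is costly on large graphs and must be repeated from scratch for each new graph.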

01 Aug 2025

This study evaluates the ability of GPT-4o to autonomously solve beginner-level offensive security tasks by connecting the model to OverTheWire's Bandit capture-the-flag game. Of the 25 levels that were technically compatible with a single-command SSH framework, GPT-4o solved 18 unaided and another two after minimal prompt hints for an overall 80% success rate. The model excelled at single-step challenges that involved Linux filesystem navigation, data extraction or decoding, and straightforward networking. The approach often produced the correct command in one shot and at a human-surpassing speed. Failures involved multi-command scenarios that required persistent working directories, complex network reconnaissance, daemon creation, or interaction with non-standard shells. These limitations highlight current architectural deficiencies rather than a lack of general exploit knowledge. The results demonstrate that large language models (LLMs) can automate a substantial portion of novice penetration-testing workflow, potentially lowering the expertise barrier for attackers and offering productivity gains for defenders who use LLMs as rapid reconnaissance aides. Further, the unsolved tasks reveal specific areas where secure-by-design environments might frustrate simple LLM-driven attacks, informing future hardening strategies. Beyond offensive cybersecurity applications, results suggest the potential to integrate LLMs into cybersecurity education as practice aids.

This study investigates the meta-issues surrounding social media, which, while theoretically designed to enhance social interactions and improve our social lives by facilitating the sharing of personal experiences and life events, often results in adverse psychological impacts. Our investigation reveals a paradoxical outcome: rather than fostering closer relationships and improving social lives, the algorithms and structures that underlie social media platforms inadvertently contribute to a profound psychological impact on individuals, influencing them in unforeseen ways. This phenomenon is particularly pronounced among teenagers, who are disproportionately affected by curated online personas, peer pressure to present a perfect digital image, and the constant bombardment of notifications and updates that characterize their social media experience. As such, we issue a call to action for policymakers, platform developers, and educators to prioritize the well-being of teenagers in the digital age and work towards creating secure and safe social media platforms that protect the young from harm, online harassment, and exploitation.

Accurately modeling malware propagation is essential for designing effective cybersecurity defenses, particularly against adaptive threats that evolve in real time. While traditional epidemiological models and recent neural approaches offer useful foundations, they often fail to fully capture the nonlinear feedback mechanisms present in real-world networks. In this work, we apply scientific machine learning to malware modeling by evaluating three approaches: classical Ordinary Differential Equations (ODEs), Universal Differential Equations (UDEs), and Neural ODEs. Using data from the Code Red worm outbreak, we show that the UDE approach reduces prediction error by 44% compared to both traditional and neural baselines, while preserving interpretability. We introduce a symbolic recovery method that transforms the learned neural feedback into explicit mathematical expressions, revealing suppression mechanisms such as network saturation, security response, and malware variant evolution. Our results demonstrate that hybrid physics-informed models can outperform both purely analytical and purely neural approaches, offering improved predictive accuracy and deeper insight into the dynamics of malware spread. These findings support the development of early warning systems, efficient outbreak response strategies, and targeted cyber defense interventions.

Researchers from Dakota State University developed an AI framework integrating drone footage, a Video Restoration Transformer (VRT) for super-resolution, and Google's Gemma3:27b Visual Language Model (VLM) for automated structural damage detection. This system provides human-interpretable assessments, improving efficiency and safety in post-disaster scenarios by accurately classifying damage and offering natural language explanations.

State-sponsored information operations (IOs) increasingly influence global discourse on social media platforms, yet their emotional and rhetorical strategies remain inadequately characterized in scientific literature. This study presents the first comprehensive analysis of toxic language deployment within such campaigns, examining 56 million posts from over 42 thousand accounts linked to 18 distinct geopolitical entities on X/Twitter. Using Google's Perspective API, we systematically detect and quantify six categories of toxic content and analyze their distribution across national origins, linguistic structures, and engagement metrics, providing essential information regarding the underlying patterns of such operations. Our findings reveal that while toxic content constitutes only 1.53% of all posts, it is associated with disproportionately high engagement and appears to be strategically deployed in specific geopolitical contexts. Notably, toxic content originating from Russian influence operations receives significantly higher user engagement than influence operations from any other country in our dataset. Our code is available at this https URL.

This paper presents a multi-cloud networking architecture built on zero trust principles and micro-segmentation to provide secure connectivity with authentication, authorization, and encryption in transit. The proposed design includes a multi-cloud network that supports a wide range of applications and workload use cases, and compute resources including containers, virtual machines, and cloud-native services such as Infrastructure as a Service (IaaS) and Platform as a Service (PaaS). Furthermore, open-source tools provide flexibility, agility, and independence from lock-in to a single vendor's technology. The paper provides a secure architecture with micro-segmentation and follows zero trust principles to solve multi-fold security and operational challenges.

Unikernels, an evolution of LibOSs, are emerging as a virtualization technology to rival those currently used by cloud providers. Unikernels combine the user and kernel space into one "uni"fied memory space and omit functionality that is not necessary for their application to run, thus drastically reducing the required resources. The removed functionality, however, is far-reaching and includes components that have become common security technologies such as Address Space Layout Randomization (ASLR), Data Execution Prevention (DEP), and Non-executable bits (NX bits). This raises questions about the real-world security of unikernels. This research presents a quantitative methodology using TF-IDF to analyze the focus of security discussions within unikernel research literature. Based on a corpus of 33 unikernel-related papers spanning 2013-2023, our analysis found that Memory Protection Extensions and Data Execution Prevention were the least frequently occurring topics, while SGX was the most frequent topic. The findings quantify priorities and assumptions in unikernel security research, bringing to light potential risks from underexplored attack surfaces. The quantitative approach is broadly applicable for revealing trends and gaps in niche security domains.
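To make the TF-IDF step concrete, here is a minimal sketch using raw term frequency and a log(N/df) inverse document frequency. The exact weighting variant, tokenization, and topic vocabulary used in the study are not stated in this abstract, so the function `tf_idf` and the three-document toy corpus are assumptions for illustration only.

```python
import math
from collections import Counter

def tf_idf(documents):
    """Return one {term: score} dict per document using raw TF and log(N/df) IDF."""
    tokenized = [doc.lower().split() for doc in documents]
    # Document frequency: in how many documents each term appears at least once.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    n_docs = len(tokenized)
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        scores.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores

# Hypothetical miniature corpus standing in for paper texts.
corpus = [
    "unikernel aslr aslr missing",
    "unikernel sgx enclave sgx",
    "unikernel dep missing",
]
weights = tf_idf(corpus)
```

A term that appears in every document, such as "unikernel" here, scores zero, so the weights highlight what distinguishes one paper's security focus from the rest of the corpus.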

Digital engineering practices offer significant yet underutilized potential for improving information assurance and system lifecycle management. This paper examines how capabilities like model-based engineering, digital threads, and integrated product lifecycles can address gaps in prevailing frameworks. A reference model demonstrates applying digital engineering techniques to a reference information system, exhibiting enhanced traceability, risk visibility, accuracy, and integration. The model links strategic needs to requirements and architecture while reusing authoritative elements across views. Analysis of the model shows digital engineering closes gaps in compliance, monitoring, change management, and risk assessment. Findings indicate purposeful digital engineering adoption could transform cybersecurity, operations, service delivery, and system governance through comprehensive digital system representations. This research provides a foundation for maturing application of digital engineering for information systems as organizations modernize infrastructure and pursue digital transformation.

17 Apr 2023

Domain Name System (DNS) is the backbone of the Internet. However, threat actors have abused the antiquated protocol to facilitate command-and-control (C2) actions, to tunnel, or to exfiltrate sensitive information in novel ways. The FireEye breach and SolarWinds intrusions of late 2020 demonstrated the sophistication of hacker groups. Researchers were eager to reverse-engineer the malware and to decode the encrypted traffic. Noticeably, organizations were keen on being first to "solve the puzzle". Dr. Eric Cole of SANS Institute routinely expressed that "prevention is ideal, but detection is a must". Detection analytics may not always provide the underlying context in encrypted traffic, but will at least give defenders a fighting chance to detect the anomaly. SUNBURST is an open-source moniker for the backdoor that affected SolarWinds Orion. Analysis of the malware alongside security vendor research shows that a possible single point of failure in the C2 phase of the Cyber Kill Chain provides an avenue for defenders to exploit and detect the activity itself. One small chance is better than none. The assumption is that encryption increases entropy in strings. SUNBURST relied on encryption to exfiltrate data through DNS queries, prepending the encrypted strings to registered Fully-Qualified Domain Names (FQDNs). These FQDNs were typo-squatted to mimic Amazon Web Services (AWS) domains. SUNBURST detection is possible through a simple one-variable t-test across all DNS logs for a given day. The detection code is located on GitHub (this https URL).
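The entropy assumption and the t-test can be sketched as follows. This is not the author's detection code: the choice of feature (Shannon entropy of the leftmost DNS label), the baseline, and every sample string below are made up for illustration, and the helper names are hypothetical.

```python
import math
from collections import Counter
from statistics import mean, stdev

def shannon_entropy(text: str) -> float:
    """Shannon entropy of a string in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    n = len(text)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def one_sample_t(sample, baseline_mean):
    """t statistic for the null hypothesis that the sample mean equals baseline_mean."""
    spread = stdev(sample)
    if spread == 0:
        raise ValueError("sample has zero variance; t statistic is undefined")
    return (mean(sample) - baseline_mean) / (spread / math.sqrt(len(sample)))

# Made-up leftmost labels from queried FQDNs (not real indicators).
benign = ["www", "mail", "login", "static", "images", "api"]
suspect = ["q7x2m9ka0dfz3lw8", "b4n1vj6ty8cu5hr0b4", "z9e3pk7wd2gs", "h5t8xq1rn4ob7yc2m6"]

baseline = mean(shannon_entropy(label) for label in benign)
t_stat = one_sample_t([shannon_entropy(label) for label in suspect], baseline)
```

A large positive `t_stat` indicates that the suspect labels carry more entropy per character than the baseline, which is the signal the abstract's assumption predicts for encrypted payloads. In practice the statistic would be compared against a critical value for the chosen significance level.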

CEKER: A Generalizable LLM Framework for Literature Analysis with a Case Study in Unikernel Security


Literature reviews are a critical component of formulating and justifying new research, but are a manual and often time-consuming process. This research introduces a novel, generalizable approach to literature analysis called CEKER which uses a three-step process to streamline the collection of literature, the extraction of key insights, and the summarized analysis of key trends and gaps. Leveraging Large Language Models (LLMs), this methodology represents a significant shift from traditional manual literature reviews, offering a scalable, flexible, and repeatable approach that can be applied across diverse research domains.

A case study on unikernel security illustrates CEKER's ability to generate novel insights validated against previous manual methods. CEKER's analysis highlighted reduced attack surface as the most prominent theme. Key security gaps included the absence of Address Space Layout Randomization, missing debugging tools, and limited entropy generation, all of which represent important challenges to unikernel security. The study also revealed a reliance on hypervisors as a potential attack vector and emphasized the need for dynamic security adjustments to address real-time threats.

This study investigates the prevalence and underlying causes of work-related stress and burnout among cybersecurity professionals using a quantitative survey approach guided by the Job Demands-Resources model. Analysis of responses from 50 cybersecurity practitioners reveals an alarming reality: 44% report experiencing severe work-related stress and burnout, while an additional 28% are uncertain about their condition. The demanding nature of cybersecurity roles, unrealistic expectations, and unsupportive organizational cultures emerge as primary factors fueling this crisis. Notably, 66% of respondents perceive cybersecurity jobs as more stressful than other IT positions, with 84% facing additional challenges due to the pandemic and recent high-profile breaches. The study finds that most cybersecurity experts are reluctant to report their struggles to management, perpetuating a cycle of silence and neglect. To address this critical issue, the paper recommends that organizations foster supportive work environments, implement mindfulness programs, and address systemic challenges. By prioritizing the mental health of cybersecurity professionals, organizations can cultivate a more resilient and effective workforce to protect against an ever-evolving threat landscape.

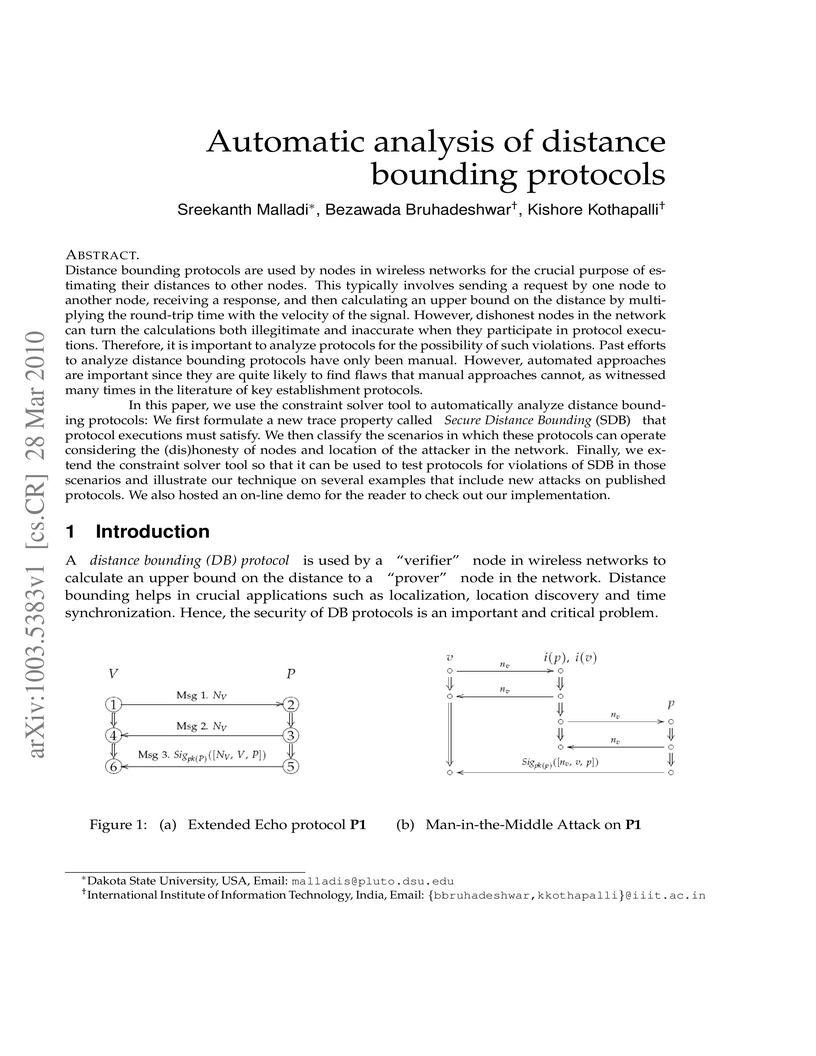

Distance bounding protocols are used by nodes in wireless networks to calculate upper bounds on their distances to other nodes. However, dishonest nodes in the network can render the calculations both illegitimate and inaccurate when they participate in protocol executions. It is important to analyze protocols for the possibility of such violations. Past efforts to analyze distance bounding protocols have only been manual. However, automated approaches are important since they are quite likely to find flaws that manual approaches cannot, as witnessed in the literature on the analysis of key establishment protocols. In this paper, we use the constraint solver tool to automatically analyze distance bounding protocols. We first formulate a new trace property called Secure Distance Bounding (SDB) that protocol executions must satisfy. We then classify the scenarios in which these protocols can operate considering the (dis)honesty of nodes and location of the attacker in the network. Finally, we extend the constraint solver so that it can be used to test protocols for violations of SDB in these scenarios and illustrate our technique on some published protocols.

The application of deep learning-based architecture has seen a tremendous rise in recent years. For example, medical image classification using deep learning achieved breakthrough results. Convolutional Neural Networks (CNNs) are implemented predominantly in medical image classification and segmentation. On the other hand, transfer learning has emerged as a prominent supporting tool for enhancing the efficiency and accuracy of deep learning models. This paper investigates the development of CNN architectures using transfer learning techniques in the field of medical image classification using a timeline mapping model for key image classification challenges. Our findings help make an informed decision while selecting the optimum and state-of-the-art CNN architectures.

We aim to explore a more efficient way to simulate few-body dynamics on quantum computers. Instead of mapping the second quantization of the system Hamiltonian to qubit Pauli gates representation via the Jordan-Wigner transform, we propose to use the few-body Hamiltonian matrix under the statevector basis representation which is more economical on the required number of quantum registers. For a single-particle excitation state on a one-dimensional chain, Γ qubits can simulate N = 2^Γ sites, in comparison to N qubits for N sites via the Jordan-Wigner approach. A two-band diatomic tight-binding model is used to demonstrate the effectiveness of the statevector basis representation. Both one-particle and two-particle quantum circuits are constructed and some numerical tests on IBM hardware are presented.
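The qubit-count comparison can be illustrated with a small sketch. It assumes each chain site is assigned to one computational basis state, so the register needs the smallest number of qubits whose basis covers all sites. The uniform single-band chain below is a simplified stand-in for the paper's two-band diatomic model, and all function names and parameters are hypothetical.

```python
def qubits_statevector(n_sites: int) -> int:
    """Qubits needed when each site index maps to one computational basis state."""
    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 1, without float rounding.
    return max(1, (n_sites - 1).bit_length())

def qubits_jordan_wigner(n_sites: int) -> int:
    """Qubits needed when each site occupation gets its own qubit."""
    return n_sites

def chain_hamiltonian(n_sites: int, hopping: float = -1.0, onsite: float = 0.0):
    """Single-particle nearest-neighbor tight-binding matrix on an open chain."""
    h = [[0.0] * n_sites for _ in range(n_sites)]
    for i in range(n_sites):
        h[i][i] = onsite
        if i + 1 < n_sites:
            # Hopping couples neighboring sites symmetrically.
            h[i][i + 1] = h[i + 1][i] = hopping
    return h

for n in (4, 8, 64):
    print(n, qubits_statevector(n), qubits_jordan_wigner(n))
```

The matrix returned by `chain_hamiltonian` is what the basis states of the smaller register would index directly in the single-particle case; how it is compiled into gates is outside the scope of this sketch.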

06 Jan 2025

This study aims to investigate the factors influencing the Human Development Index (HDI). Five variables (GDP per capita, health expenditure, education expenditure, infant mortality rate per 1,000 live births, and average years of schooling) were analyzed to develop a regression model assessing their impact on HDI. The results indicate that GDP per capita, infant mortality rate, and average years of schooling are significant predictors of HDI. Specifically, GDP per capita and average years of schooling are both positively associated with HDI, while infant mortality rate is negatively associated with HDI.

State-sponsored influence operations (SIOs) have become a pervasive and complex challenge in the digital age, particularly on social media platforms where information spreads rapidly and with minimal oversight. These operations are strategically employed by nation-state actors to manipulate public opinion, exacerbate social divisions, and project geopolitical narratives, often through the dissemination of misleading or inflammatory content. Despite increasing awareness of their existence, the specific linguistic and emotional strategies employed by these campaigns remain underexplored. This study addresses this gap by conducting a comprehensive analysis of sentiment, emotional valence, and abusive language across 2 million tweets attributed to influence operations linked to China, Iran, and Russia, using Twitter's publicly released dataset of state-affiliated accounts. We identify distinct affective and rhetorical patterns that characterize each nation's digital propaganda. Russian campaigns predominantly deploy negative sentiment and toxic language to intensify polarization and destabilize discourse. In contrast, Iranian operations blend antagonistic and supportive tones to simultaneously incite conflict and foster ideological alignment. Chinese activities emphasize positive sentiment and emotionally neutral rhetoric to promote favorable narratives and subtly influence global perceptions. These findings reveal how state actors tailor their information warfare tactics to achieve specific geopolitical objectives through differentiated content strategies.
