ETH AI Center

Researchers from ETH Zurich developed the Robotic World Model (RWM), a framework that learns robust world models for complex robotic environments without domain-specific biases. This approach enables policies trained solely in imagination to be deployed onto physical quadrupedal and humanoid robots with zero-shot transfer, effectively bridging the sim-to-real gap for complex low-level control tasks.

We present a novel approach for differentially private data synthesis of

protected tabular datasets, a relevant task in highly sensitive domains such as

healthcare and government. Current state-of-the-art methods predominantly use

marginal-based approaches, where a dataset is generated from private estimates

of the marginals. In this paper, we introduce PrivPGD, a new generation method

for marginal-based private data synthesis, leveraging tools from optimal

transport and particle gradient descent. Our algorithm outperforms existing

methods on a large range of datasets while being highly scalable and offering

the flexibility to incorporate additional domain-specific constraints.

Large language model (LLM) developers aim for their models to be honest, helpful, and harmless. However, when faced with malicious requests, models are trained to refuse, sacrificing helpfulness. We show that frontier LLMs can develop a preference for dishonesty as a new strategy, even when other options are available. Affected models respond to harmful requests with outputs that sound harmful but are crafted to be subtly incorrect or otherwise harmless in practice. This behavior emerges with hard-to-predict variations even within models from the same model family. We find no apparent cause for the propensity to deceive, but show that more capable models are better at executing this strategy. Strategic dishonesty already has a practical impact on safety evaluations, as we show that dishonest responses fool all output-based monitors used to detect jailbreaks that we test, rendering benchmark scores unreliable. Further, strategic dishonesty can act like a honeypot against malicious users, which noticeably obfuscates prior jailbreak attacks. While output monitors fail, we show that linear probes on internal activations can be used to reliably detect strategic dishonesty. We validate probes on datasets with verifiable outcomes and by using them as steering vectors. Overall, we consider strategic dishonesty as a concrete example of a broader concern that alignment of LLMs is hard to control, especially when helpfulness and harmlessness conflict.

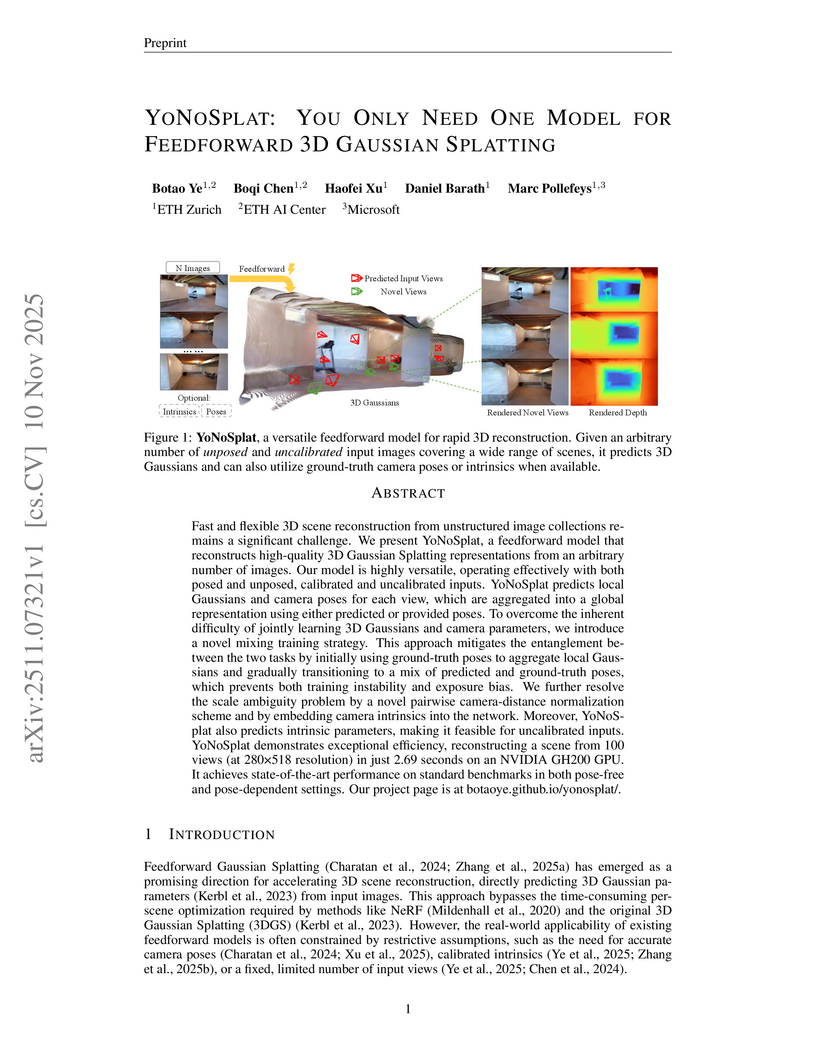

YONOSPLAT presents a single feedforward model capable of reconstructing high-fidelity 3D Gaussian Splatting representations from an arbitrary number of posed or unposed, calibrated or uncalibrated input images. The model achieves state-of-the-art novel view synthesis and significantly faster reconstruction times, completing a 100-view scene in 2.69 seconds.

A collaborative effort across prominent academic institutions and industry entities presents six architectural design patterns for building LLM agents with enhanced resistance to prompt injection attacks. The work demonstrates through diverse case studies how these patterns enable the construction of useful, application-specific agents that constrain capabilities to prevent unintended actions.

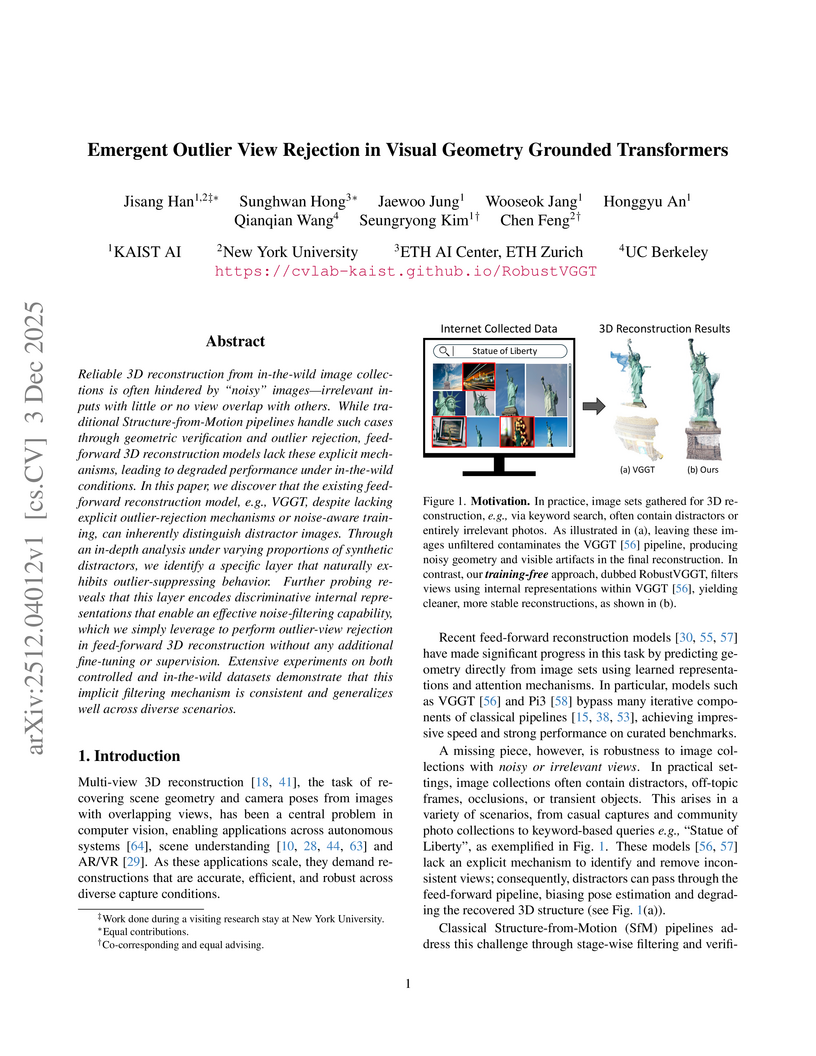

This research reveals that Visual Geometry Grounded Transformers (VGGT) develop an emergent, internal capability to distinguish geometrically consistent views from distractors. Leveraging this insight, the RobustVGGT method implements a training-free view rejection mechanism that improves 3D reconstruction robustness and accuracy, achieving superior performance on various datasets under noisy conditions compared to baselines.

This work introduces VLM-RMs, a method that leverages pre-trained Vision-Language Models like CLIP as zero-shot reward models for reinforcement learning. The approach allows complex visual tasks to be specified using single natural language sentences, enabling a MuJoCo humanoid robot to learn novel poses and classic control agents to solve tasks with high success rates, particularly when using larger VLMs and photorealistic environments.

18 Jun 2025

Researchers at ETH Zurich developed a framework that enables diffusion models to explore implicitly defined data manifolds by maximizing sample entropy. This approach allows generative models to discover novel, valid designs in low-density regions, demonstrated by increased diversity in synthetic data and more original image generations in text-to-image tasks.

University of Zurich UC Berkeley

UC Berkeley Stanford University

Stanford University Johns Hopkins UniversityUniversity of BolognaIstituto Italiano di TecnologiaJagiellonian UniversityÉcole Polytechnique Fédérale de Lausanne

Johns Hopkins UniversityUniversity of BolognaIstituto Italiano di TecnologiaJagiellonian UniversityÉcole Polytechnique Fédérale de Lausanne University of California, Santa CruzUniversity of California San FranciscoETH AI CenterIstanbul Medipol UniversityUniversity of Warmia and MazuryWarmian-Masurian Cancer Center

University of California, Santa CruzUniversity of California San FranciscoETH AI CenterIstanbul Medipol UniversityUniversity of Warmia and MazuryWarmian-Masurian Cancer Center

UC Berkeley

UC Berkeley Stanford University

Stanford University Johns Hopkins UniversityUniversity of BolognaIstituto Italiano di TecnologiaJagiellonian UniversityÉcole Polytechnique Fédérale de Lausanne

Johns Hopkins UniversityUniversity of BolognaIstituto Italiano di TecnologiaJagiellonian UniversityÉcole Polytechnique Fédérale de Lausanne University of California, Santa CruzUniversity of California San FranciscoETH AI CenterIstanbul Medipol UniversityUniversity of Warmia and MazuryWarmian-Masurian Cancer Center

University of California, Santa CruzUniversity of California San FranciscoETH AI CenterIstanbul Medipol UniversityUniversity of Warmia and MazuryWarmian-Masurian Cancer CenterEarly tumor detection save lives. Each year, more than 300 million computed tomography (CT) scans are performed worldwide, offering a vast opportunity for effective cancer screening. However, detecting small or early-stage tumors on these CT scans remains challenging, even for experts. Artificial intelligence (AI) models can assist by highlighting suspicious regions, but training such models typically requires extensive tumor masks--detailed, voxel-wise outlines of tumors manually drawn by radiologists. Drawing these masks is costly, requiring years of effort and millions of dollars. In contrast, nearly every CT scan in clinical practice is already accompanied by medical reports describing the tumor's size, number, appearance, and sometimes, pathology results--information that is rich, abundant, and often underutilized for AI training. We introduce R-Super, which trains AI to segment tumors that match their descriptions in medical reports. This approach scales AI training with large collections of readily available medical reports, substantially reducing the need for manually drawn tumor masks. When trained on 101,654 reports, AI models achieved performance comparable to those trained on 723 masks. Combining reports and masks further improved sensitivity by +13% and specificity by +8%, surpassing radiologists in detecting five of the seven tumor types. Notably, R-Super enabled segmentation of tumors in the spleen, gallbladder, prostate, bladder, uterus, and esophagus, for which no public masks or AI models previously existed. This study challenges the long-held belief that large-scale, labor-intensive tumor mask creation is indispensable, establishing a scalable and accessible path toward early detection across diverse tumor types.

We plan to release our trained models, code, and dataset at this https URL

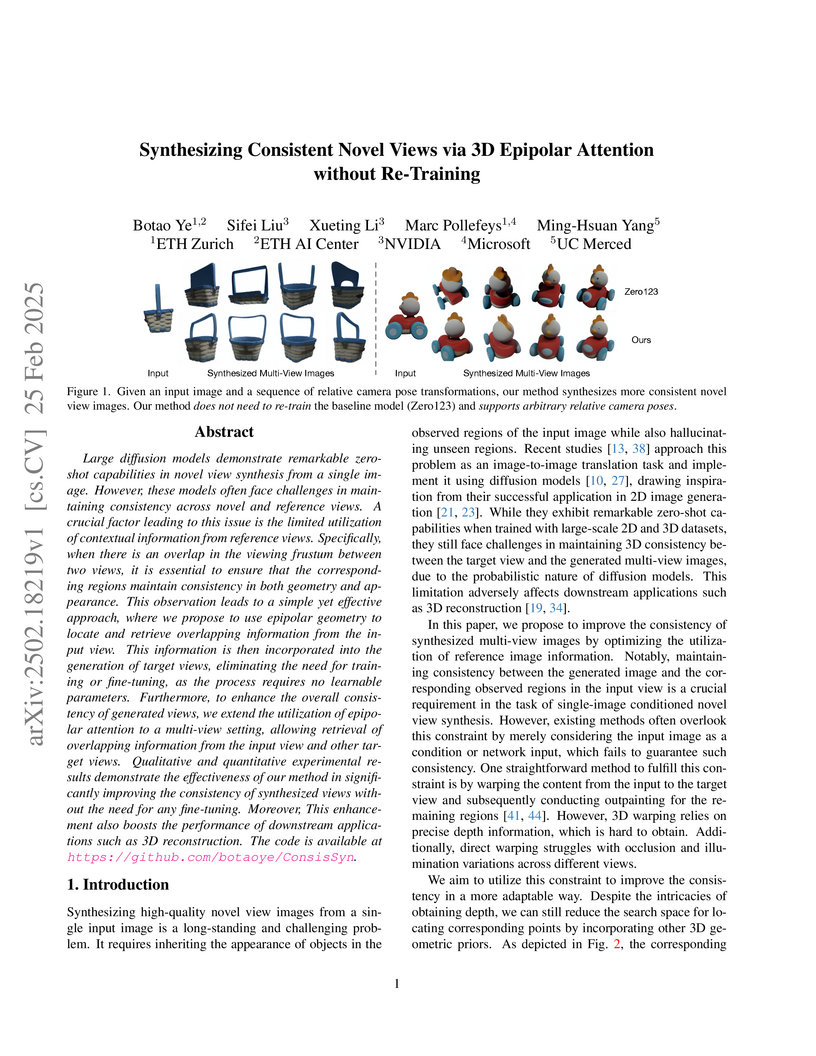

Large diffusion models demonstrate remarkable zero-shot capabilities in novel

view synthesis from a single image. However, these models often face challenges

in maintaining consistency across novel and reference views. A crucial factor

leading to this issue is the limited utilization of contextual information from

reference views. Specifically, when there is an overlap in the viewing frustum

between two views, it is essential to ensure that the corresponding regions

maintain consistency in both geometry and appearance. This observation leads to

a simple yet effective approach, where we propose to use epipolar geometry to

locate and retrieve overlapping information from the input view. This

information is then incorporated into the generation of target views,

eliminating the need for training or fine-tuning, as the process requires no

learnable parameters. Furthermore, to enhance the overall consistency of

generated views, we extend the utilization of epipolar attention to a

multi-view setting, allowing retrieval of overlapping information from the

input view and other target views. Qualitative and quantitative experimental

results demonstrate the effectiveness of our method in significantly improving

the consistency of synthesized views without the need for any fine-tuning.

Moreover, This enhancement also boosts the performance of downstream

applications such as 3D reconstruction. The code is available at

this https URL

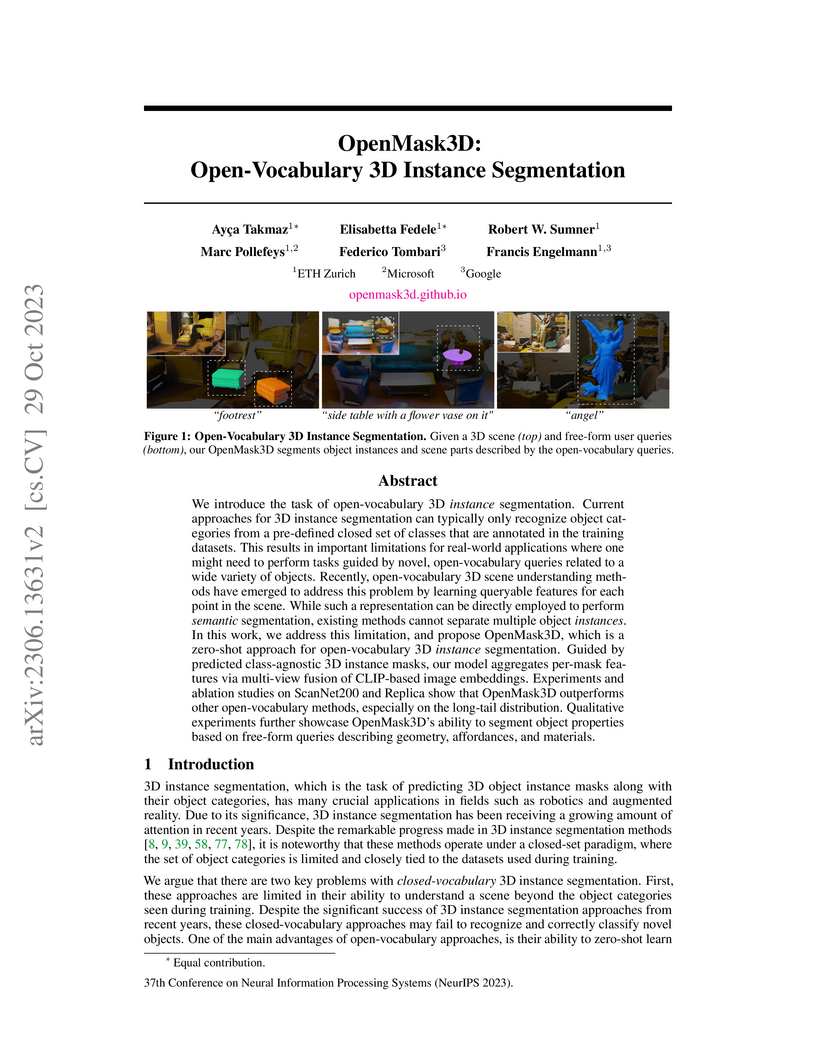

OpenMask3D introduces the first zero-shot method for open-vocabulary 3D instance segmentation, enabling the identification and precise segmentation of individual 3D objects from arbitrary text queries. It achieved 15.4 AP on ScanNet200, outperforming previous point-based open-vocabulary methods, and demonstrated strong generalization capabilities to novel categories and environments.

08 Dec 2025

The pretraining-finetuning paradigm has facilitated numerous transformative advancements in artificial intelligence research in recent years. However, in the domain of reinforcement learning (RL) for robot locomotion, individual skills are often learned from scratch despite the high likelihood that some generalizable knowledge is shared across all task-specific policies belonging to the same robot embodiment. This work aims to define a paradigm for pretraining neural network models that encapsulate such knowledge and can subsequently serve as a basis for warm-starting the RL process in classic actor-critic algorithms, such as Proximal Policy Optimization (PPO). We begin with a task-agnostic exploration-based data collection algorithm to gather diverse, dynamic transition data, which is then used to train a Proprioceptive Inverse Dynamics Model (PIDM) through supervised learning. The pretrained weights are then loaded into both the actor and critic networks to warm-start the policy optimization of actual tasks. We systematically validated our proposed method with 9 distinct robot locomotion RL environments comprising 3 different robot embodiments, showing significant benefits of this initialization strategy. Our proposed approach on average improves sample efficiency by 36.9% and task performance by 7.3% compared to random initialization. We further present key ablation studies and empirical analyses that shed light on the mechanisms behind the effectiveness of this method.

Deploying reinforcement learning (RL) in robotics, industry, and health care is blocked by two obstacles: the difficulty of specifying accurate rewards and the risk of unsafe, data-hungry exploration. We address this by proposing a two-stage framework that first learns a safe initial policy from a reward-free dataset of expert demonstrations, then fine-tunes it online using preference-based human feedback. We provide the first principled analysis of this offline-to-online approach and introduce BRIDGE, a unified algorithm that integrates both signals via an uncertainty-weighted objective. We derive regret bounds that shrink with the number of offline demonstrations, explicitly connecting the quantity of offline data to online sample efficiency. We validate BRIDGE in discrete and continuous control MuJoCo environments, showing it achieves lower regret than both standalone behavioral cloning and online preference-based RL. Our work establishes a theoretical foundation for designing more sample-efficient interactive agents.

The structure of many real-world datasets is intrinsically hierarchical, making the modeling of such hierarchies a critical objective in both unsupervised and supervised machine learning. Recently, novel approaches for hierarchical clustering with deep architectures have been proposed. In this work, we take a critical perspective on this line of research and demonstrate that many approaches exhibit major limitations when applied to realistic datasets, partly due to their high computational complexity. In particular, we show that a lightweight procedure implemented on top of pre-trained non-hierarchical clustering models outperforms models designed specifically for hierarchical clustering. Our proposed approach is computationally efficient and applicable to any pre-trained clustering model that outputs logits, without requiring any fine-tuning. To highlight the generality of our findings, we illustrate how our method can also be applied in a supervised setup, recovering meaningful hierarchies from a pre-trained ImageNet classifier.

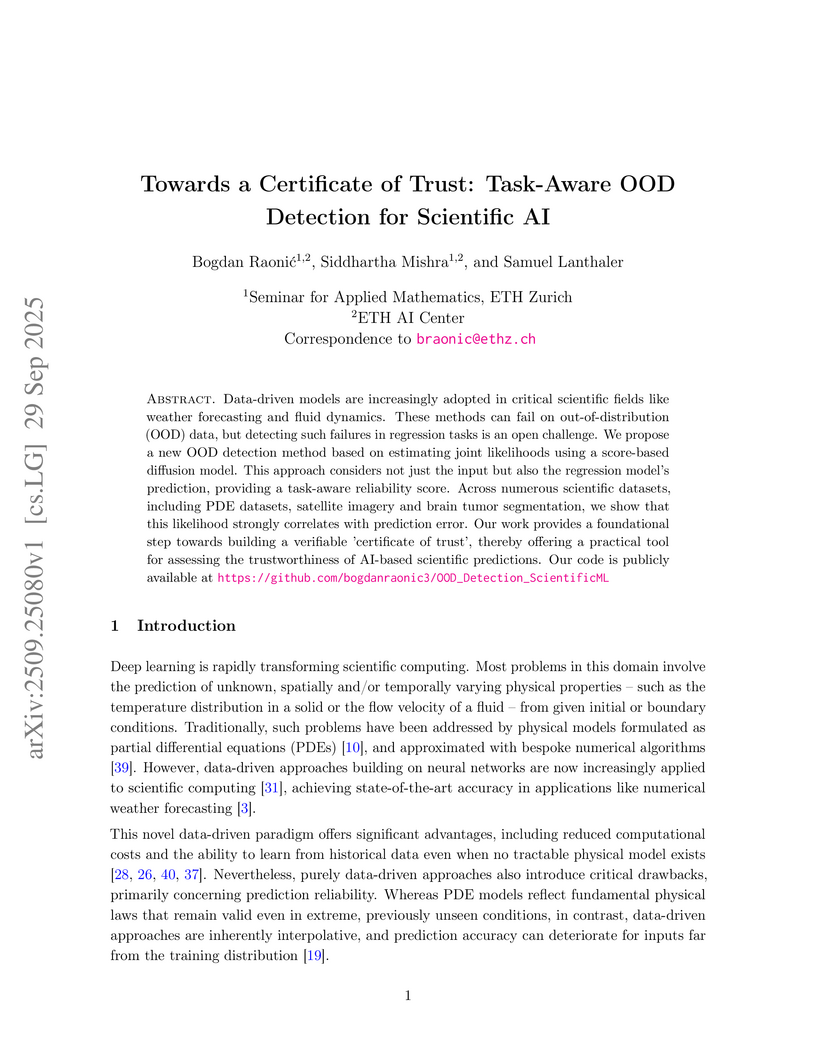

Data-driven models are increasingly adopted in critical scientific fields like weather forecasting and fluid dynamics. These methods can fail on out-of-distribution (OOD) data, but detecting such failures in regression tasks is an open challenge. We propose a new OOD detection method based on estimating joint likelihoods using a score-based diffusion model. This approach considers not just the input but also the regression model's prediction, providing a task-aware reliability score. Across numerous scientific datasets, including PDE datasets, satellite imagery and brain tumor segmentation, we show that this likelihood strongly correlates with prediction error. Our work provides a foundational step towards building a verifiable 'certificate of trust', thereby offering a practical tool for assessing the trustworthiness of AI-based scientific predictions. Our code is publicly available at this https URL

We study the potential of large language models (LLMs) as proxies for humans

to simplify preference elicitation (PE) in combinatorial assignment. While

traditional PE methods rely on iterative queries to capture preferences, LLMs

offer a one-shot alternative with reduced human effort. We propose a framework

for LLM proxies that can work in tandem with SOTA ML-powered preference

elicitation schemes. Our framework handles the novel challenges introduced by

LLMs, such as response variability and increased computational costs. We

experimentally evaluate the efficiency of LLM proxies against human queries in

the well-studied course allocation domain, and we investigate the model

capabilities required for success. We find that our approach improves

allocative efficiency by up to 20%, and these results are robust across

different LLMs and to differences in quality and accuracy of reporting.

ETH Zurich researchers develop an offline model-based reinforcement learning framework that learns robotic policies directly from real-world data without requiring physics simulators, combining uncertainty-aware dynamics modeling with penalized policy optimization to achieve successful deployment on an ANYmal D quadruped robot.

Researchers at ETH Zurich and ELLIS Institute Tübingen demonstrate that knowledge distillation's performance advantages are substantially amplified in low-data training scenarios, allowing models to achieve comparable performance with approximately three times less data than conventional label training. The work systematically re-evaluates established knowledge distillation hypotheses by introducing dataset size as a critical variable across diverse architectures and modalities.

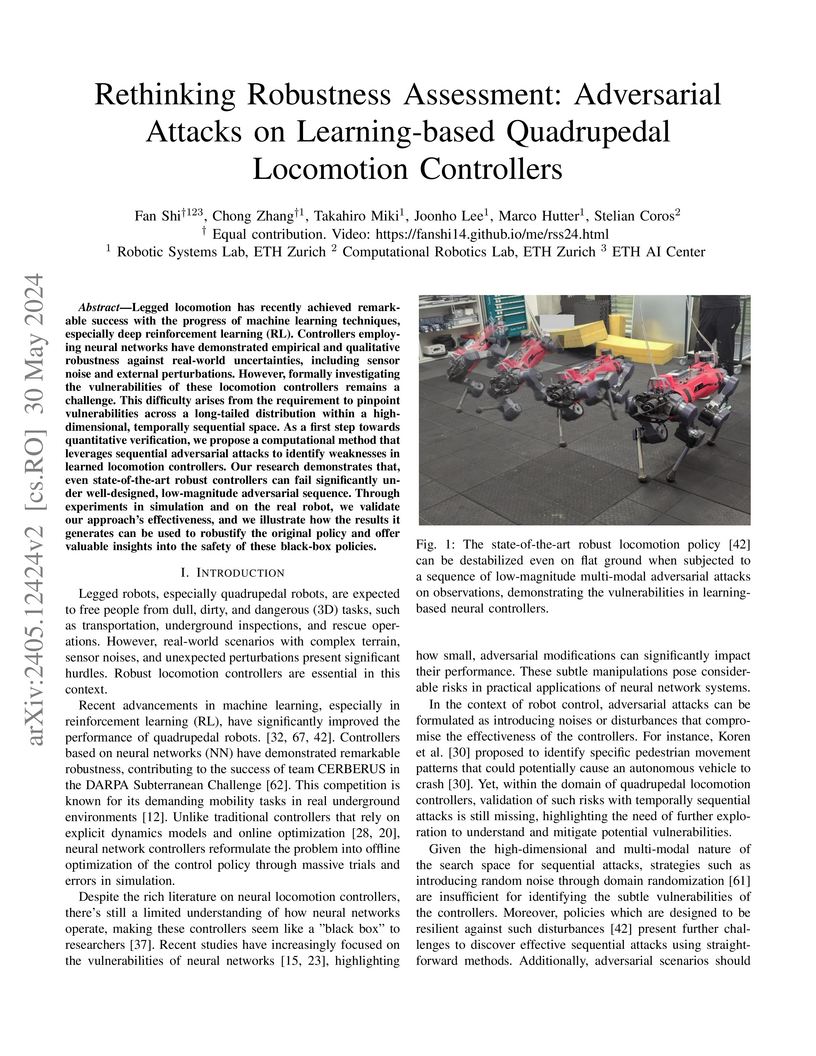

Researchers at ETH Zurich developed a computational method to identify subtle, sequential adversarial attacks capable of destabilizing state-of-the-art quadrupedal locomotion controllers, then demonstrated how these attacks can be used to robustify the policies for improved real-world performance.

Pricing algorithms have demonstrated the capability to learn tacit collusion

that is largely unaddressed by current regulations. Their increasing use in

markets, including oligopolistic industries with a history of collusion, calls

for closer examination by competition authorities. In this paper, we extend the

study of tacit collusion in learning algorithms from basic pricing games to

more complex markets characterized by perishable goods with fixed supply and

sell-by dates, such as airline tickets, perishables, and hotel rooms. We

formalize collusion within this framework and introduce a metric based on price

levels under both the competitive (Nash) equilibrium and collusive

(monopolistic) optimum. Since no analytical expressions for these price levels

exist, we propose an efficient computational approach to derive them. Through

experiments, we demonstrate that deep reinforcement learning agents can learn

to collude in this more complex domain. Additionally, we analyze the underlying

mechanisms and structures of the collusive strategies these agents adopt.

There are no more papers matching your filters at the moment.