Fujitsu Laboratories Ltd.

The paper introduces TreeFARMS, a method that fully enumerates the Rashomon set for sparse decision trees on real-world datasets. This approach enables the discovery of all almost-optimal models, demonstrating that numerous structurally diverse trees can achieve similar predictive performance, and facilitates the exact computation of Model Class Reliance.

A fundamental problem in drug discovery is to design molecules that bind to specific proteins. To tackle this problem using machine learning methods, here we propose a novel and effective framework, known as GraphBP, to generate 3D molecules that bind to given proteins by placing atoms of specific types and locations to the given binding site one by one. In particular, at each step, we first employ a 3D graph neural network to obtain geometry-aware and chemically informative representations from the intermediate contextual information. Such context includes the given binding site and atoms placed in the previous steps. Second, to preserve the desirable equivariance property, we select a local reference atom according to the designed auxiliary classifiers and then construct a local spherical coordinate system. Finally, to place a new atom, we generate its atom type and relative location w.r.t. the constructed local coordinate system via a flow model. We also consider generating the variables of interest sequentially to capture the underlying dependencies among them. Experiments demonstrate that our GraphBP is effective to generate 3D molecules with binding ability to target protein binding sites. Our implementation is available at this https URL.

Selective forgetting or removing information from deep neural networks (DNNs)

is essential for continual learning and is challenging in controlling the DNNs.

Such forgetting is crucial also in a practical sense since the deployed DNNs

may be trained on the data with outliers, poisoned by attackers, or with

leaked/sensitive information. In this paper, we formulate selective forgetting

for classification tasks at a finer level than the samples' level. We specify

the finer level based on four datasets distinguished by two conditions: whether

they contain information to be forgotten and whether they are available for the

forgetting procedure. Additionally, we reveal the need for such formulation

with the datasets by showing concrete and practical situations. Moreover, we

introduce the forgetting procedure as an optimization problem on three

criteria; the forgetting, the correction, and the remembering term.

Experimental results show that the proposed methods can make the model forget

to use specific information for classification. Notably, in specific cases, our

methods improved the model's accuracy on the datasets, which contains

information to be forgotten but is unavailable in the forgetting procedure.

Such data are unexpectedly found and misclassified in actual situations.

Finding a ground state of a given Hamiltonian of an Ising model on a graph

G=(V,E) is an important but hard problem. The standard approach for this kind

of problem is the application of algorithms that rely on single-spin-flip

Markov chain Monte Carlo methods, such as the simulated annealing based on

Glauber or Metropolis dynamics. In this paper, we investigate a particular kind

of stochastic cellular automata, in which all spins are updated independently

and simultaneously. We prove that (i) if the temperature is fixed sufficiently

high, then the mixing time is at most of order log∣V∣, and that (ii) if the

temperature drops in time n as 1/logn, then the limiting measure is

uniformly distributed over the ground states. We also provide some simulations

of the algorithms studied in this paper implemented on a GPU and show their

superior performance compared to the conventional simulated annealing.

Learning causal structures from observational data is a fundamental problem

facing important computational challenges when the number of variables is

large. In the context of linear structural equation models (SEMs), this paper

focuses on learning causal structures from the inverse covariance matrix. The

proposed method, called ICID for Independence-preserving Decomposition from

Inverse Covariance matrix, is based on continuous optimization of a matrix

decomposition model that preserves the nonzero patterns of the inverse

covariance matrix. Through theoretical and empirical evidences, we show that

ICID efficiently identifies the sought directed acyclic graph (DAG) assuming

the knowledge of noise variances. Moreover, ICID is shown empirically to be

robust under bounded misspecification of noise variances in the case where the

noise variances are non-equal. The proposed method enjoys a low complexity, as

reflected by its time efficiency in the experiments, and also enables a novel

regularization scheme that yields highly accurate solutions on the Simulated

fMRI data (Smith et al., 2011) in comparison with state-of-the-art algorithms.

In this study, we report the successful execution of in-air knotting of rope

using a dual-arm two-finger robot based on deep learning. Owing to its

flexibility, the state of the rope was in constant flux during the operation of

the robot. This required the robot control system to dynamically correspond to

the state of the object at all times. However, a manual description of

appropriate robot motions corresponding to all object states is difficult to be

prepared in advance. To resolve this issue, we constructed a model that

instructed the robot to perform bowknots and overhand knots based on two deep

neural networks trained using the data gathered from its sensorimotor,

including visual and proximity sensors. The resultant model was verified to be

capable of predicting the appropriate robot motions based on the sensory

information available online. In addition, we designed certain task motions

based on the Ian knot method using the dual-arm two-fingers robot. The designed

knotting motions do not require a dedicated workbench or robot hand, thereby

enhancing the versatility of the proposed method. Finally, experiments were

performed to estimate the knotting performance of the real robot while

executing overhand knots and bowknots on rope and its success rate. The

experimental results established the effectiveness and high performance of the

proposed method.

A New Fast Weighted All-pairs Shortest Path Search Algorithm Based on Pruning by Shortest Path Trees

A New Fast Weighted All-pairs Shortest Path Search Algorithm Based on Pruning by Shortest Path Trees

Recently we submitted a paper, whose title is A New Fast Unweighted All-pairs

Shortest Path Search Algorithm Based on Pruning by Shortest Path Trees, to

arXiv. This is related to unweighted graphs. This paper also presents a new

fast all-pairs shortest path algorithm for weighted graph based on the same

idea. In Dijkstra algorithm which is said to be fast in weighted graphs, the

average number of accesses to adjacent vertices (expressed by {\alpha}) is

about equal to the average degree of the graph. On the other hand, our

algorithm utilizes the shortest path trees of adjacent vertices of each source

vertex in the same manner as the algorithm for unweighted graphs, and reduce

{\alpha} drastically in comparison with Dijkstra algorithm. Roughly speaking

{\alpha} is reduced to the value close to 1, because the average degree of a

tree is about 2, and one is used to come in and the other is used to go out,

although that does not hold true when the depth of the short path trees is

small. In case of weighted graphs, a problem which does not occur in unweighted

graphs occurs. It is waiting for the generation of the shortest path tree of an

adjacent vertex. Therefore, it is possible that a deadlock occurs. We prove our

algorithm is deadlock-free. We compared our algorithm with Dijkstra and Peng

algorithms. On Dijkstra algorithm ours outperforms it on speed and {\alpha}

except that Dijkstra algorithm slightly outperforms ours or they are almost the

same on CPU time in sparse scale-free graphs. The result on Peng algorithm is

as follows: In speed and {\alpha}, ours outperforms Peng algorithm in

hypercube-shaped and dense scale-free graphs, but conversely Peng algorithm

outperforms ours in sparse scale-free graphs.

The Bezier simplex fitting is a novel data modeling technique which exploits geometric structures of data to approximate the Pareto front of multi-objective optimization problems. There are two fitting methods based on different sampling strategies. The inductive skeleton fitting employs a stratified subsampling from each skeleton of a simplex, whereas the all-at-once fitting uses a non-stratified sampling which treats a simplex as a whole. In this paper, we analyze the asymptotic risks of those Bézier simplex fitting methods and derive the optimal subsample ratio for the inductive skeleton fitting. It is shown that the inductive skeleton fitting with the optimal ratio has a smaller risk when the degree of a Bezier simplex is less than three. Those results are verified numerically under small to moderate sample sizes. In addition, we provide two complementary applications of our theory: a generalized location problem and a multi-objective hyper-parameter tuning of the group lasso. The former can be represented by a Bezier simplex of degree two where the inductive skeleton fitting outperforms. The latter can be represented by a Bezier simplex of degree three where the all-at-once fitting gets an advantage.

13 Dec 2018

Multi-objective optimization problems require simultaneously optimizing two

or more objective functions. Many studies have reported that the solution set

of an M-objective optimization problem often forms an (M-1)-dimensional

topological simplex (a curved line for M=2, a curved triangle for M=3, a curved

tetrahedron for M=4, etc.). Since the dimensionality of the solution set

increases as the number of objectives grows, an exponentially large sample size

is needed to cover the solution set. To reduce the required sample size, this

paper proposes a Bezier simplex model and its fitting algorithm. These

techniques can exploit the simplex structure of the solution set and decompose

a high-dimensional surface fitting task into a sequence of low-dimensional

ones. An approximation theorem of Bezier simplices is proven. Numerical

experiments with synthetic and real-world optimization problems demonstrate

that the proposed method achieves an accurate approximation of high-dimensional

solution sets with small samples. In practice, such an approximation will be

conducted in the post-optimization process and enable a better trade-off

analysis.

Japanese character figurines are popular and have pivot position in Otaku

culture. Although numerous robots have been developed, less have focused on

otaku-culture or on embodying the anime character figurine. Therefore, we take

the first steps to bridge this gap by developing Hatsuki, which is a humanoid

robot platform with anime based design. Hatsuki's novelty lies in aesthetic

design, 2D facial expressions, and anime-style behaviors that allows it to

deliver rich interaction experiences resembling anime-characters. We explain

our design implementation process of Hatsuki, followed by our evaluations. In

order to explore user impressions and opinions towards Hatsuki, we conducted a

questionnaire in the world's largest anime-figurine event. The results indicate

that participants were generally very satisfied with Hatsuki's design, and

proposed various use case scenarios and deployment contexts for Hatsuki. The

second evaluation focused on imitation learning, as such method can provide

better interaction ability in the real world and generate rich,

context-adaptive behavior in different situations. We made Hatsuki learn 11

actions, combining voice, facial expressions and motions, through neuron

network based policy model with our proposed interface. Results show our

approach was successfully able to generate the actions through self-organized

contexts, which shows the potential for generalizing our approach in further

actions under different contexts. Lastly, we present our future research

direction for Hatsuki, and provide our conclusion.

There has been a strong demand for algorithms that can execute machine

learning as faster as possible and the speed of deep learning has accelerated

by 30 times only in the past two years. Distributed deep learning using the

large mini-batch is a key technology to address the demand and is a great

challenge as it is difficult to achieve high scalability on large clusters

without compromising accuracy. In this paper, we introduce optimization methods

which we applied to this challenge. We achieved the training time of 74.7

seconds using 2,048 GPUs on ABCI cluster applying these methods. The training

throughput is over 1.73 million images/sec and the top-1 validation accuracy is

75.08%.

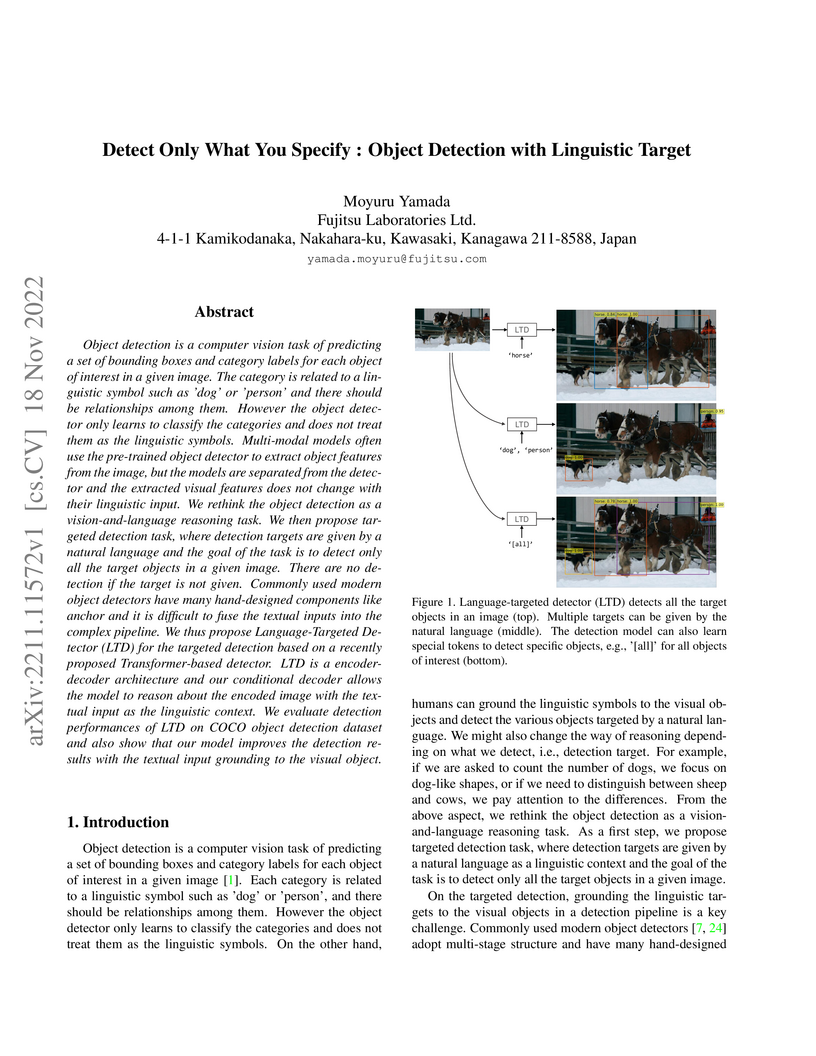

Object detection is a computer vision task of predicting a set of bounding boxes and category labels for each object of interest in a given image. The category is related to a linguistic symbol such as 'dog' or 'person' and there should be relationships among them. However the object detector only learns to classify the categories and does not treat them as the linguistic symbols. Multi-modal models often use the pre-trained object detector to extract object features from the image, but the models are separated from the detector and the extracted visual features does not change with their linguistic input. We rethink the object detection as a vision-and-language reasoning task. We then propose targeted detection task, where detection targets are given by a natural language and the goal of the task is to detect only all the target objects in a given image. There are no detection if the target is not given. Commonly used modern object detectors have many hand-designed components like anchor and it is difficult to fuse the textual inputs into the complex pipeline. We thus propose Language-Targeted Detector (LTD) for the targeted detection based on a recently proposed Transformer-based detector. LTD is a encoder-decoder architecture and our conditional decoder allows the model to reason about the encoded image with the textual input as the linguistic context. We evaluate detection performances of LTD on COCO object detection dataset and also show that our model improves the detection results with the textual input grounding to the visual object.

Variational autoencoder (VAE) estimates the posterior parameters (mean and

variance) of latent variables corresponding to each input data. While it is

used for many tasks, the transparency of the model is still an underlying

issue. This paper provides a quantitative understanding of VAE property through

the differential geometric and information-theoretic interpretations of VAE.

According to the Rate-distortion theory, the optimal transform coding is

achieved by using an orthonormal transform with PCA basis where the transform

space is isometric to the input. Considering the analogy of transform coding to

VAE, we clarify theoretically and experimentally that VAE can be mapped to an

implicit isometric embedding with a scale factor derived from the posterior

parameter. As a result, we can estimate the data probabilities in the input

space from the prior, loss metrics, and corresponding posterior parameters, and

further, the quantitative importance of each latent variable can be evaluated

like the eigenvalue of PCA.

Nowadays, systems containing components based on machine learning (ML)

methods are becoming more widespread. In order to ensure the intended behavior

of a software system, there are standards that define necessary quality aspects

of the system and its components (such as ISO/IEC 25010). Due to the different

nature of ML, we have to adjust quality aspects or add additional ones (such as

trustworthiness) and be very precise about which aspect is really relevant for

which object of interest (such as completeness of training data), and how to

objectively assess adherence to quality requirements. In this article, we

present the construction of a quality model (i.e., evaluation objects, quality

aspects, and metrics) for an ML system based on an industrial use case. This

quality model enables practitioners to specify and assess quality requirements

for such kinds of ML systems objectively. In the future, we want to learn how

the term quality differs between different types of ML systems and come up with

general guidelines for specifying and assessing qualities of ML systems.

Domain shifts in the training data are common in practical applications of

machine learning; they occur for instance when the data is coming from

different sources. Ideally, a ML model should work well independently of these

shifts, for example, by learning a domain-invariant representation. However,

common ML losses do not give strong guarantees on how consistently the ML model

performs for different domains, in particular, whether the model performs well

on a domain at the expense of its performance on another domain. In this paper,

we build new theoretical foundations for this problem, by contributing a set of

mathematical relations between classical losses for supervised ML and the

Wasserstein distance in joint space (i.e. representation and output space). We

show that classification or regression losses, when combined with a GAN-type

discriminator between domains, form an upper-bound to the true Wasserstein

distance between domains. This implies a more invariant representation and also

more stable prediction performance across domains. Theoretical results are

corroborated empirically on several image datasets. Our proposed approach

systematically produces the highest minimum classification accuracy across

domains, and the most invariant representation.

With Artificial intelligence (AI) to aid or automate decision-making advancing rapidly, a particular concern is its fairness. In order to create reliable, safe and trustworthy systems through human-centred artificial intelligence (HCAI) design, recent efforts have produced user interfaces (UIs) for AI experts to investigate the fairness of AI models. In this work, we provide a design space exploration that supports not only data scientists but also domain experts to investigate AI fairness. Using loan applications as an example, we held a series of workshops with loan officers and data scientists to elicit their requirements. We instantiated these requirements into FairHIL, a UI to support human-in-the-loop fairness investigations, and describe how this UI could be generalized to other use cases. We evaluated FairHIL through a think-aloud user study. Our work contributes better designs to investigate an AI model's fairness-and move closer towards responsible AI.

We present a new fast all-pairs shortest path algorithm for unweighted

graphs. In breadth-first search which is said to representative and fast in

unweighted graphs, the average number of accesses to adjacent vertices

(expressed by {\alpha}) is about equal to the average degree of the graph. On

the other hand, our algorithm utilizes the shortest path trees of adjacent

vertices of each source vertex, and reduce {\alpha} drastically. Roughly

speaking {\alpha} is reduced to the value close to 1, because the average

degree of a tree is about 2, and one is used to come in and the other is used

to go out, although that does not hold true when the depth of the shortest path

trees is small. We compared our algorithm with breadth-first search algorithm,

and our results showed that ours outperforms breadth-first search on speed and

{\alpha}.

07 Aug 2020

This paper studies mean-risk portfolio optimization models using the conditional value-at-risk (CVaR) as a risk measure. We also employ a cardinality constraint for limiting the number of invested assets. Solving such a cardinality-constrained mean-CVaR model is computationally challenging for two main reasons. First, this model is formulated as a mixed-integer optimization (MIO) problem because of the cardinality constraint, so solving it exactly is very hard when the number of investable assets is large. Second, the problem size depends on the number of asset return scenarios, and the computational efficiency decreases when the number of scenarios is large. To overcome these challenges, we propose a high-performance algorithm named the \emph{bilevel cutting-plane algorithm} for exactly solving the cardinality-constrained mean-CVaR portfolio optimization problem. We begin by reformulating the problem as a bilevel optimization problem and then develop a cutting-plane algorithm for solving the upper-level problem. To speed up computations for cut generation, we apply to the lower-level problem another cutting-plane algorithm for efficiently minimizing CVaR with a large number of scenarios. Moreover, we prove the convergence properties of our bilevel cutting-plane algorithm. Numerical experiments demonstrate that, compared with other MIO approaches, our algorithm can provide optimal solutions to large problem instances faster.

In many practical control applications, the performance level of a

closed-loop system degrades over time due to the change of plant

characteristics. Thus, there is a strong need for redesigning a controller

without going through the system modeling process, which is often difficult for

closed-loop systems. Reinforcement learning (RL) is one of the promising

approaches that enable model-free redesign of optimal controllers for nonlinear

dynamical systems based only on the measurement of the closed-loop system.

However, the learning process of RL usually requires a considerable number of

trial-and-error experiments using the poorly controlled system that may

accumulate wear on the plant. To overcome this limitation, we propose a

model-free two-step design approach that improves the transient learning

performance of RL in an optimal regulator redesign problem for unknown

nonlinear systems. Specifically, we first design a linear control law that

attains some degree of control performance in a model-free manner, and then,

train the nonlinear optimal control law with online RL by using the designed

linear control law in parallel. We introduce an offline RL algorithm for the

design of the linear control law and theoretically guarantee its convergence to

the LQR controller under mild assumptions. Numerical simulations show that the

proposed approach improves the transient learning performance and efficiency in

hyperparameter tuning of RL.

10 Dec 2019

Blockchain is one of the most popular distributed ledger technologies. It can solve the trust issue among enterprises. Hyperledger Fabric is a permissioned blockchain aiming at enterprise-grade business applications. However, compared to traditional distributed database solutions, one issue of blockchain based application development is the limited data access. For Fabric, the ledger data can only be retrieved by limited interfaces provided by Fabric SDKs or chaincode. In order to meet the requirements of data query and provide flexible query functions for real applications built on Fabric, this paper proposed a ledger data query platform called Ledgerdata Refiner. With ledger data analysis middleware, we provide sufficient interfaces for users to retrieve block or transaction efficiently. It is also able to track historical operations for any specific state. In addition, schemas of ledger state have been analyzed and clustered, which enable users to perform rich queries against ledger data. Finally, we validate the effectiveness of our query platform on a real application.

There are no more papers matching your filters at the moment.