Heinrich Heine University

08 Jun 2025

Sharing and reusing research artifacts, such as datasets, publications, or

methods is a fundamental part of scientific activity, where heterogeneity of

resources and metadata and the common practice of capturing information in

unstructured publications pose crucial challenges. Reproducibility of research

and finding state-of-the-art methods or data have become increasingly

challenging. In this context, the concept of Research Knowledge Graphs (RKGs)

has emerged, aiming at providing an easy to use and machine-actionable

representation of research artifacts and their relations. That is facilitated

through the use of established principles for data representation, the

consistent adoption of globally unique persistent identifiers and the reuse and

linking of vocabularies and data. This paper provides the first

conceptualisation of the RKG vision, a categorisation of in-use RKGs together

with a description of RKG building blocks and principles. We also survey

real-world RKG implementations differing with respect to scale, schema, data,

used vocabulary, and reliability of the contained data. We also characterise

different RKG construction methodologies and provide a forward-looking

perspective on the diverse applications, opportunities, and challenges

associated with the RKG vision.

Cultural phylogenies, or "trees" of culture, are typically built using methods from biology that use similarities and differences in artifacts to infer the historical relationships between the populations that produced them. While these methods have yielded important insights, particularly in linguistics, researchers continue to debate the extent to which cultural phylogenies are tree-like or reticulated due to high levels of horizontal transmission. In this study, we propose a novel method for phylogenetic reconstruction using dynamic community detection that explicitly accounts for transmission between lineages. We used data from 1,498,483 collaborative relationships between electronic music artists to construct a cultural phylogeny based on observed population structure. The results suggest that, although the phylogeny is fundamentally tree-like, horizontal transmission is common and populations never become fully isolated from one another. In addition, we found evidence that electronic music diversity has increased between 1975 and 1999. The method used in this study is available as a new R package called DynCommPhylo. Future studies should apply this method to other cultural systems such as academic publishing and film, as well as biological systems where high resolution reproductive data is available, to assess how levels of reticulation in evolution vary across domains.

In this paper, we deal with bias mitigation techniques that remove specific data points from the training set to aim for a fair representation of the population in that set. Machine learning models are trained on these pre-processed datasets, and their predictions are expected to be fair. However, such approaches may exclude relevant data, making the attained subsets less trustworthy for further usage. To enhance the trustworthiness of prior methods, we propose additional requirements and objectives that the subsets must fulfill in addition to fairness: (1) group coverage, and (2) minimal data loss. While removing entire groups may improve the measured fairness, this practice is very problematic as failing to represent every group cannot be considered fair. In our second concern, we advocate for the retention of data while minimizing discrimination. By introducing a multi-objective optimization problem that considers fairness and data loss, we propose a methodology to find Pareto-optimal solutions that balance these objectives. By identifying such solutions, users can make informed decisions about the trade-off between fairness and data quality and select the most suitable subset for their application. Our method is distributed as a Python package via PyPI under the name FairDo (this https URL).

We present a general methodology that learns to classify images without labels by leveraging pretrained feature extractors. Our approach involves self-distillation training of clustering heads based on the fact that nearest neighbours in the pretrained feature space are likely to share the same label. We propose a novel objective that learns associations between image features by introducing a variant of pointwise mutual information together with instance weighting. We demonstrate that the proposed objective is able to attenuate the effect of false positive pairs while efficiently exploiting the structure in the pretrained feature space. As a result, we improve the clustering accuracy over k-means on 17 different pretrained models by 6.1\% and 12.2\% on ImageNet and CIFAR100, respectively. Finally, using self-supervised vision transformers, we achieve a clustering accuracy of 61.6\% on ImageNet. The code is available at this https URL.

12 Mar 2025

This research focuses on evaluating and enhancing data readiness for the

development of an Artificial Intelligence (AI)-based Clinical Decision Support

System (CDSS) in the context of skin cancer treatment. The study, conducted at

the Skin Tumor Center of the University Hospital M\"unster, delves into the

essential role of data quality, availability, and extractability in

implementing effective AI applications in oncology. By employing a multifaceted

methodology, including literature review, data readiness assessment, and expert

workshops, the study addresses the challenges of integrating AI into clinical

decision-making. The research identifies crucial data points for skin cancer

treatment decisions, evaluates their presence and quality in various

information systems, and highlights the difficulties in extracting information

from unstructured data. The findings underline the significance of

high-quality, accessible data for the success of AI-driven CDSS in medical

settings, particularly in the complex field of oncology.

Recent developments in the registration of histology and micro-computed tomography ({\mu}CT) have broadened the perspective of pathological applications such as virtual histology based on {\mu}CT. This topic remains challenging because of the low image quality of soft tissue CT. Additionally, soft tissue samples usually deform during the histology slide preparation, making it difficult to correlate the structures between histology slide and {\mu}CT. In this work, we propose a novel 2D-3D multi-modal deformable image registration method. The method uses a machine learning (ML) based initialization followed by the registration. The registration is finalized by an analytical out-of-plane deformation refinement. The method is evaluated on datasets acquired from tonsil and tumor tissues. {\mu}CTs of both phase-contrast and conventional absorption modalities are investigated. The registration results from the proposed method are compared with those from intensity- and keypoint-based methods. The comparison is conducted using both visual and fiducial-based evaluations. The proposed method demonstrates superior performance compared to the other two methods.

Sequential recommender systems (SRSs) aim to suggest next item for a user based on her historical interaction sequences. Recently, many research efforts have been devoted to attenuate the influence of noisy items in sequences by either assigning them with lower attention weights or discarding them directly. The major limitation of these methods is that the former would still prone to overfit noisy items while the latter may overlook informative items. To the end, in this paper, we propose a novel model named Multi-level Sequence Denoising with Cross-signal Contrastive Learning (MSDCCL) for sequential recommendation. To be specific, we first introduce a target-aware user interest extractor to simultaneously capture users' long and short term interest with the guidance of target items. Then, we develop a multi-level sequence denoising module to alleviate the impact of noisy items by employing both soft and hard signal denoising strategies. Additionally, we extend existing curriculum learning by simulating the learning pattern of human beings. It is worth noting that our proposed model can be seamlessly integrated with a majority of existing recommendation models and significantly boost their effectiveness. Experimental studies on five public datasets are conducted and the results demonstrate that the proposed MSDCCL is superior to the state-of-the-art baselines. The source code is publicly available at this https URL.

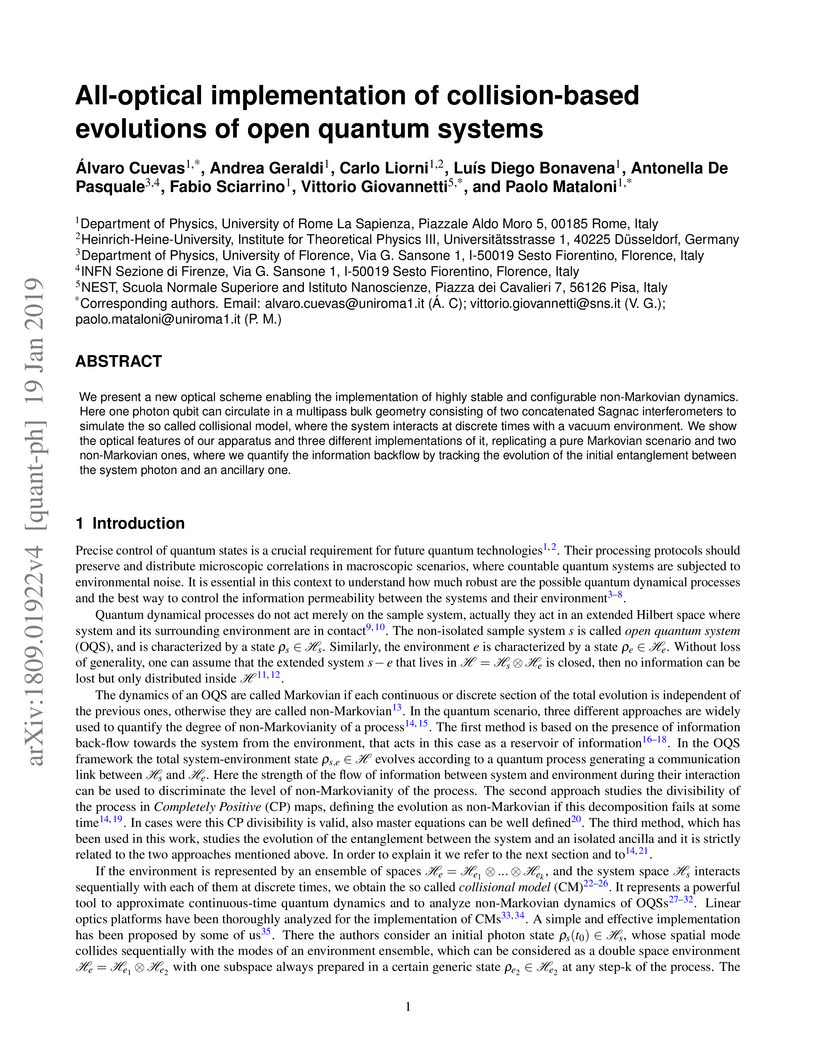

19 Jan 2019

We present a new optical scheme enabling the implementation of highly stable and configurable non-Markovian dynamics. Here one photon qubit can circulate in a multipass bulk geometry consisting of two concatenated Sagnac interferometers to simulate the so called collisional model, where the system interacts at discrete times with a vacuum environment. We show the optical features of our apparatus and three different implementations of it, replicating a pure Markovian scenario and two non-Markovian ones, where we quantify the information backflow by tracking the evolution of the initial entanglement between the system photon and an ancillary one.

Classifying samples as in-distribution or out-of-distribution (OOD) is a

challenging problem of anomaly detection and a strong test of the

generalisation power for models of the in-distribution. In this paper, we

present a simple and generic framework, {\it SemSAD}, that makes use of a

semantic similarity score to carry out anomaly detection. The idea is to first

find for any test example the semantically closest examples in the training

set, where the semantic relation between examples is quantified by the cosine

similarity between feature vectors that leave semantics unchanged under

transformations, such as geometric transformations (images), time shifts (audio

signals), and synonymous word substitutions (text). A trained discriminator is

then used to classify a test example as OOD if the semantic similarity to its

nearest neighbours is significantly lower than the corresponding similarity for

test examples from the in-distribution. We are able to outperform previous

approaches for anomaly, novelty, or out-of-distribution detection in the visual

domain by a large margin. In particular, we obtain AUROC values close to one

for the challenging task of detecting examples from CIFAR-10 as

out-of-distribution given CIFAR-100 as in-distribution, without making use of

label information.

The reason behind the unfair outcomes of AI is often rooted in biased datasets. Therefore, this work presents a framework for addressing fairness by debiasing datasets containing a (non-)binary protected attribute. The framework proposes a combinatorial optimization problem where heuristics such as genetic algorithms can be used to solve for the stated fairness objectives. The framework addresses this by finding a data subset that minimizes a certain discrimination measure. Depending on a user-defined setting, the framework enables different use cases, such as data removal, the addition of synthetic data, or exclusive use of synthetic data. The exclusive use of synthetic data in particular enhances the framework's ability to preserve privacy while optimizing for fairness. In a comprehensive evaluation, we demonstrate that under our framework, genetic algorithms can effectively yield fairer datasets compared to the original data. In contrast to prior work, the framework exhibits a high degree of flexibility as it is metric- and task-agnostic, can be applied to both binary or non-binary protected attributes, and demonstrates efficient runtime.

31 Oct 2023

In this paper, we describe our submission to the BabyLM Challenge 2023 shared

task on data-efficient language model (LM) pretraining (Warstadt et al., 2023).

We train transformer-based masked language models that incorporate unsupervised

predictions about hierarchical sentence structure into the model architecture.

Concretely, we use the Structformer architecture (Shen et al., 2021) and

variants thereof. StructFormer models have been shown to perform well on

unsupervised syntactic induction based on limited pretraining data, and to

yield performance improvements over a vanilla transformer architecture (Shen et

al., 2021). Evaluation of our models on 39 tasks provided by the BabyLM

challenge shows promising improvements of models that integrate a hierarchical

bias into the architecture at some particular tasks, even though they fail to

consistently outperform the RoBERTa baseline model provided by the shared task

organizers on all tasks.

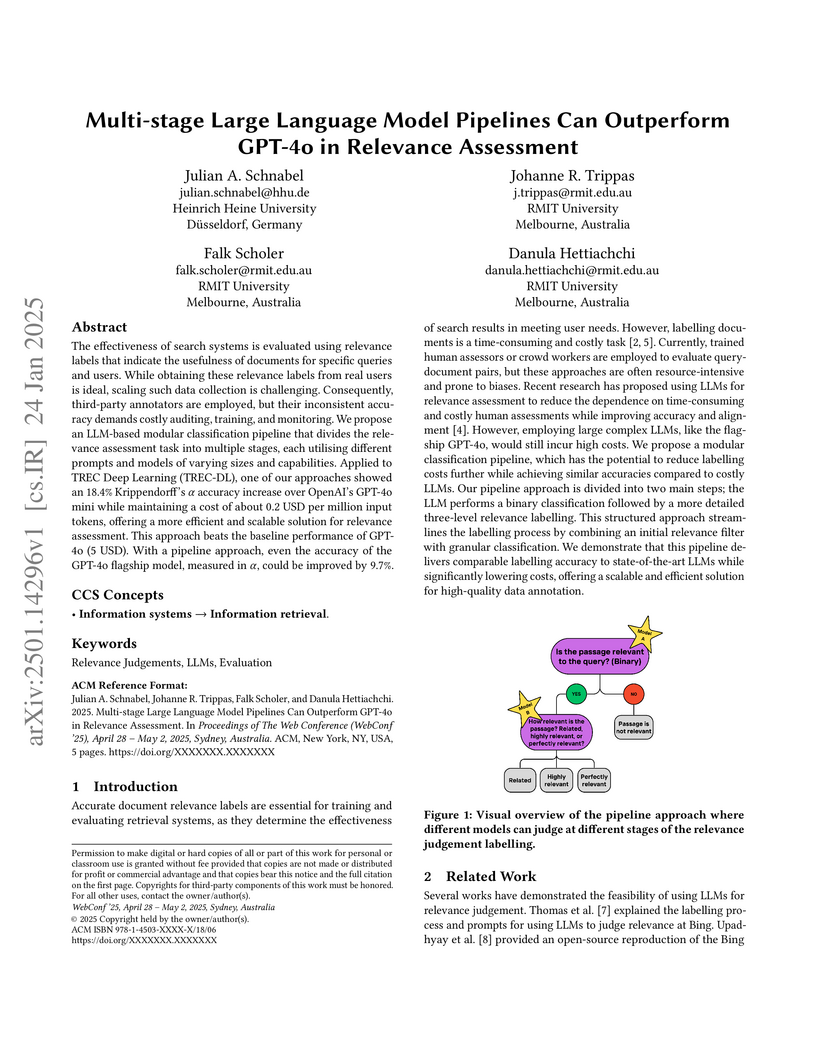

24 Jan 2025

The effectiveness of search systems is evaluated using relevance labels that indicate the usefulness of documents for specific queries and users. While obtaining these relevance labels from real users is ideal, scaling such data collection is challenging. Consequently, third-party annotators are employed, but their inconsistent accuracy demands costly auditing, training, and monitoring. We propose an LLM-based modular classification pipeline that divides the relevance assessment task into multiple stages, each utilising different prompts and models of varying sizes and capabilities. Applied to TREC Deep Learning (TREC-DL), one of our approaches showed an 18.4% Krippendorff's α accuracy increase over OpenAI's GPT-4o mini while maintaining a cost of about 0.2 USD per million input tokens, offering a more efficient and scalable solution for relevance assessment. This approach beats the baseline performance of GPT-4o (5 USD). With a pipeline approach, even the accuracy of the GPT-4o flagship model, measured in α, could be improved by 9.7%.

In recent years, research unveiled more and more evidence for the so-called

Bayesian Brain Paradigm, i.e. the human brain is interpreted as a probabilistic

inference machine and Bayesian modelling approaches are hence used

successfully. One of the many theories is that of Probabilistic Population

Codes (PPC). Although this model has so far only been considered as meaningful

and useful for sensory perception as well as motor control, it has always been

suggested that this mechanism also underlies higher cognition and

decision-making. However, the adequacy of PPC for this regard cannot be

confirmed by means of neurological standard measurement procedures.

In this article we combine the parallel research branches of recommender

systems and predictive data mining with theoretical neuroscience. The nexus of

both fields is given by behavioural variability and resulting internal

distributions. We adopt latest experimental settings and measurement approaches

from predictive data mining to obtain these internal distributions, to inform

the theoretical PPC approach and to deduce medical correlates which can indeed

be measured in vivo. This is a strong hint for the applicability of the PPC

approach and the Bayesian Brain Paradigm for higher cognition and human

decision-making.

Despite the immense societal importance of ethically designing artificial

intelligence (AI), little research on the public perceptions of ethical AI

principles exists. This becomes even more striking when considering that

ethical AI development has the aim to be human-centric and of benefit for the

whole society. In this study, we investigate how ethical principles

(explainability, fairness, security, accountability, accuracy, privacy, machine

autonomy) are weighted in comparison to each other. This is especially

important, since simultaneously considering ethical principles is not only

costly, but sometimes even impossible, as developers must make specific

trade-off decisions. In this paper, we give first answers on the relative

importance of ethical principles given a specific use case - the use of AI in

tax fraud detection. The results of a large conjoint survey (n=1099) suggest

that, by and large, German respondents found the ethical principles equally

important. However, subsequent cluster analysis shows that different preference

models for ethically designed systems exist among the German population. These

clusters substantially differ not only in the preferred attributes, but also in

the importance level of the attributes themselves. We further describe how

these groups are constituted in terms of sociodemographics as well as opinions

on AI. Societal implications as well as design challenges are discussed.

03 Apr 2014

Metagenomics characterizes microbial communities by random shotgun sequencing

of DNA isolated directly from an environment of interest. An essential step in

computational metagenome analysis is taxonomic sequence assignment, which

allows us to identify the sequenced community members and to reconstruct

taxonomic bins with sequence data for the individual taxa. We describe an

algorithm and the accompanying software, taxator-tk, which performs taxonomic

sequence assignments by fast approximate determination of evolutionary

neighbors from sequence similarities. Taxator-tk was precise in its taxonomic

assignment across all ranks and taxa for a range of evolutionary distances and

for short sequences. In addition to the taxonomic binning of metagenomes, it is

well suited for profiling microbial communities from metagenome samples

becauseit identifies bacterial, archaeal and eukaryotic community members

without being affected by varying primer binding strengths, as in marker gene

amplification, or copy number variations of marker genes across different taxa.

Taxator-tk has an efficient, parallelized implementation that allows the

assignment of 6 Gb of sequence data per day on a standard multiprocessor system

with ten CPU cores and microbial RefSeq as the genomic reference data.

We present a comprehensive experimental study on pretrained feature

extractors for visual out-of-distribution (OOD) detection, focusing on adapting

contrastive language-image pretrained (CLIP) models. Without fine-tuning on the

training data, we are able to establish a positive correlation (R2≥0.92)

between in-distribution classification and unsupervised OOD detection for CLIP

models in 4 benchmarks. We further propose a new simple and scalable method

called \textit{pseudo-label probing} (PLP) that adapts vision-language models

for OOD detection. Given a set of label names of the training set, PLP trains a

linear layer using the pseudo-labels derived from the text encoder of CLIP. To

test the OOD detection robustness of pretrained models, we develop a novel

feature-based adversarial OOD data manipulation approach to create adversarial

samples. Intriguingly, we show that (i) PLP outperforms the previous

state-of-the-art \citep{ming2022mcm} on all 5 large-scale benchmarks based on

ImageNet, specifically by an average AUROC gain of 3.4\% using the largest CLIP

model (ViT-G), (ii) we show that linear probing outperforms fine-tuning by

large margins for CLIP architectures (i.e. CLIP ViT-H achieves a mean gain of

7.3\% AUROC on average on all ImageNet-based benchmarks), and (iii)

billion-parameter CLIP models still fail at detecting adversarially manipulated

OOD images. The code and adversarially created datasets will be made publicly

available.

09 Nov 2020

A measure of primal importance for capturing the serial dependence of a

stationary time series at extreme levels is provided by the limiting cluster

size distribution. New estimators based on a blocks declustering scheme are

proposed and analyzed both theoretically and by means of a large-scale

simulation study. A sliding blocks version of the estimators is shown to

outperform a disjoint blocks version. In contrast to some competitors from the

literature, the estimators only depend on one unknown parameter to be chosen by

the statistician.

Text simplification is an intralingual translation task in which documents,

or sentences of a complex source text are simplified for a target audience. The

success of automatic text simplification systems is highly dependent on the

quality of parallel data used for training and evaluation. To advance sentence

simplification and document simplification in German, this paper presents

DEplain, a new dataset of parallel, professionally written and manually aligned

simplifications in plain German ("plain DE" or in German: "Einfache Sprache").

DEplain consists of a news domain (approx. 500 document pairs, approx. 13k

sentence pairs) and a web-domain corpus (approx. 150 aligned documents, approx.

2k aligned sentence pairs). In addition, we are building a web harvester and

experimenting with automatic alignment methods to facilitate the integration of

non-aligned and to be published parallel documents. Using this approach, we are

dynamically increasing the web domain corpus, so it is currently extended to

approx. 750 document pairs and approx. 3.5k aligned sentence pairs. We show

that using DEplain to train a transformer-based seq2seq text simplification

model can achieve promising results. We make available the corpus, the adapted

alignment methods for German, the web harvester and the trained models here:

this https URL

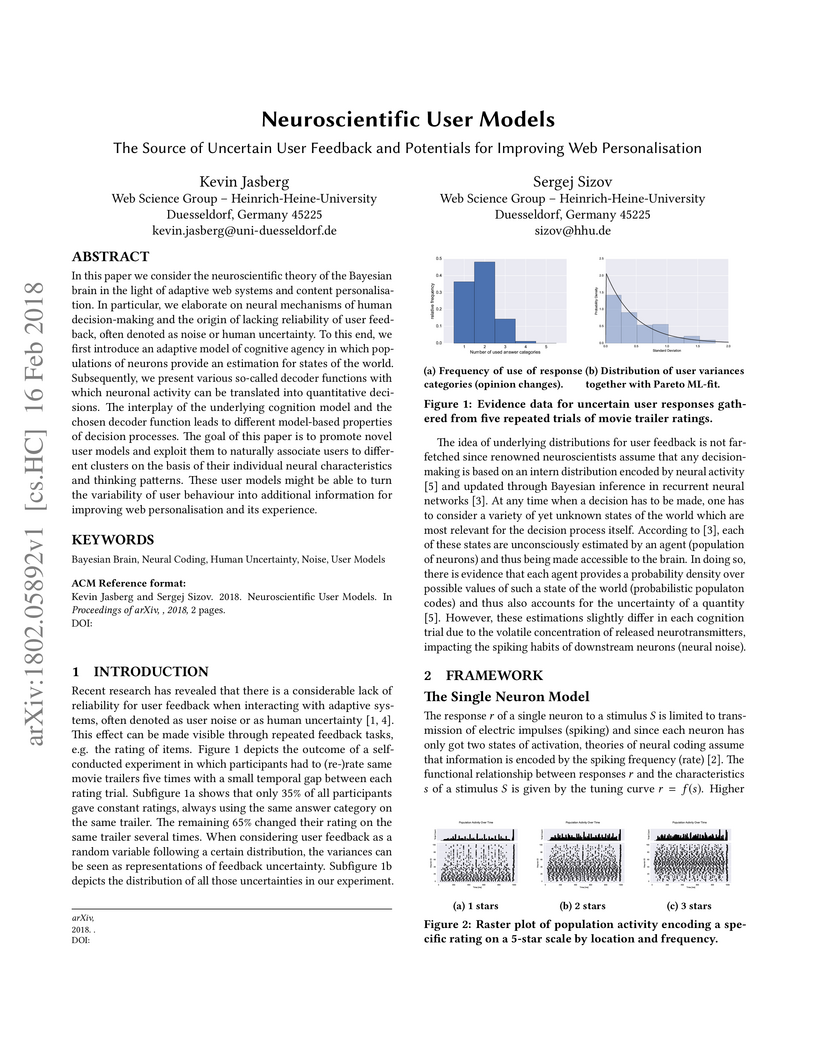

In this paper we consider the neuroscientific theory of the Bayesian brain in the light of adaptive web systems and content personalisation. In particular, we elaborate on neural mechanisms of human decision-making and the origin of lacking reliability of user feedback, often denoted as noise or human uncertainty. To this end, we first introduce an adaptive model of cognitive agency in which populations of neurons provide an estimation for states of the world. Subsequently, we present various so-called decoder functions with which neuronal activity can be translated into quantitative decisions. The interplay of the underlying cognition model and the chosen decoder function leads to different model-based properties of decision processes. The goal of this paper is to promote novel user models and exploit them to naturally associate users to different clusters on the basis of their individual neural characteristics and thinking patterns. These user models might be able to turn the variability of user behaviour into additional information for improving web personalisation and its experience.

Imperial College London

Imperial College London RIKENCERN, European Organization for Nuclear ResearchUniversitat de BarcelonaTechnical University of DarmstadtJohannes Gutenberg-Universität MainzTRIUMFMax-Planck-Institut für PhysikInstitució Catalana de Recerca i Estudis AvançatsTechnical University of CartagenaHeinrich Heine UniversityInstitut de Ciències del Cosmos, Universitat de BarcelonaYebes Observatory (IGN)Center for Astroparticles and High Energy Physics (CAPA), Universidad de ZaragozaInstituto de Física Corpuscular (IFIC), CSIC-University of ValenciaInstitute for Optics and Quantum Electronics, Friedrich Schiller University Jena

RIKENCERN, European Organization for Nuclear ResearchUniversitat de BarcelonaTechnical University of DarmstadtJohannes Gutenberg-Universität MainzTRIUMFMax-Planck-Institut für PhysikInstitució Catalana de Recerca i Estudis AvançatsTechnical University of CartagenaHeinrich Heine UniversityInstitut de Ciències del Cosmos, Universitat de BarcelonaYebes Observatory (IGN)Center for Astroparticles and High Energy Physics (CAPA), Universidad de ZaragozaInstituto de Física Corpuscular (IFIC), CSIC-University of ValenciaInstitute for Optics and Quantum Electronics, Friedrich Schiller University JenaIn the near future BabyIAXO will be the most powerful axion helioscope, relying on a custom-made magnet of two bores of 70 cm diameter and 10 m long, with a total available magnetic volume of more than 7 m3. In this document, we propose and describe the implementation of low-frequency axion haloscope setups suitable for operation inside the BabyIAXO magnet. The RADES proposal has a potential sensitivity to the axion-photon coupling gaγ down to values corresponding to the KSVZ model, in the (currently unexplored) mass range between 1 and 2 μeV, after a total effective exposure of 440 days. This mass range is covered by the use of four differently dimensioned 5-meter-long cavities, equipped with a tuning mechanism based on inner turning plates. A setup like the one proposed would also allow an exploration of the same mass range for hidden photons coupled to photons. An additional complementary apparatus is proposed using LC circuits and exploring the low energy range (∼10−4−10−1 μeV). The setup includes a cryostat and cooling system to cool down the BabyIAXO bore down to about 5 K, as well as appropriate low-noise signal amplification and detection chain.

There are no more papers matching your filters at the moment.