IBM Research - Almaden

This research introduces Analog Foundation Models (AFMs), a method for adapting large, pre-trained language models to operate robustly on energy-efficient analog in-memory computing (AIMC) hardware. The approach uses hardware-aware training and knowledge distillation to significantly improve performance under realistic analog noise conditions compared to standard quantization techniques, while maintaining model capabilities.

The pervasiveness of large language models (LLMs) in enterprise settings has also brought forth a significant amount of risks associated with their usage. Guardrails technologies aim to mitigate this risk by filtering LLMs' input/output text through various detectors. However, developing and maintaining robust detectors faces many challenges, one of which is the difficulty in acquiring production-quality labeled data on real LLM outputs prior to deployment. In this work, we propose backprompting, a simple yet intuitive solution to generate production-like labeled data for health advice guardrails development. Furthermore, we pair our backprompting method with a sparse human-in-the-loop clustering technique to label the generated data. Our aim is to construct a parallel corpus roughly representative of the original dataset yet resembling real LLM output. We then infuse existing datasets with our synthetic examples to produce robust training data for our detector. We test our technique in one of the most difficult and nuanced guardrails: the identification of health advice in LLM output, and demonstrate improvement versus other solutions. Our detector is able to outperform GPT-4o by up to 3.73%, despite having 400x less parameters.

We provide a new robust convergence analysis of the well-known power method for computing the dominant singular vectors of a matrix that we call the noisy power method. Our result characterizes the convergence behavior of the algorithm when a significant amount noise is introduced after each matrix-vector multiplication. The noisy power method can be seen as a meta-algorithm that has recently found a number of important applications in a broad range of machine learning problems including alternating minimization for matrix completion, streaming principal component analysis (PCA), and privacy-preserving spectral analysis. Our general analysis subsumes several existing ad-hoc convergence bounds and resolves a number of open problems in multiple applications including streaming PCA and privacy-preserving singular vector computation.

Membership inference attacks (MIAs) pose a significant threat to the privacy of machine learning models and are widely used as tools for privacy assessment, auditing, and machine unlearning. While prior MIA research has primarily focused on performance metrics such as AUC, accuracy, and TPR@low FPR - either by developing new methods to enhance these metrics or using them to evaluate privacy solutions - we found that it overlooks the disparities among different attacks. These disparities, both between distinct attack methods and between multiple instantiations of the same method, have crucial implications for the reliability and completeness of MIAs as privacy evaluation tools. In this paper, we systematically investigate these disparities through a novel framework based on coverage and stability analysis. Extensive experiments reveal significant disparities in MIAs, their potential causes, and their broader implications for privacy evaluation. To address these challenges, we propose an ensemble framework with three distinct strategies to harness the strengths of state-of-the-art MIAs while accounting for their disparities. This framework not only enables the construction of more powerful attacks but also provides a more robust and comprehensive methodology for privacy evaluation.

Stochastic convex optimization, where the objective is the expectation of a

random convex function, is an important and widely used method with numerous

applications in machine learning, statistics, operations research and other

areas. We study the complexity of stochastic convex optimization given only

statistical query (SQ) access to the objective function. We show that

well-known and popular first-order iterative methods can be implemented using

only statistical queries. For many cases of interest we derive nearly matching

upper and lower bounds on the estimation (sample) complexity including linear

optimization in the most general setting. We then present several consequences

for machine learning, differential privacy and proving concrete lower bounds on

the power of convex optimization based methods.

The key ingredient of our work is SQ algorithms and lower bounds for

estimating the mean vector of a distribution over vectors supported on a convex

body in Rd. This natural problem has not been previously studied

and we show that our solutions can be used to get substantially improved SQ

versions of Perceptron and other online algorithms for learning halfspaces.

We present a novel multimodal language model approach for predicting molecular properties by combining chemical language representation with physicochemical features. Our approach, MULTIMODAL-MOLFORMER, utilizes a causal multistage feature selection method that identifies physicochemical features based on their direct causal effect on a specific target property. These causal features are then integrated with the vector space generated by molecular embeddings from MOLFORMER. In particular, we employ Mordred descriptors as physicochemical features and identify the Markov blanket of the target property, which theoretically contains the most relevant features for accurate prediction. Our results demonstrate a superior performance of our proposed approach compared to existing state-of-the-art algorithms, including the chemical language-based MOLFORMER and graph neural networks, in predicting complex tasks such as biodegradability and PFAS toxicity estimation. Moreover, we demonstrate the effectiveness of our feature selection method in reducing the dimensionality of the Mordred feature space while maintaining or improving the model's performance. Our approach opens up promising avenues for future research in molecular property prediction by harnessing the synergistic potential of both chemical language and physicochemical features, leading to enhanced performance and advancements in the field.

ETH ZurichHeidelberg UniversityConsiglio Nazionale delle Ricerche

ETH ZurichHeidelberg UniversityConsiglio Nazionale delle Ricerche University of Southern California

University of Southern California University College LondonUniversity of Hong Kong

University College LondonUniversity of Hong Kong Peking UniversityUC Santa Barbara

Peking UniversityUC Santa Barbara Purdue UniversityPolitecnico di MilanoKing Abdullah University of Science and Technology

Purdue UniversityPolitecnico di MilanoKing Abdullah University of Science and Technology University of GroningenCEA-LetiLoughborough UniversityHewlett Packard LabsUniversity of MuensterIBM Research - AlmadenSPINTECSTMicroelectronicsAachen UniversityNaMLab gGmbHForschungszentrum J ̈ulich Peter Gr ̈unberg InstitutInstitute of Microelectronics of SevilleNational Yang Ming-Chiao Tung UniversityTechnion

Israel Institute of TechnologyUniversit

e Paris-SudIBM Research Europe ","Zurich

University of GroningenCEA-LetiLoughborough UniversityHewlett Packard LabsUniversity of MuensterIBM Research - AlmadenSPINTECSTMicroelectronicsAachen UniversityNaMLab gGmbHForschungszentrum J ̈ulich Peter Gr ̈unberg InstitutInstitute of Microelectronics of SevilleNational Yang Ming-Chiao Tung UniversityTechnion

Israel Institute of TechnologyUniversit

e Paris-SudIBM Research Europe ","ZurichThe roadmap is organized into several thematic sections, outlining current computing challenges, discussing the neuromorphic computing approach, analyzing mature and currently utilized technologies, providing an overview of emerging technologies, addressing material challenges, exploring novel computing concepts, and finally examining the maturity level of emerging technologies while determining the next essential steps for their advancement.

The advancement of Deep Learning (DL) is driven by efficient Deep Neural

Network (DNN) design and new hardware accelerators. Current DNN design is

primarily tailored for general-purpose use and deployment on commercially

viable platforms. Inference at the edge requires low latency, compact and

power-efficient models, and must be cost-effective. Digital processors based on

typical von Neumann architectures are not conducive to edge AI given the large

amounts of required data movement in and out of memory. Conversely,

analog/mixed signal in-memory computing hardware accelerators can easily

transcend the memory wall of von Neuman architectures when accelerating

inference workloads. They offer increased area and power efficiency, which are

paramount in edge resource-constrained environments. In this paper, we propose

AnalogNAS, a framework for automated DNN design targeting deployment on analog

In-Memory Computing (IMC) inference accelerators. We conduct extensive hardware

simulations to demonstrate the performance of AnalogNAS on State-Of-The-Art

(SOTA) models in terms of accuracy and deployment efficiency on various Tiny

Machine Learning (TinyML) tasks. We also present experimental results that show

AnalogNAS models achieving higher accuracy than SOTA models when implemented on

a 64-core IMC chip based on Phase Change Memory (PCM). The AnalogNAS search

code is released: this https URL

Real-valued logics underlie an increasing number of neuro-symbolic approaches, though typically their logical inference capabilities are characterized only qualitatively. We provide foundations for establishing the correctness and power of such systems. We give a sound and strongly complete axiomatization that can be parametrized to cover essentially every real-valued logic, including all the common fuzzy logics. Our class of sentences are very rich, and each describes a set of possible real values for a collection of formulas of the real-valued logic, including which combinations of real values are possible. Strong completeness allows us to derive exactly what information can be inferred about the combinations of real values of a collection of formulas given information about the combinations of real values of several other collections of formulas. We then extend the axiomatization to deal with weighted subformulas. Finally, we give a decision procedure based on linear programming for deciding, for certain real-valued logics and under certain natural assumptions, whether a set of our sentences logically implies another of our sentences.

Large-scale pre-training methodologies for chemical language models represent a breakthrough in cheminformatics. These methods excel in tasks such as property prediction and molecule generation by learning contextualized representations of input tokens through self-supervised learning on large unlabeled corpora. Typically, this involves pre-training on unlabeled data followed by fine-tuning on specific tasks, reducing dependence on annotated datasets and broadening chemical language representation understanding. This paper introduces a large encoder-decoder chemical foundation models pre-trained on a curated dataset of 91 million SMILES samples sourced from PubChem, which is equivalent to 4 billion of molecular tokens. The proposed foundation model supports different complex tasks, including quantum property prediction, and offer flexibility with two main variants (289M and 8×289M). Our experiments across multiple benchmark datasets validate the capacity of the proposed model in providing state-of-the-art results for different tasks. We also provide a preliminary assessment of the compositionality of the embedding space as a prerequisite for the reasoning tasks. We demonstrate that the produced latent space is separable compared to the state-of-the-art with few-shot learning capabilities.

Statistical query (SQ) algorithms are algorithms that have access to an {\em

SQ oracle} for the input distribution D instead of i.i.d.~ samples from D.

Given a query function ϕ:X→[−1,1], the oracle returns an

estimate of Ex∼D[ϕ(x)] within some tolerance τϕ

that roughly corresponds to the number of samples.

In this work we demonstrate that the complexity of solving general problems

over distributions using SQ algorithms can be captured by a relatively simple

notion of statistical dimension that we introduce. SQ algorithms capture a

broad spectrum of algorithmic approaches used in theory and practice, most

notably, convex optimization techniques. Hence our statistical dimension allows

to investigate the power of a variety of algorithmic approaches by analyzing a

single linear-algebraic parameter. Such characterizations were investigated

over the past 20 years in learning theory but prior characterizations are

restricted to the much simpler setting of classification problems relative to a

fixed distribution on the domain (Blum et al., 1994; Bshouty and Feldman, 2002;

Yang, 2001; Simon, 2007; Feldman, 2012; Szorenyi, 2009). Our characterization

is also the first to precisely characterize the necessary tolerance of queries.

We give applications of our techniques to two open problems in learning theory

and to algorithms that are subject to memory and communication constraints.

13 Nov 2021

Digital quantum computers provide a computational framework for solving the Schrödinger equation for a variety of many-particle systems. Quantum computing algorithms for the quantum simulation of these systems have recently witnessed remarkable growth, notwithstanding the limitations of existing quantum hardware, especially as a tool for electronic structure computations in molecules. In this review, we provide a self-contained introduction to emerging algorithms for the simulation of Hamiltonian dynamics and eigenstates, with emphasis on their applications to the electronic structure in molecular systems. Theoretical foundations and implementation details of the method are discussed, and their strengths, limitations, and recent advances are presented.

We study the tradeoff between the statistical error and communication cost of distributed statistical estimation problems in high dimensions. In the distributed sparse Gaussian mean estimation problem, each of the m machines receives n data points from a d-dimensional Gaussian distribution with unknown mean θ which is promised to be k-sparse. The machines communicate by message passing and aim to estimate the mean θ. We provide a tight (up to logarithmic factors) tradeoff between the estimation error and the number of bits communicated between the machines. This directly leads to a lower bound for the distributed \textit{sparse linear regression} problem: to achieve the statistical minimax error, the total communication is at least Ω(min{n,d}m), where n is the number of observations that each machine receives and d is the ambient dimension. These lower results improve upon [Sha14,SD'14] by allowing multi-round iterative communication model. We also give the first optimal simultaneous protocol in the dense case for mean estimation.

As our main technique, we prove a \textit{distributed data processing inequality}, as a generalization of usual data processing inequalities, which might be of independent interest and useful for other problems.

Graph Neural Networks (GNNs) have enjoyed wide spread applications in

graph-structured data. However, existing graph based applications commonly lack

annotated data. GNNs are required to learn latent patterns from a limited

amount of training data to perform inferences on a vast amount of test data.

The increased complexity of GNNs, as well as a single point of model parameter

initialization, usually lead to overfitting and sub-optimal performance. In

addition, it is known that GNNs are vulnerable to adversarial attacks. In this

paper, we push one step forward on the ensemble learning of GNNs with improved

accuracy, generalization, and adversarial robustness. Following the principles

of stochastic modeling, we propose a new method called GNN-Ensemble to

construct an ensemble of random decision graph neural networks whose capacity

can be arbitrarily expanded for improvement in performance. The essence of the

method is to build multiple GNNs in randomly selected substructures in the

topological space and subfeatures in the feature space, and then combine them

for final decision making. These GNNs in different substructure and subfeature

spaces generalize their classification in complementary ways. Consequently,

their combined classification performance can be improved and overfitting on

the training data can be effectively reduced. In the meantime, we show that

GNN-Ensemble can significantly improve the adversarial robustness against

attacks on GNNs.

Federated learning facilitates the collaborative training of models without the sharing of raw data. However, recent attacks demonstrate that simply maintaining data locality during training processes does not provide sufficient privacy guarantees. Rather, we need a federated learning system capable of preventing inference over both the messages exchanged during training and the final trained model while ensuring the resulting model also has acceptable predictive accuracy. Existing federated learning approaches either use secure multiparty computation (SMC) which is vulnerable to inference or differential privacy which can lead to low accuracy given a large number of parties with relatively small amounts of data each. In this paper, we present an alternative approach that utilizes both differential privacy and SMC to balance these trade-offs. Combining differential privacy with secure multiparty computation enables us to reduce the growth of noise injection as the number of parties increases without sacrificing privacy while maintaining a pre-defined rate of trust. Our system is therefore a scalable approach that protects against inference threats and produces models with high accuracy. Additionally, our system can be used to train a variety of machine learning models, which we validate with experimental results on 3 different machine learning algorithms. Our experiments demonstrate that our approach out-performs state of the art solutions.

Researchers at IBM Research - Almaden introduce the Hopfield Encoding Network (HEN) framework, which integrates pre-trained neural encoder-decoder models with Modern Hopfield Networks to effectively mitigate meta-stable states and enhance practical storage capacity. The framework successfully enables both high-fidelity auto-associative image recall and natural language-based hetero-associative image retrieval on large-scale datasets, addressing key limitations of existing associative memory systems.

20 May 2022

Ergodic quantum many-body systems undergoing unitary dynamics evolve towards

increasingly entangled states characterized by an extensive scaling of

entanglement entropy with system volume. At the other extreme, quantum systems

repeatedly measured may be stabilized in a measurement eigenstate, a phenomenon

known as the quantum Zeno effect. Recently, the intermediate regime in which

unitary evolution is interspersed with quantum measurements has become of

interest. Numerical studies have reported the existence of distinct phases

characterized by volume- and area-law entanglement entropy scaling for

infrequent and frequent measurement rates, respectively, separated by a

critical measurement rate. The experimental investigation of these dynamic

quantum phases of matter on near-term quantum hardware is challenging due to

the need for repeated high-fidelity mid-circuit measurements and fine control

over the evolving unitaries. Here, we report the realization of a

measurement-induced entanglement transition on superconducting quantum

processors with mid-circuit readout capability. We directly observe extensive

and sub-extensive scaling of entanglement entropy in the volume- and area-law

phases, respectively, by varying the rate of projective measurements. We

further demonstrate phenomenological critical behavior of the transition by

performing a data collapse for different system sizes. Our work paves the way

for the use of mid-circuit measurement as an effective resource for quantum

simulation on near-term quantum computers, for instance by facilitating the

study of dynamic and long-range entangled quantum phases.

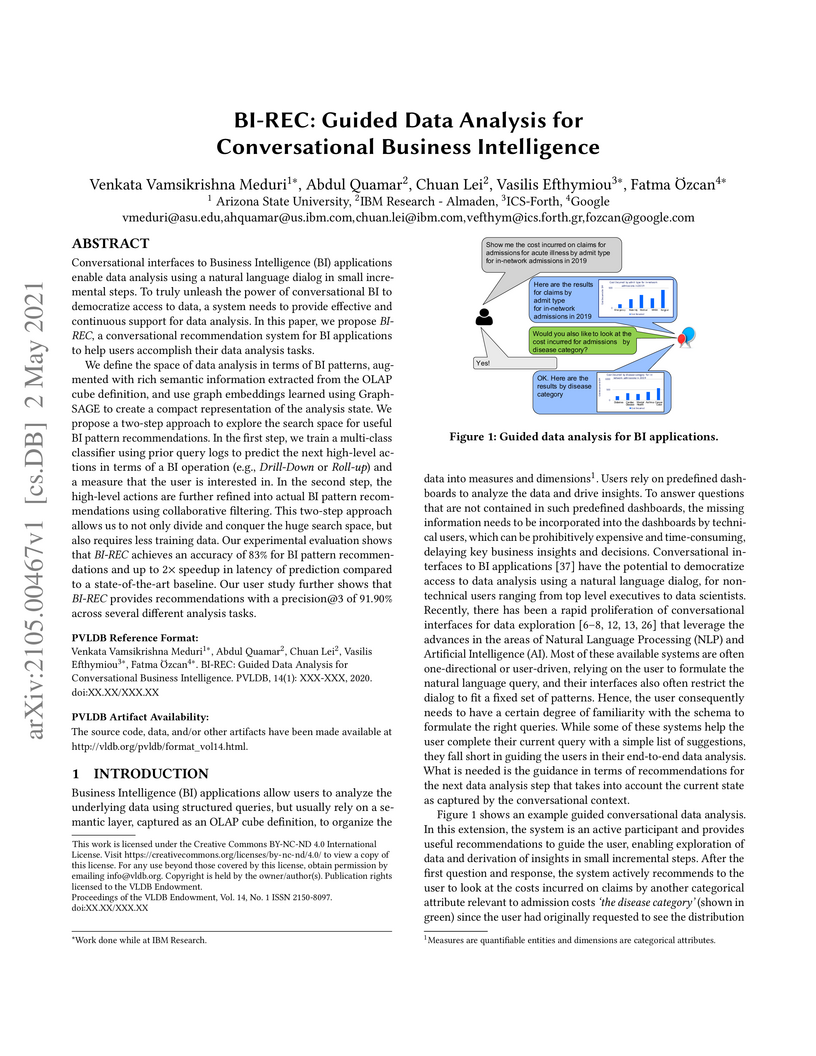

Conversational interfaces to Business Intelligence (BI) applications enable

data analysis using a natural language dialog in small incremental steps. To

truly unleash the power of conversational BI to democratize access to data, a

system needs to provide effective and continuous support for data analysis. In

this paper, we propose BI-REC, a conversational recommendation system for BI

applications to help users accomplish their data analysis tasks.

We define the space of data analysis in terms of BI patterns, augmented with

rich semantic information extracted from the OLAP cube definition, and use

graph embeddings learned using GraphSAGE to create a compact representation of

the analysis state. We propose a two-step approach to explore the search space

for useful BI pattern recommendations. In the first step, we train a

multi-class classifier using prior query logs to predict the next high-level

actions in terms of a BI operation (e.g., {\em Drill-Down} or {\em Roll-up})

and a measure that the user is interested in. In the second step, the

high-level actions are further refined into actual BI pattern recommendations

using collaborative filtering. This two-step approach allows us to not only

divide and conquer the huge search space, but also requires less training data.

Our experimental evaluation shows that BI-REC achieves an accuracy of 83% for

BI pattern recommendations and up to 2X speedup in latency of prediction

compared to a state-of-the-art baseline. Our user study further shows that

BI-REC provides recommendations with a precision@3 of 91.90% across several

different analysis tasks.

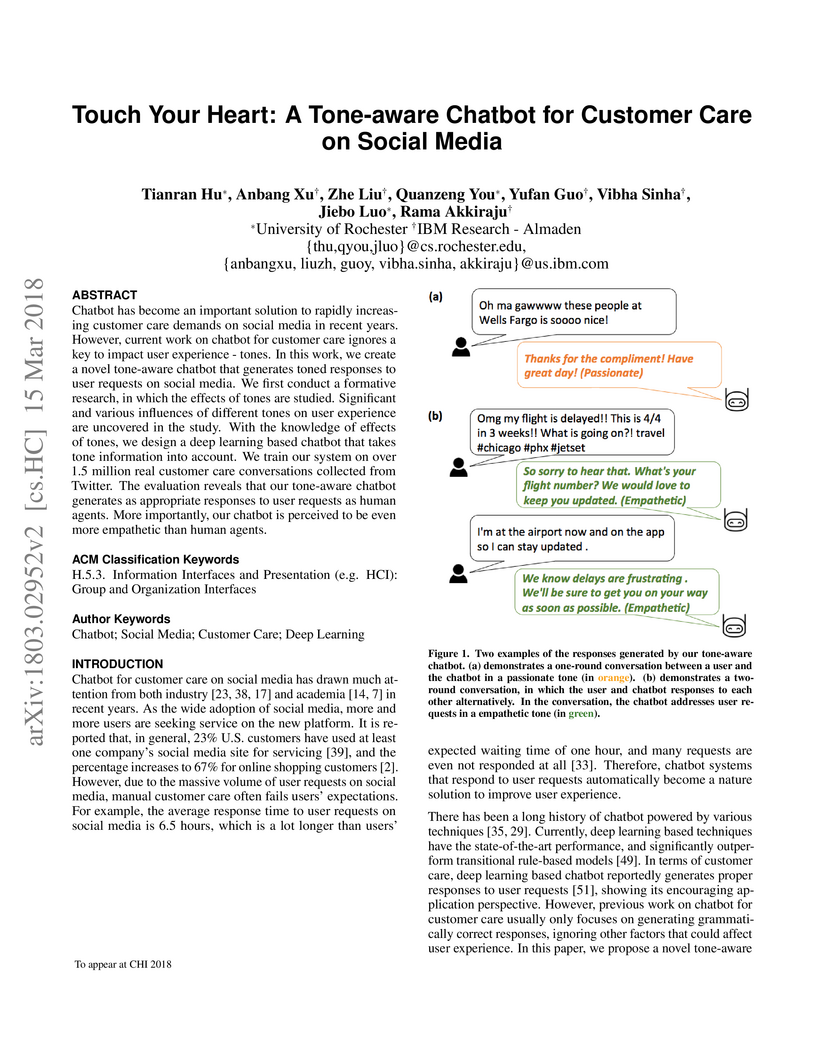

15 Mar 2018

Chatbot has become an important solution to rapidly increasing customer care

demands on social media in recent years. However, current work on chatbot for

customer care ignores a key to impact user experience - tones. In this work, we

create a novel tone-aware chatbot that generates toned responses to user

requests on social media. We first conduct a formative research, in which the

effects of tones are studied. Significant and various influences of different

tones on user experience are uncovered in the study. With the knowledge of

effects of tones, we design a deep learning based chatbot that takes tone

information into account. We train our system on over 1.5 million real customer

care conversations collected from Twitter. The evaluation reveals that our

tone-aware chatbot generates as appropriate responses to user requests as human

agents. More importantly, our chatbot is perceived to be even more empathetic

than human agents.

06 Nov 2020

Current and near term quantum computers (i.e. NISQ devices) are limited in their computational power in part due to qubit decoherence. Here we seek to take advantage of qubit decoherence as a resource in simulating the behavior of real world quantum systems, which are always subject to decoherence, with no additional computational overhead. As a first step toward this goal we simulate the thermal relaxation of quantum beats in radical ion pairs (RPs) on a quantum computer as a proof of concept of the method. We present three methods for implementing the thermal relaxation, one which explicitly applies the relaxation Kraus operators, one which combines results from two separate circuits in a classical post-processing step, and one which relies on leveraging the inherent decoherence of the qubits themselves. We use our methods to simulate two real world systems and find excellent agreement between our results, experimental data, and the theoretical prediction.

There are no more papers matching your filters at the moment.