Binjiang Institute of Zhejiang University

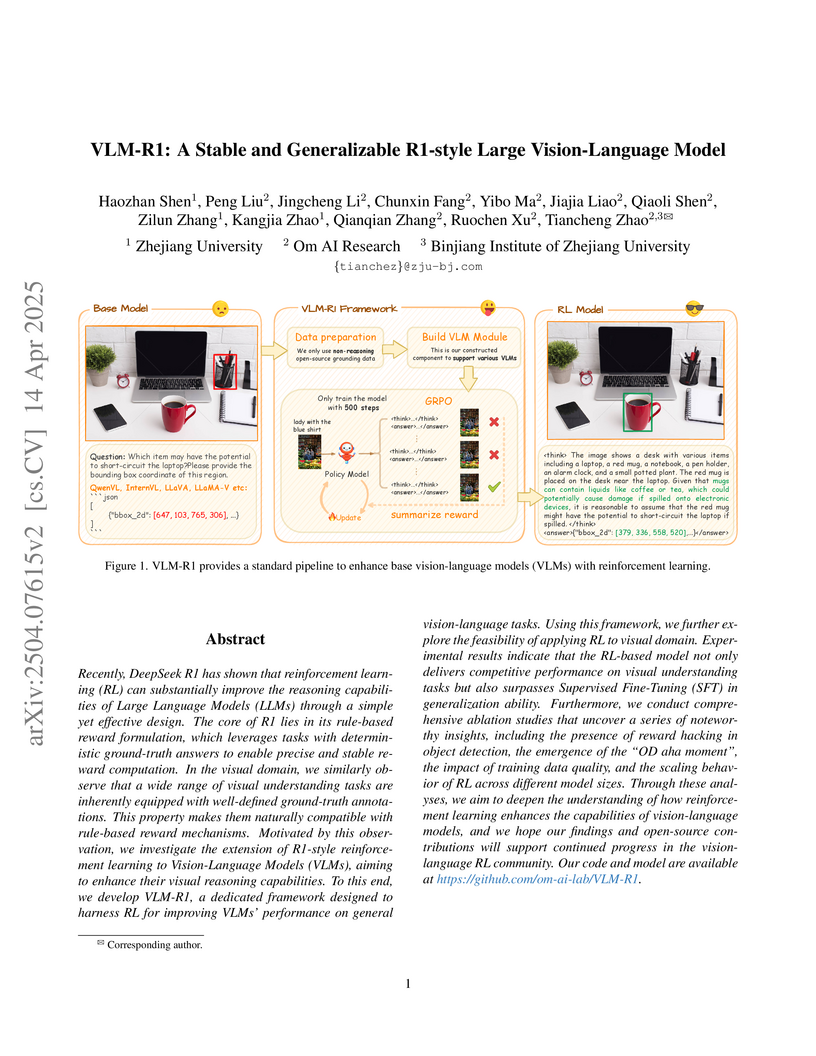

VLM-R1 introduces an open-source framework that applies rule-based reinforcement learning to Vision-Language Models (VLMs), enhancing their visual reasoning and generalization abilities on tasks like Referring Expression Comprehension and Open-Vocabulary Object Detection. The approach demonstrates improved out-of-domain performance compared to supervised fine-tuning and showcases emergent reasoning behaviors.

VLM-FO1, a plug-and-play framework from Om AI Research and Zhejiang University, enhances pre-trained Vision-Language Models with fine-grained perception by bridging high-level reasoning and precise spatial localization. It achieves state-of-the-art performance across object grounding (44.4 mAP on COCO), regional understanding, and visual reasoning benchmarks, while effectively preserving the base VLM's general capabilities.

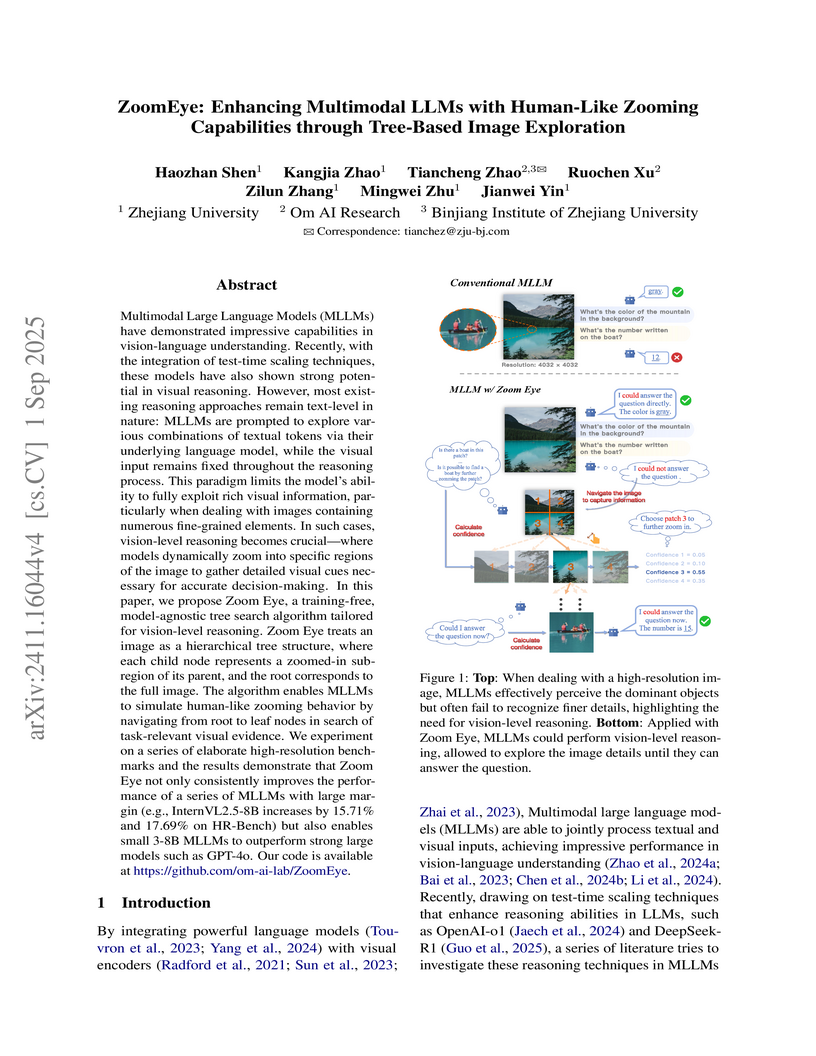

ZoomEye, a training-free and model-agnostic framework, enhances Multimodal Large Language Models (MLLMs) with human-like zooming capabilities through tree-based image exploration. This approach substantially improves MLLM performance on high-resolution visual tasks, enabling smaller models (3B-8B parameters) to surpass larger commercial models like GPT-4o on specific detail-oriented benchmarks.

Researchers developed MCP-Guard, a multi-layered defense framework protecting Large Language Model-tool interactions against threats like prompt injection, achieving an average 98.47% recall and 89.07% F1-score. This system significantly reduced detection latency by up to 12x compared to existing baselines, and introduced MCP-AttackBench, a comprehensive dataset of over 70,000 attack samples for robust evaluation.

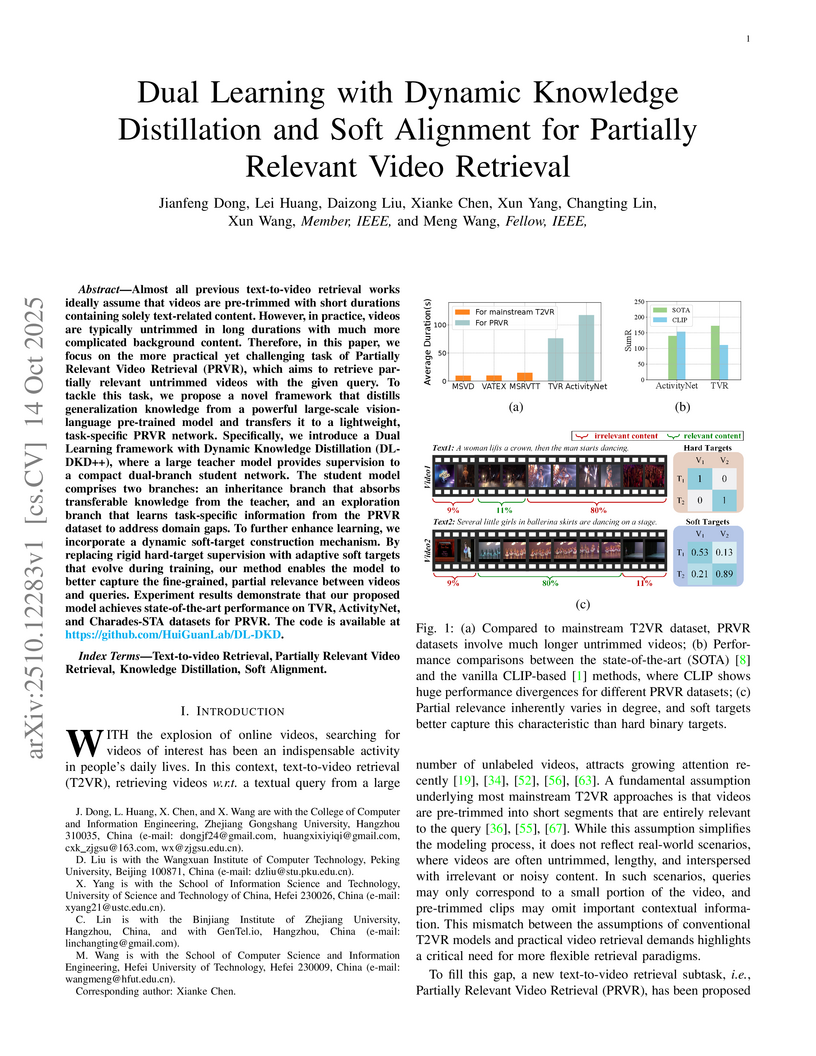

Researchers introduce DL-DKD++, a framework for partially relevant video retrieval that employs dual learning with dynamic knowledge distillation from large vision-language models and dynamic soft alignment for nuanced relevance scoring. The approach establishes new state-of-the-art performance on datasets like TVR (SumR 184.8), ActivityNet-Captions (SumR 149.9), and Charades-STA.

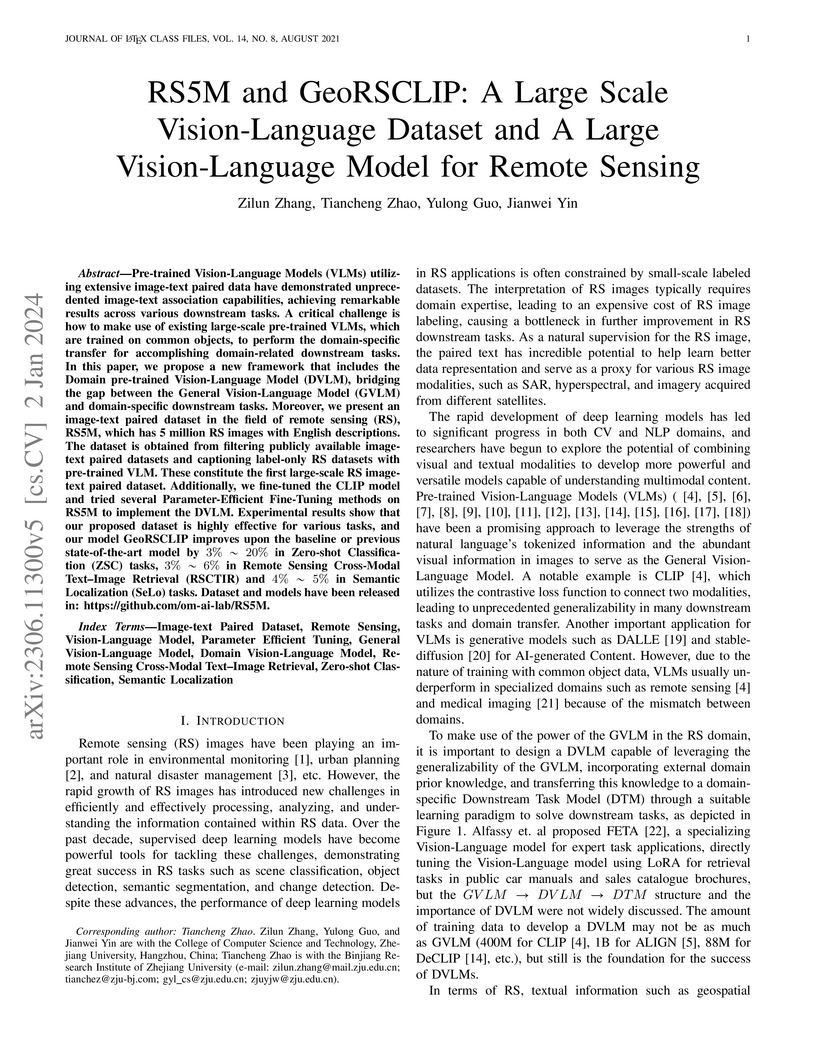

Researchers at Zhejiang University introduced RS5M, the first large-scale (5 million pairs) remote sensing image-text dataset, and GeoRSCLIP, a specialized Vision-Language Model. GeoRSCLIP, fine-tuned on RS5M, achieved 3-20% performance improvements over baselines on various remote sensing tasks, effectively bridging the domain gap for general VLMs and advancing GeoAI applications.

OmAgent, a multi-modal agent framework developed by Om AI Research and Zhejiang University, improves long-form video understanding by addressing information loss through a novel "rewinder" tool integrated within an autonomous agent. The framework outperformed existing methods on general problem-solving benchmarks, achieving 88.3% on MBPP and 79.7% on FreshQA, and demonstrated enhanced comprehension across various complex long video understanding tasks.

Researchers at Zhejiang University and Om AI Research introduced GUI Testing Arena (GTArena), a unified, end-to-end benchmark for autonomous GUI testing. The framework formalizes the testing process and evaluates state-of-the-art multimodal large language models, revealing a substantial performance gap between current AI capabilities and real-world applicability.

A new benchmark, OVDEval, is introduced to provide a comprehensive, fine-grained evaluation of Open-Vocabulary Detection (OVD) models across six linguistic aspects, utilizing meticulously designed hard negatives. This work also proposes NMS-AP, a refined metric addressing the "Inflated AP Problem" in traditional Average Precision, revealing that state-of-the-art OVD models show strong general object detection but poor fine-grained linguistic comprehension.

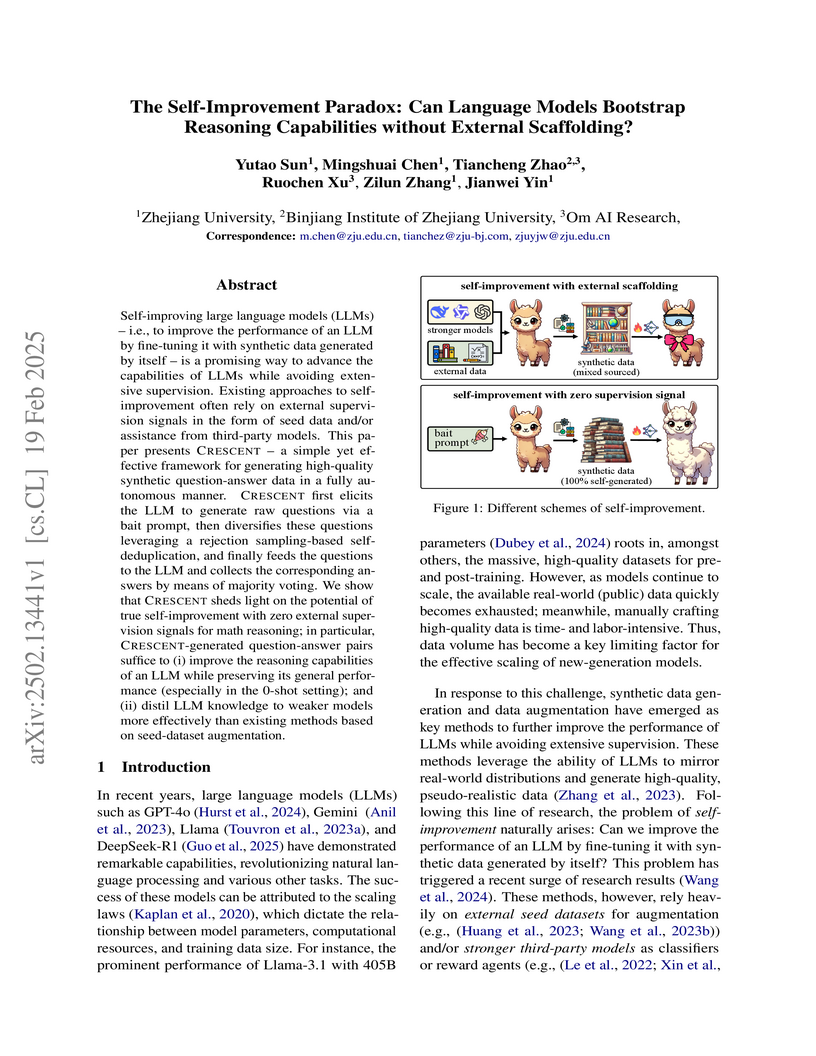

Self-improving large language models (LLMs) -- i.e., to improve the

performance of an LLM by fine-tuning it with synthetic data generated by itself

-- is a promising way to advance the capabilities of LLMs while avoiding

extensive supervision. Existing approaches to self-improvement often rely on

external supervision signals in the form of seed data and/or assistance from

third-party models. This paper presents Crescent -- a simple yet effective

framework for generating high-quality synthetic question-answer data in a fully

autonomous manner. Crescent first elicits the LLM to generate raw questions via

a bait prompt, then diversifies these questions leveraging a rejection

sampling-based self-deduplication, and finally feeds the questions to the LLM

and collects the corresponding answers by means of majority voting. We show

that Crescent sheds light on the potential of true self-improvement with zero

external supervision signals for math reasoning; in particular,

Crescent-generated question-answer pairs suffice to (i) improve the reasoning

capabilities of an LLM while preserving its general performance (especially in

the 0-shot setting); and (ii) distil LLM knowledge to weaker models more

effectively than existing methods based on seed-dataset augmentation.

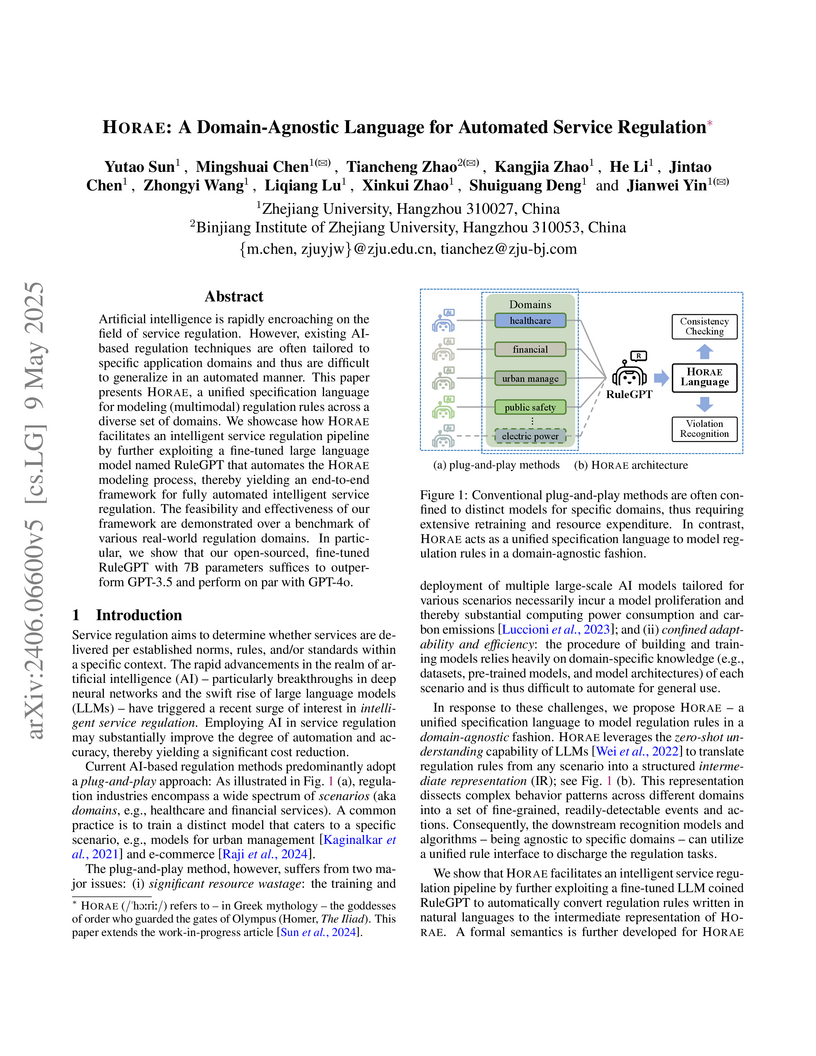

Artificial intelligence is rapidly encroaching on the field of service regulation. However, existing AI-based regulation techniques are often tailored to specific application domains and thus are difficult to generalize in an automated manner. This paper presents Horae, a unified specification language for modeling (multimodal) regulation rules across a diverse set of domains. We showcase how Horae facilitates an intelligent service regulation pipeline by further exploiting a fine-tuned large language model named RuleGPT that automates the Horae modeling process, thereby yielding an end-to-end framework for fully automated intelligent service regulation. The feasibility and effectiveness of our framework are demonstrated over a benchmark of various real-world regulation domains. In particular, we show that our open-sourced, fine-tuned RuleGPT with 7B parameters suffices to outperform GPT-3.5 and perform on par with GPT-4o.

Backdoor-based fingerprinting has emerged as an effective technique for tracing the ownership of large language models. However, in real-world deployment scenarios, developers often instantiate multiple downstream models from a shared base model, and applying fingerprinting to each variant individually incurs prohibitive computational overhead. While inheritance-based approaches -- where fingerprints are embedded into the base model and expected to persist through fine-tuning -- appear attractive, they suffer from three key limitations: late-stage fingerprinting, fingerprint instability, and interference with downstream adaptation. To address these challenges, we propose a novel mechanism called the Fingerprint Vector. Our method first embeds a fingerprint into the base model via backdoor-based fine-tuning, then extracts a task-specific parameter delta as a fingerprint vector by computing the difference between the fingerprinted and clean models. This vector can be directly added to any structurally compatible downstream model, allowing the fingerprint to be transferred post hoc without additional fine-tuning. Extensive experiments show that Fingerprint Vector achieves comparable or superior performance to direct injection across key desiderata. It maintains strong effectiveness across diverse model architectures as well as mainstream downstream variants within the same family. It also preserves harmlessness and robustness in most cases. Even when slight robustness degradation is observed, the impact remains within acceptable bounds and is outweighed by the scalability benefits of our approach.

With the continuous advancement of vision language models (VLMs) technology,

remarkable research achievements have emerged in the dermatology field, the

fourth most prevalent human disease category. However, despite these

advancements, VLM still faces explainable problems to user in diagnosis due to

the inherent complexity of dermatological conditions, existing tools offer

relatively limited support for user comprehension. We propose SkinGEN, a

diagnosis-to-generation framework that leverages the stable diffusion(SD) model

to generate reference demonstrations from diagnosis results provided by VLM,

thereby enhancing the visual explainability for users. Through extensive

experiments with Low-Rank Adaptation (LoRA), we identify optimal strategies for

skin condition image generation. We conduct a user study with 32 participants

evaluating both the system performance and explainability. Results demonstrate

that SkinGEN significantly improves users' comprehension of VLM predictions and

fosters increased trust in the diagnostic process. This work paves the way for

more transparent and user-centric VLM applications in dermatology and beyond.

Lin et al. introduce Analyzing-based Jailbreak (ABJ), a method that manipulates Large Language Models' internal reasoning processes to elicit harmful content from neutral inputs. ABJ achieved over 80% attack success rates on state-of-the-art LLMs like GPT-4o and Claude-3-haiku, demonstrating its ability to bypass common input-stage defenses.

Sun Yat-Sen University

Sun Yat-Sen University UC Berkeley

UC Berkeley University of Oxford

University of Oxford Beihang University

Beihang University Tsinghua University

Tsinghua University Zhejiang University

Zhejiang University The Chinese University of Hong Kong

The Chinese University of Hong Kong MetaUniversity of Electronic Science and Technology of China

MetaUniversity of Electronic Science and Technology of China ETH ZürichChina University of Mining and Technology

ETH ZürichChina University of Mining and Technology Nanyang Technological UniversityAarhus University

Nanyang Technological UniversityAarhus University Johns Hopkins UniversityA*STARPolitecnico di MilanoPengcheng LaboratoryZhongguancun LaboratoryHefei Comprehensive National Science CenterBinjiang Institute of Zhejiang UniversityInstitute of Computing Technology, CASZhejiang Gongshang UniversityUCASCASGoogle BrainGenTel.io

Johns Hopkins UniversityA*STARPolitecnico di MilanoPengcheng LaboratoryZhongguancun LaboratoryHefei Comprehensive National Science CenterBinjiang Institute of Zhejiang UniversityInstitute of Computing Technology, CASZhejiang Gongshang UniversityUCASCASGoogle BrainGenTel.ioMultimodal Large Language Models (MLLMs) have enabled transformative advancements across diverse applications but remain susceptible to safety threats, especially jailbreak attacks that induce harmful outputs. To systematically evaluate and improve their safety, we organized the Adversarial Testing & Large-model Alignment Safety Grand Challenge (ATLAS) 2025}. This technical report presents findings from the competition, which involved 86 teams testing MLLM vulnerabilities via adversarial image-text attacks in two phases: white-box and black-box evaluations. The competition results highlight ongoing challenges in securing MLLMs and provide valuable guidance for developing stronger defense mechanisms. The challenge establishes new benchmarks for MLLM safety evaluation and lays groundwork for advancing safer multimodal AI systems. The code and data for this challenge are openly available at this https URL.

11 Aug 2025

Invoking external tools enables Large Language Models (LLMs) to perform complex, real-world tasks, yet selecting the correct tool from large, hierarchically-structured libraries remains a significant challenge. The limited context windows of LLMs and noise from irrelevant options often lead to low selection accuracy and high computational costs. To address this, we propose the Hierarchical Gaussian Mixture Framework (HGMF), a probabilistic pruning method for scalable tool invocation. HGMF first maps the user query and all tool descriptions into a unified semantic space. The framework then operates in two stages: it clusters servers using a Gaussian Mixture Model (GMM) and filters them based on the query's likelihood. Subsequently, it applies the same GMM-based clustering and filtering to the tools associated with the selected servers. This hierarchical process produces a compact, high-relevance candidate set, simplifying the final selection task for the LLM. Experiments on a public dataset show that HGMF significantly improves tool selection accuracy while reducing inference latency, confirming the framework's scalability and effectiveness for large-scale tool libraries.

18F-fluorodeoxyglucose (18F-FDG) Positron Emission Tomography (PET) imaging

usually needs a full-dose radioactive tracer to obtain satisfactory diagnostic

results, which raises concerns about the potential health risks of radiation

exposure, especially for pediatric patients. Reconstructing the low-dose PET

(L-PET) images to the high-quality full-dose PET (F-PET) ones is an effective

way that both reduces the radiation exposure and remains diagnostic accuracy.

In this paper, we propose a resource-efficient deep learning framework for

L-PET reconstruction and analysis, referred to as transGAN-SDAM, to generate

F-PET from corresponding L-PET, and quantify the standard uptake value ratios

(SUVRs) of these generated F-PET at whole brain. The transGAN-SDAM consists of

two modules: a transformer-encoded Generative Adversarial Network (transGAN)

and a Spatial Deformable Aggregation Module (SDAM). The transGAN generates

higher quality F-PET images, and then the SDAM integrates the spatial

information of a sequence of generated F-PET slices to synthesize whole-brain

F-PET images. Experimental results demonstrate the superiority and rationality

of our approach.

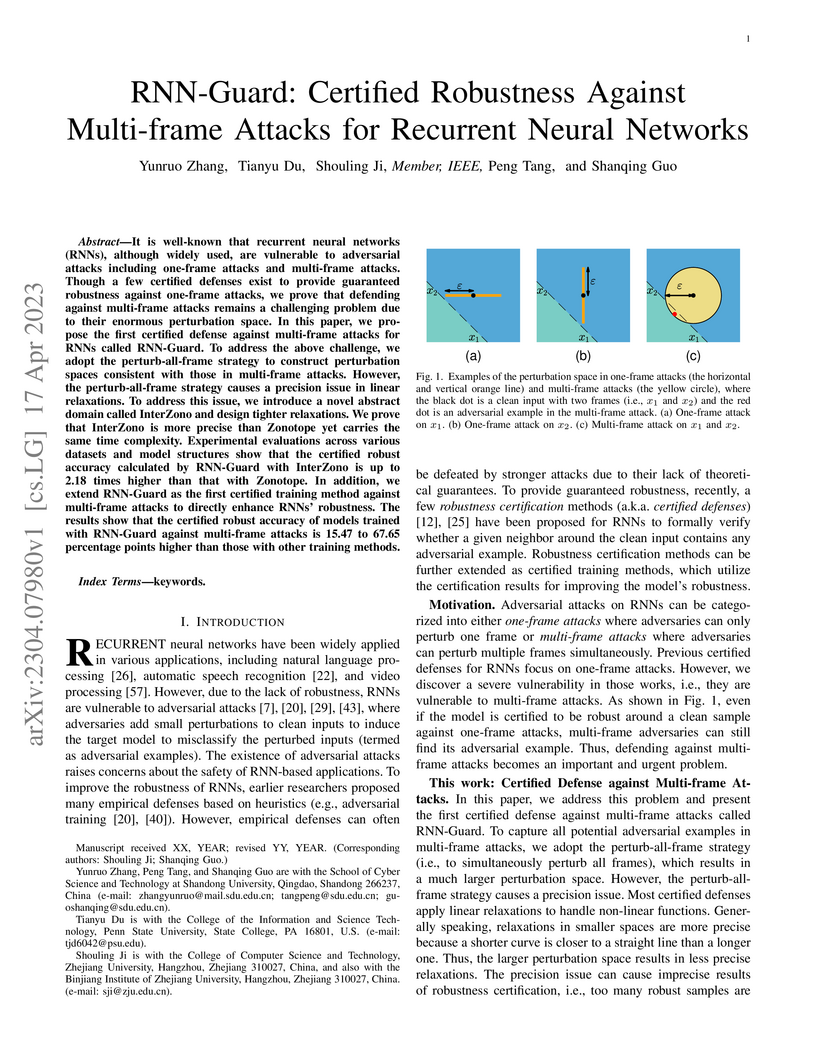

It is well-known that recurrent neural networks (RNNs), although widely used,

are vulnerable to adversarial attacks including one-frame attacks and

multi-frame attacks. Though a few certified defenses exist to provide

guaranteed robustness against one-frame attacks, we prove that defending

against multi-frame attacks remains a challenging problem due to their enormous

perturbation space. In this paper, we propose the first certified defense

against multi-frame attacks for RNNs called RNN-Guard. To address the above

challenge, we adopt the perturb-all-frame strategy to construct perturbation

spaces consistent with those in multi-frame attacks. However, the

perturb-all-frame strategy causes a precision issue in linear relaxations. To

address this issue, we introduce a novel abstract domain called InterZono and

design tighter relaxations. We prove that InterZono is more precise than

Zonotope yet carries the same time complexity. Experimental evaluations across

various datasets and model structures show that the certified robust accuracy

calculated by RNN-Guard with InterZono is up to 2.18 times higher than that

with Zonotope. In addition, we extend RNN-Guard as the first certified training

method against multi-frame attacks to directly enhance RNNs' robustness. The

results show that the certified robust accuracy of models trained with

RNN-Guard against multi-frame attacks is 15.47 to 67.65 percentage points

higher than those with other training methods.

30 May 2025

Researchers from Om AI Research and Zhejiang University introduce AGORA, a unified framework that enables standardized development and comprehensive evaluation of diverse language agent algorithms through a modular, graph-based architecture. Extensive experiments on mathematical reasoning and high-resolution image question-answering reveal that simpler algorithms often demonstrate robust performance with lower computational overhead, and prompt engineering significantly impacts results.

We introduce OmChat, a model designed to excel in handling long contexts and video understanding tasks. OmChat's new architecture standardizes how different visual inputs are processed, making it more efficient and adaptable. It uses a dynamic vision encoding process to effectively handle images of various resolutions, capturing fine details across a range of image qualities. OmChat utilizes an active progressive multimodal pretraining strategy, which gradually increases the model's capacity for long contexts and enhances its overall abilities. By selecting high-quality data during training, OmChat learns from the most relevant and informative data points. With support for a context length of up to 512K, OmChat demonstrates promising performance in tasks involving multiple images and videos, outperforming most open-source models in these benchmarks. Additionally, OmChat proposes a prompting strategy for unifying complex multimodal inputs including single image text, multi-image text and videos, and achieving competitive performance on single-image benchmarks. To further evaluate the model's capabilities, we proposed a benchmark dataset named Temporal Visual Needle in a Haystack. This dataset assesses OmChat's ability to comprehend temporal visual details within long videos. Our analysis highlights several key factors contributing to OmChat's success: support for any-aspect high image resolution, the active progressive pretraining strategy, and high-quality supervised fine-tuning datasets. This report provides a detailed overview of OmChat's capabilities and the strategies that enhance its performance in visual understanding.

There are no more papers matching your filters at the moment.