Ask or search anything...

Researchers from the University of Cologne developed a system leveraging DistilBERT to classify song genres and predict both a song's success (based on views) and its approximate release year solely from lyrical content. The system achieved 65% accuracy for genre classification, 79% accuracy for success prediction, and an RMSE of 14.18 for release year estimation using extracted BERT embeddings with SVR.

View blogResearchers from Argonne National Laboratory and the University of Illinois Urbana-Champaign, with over 50 collaborators, introduce CritPt, a benchmark to evaluate Large Language Models (LLMs) on unpublished, research-level physics problems. The study found that current LLMs achieve very low accuracy on end-to-end scientific challenges (best base model at 5.7%) but show limited potential on modular sub-tasks, revealing a significant gap in their ability for genuine scientific reasoning and consistent reliability.

View blogTimeCMA introduces a framework for multivariate time series forecasting that leverages large language models (LLMs) through a novel cross-modality alignment module to generate disentangled yet robust time series embeddings. This approach, combined with efficient last token embedding storage, consistently outperforms state-of-the-art baselines across eight datasets while significantly reducing computational overhead.

View blogThis survey paper from a diverse group of researchers including SenseTime Research provides a comprehensive overview of Reasoning Agentic Retrieval-Augmented Generation (RAG) systems. It introduces a taxonomy categorizing these systems into 'Predefined Reasoning' (System 1) and 'Agentic Reasoning' (System 2), addressing the limitations of basic RAG for complex industry challenges by detailing advancements in dynamic information acquisition and synthesis.

View blogA Spatial-Temporal Large Language Model (ST-LLM) is developed to enhance traffic prediction by explicitly modeling both spatial and temporal dependencies within a large language model framework. It consistently achieved the best prediction performance on real-world traffic datasets, outperforming existing state-of-the-art models and demonstrating strong generalization capabilities in data-constrained scenarios.

View blogThe Event Horizon Telescope Collaboration conducted the first multi-epoch polarimetric imaging of M87* at event-horizon scales, observing a stable black hole shadow diameter while detecting substantial year-to-year variability in the ring's azimuthal brightness and linear polarization patterns, along with initial constraints on extended jet emission.

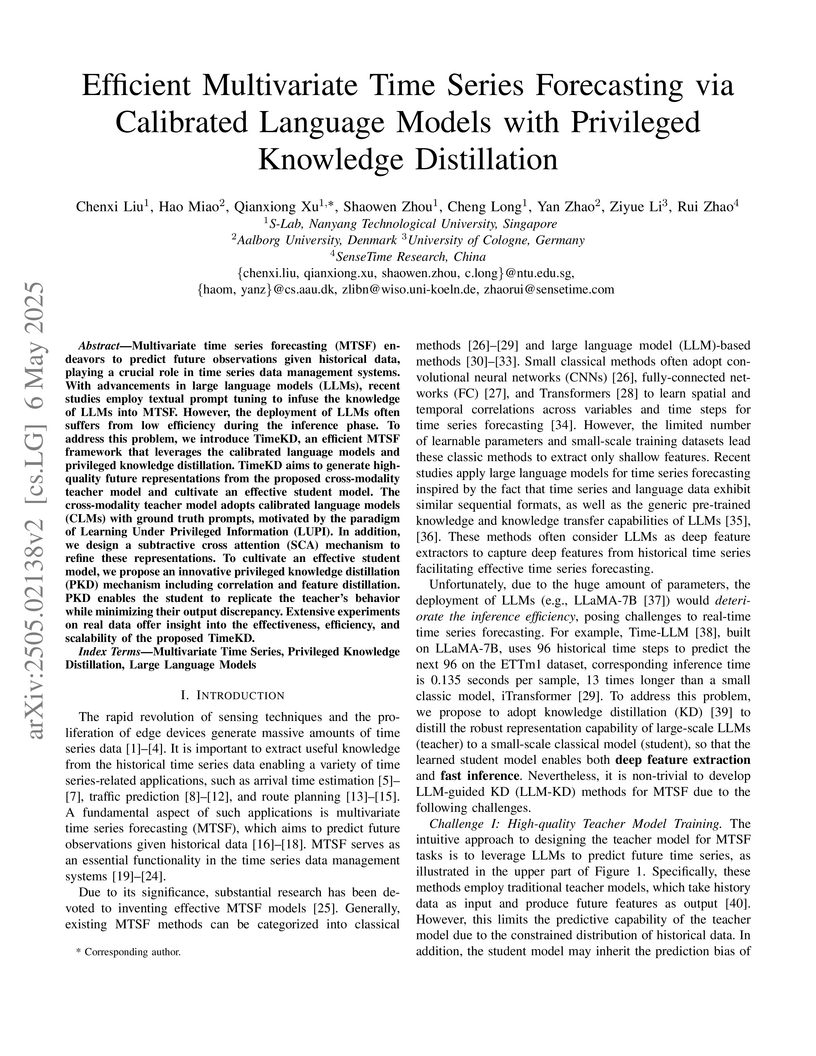

View blogTimeKD introduces an efficient framework for multivariate time series forecasting that leverages the robust representation capabilities of Large Language Models while mitigating their high inference costs. This is achieved through a novel privileged knowledge distillation method, which enables a lightweight student model to effectively learn from a calibrated LLM teacher that processes privileged information (future ground truth data) during training, leading to improved forecasting accuracy and superior computational efficiency.

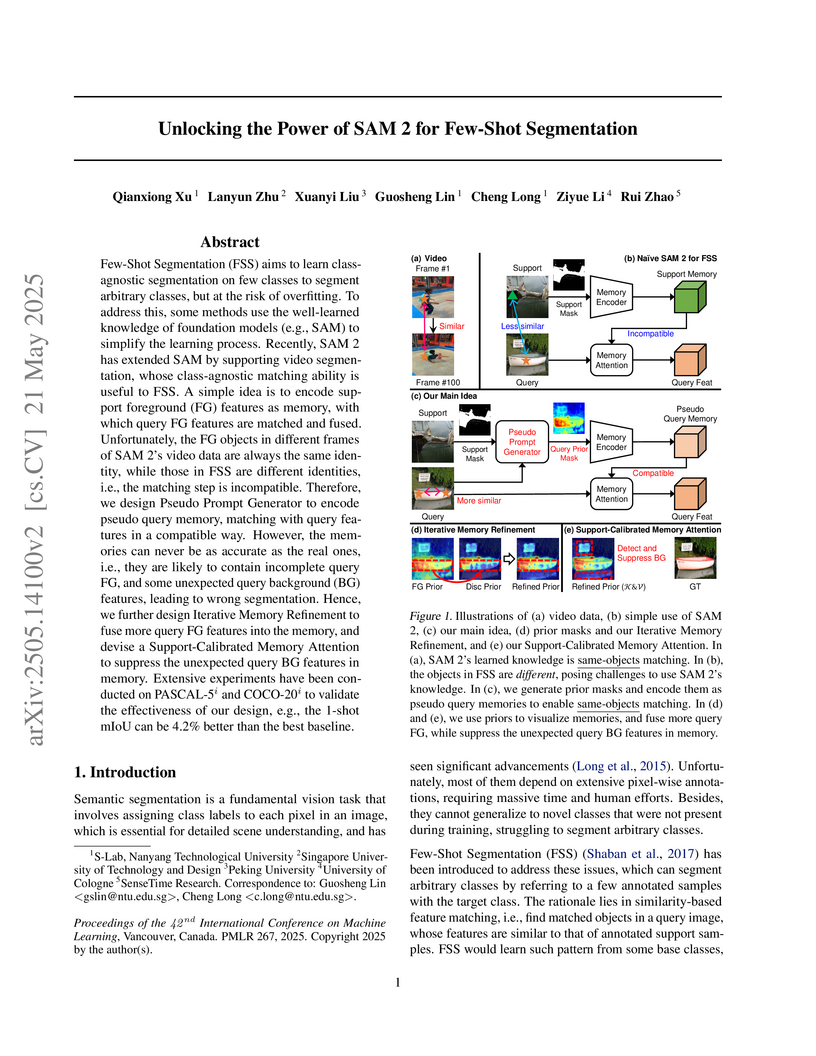

View blogThe FSSAM framework effectively adapts the SAM 2 foundation model for few-shot semantic segmentation by converting the task into a "same-object matching" problem, aligning with SAM 2's core competence. This approach, incorporating a Pseudo Prompt Generator, Iterative Memory Refinement, and Support-Calibrated Memory Attention, achieves new state-of-the-art performance on PASCAL-5i and COCO-20i datasets, demonstrating up to a 4.7% mIoU improvement over prior foundation-based methods.

View blogResearchers from the University of Cologne and Rotterdam School of Management found that the impact of Large Language Models on student learning depends on how students use them and their existing knowledge. While LLM access generally showed no average effect, substitutive use (e.g., copy-pasting solutions) negatively affected long-term learning and understanding, especially for students with lower prior knowledge, who experienced reduced comprehension.

View blogSingular Vector Fine-Tuning (SVFT) proposes a parameter-efficient fine-tuning approach that leverages the singular value decomposition of pre-trained weight matrices for targeted updates. This method achieves performance competitive with full fine-tuning, recovering up to 96% of its accuracy while updating as little as 0.006% to 0.25% of the model parameters across vision and language tasks.

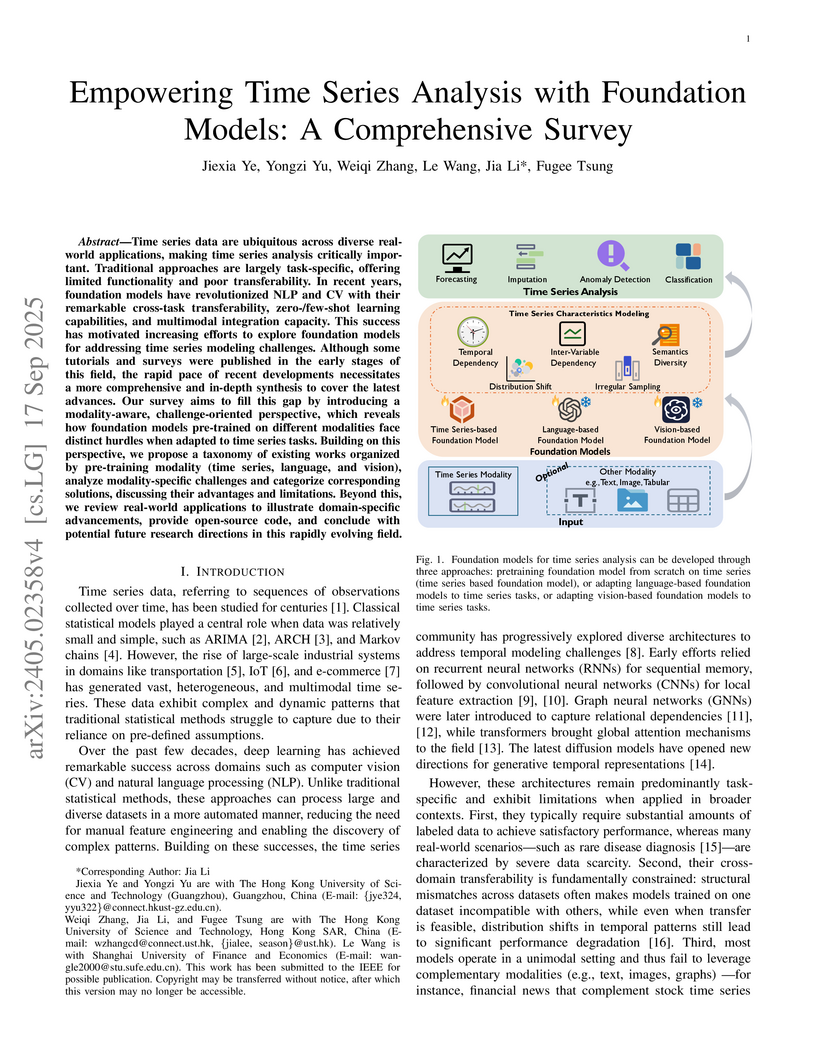

View blogThis survey provides a comprehensive analysis of cross-modality modeling for time series analytics in the LLM era, addressing the challenge of integrating textual and numerical time series data. It categorizes approaches by textual data types and integration strategies, finding that incorporating textual data can improve forecasting performance, with numerical and statistical prompts, alongside alignment strategies, showing particular promise.

View blogResearchers from KAUST, Tsinghua University, and other institutions developed XTraffic, the first comprehensive dataset that spatiotemporally aligns traffic time-series data with incident records and detailed road infrastructure attributes. This dataset, collected from California's transportation system throughout 2023, enables the development of more explainable and causally-aware traffic models by integrating previously separated information.

View blog

University of Toronto

University of Toronto California Institute of Technology

California Institute of Technology

Peking University

Peking University Nanyang Technological University

Nanyang Technological University

University of Washington

University of Washington

ETH Zurich

ETH Zurich

Aalborg University

Aalborg University

University of Texas at Austin

University of Texas at Austin

University of Amsterdam

University of Amsterdam

Chinese Academy of Sciences

Chinese Academy of Sciences University of Notre Dame

University of Notre Dame