Jožef Stefan Institute

05 Oct 2025

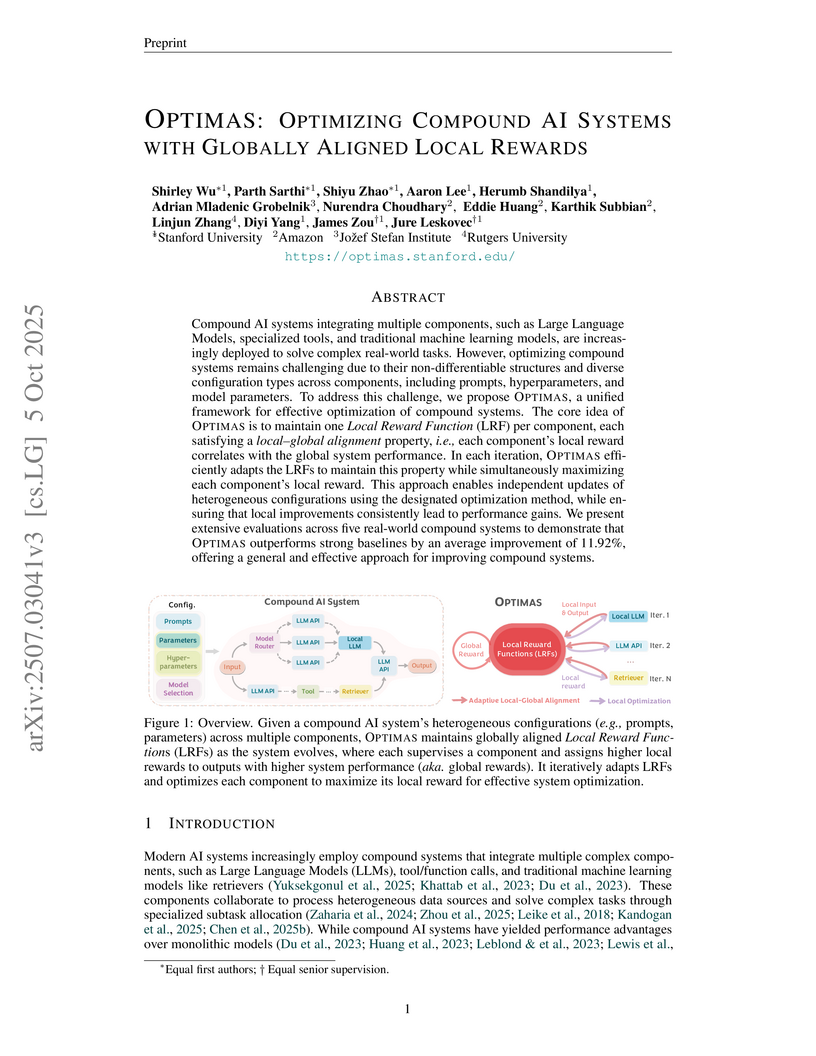

OPTIMAS is a framework for optimizing compound AI systems, utilizing globally aligned local reward functions (LRFs) to enable efficient, decentralized optimization of heterogeneous components. It achieved an average relative improvement of 11.92% over strong baselines across five real-world benchmarks, while also demonstrating high data efficiency and interpretability of its LRFs.

A survey systematically reviews imitation learning (IL) research for contact-rich robotic tasks, detailing demonstration collection, learning algorithms, and real-world applications. It highlights the growing role of multimodal data and foundation models in advancing robotic capabilities for complex physical interactions, while also identifying key challenges and future directions in the field.

Researchers from the University of Auckland and the Jožef Stefan Institute developed Counterfactual-CI, an end-to-end method that leverages Large Language Models to extract causal graphs and perform counterfactual causal inference directly from unstructured natural language. The work reveals that while LLMs excel at discovering causal relationships in text, their capacity for accurate counterfactual reasoning is limited by their ability to perform logical predictions on given causal inputs, even when the correct causal structure is provided.

A comprehensive survey on automated scientific discovery critically analyzes its history, current state, and future, consolidating efforts from equation discovery to autonomous systems. It identifies a key research gap in integrating interpretable knowledge generation with autonomous experimentation, proposing a path towards AI scientists capable of producing human-interpretable scientific knowledge.

14 Sep 2024

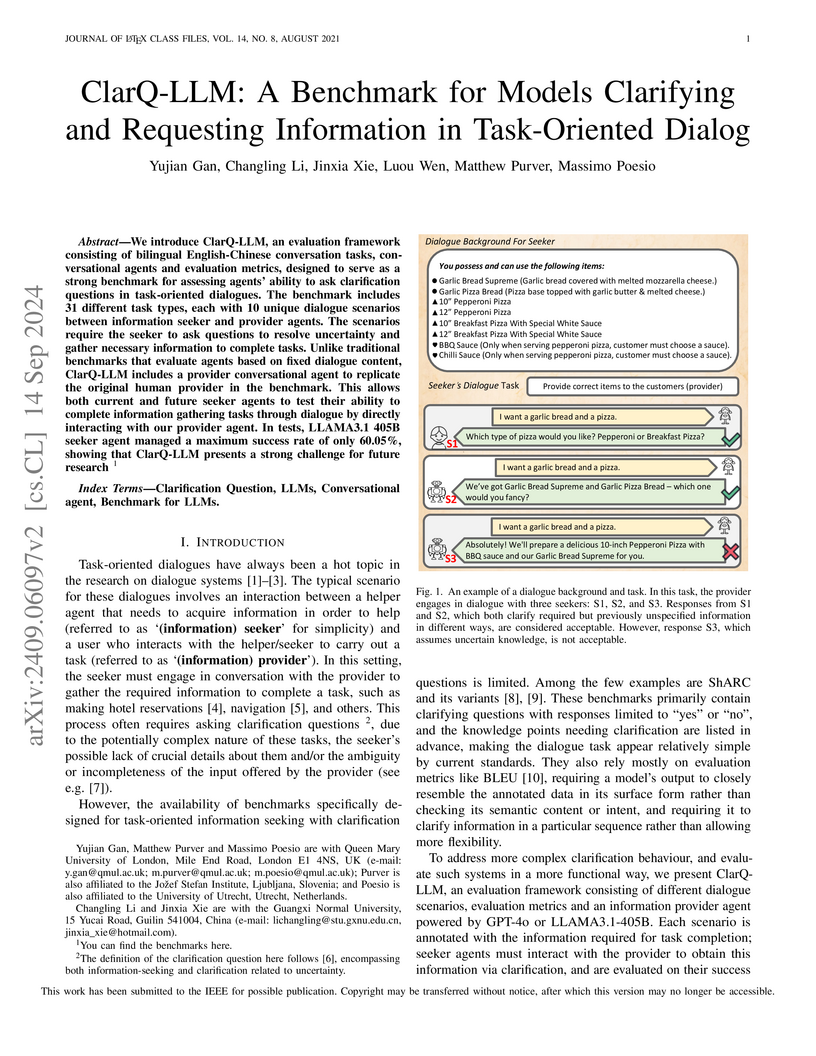

ClarQ-LLM presents a comprehensive benchmark designed to assess large language models' capacity for asking effective clarification questions in complex task-oriented dialogues. Experiments using this benchmark indicate that even state-of-the-art LLMs achieve success rates of only around 50-60%, significantly trailing human performance of 80-85%, and often struggle with uncertainty analysis, context retention, and conciseness.

Multimodal machine learning (MML) is rapidly reshaping the way mental-health disorders are detected, characterized, and longitudinally monitored. Whereas early studies relied on isolated data streams -- such as speech, text, or wearable signals -- recent research has converged on architectures that integrate heterogeneous modalities to capture the rich, complex signatures of psychiatric conditions. This survey provides the first comprehensive, clinically grounded synthesis of MML for mental health. We (i) catalog 26 public datasets spanning audio, visual, physiological signals, and text modalities; (ii) systematically compare transformer, graph, and hybrid-based fusion strategies across 28 models, highlighting trends in representation learning and cross-modal alignment. Beyond summarizing current capabilities, we interrogate open challenges: data governance and privacy, demographic and intersectional fairness, evaluation explainability, and the complexity of mental health disorders in multimodal settings. By bridging methodological innovation with psychiatric utility, this survey aims to orient both ML researchers and mental-health practitioners toward the next generation of trustworthy, multimodal decision-support systems.

A survey details how Literature-Based Discovery (LBD) has advanced between 2000 and 2025, particularly through the integration of artificial intelligence technologies such as knowledge graphs, deep learning, and large language models, while also outlining remaining challenges and future research directions.

This paper introduces an approach that leverages large language models (LLMs) as frozen feature encoders to improve deep learning performance on tabular data. The method converts tabular feature-value pairs into natural language sentences, generates embeddings with an LLM, and then feeds these to a shallow neural network and a downstream predictive model, achieving improved accuracy across various datasets and narrowing the performance gap with tree-based methods.

04 Sep 2025

Probing the CP nature of the Higgs Yukawa couplings is a promising avenue for unraveling new physics effects. In this work, we investigate the possible single- and two-field UV origins of CP-violating leptonic Yukawa couplings, using the Standard Model Effective Field Theory as a stepping stone. We demonstrate a rich set of constraints on the UV model parameters, including direct Higgs measurements, electric and magnetic dipole moments of leptons, charged lepton flavor violating observables, and electroweak precision tests. Studying representative flavor assumptions we find that the precision constraints are often, but not always, more constraining than the dedicated LHC analyses of modified leptonic Yukawa couplings.

Harvard University

Harvard University University of Chicago

University of Chicago Stanford UniversityThe University of EdinburghJožef Stefan Institute

Stanford UniversityThe University of EdinburghJožef Stefan Institute NVIDIAOxford Immune Algorithmics

NVIDIAOxford Immune Algorithmics University of SouthamptonThe Alan Turing InstituteSanta Fe Institute

University of SouthamptonThe Alan Turing InstituteSanta Fe Institute King’s College LondonKing Abdullah University of Science and TechnologyKarolinska InstitutetZuse Institute BerlinFrancis Crick InstituteAllen Discovery Center at Tufts UniversityNetwork Rail

King’s College LondonKing Abdullah University of Science and TechnologyKarolinska InstitutetZuse Institute BerlinFrancis Crick InstituteAllen Discovery Center at Tufts UniversityNetwork RailA comprehensive review analyzes how Large Language Models can function as 'creative engines' in scientific discovery, augmenting each stage of the scientific method from hypothesis generation to experimentation, highlighting their potential beyond traditional research assistance.

University of WashingtonAllen Institute for Artificial Intelligence

University of WashingtonAllen Institute for Artificial Intelligence Georgia Institute of TechnologyLMU MunichIT University of CopenhagenJožef Stefan InstituteBeijing Academy of Artificial Intelligence

Georgia Institute of TechnologyLMU MunichIT University of CopenhagenJožef Stefan InstituteBeijing Academy of Artificial Intelligence Sorbonne UniversitéINRIA ParisUniversity of BathNational UniversityBen Gurion UniversityComenius University in BratislavaCiscoDuolingo

Sorbonne UniversitéINRIA ParisUniversity of BathNational UniversityBen Gurion UniversityComenius University in BratislavaCiscoDuolingoWe introduce Universal NER (UNER), an open, community-driven project to develop gold-standard NER benchmarks in many languages. The overarching goal of UNER is to provide high-quality, cross-lingually consistent annotations to facilitate and standardize multilingual NER research. UNER v1 contains 18 datasets annotated with named entities in a cross-lingual consistent schema across 12 diverse languages. In this paper, we detail the dataset creation and composition of UNER; we also provide initial modeling baselines on both in-language and cross-lingual learning settings. We release the data, code, and fitted models to the public.

This survey paper systematically categorizes and analyzes the synergy between Knowledge Graphs (KGs) and Large Language Models (LLMs), identifying critical challenges in scalability, computational efficiency, and data quality that differentiate it from prior reviews. It provides a structured framework for understanding current integration approaches and outlines key open problems for future research in creating more reliable and interpretable AI systems.

This paper addresses the Longest Filled Common Subsequence (LFCS) problem, a challenging NP-hard problem with applications in bioinformatics, including gene mutation prediction and genomic data reconstruction. Existing approaches, including exact, metaheuristic, and approximation algorithms, have primarily been evaluated on small-sized instances, which offer limited insights into their scalability. In this work, we introduce a new benchmark dataset with significantly larger instances and demonstrate that existing datasets lack the discriminative power needed to meaningfully assess algorithm performance at scale. To solve large instances efficiently, we utilize an adaptive Construct, Merge, Solve, Adapt (CMSA) framework that iteratively generates promising subproblems via component-based construction and refines them using feedback from prior iterations. Subproblems are solved using an external black-box solver. Extensive experiments on both standard and newly introduced benchmarks show that the proposed adaptive CMSA achieves state-of-the-art performance, outperforming five leading methods. Notably, on 1,510 problem instances with known optimal solutions, our approach solves 1,486 of them -- achieving over 99.9% optimal solution quality and demonstrating exceptional scalability. We additionally propose a novel application of LFCS for song identification from degraded audio excerpts as an engineering contribution, using real-world energy-profile instances from popular music. Finally, we conducted an empirical explainability analysis to identify critical feature combinations influencing algorithm performance, i.e., the key problem features contributing to success or failure of the approaches across different instance types are revealed.

Data is vital in enabling machine learning models to advance research and practical applications in finance, where accurate and robust models are essential for investment and trading decision-making. However, real-world data is limited despite its quantity, quality, and variety. The data shortage of various financial assets directly hinders the performance of machine learning models designed to trade and invest in these assets. Generative methods can mitigate this shortage. In this paper, we introduce a set of novel techniques for time series data generation (we name them Fiaingen) and assess their performance across three criteria: (a) overlap of real-world and synthetic data on a reduced dimensionality space, (b) performance on downstream machine learning tasks, and (c) runtime performance. Our experiments demonstrate that the methods achieve state-of-the-art performance across the three criteria listed above. Synthetic data generated with Fiaingen methods more closely mirrors the original time series data while keeping data generation time close to seconds - ensuring the scalability of the proposed approach. Furthermore, models trained on it achieve performance close to those trained with real-world data.

Katarina Trailović analytically demonstrated that the collision of two vacuum bubbles during an early Universe first-order phase transition yields a linearly polarized gravitational wave signal. The study also characterized the conditions under which such two-bubble transitions can occur and found the resulting signals to be detectable by future observatories like LISA and the Einstein Telescope.

Scar eigenstates in a many-body system refers to a small subset of non-thermal finite energy density eigenstates embedded into an otherwise thermal spectrum. This novel non-thermal behaviour has been seen in recent experiments simulating a one-dimensional PXP model with a kinetically-constrained local Hilbert space realized by a chain of Rydberg atoms. We probe these small sets of special eigenstates starting from particular initial states by computing the spread complexity associated to time evolution of the PXP hamiltonian. Since the scar subspace in this model is embedded only loosely, the scar states form a weakly broken representation of the Lie Algebra. We demonstrate why a careful usage of the Forward Scattering Approximation (or similar strategies thereof) is required to extract an appropriate set of Lanczos coefficients in this case as the consequence of this approximate symmetry. This leads to a well defined notion of a closed Krylov subspace and consequently, that of spread complexity. We show how the spread complexity shows approximate revivals starting from both ∣Z2⟩ and ∣Z3⟩ states and how these revivals can be made more accurate by adding optimal perturbations to the bare Hamiltonian. We also investigate the case of the vacuum as the initial state, where revivals can be stabilized using an iterative process of adding few-body terms.

Addressing the mismatch between natural language descriptions and the corresponding SQL queries is a key challenge for text-to-SQL translation. To bridge this gap, we propose an SQL intermediate representation (IR) called Natural SQL (NatSQL). Specifically, NatSQL preserves the core functionalities of SQL, while it simplifies the queries as follows: (1) dispensing with operators and keywords such as GROUP BY, HAVING, FROM, JOIN ON, which are usually hard to find counterparts for in the text descriptions; (2) removing the need for nested subqueries and set operators; and (3) making schema linking easier by reducing the required number of schema items. On Spider, a challenging text-to-SQL benchmark that contains complex and nested SQL queries, we demonstrate that NatSQL outperforms other IRs, and significantly improves the performance of several previous SOTA models. Furthermore, for existing models that do not support executable SQL generation, NatSQL easily enables them to generate executable SQL queries, and achieves the new state-of-the-art execution accuracy.

03 Mar 2025

Mar Bastero-Gil and colleagues resolved a theoretical inconsistency regarding the Schwinger current in de Sitter space by implementing a revised renormalization approach that accounts for a necessary tachyonic photon mass. Their work produced a physically consistent, finite, and positive Schwinger current for various charged particles, even in the massless limit.

29 Aug 2025

Next-generation experiments, such as the Deep Underground Neutrino Experiment and the European Spallation Source, are set to dramatically improve sensitivity to neutron-antineutron oscillation that is a direct probe of ΔB=2 baryon number violation. The discovery of such a rare process would indicate physics beyond the Standard Model and could point to specific unified theories that allow observable n−n transitions. We accordingly examine n−n oscillations within a unified framework that accounts for charged fermion masses and generates viable neutrino masses via the seesaw mechanism. More specifically, we show that n−n oscillations can arise from two specific topologies within two distinct SU(5) scenarios. One topology requires a presence of two color-sextet scalars in the Type II seesaw framework, whereas the other involves a scalar sextet and a color-octet fermion in the Type III seesaw framework. While the former topology can be realized in the SO(10)/Pati-Salam frameworks, the latter finds a natural embedding in SU(5), which constitutes one of the key novelties of our work. Remarkably enough, the same dynamics responsible for fermion masses also induces baryon number violation, thus linking n−n oscillations to the flavor structure of the theory. We show that upcoming searches of such ΔB=2 processes can probe new colored states with masses up to 1011 GeV that is well beyond the reach of colliders. This positions n−n oscillations as a rare low-energy portal to grand unification and ultra-heavy new physics.

Statistical mechanics provides a framework for describing the physics of

large, complex many-body systems using only a few macroscopic parameters to

determine the state of the system. For isolated quantum many-body systems, such

a description is achieved via the eigenstate thermalization hypothesis (ETH),

which links thermalization, ergodicity and quantum chaotic behavior. However,

tendency towards thermalization is not observed at finite system sizes and

evolution times in a robust many-body localization (MBL) regime found

numerically and experimentally in the dynamics of interacting many-body systems

at strong disorder. Although the phenomenology of the MBL regime is

well-established, the central question remains unanswered: under what

conditions does the MBL regime give rise to an MBL phase in which the

thermalization does not occur even in the asymptotic limit of infinite system

size and evolution time?

This review focuses on recent numerical investigations aiming to clarify the

status of the MBL phase, and it establishes the critical open questions about

the dynamics of disordered many-body systems. Persistent finite size drifts

towards ergodicity consistently emerge in spectral properties of disordered

many-body systems, excluding naive single-parameter scaling hypothesis and

preventing comprehension of the status of the MBL phase. The drifts are related

to tendencies towards thermalization and non-vanishing transport observed in

the dynamics of many-body systems, even at strong disorder. These phenomena

impede understanding of microscopic processes at the ETH-MBL crossover.

Nevertheless, the abrupt slowdown of dynamics with increasing disorder strength

suggests the proximity of the MBL phase. This review concludes that the

questions about thermalization and its failure in disordered many-body systems

remain a captivating area open for further explorations.

There are no more papers matching your filters at the moment.