Advances in machine learning over the past decade have resulted in a proliferation of algorithmic applications for encoding, characterizing, and acting on complex data that may contain many high-dimensional features. Recently, the emergence of deep-learning models trained across very large datasets has created a new paradigm for machine learning in the form of Foundation Models. Foundation Models are programs trained on very large and broad datasets with an extensive number of parameters. Once built, these powerful and flexible models can be utilized in less resource-intensive ways to build many different downstream applications that can integrate previously disparate, multimodal data. These applications can be developed rapidly and with much less machine learning expertise. The necessary infrastructure and the models themselves are already being established within agencies such as NASA and ESA. At NASA, this work spans several divisions of the Science Mission Directorate and includes the NASA Goddard and INDUS Large Language Models and the Prithvi Geospatial Foundation Model. ESA initiatives to bring Foundation Models to Earth observations have led to the development of TerraMind. A workshop was held by the NASA Ames Research Center and the SETI Institute in February 2025 to investigate the potential of Foundation Models for astrobiological research and to determine what steps would be needed to build and utilize such a model or models. This paper shares the findings and recommendations of that workshop and describes clear near-term and future opportunities in the development of a Foundation Model (or Models) for astrobiology applications. These applications would include a biosignature, or life characterization, task; a mission development and operations task; and a natural language task for integrating and supporting astrobiology research needs.

19 Jan 2024

Today's experimental noisy quantum processors can compete with and surpass all known algorithms on state-of-the-art supercomputers for the computational benchmark task of Random Circuit Sampling [1-5]. Additionally, a circuit-based quantum simulation of quantum information scrambling [6], which measures a local observable, has already outperformed standard full wave function simulation algorithms, e.g., exact Schrödinger evolution and Matrix Product States (MPS). However, this experiment has not yet surpassed tensor network contraction for computing the value of the observable. Based on those studies, we provide a unified framework that utilizes the underlying effective circuit volume to explain the tradeoff between the experimentally achievable signal-to-noise ratio for a specific observable and the corresponding computational cost. We apply this framework to recent quantum processor experiments of Random Circuit Sampling [5], quantum information scrambling [6], and a Floquet circuit unitary [7]. This allows us to reproduce the results of Ref. [7] in less than one second per data point using one GPU.

We present Prophecy, a tool for automatically inferring formal properties of feed-forward neural networks. Prophecy is based on the observation that a significant part of the logic of feed-forward networks is captured in the activation status of the neurons at inner layers. Prophecy works by extracting rules based on neuron activations (values or on/off statuses) as preconditions that imply certain desirable output property, e.g., the prediction being a certain class. These rules represent network properties captured in the hidden layers that imply the desired output behavior. We present the architecture of the tool, highlight its features and demonstrate its usage on different types of models and output properties. We present an overview of its applications, such as inferring and proving formal explanations of neural networks, compositional verification, run-time monitoring, repair, and others. We also show novel results highlighting its potential in the era of large vision-language models.

We introduce and analyze a model for self-reconfigurable robots made up of unit-cube modules. Compared to past models, our model aims to newly capture two important practical aspects of real-world robots. First, modules often do not occupy an exact unit cube, but rather have features like bumps extending outside the allotted space so that modules can interlock. Thus, for example, our model forbids modules from squeezing in between two other modules that are one unit distance apart. Second, our model captures the practical scenario of many passive modules assembled by a single robot, instead of requiring all modules to be able to move on their own.

We prove two universality results. First, with a supply of auxiliary modules, we show that any connected polycube structure can be constructed by a carefully aligned plane sweep. Second, without additional modules, we show how to construct any structure for which a natural notion of external feature size is at least a constant; this property largely consolidates forbidden-pattern properties used in previous works on reconfigurable modular robots.

21 Jun 2024

Quantum computing is one of the most enticing computational paradigms with the potential to revolutionize diverse areas of future-generation computational systems. While quantum computing hardware has advanced rapidly, from tiny laboratory experiments to quantum chips that can outperform even the largest supercomputers on specialized computational tasks, these noisy intermediate-scale quantum (NISQ) processors are still too small and non-robust to be directly useful for any real-world applications. In this paper, we describe NASA's work in assessing and advancing the potential of quantum computing. We discuss advances in algorithms, both near- and longer-term, and the results of our explorations on current hardware as well as with simulations, including illustrating the benefits of algorithm-hardware co-design in the NISQ era. This work also includes physics-inspired classical algorithms that can be used at application scale today. We discuss innovative tools supporting the assessment and advancement of quantum computing and describe improved methods for simulating quantum systems of various types on high-performance computing systems that incorporate realistic error models. We provide an overview of recent methods for benchmarking, evaluating, and characterizing quantum hardware for error mitigation, as well as insights into fundamental quantum physics that can be harnessed for computational purposes.

We introduce a self-attending task generative adversarial network (SATGAN) and apply it to the problem of augmenting synthetic high-contrast scientific imagery of resident space objects with realistic noise patterns and sensor characteristics learned from collected data. Augmenting these synthetic data is challenging due to the highly localized nature of semantic content in the data that must be preserved. Real collected images are used to teach a network what images from a given class of sensor should look like. The trained network then acts as a filter on noiseless context images and outputs realistic-looking fakes with semantic content unaltered. The architecture is inspired by conditional GANs but is modified to include a task network that preserves semantic information through augmentation. Additionally, the architecture is shown to reduce instances of hallucinatory objects or obfuscation of semantic content in context images representing space observation scenes.

15 Jul 2021

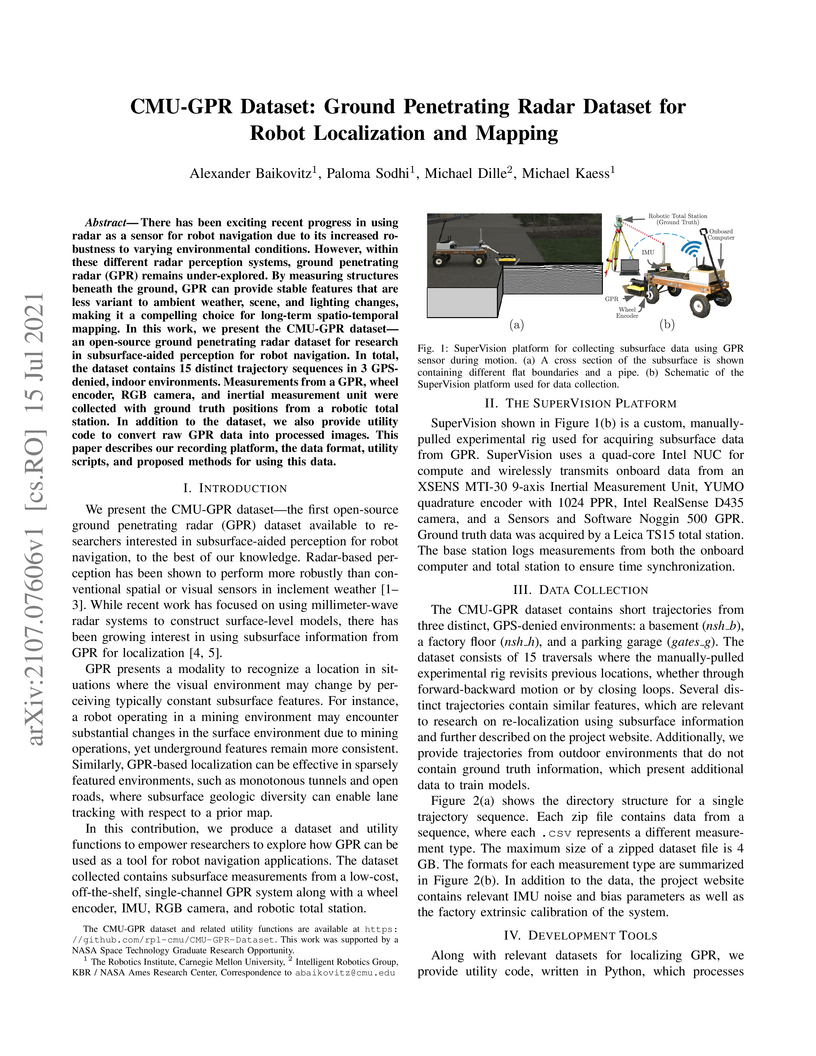

There has been exciting recent progress in using radar as a sensor for robot navigation due to its increased robustness to varying environmental conditions. However, within these different radar perception systems, ground penetrating radar (GPR) remains under-explored. By measuring structures beneath the ground, GPR can provide stable features that are less variant to ambient weather, scene, and lighting changes, making it a compelling choice for long-term spatio-temporal mapping. In this work, we present the CMU-GPR dataset--an open-source ground penetrating radar dataset for research in subsurface-aided perception for robot navigation. In total, the dataset contains 15 distinct trajectory sequences in 3 GPS-denied, indoor environments. Measurements from a GPR, wheel encoder, RGB camera, and inertial measurement unit were collected with ground truth positions from a robotic total station. In addition to the dataset, we also provide utility code to convert raw GPR data into processed images. This paper describes our recording platform, the data format, utility scripts, and proposed methods for using this data.

04 Nov 2023

Quantum algorithms have been widely studied in the context of combinatorial optimization problems. While this endeavor can often analytically and practically achieve quadratic speedups, theoretical and numeric studies remain limited, especially compared to the study of classical algorithms. We propose and study a new class of hybrid approaches to quantum optimization, termed Iterative Quantum Algorithms, which in particular generalizes the Recursive Quantum Approximate Optimization Algorithm. This paradigm can incorporate hard problem constraints, which we demonstrate by considering the Maximum Independent Set (MIS) problem. We show that, for QAOA with depth p=1, this algorithm performs exactly the same operations and selections as the classical greedy algorithm for MIS. We then turn to deeper p>1 circuits and other ways to modify the quantum algorithm that can no longer be easily mimicked by classical algorithms, and empirically confirm improved performance. Our work demonstrates the practical importance of incorporating proven classical techniques into more effective hybrid quantum-classical algorithms.
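
For readers unfamiliar with the classical baseline mentioned in this abstract, the following is a minimal sketch of a greedy heuristic for MIS. It assumes the common minimum-degree variant (repeatedly select a vertex of lowest remaining degree, then delete it and its neighbours); the abstract does not state which greedy rule is meant, and the function name `greedy_mis` and the tie-breaking by vertex label are illustrative choices, not taken from the paper.

```python
def greedy_mis(adj):
    """Min-degree greedy heuristic for Maximum Independent Set.

    adj: dict mapping each vertex to the set of its neighbours
    (undirected graph). Returns the selected vertices in pick order.
    Assumption: "greedy" means the minimum-degree rule.
    """
    # Work on a copy so the caller's graph is not modified.
    remaining = {v: set(nbrs) for v, nbrs in adj.items()}
    independent = []
    while remaining:
        # Pick a vertex of minimum remaining degree (ties broken by label).
        v = min(remaining, key=lambda u: (len(remaining[u]), u))
        independent.append(v)
        # Delete the chosen vertex together with all of its neighbours.
        removed = remaining[v] | {v}
        for u in removed:
            remaining.pop(u, None)
        # Drop the deleted vertices from the surviving adjacency sets.
        for nbrs in remaining.values():
            nbrs -= removed
    return independent


# Path graph 0-1-2-3-4.
path = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}
print(greedy_mis(path))
```

The heuristic carries no optimality guarantee in general; it is shown only to make the classical reference point concrete.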

The exceptional progress in the field of machine learning (ML) in recent years has attracted a lot of interest in using this technology in aviation. Possible airborne applications of ML include safety-critical functions, which must be developed in compliance with rigorous certification standards of the aviation industry. Current certification standards for the aviation industry were developed prior to the ML renaissance without taking specifics of ML technology into account. There are some fundamental incompatibilities between traditional design assurance approaches and certain aspects of ML-based systems. In this paper, we analyze the current airborne certification standards and show that all objectives of the standards can be achieved for a low-criticality ML-based system if certain assumptions about ML development workflow are applied.

Transparent conducting oxides (TCO) such as indium-tin-oxide (ITO) exhibit strong optical nonlinearity in the frequency range where their permittivities are near zero. We leverage this nonlinear optical response to realize a sub-picosecond time-gate based on upconversion (or sum-) four-wave mixing (FWM) between two ultrashort pulses centered at the epsilon-near-zero (ENZ) wavelength in a sub-micron-thick ITO film. The time-gate removes the effect of both static and dynamic scattering on the signal pulse by retaining only the ballistic photons of the pulse, that is, the photons that are not scattered. Thus, the spatial information encoded in either the intensity or the phase of the signal pulse can be preserved and transmitted with high fidelity through scattering media. Furthermore, in the presence of time-varying scattering, our time-gate can reduce the resulting scintillation by two orders of magnitude. In contrast to traditional bulk nonlinear materials, time gating by sum-FWM in a sub-wavelength-thick ENZ film can produce a scattering-free upconverted signal at a visible wavelength without sacrificing spatial resolution, which is usually limited by the phase-matching condition. Our proof-of-principle experiment can have implications for potential applications such as in vivo diagnostic imaging and free-space optical communication.

There is rising interest in differentiable rendering, which allows explicitly modeling geometric priors and constraints in optimization pipelines using first-order methods such as backpropagation. Incorporating such domain knowledge can lead to deep neural networks that are trained more robustly and with limited data, as well as the capability to solve ill-posed inverse problems. Existing efforts in differentiable rendering have focused on imagery from electro-optical sensors, particularly conventional RGB-imagery. In this work, we propose an approach for differentiable rendering of Synthetic Aperture Radar (SAR) imagery, which combines methods from 3D computer graphics with neural rendering. We demonstrate the approach on the inverse graphics problem of 3D Object Reconstruction from limited SAR imagery using high-fidelity simulated SAR data.

We present Chook, an open-source Python-based tool to generate discrete optimization problems of tunable complexity with a priori known solutions. Chook provides a cross-platform unified environment for solution planting using a number of techniques, such as tile planting, Wishart planting, equation planting, and deceptive cluster loop planting. Chook also incorporates planted solutions for higher-order (beyond quadratic) binary optimization problems. The support for various planting schemes and the tunable hardness allows the user to generate problems with a wide range of complexity on different graph topologies ranging from hypercubic lattices to fully-connected graphs.

We study the relationship between the Quantum Approximate Optimization Algorithm (QAOA) and the underlying symmetries of the objective function to be optimized. Our approach formalizes the connection between quantum symmetry properties of the QAOA dynamics and the group of classical symmetries of the objective function. The connection is general and includes but is not limited to problems defined on graphs. We show a series of results exploring the connection and highlight examples of hard problem classes where a nontrivial symmetry subgroup can be obtained efficiently. In particular we show how classical objective function symmetries lead to invariant measurement outcome probabilities across states connected by such symmetries, independent of the choice of algorithm parameters or number of layers. To illustrate the power of the developed connection, we apply machine learning techniques towards predicting QAOA performance based on symmetry considerations. We provide numerical evidence that a small set of graph symmetry properties suffices to predict the minimum QAOA depth required to achieve a target approximation ratio on the MaxCut problem, in a practically important setting where QAOA parameter schedules are constrained to be linear and hence easier to optimize.
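
As a small illustration of what a classical objective-function symmetry means for MaxCut, the sketch below checks two well-known symmetries of the cut value by brute force: the global bit flip, and a vertex relabelling that is an automorphism of the graph. It verifies only the classical invariance of the objective; it does not simulate QAOA or the measurement probabilities discussed in the abstract. All function names are hypothetical and chosen for this example.

```python
from itertools import product


def cut_value(edges, bits):
    """Number of edges whose two endpoints carry different labels."""
    return sum(1 for u, v in edges if bits[u] != bits[v])


def flip_all(bits):
    """Global bit flip, a symmetry of every MaxCut instance."""
    return tuple(1 - b for b in bits)


def permute(bits, perm):
    """Relabel vertices: vertex i takes the label of vertex perm[i]."""
    return tuple(bits[perm[i]] for i in range(len(bits)))


def is_symmetry(edges, n, transform):
    """Brute-force check that `transform` preserves the cut value
    of every one of the 2**n bitstrings."""
    return all(
        cut_value(edges, transform(bits)) == cut_value(edges, bits)
        for bits in product((0, 1), repeat=n)
    )


# 4-cycle; rotating the vertices by one step is a graph automorphism.
cycle = [(0, 1), (1, 2), (2, 3), (3, 0)]
rotation = [1, 2, 3, 0]
print(is_symmetry(cycle, 4, flip_all))
print(is_symmetry(cycle, 4, lambda b: permute(b, rotation)))
```

The exhaustive check scales as 2^n and is meant only for toy graphs.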

Electrochemical batteries are ubiquitous devices in our society. When they are employed in mission-critical applications, the ability to precisely predict the end of discharge under highly variable environmental and operating conditions is of paramount importance in order to support operational decision-making. While there are accurate predictive models of the processes underlying the charge and discharge phases of batteries, the modelling of ageing and its effect on performance remains poorly understood. Such a lack of understanding often leads to inaccurate models or the need for time-consuming calibration procedures whenever the battery ages or its conditions change significantly. This represents a major obstacle to the real-world deployment of efficient and robust battery management systems. In this paper, we propose for the first time an approach that can predict the voltage discharge curve for batteries of any degradation level without the need for calibration. In particular, we introduce Dynaformer, a novel Transformer-based deep learning architecture which is able to simultaneously infer the ageing state from a limited number of voltage/current samples and predict the full voltage discharge curve for real batteries with high precision. Our experiments show that the trained model is effective for input current profiles of different complexities and is robust to a wide range of degradation levels. In addition to evaluating the performance of the proposed framework on simulated data, we demonstrate that a minimal amount of fine-tuning allows the model to bridge the simulation-to-real gap between simulations and real data collected from a set of batteries. The proposed methodology enables the utilization of battery-powered systems until the end of discharge in a controlled and predictable way, thereby significantly prolonging the operating cycles and reducing costs.

Deep neural network (DNN) models, including those used in safety-critical domains, need to be thoroughly tested to ensure that they can reliably perform well in different scenarios. In this article, we provide an overview of structural coverage metrics for testing DNN models, including neuron coverage (NC), k-multisection neuron coverage (kMNC), top-k neuron coverage (TKNC), neuron boundary coverage (NBC), strong neuron activation coverage (SNAC) and modified condition/decision coverage (MC/DC). We evaluate the metrics on realistic DNN models used for perception tasks (including LeNet-1, LeNet-4, LeNet-5, and ResNet20) as well as on networks used in autonomy (TaxiNet). We also provide a tool, DNNCov, which can measure the testing coverage for all these metrics. DNNCov outputs an informative coverage report to enable researchers and practitioners to assess the adequacy of DNN testing, compare different coverage measures, and to more conveniently inspect the model's internals during testing.
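
To make the simplest of these metrics concrete, here is a minimal sketch of neuron coverage (NC) under its commonly used definition: the fraction of neurons whose activation exceeds a threshold for at least one test input. This is an illustration only and not DNNCov's API; the function name, the flat list-of-vectors input format, and the default threshold of 0.0 are assumptions made for the example.

```python
def neuron_coverage(activations, threshold=0.0):
    """Fraction of neurons activated above `threshold` by at least one input.

    activations: list of per-input activation vectors of equal length,
    with one entry per neuron (assumed to be collected beforehand).
    """
    if not activations or not activations[0]:
        return 0.0
    num_neurons = len(activations[0])
    covered = [False] * num_neurons
    for vector in activations:
        for i, value in enumerate(vector):
            # A neuron counts as covered once any input activates it.
            if value > threshold:
                covered[i] = True
    return sum(covered) / num_neurons


# Two test inputs, four neurons: neurons 0 and 1 fire, 2 and 3 never do.
test_activations = [
    [0.5, 0.0, -1.0, 0.0],
    [0.0, 0.2, -0.3, 0.0],
]
print(neuron_coverage(test_activations))
```

The other metrics listed in the abstract refine this idea, for example by tracking activation ranges or the top-k most active neurons per layer, and need additional bookkeeping not shown here.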

In recent years, the remarkable progress of Machine Learning (ML) technologies within the domain of Artificial Intelligence (AI) systems has presented unprecedented opportunities for the aviation industry, paving the way for further advancements in automation, including the potential for single pilot or fully autonomous operation of large commercial airplanes. However, ML technology faces major incompatibilities with existing airborne certification standards, such as ML model traceability and explainability issues or the inadequacy of traditional coverage metrics. Certification of ML-based airborne systems using current standards is problematic due to these challenges. This paper presents a case study of an airborne system utilizing a Deep Neural Network (DNN) for airport sign detection and classification. Building upon our previous work, which demonstrates compliance with Design Assurance Level (DAL) D, we upgrade the system to meet the more stringent requirements of Design Assurance Level C. To achieve DAL C, we employ an established architectural mitigation technique involving two redundant and dissimilar Deep Neural Networks. The application of novel ML-specific data management techniques further enhances this approach. This work is intended to illustrate how the certification challenges of ML-based systems can be addressed for medium criticality airborne applications.

Vibrational and magnetic properties of single-crystal uranium-thorium dioxide (UxTh1-xO2) with a full range of 0

Solar energetic particles (SEPs) can pose hazardous radiation risks to both humans in space and spacecraft electronics. Numerical modeling based on first principles offers valuable insights into SEPs, providing synthetic observables for SEPs at any time and location in space. In this work, we present a high-resolution scheme based on integral relations for Poisson brackets to solve the kinetic equation for particle acceleration and transport processes. We implement this scheme within the Space Weather Modeling Framework (SWMF), developed at the University of Michigan, to conduct a comprehensive study of solar energetic protons during the 2013 April 11 SEP event. In addition, a shock capturing tool is developed to study the coronal-mass-ejection-driven shock starting from the low solar corona. Multi-point spacecraft observations, including SOHO/ERNE, SDO/AIA, GOES and ACE at Earth, and STEREO-A/B, are used for model-data comparison and validation. New synthetic observables such as white-light images, shock geometry and properties, as well as SEP intensity-time profiles and spectra provide insights for SEP studies. The influences of the mean free path on SEP intensity-time profiles and spectra are also discussed. The results demonstrate: (1) the successful implementation of the Poisson bracket scheme with a self-consistent particle tracker within the SWMF, (2) the capability of capturing the time-evolving shock surface in the SWMF, and (3) the complexity of the mean free path impacts on SEPs. Overall, this study contributes to both scientific research and operational objectives by advancing our understanding of particle behaviors and showing the readiness for more accurate SEP predictions.

George Mason University; US Naval Research Laboratory; Peraton; Computational Physics Inc.; KBR; Aerospace Corporation; Cooperative Institute for Research in Environmental Sciences, University of Colorado; Space Systems Research Corporation; TSC; National Air and Space Administration/Goddard Space Flight Center; Software Control Solutions, LLC; Silver Engineering Inc.; Aerotemp Consulting; McCallie Associates; National Centers for Environmental Information, National Oceanographic and Atmospheric Administration; Space Weather Prediction Center, National Oceanographic and Atmospheric Administration; Research Support Instruments, Inc.; National Oceanographic and Atmospheric Administration NESDIS Office of Space Weather Observations (SWO); Columbus Technologies and Services, Inc.; ASRC Federal System Solutions; National Aeronautics and Space Administration, Earth Science Division

The CCOR Compact Coronagraph is a series of two operational solar coronagraphs sponsored by the National Oceanic and Atmospheric Administration (NOAA). They were designed, built, and tested by the U.S. Naval Research Laboratory (NRL). The CCORs will be used by NOAA's Space Weather Prediction Center to detect and track Coronal Mass Ejections (CMEs) and to forecast space weather. CCOR-1 is on board the Geostationary Operational Environmental Satellite-U (GOES-U, now GOES-19/GOES-East). GOES-U was launched from Kennedy Space Center, Florida, on 25 June 2024. CCOR-2 is on board the Space Weather Follow On at Lagrange point 1 (SWFO-L1). SWFO-L1 is scheduled to launch in the fall of 2025. SWFO will be renamed SOLAR-1 once it reaches L1. The CCORs are white-light coronagraphs that have a field of view (FOV) and performance similar to the SOHO LASCO C3 coronagraph. The CCOR-1 FOV spans from 4 to 22 Rsun, while the CCOR-2 FOV spans from 3.5 to 26 Rsun. The spatial resolution is 39 arcsec for CCOR-1 and 65 arcsec for CCOR-2. They both operate in a band-pass of 470 - 740 nm. The synoptic cadence is 15 min and the latency from image capture to the forecaster on the ground is less than 30 min. Compared to past generation coronagraphs such as the Large Angle and Spectrometric Coronagraph (LASCO), CCOR uses a compact design; all the solar occultation is done with a single multi-disk external occulter. No internal occulter is used. This allowed a substantial reduction in size and mass compared to SECCHI COR-2, for example, but with slightly lower signal-to-noise ratio. In this article, we review the science that the CCORs will capitalize on for the purpose of operational space weather prediction. We give a description of the driving requirements and accommodations, and provide details on the instrument design. Finally, information on ground processing and data levels is provided.

10 Oct 2025

In this work, we demonstrate a passivation-free Ga-polar recessed-gate AlGaN/GaN HEMT on a sapphire substrate for W-band operation, featuring a 5.5 nm Al0.35Ga0.65N barrier under the gate and a 31 nm Al0.35Ga0.65N barrier in the gate access regions. The device achieves a drain current density of 1.8 A/mm, a peak transconductance of 750 mS/mm, and low gate leakage with a high on/off ratio of 10^7. Small-signal characterization reveals a current-gain cutoff frequency of 127 GHz and a maximum oscillation frequency of 203 GHz. Continuous-wave load-pull measurements at 94 GHz demonstrate an output power density of 2.8 W/mm with 26.8% power-added efficiency (PAE), both of which represent the highest values reported for Ga-polar GaN HEMTs on sapphire substrates and are comparable to state-of-the-art Ga-polar GaN HEMTs on SiC substrates. Considering the low cost of sapphire, the simplicity of the epitaxial design, and the reduced fabrication complexity relative to N-polar devices, this work highlights the potential of recessed-gate Ga-polar AlGaN/GaN HEMTs on sapphire as a promising candidate for next-generation millimeter-wave power applications.
