Khulna University

In this chapter, we provide an in-depth exploration of the specialized hardware accelerators designed to enhance Artificial Intelligence (AI) applications, focusing on their necessity, development, and impact on the field of AI. It covers the transition from traditional computing systems to advanced AI-specific hardware, addressing the growing demands of AI algorithms and the inefficiencies of conventional architectures. The discussion extends to various types of accelerators, including GPUs, FPGAs, and ASICs, and their roles in optimizing AI workloads. Additionally, it touches on the challenges and considerations in designing and implementing these accelerators, along with future prospects in the evolution of AI hardware. This comprehensive overview aims to equip readers with a clear understanding of the current landscape and future directions in AI hardware development, making it accessible to both experts and newcomers to the field.

11 Dec 2022

Source code segment authorship identification is the task of identifying the author of a source code segment through supervised learning. It is important in plagiarism detection, digital forensics, and several other law enforcement contexts. However, when a source code segment is written by multiple authors, typical author identification methods no longer work. Here, we propose an author identification technique, based on a stacking ensemble classifier, that can predict the authorship of source code segments even when they have multiple authors. The proposed technique is built upon several deep neural networks, random forests, and support vector machine classifiers. We show that a single classification technique is no longer sufficient for identifying the author group, and that a deep neural network-based stacking ensemble can enhance accuracy significantly. The performance of the proposed technique has been compared with some existing methods that only deal with source code segments written by a single author. Despite the harder task of authorship identification for source code segments written by multiple authors, our proposed technique achieves promising identification accuracy compared to related works that only deal with code segments written by a single author.

Antibiotic resistance presents a growing global health threat by diminishing the effectiveness of treatments and allowing once-manageable bacterial infections to persist. This study develops and analyzes an optimization-based mathematical model to investigate the impact of varying antibiotic dosages on bacterial resistance, incorporating the role of the immune system. Additionally, to capture the effects of over- and underdosing, a floor function is newly introduced into the model as a switch function. The model is examined both analytically and numerically. As part of the analytical study, the validity of the model is established through the existence and uniqueness theorem, and the stability and characteristics of the equilibrium points in relation to the state variables are investigated. Numerical simulations, performed using the fourth-order Runge-Kutta method, reveal that while antibiotics effectively reduce sensitive bacteria, they simultaneously increase resistant strains and suppress immune cell levels. The results also demonstrate that underdosing antibiotics increases the risk of resistance through bacterial mutation, while overdosing weakens the immune system by disrupting beneficial microbes. These findings emphasize that improper dosing, whether below or above the prescribed level, can accelerate the development of antibiotic resistance, underscoring the need for carefully regulated treatment strategies that preserve both antimicrobial effectiveness and immune system integrity.
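The abstract names two numerical ingredients, the classical fourth-order Runge-Kutta (RK4) method and a floor function used as a dosing switch. The Python sketch below shows both under loud assumptions: the one-variable model `toy_bacteria` and the helper `dose_switch` are hypothetical illustrations, not the paper's equations, which the abstract does not give.

```python
import math
from typing import Callable, List

State = List[float]
RHS = Callable[[float, State], State]


def rk4_step(f: RHS, t: float, y: State, h: float) -> State:
    """One classical fourth-order Runge-Kutta step for dy/dt = f(t, y)."""
    k1 = f(t, y)
    k2 = f(t + h / 2, [yi + h / 2 * k for yi, k in zip(y, k1)])
    k3 = f(t + h / 2, [yi + h / 2 * k for yi, k in zip(y, k2)])
    k4 = f(t + h, [yi + h * k for yi, k in zip(y, k3)])
    return [yi + h / 6 * (a + 2 * b + 2 * c + d)
            for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]


def integrate(f: RHS, y0: State, t0: float, t1: float, n_steps: int) -> State:
    """Integrate from t0 to t1 using n_steps fixed-size RK4 steps."""
    h = (t1 - t0) / n_steps
    t, y = t0, list(y0)
    for _ in range(n_steps):
        y = rk4_step(f, t, y, h)
        t += h
    return y


def dose_switch(dose: float, threshold: float) -> int:
    """Hypothetical floor-based switch: 0 below the threshold dose, 1 at or above it."""
    return min(1, math.floor(dose / threshold))


def toy_bacteria(growth: float, kill: float, dose: float, threshold: float) -> RHS:
    """Hypothetical toy model dB/dt = (growth - kill * switch(dose)) * B; NOT the paper's model."""
    s = dose_switch(dose, threshold)
    return lambda t, y: [(growth - kill * s) * y[0]]


# Underdosing leaves the switch off, so the toy population grows; an adequate dose turns it on.
under = integrate(toy_bacteria(0.5, 1.5, dose=0.5, threshold=1.0), [1.0], 0.0, 2.0, 200)
adequate = integrate(toy_bacteria(0.5, 1.5, dose=2.0, threshold=1.0), [1.0], 0.0, 2.0, 200)
print(under[0], adequate[0])  # close to e**1 and e**-2
```

Because the toy model is linear, its exact solution is an exponential, which makes it a convenient check that the RK4 step is implemented correctly before moving to a multi-variable system.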

20 Oct 2025

In this study, we present a highly sensitive Surface Plasmon Resonance (SPR)-based biosensor integrated with a circular-lattice Photonic Crystal Fiber (PCF) for early-stage cancer detection. The proposed sensor leverages the synergy between SPR and PCF technologies to overcome the bulkiness and limited sensitivity of traditional SPR systems. A thin gold (Au) layer, responsible for plasmon excitation, is deposited on the fiber structure, while a nanolayer of vanadium pentoxide (V2O5) is introduced to enhance adhesion between the gold and the silica background, improving structural stability and field confinement. The sensor is designed to detect refractive index (RI) variations in biological analytes, specifically targeting cancerous cells from skin, blood, and adrenal gland tissues. The optical characteristics and performance of the sensor were thoroughly analyzed using the Finite Element Method (FEM) in COMSOL Multiphysics 6.1, allowing for precise simulation and optimization. The sensor demonstrates high sensitivity within the RI range of 1.360 to 1.395, corresponding to the RI values of the target cancer cells. Remarkable wavelength sensitivities of 21,250 nm/RIU, 53,571 nm/RIU, and 103,571 nm/RIU were achieved for skin, blood, and adrenal gland cancers, respectively. In addition, a maximum figure of merit (FOM) of 306.424 RIU^-1 and a spectral resolution (SR) of 9.57 × 10^-7 RIU further affirm the sensor's exceptional detection capabilities. These findings indicate the proposed SPR-PCF sensor's strong potential for real-time, label-free biosensing applications, particularly in precise and early cancer diagnostics.
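Wavelength sensitivity, figure of merit and resolution are usually computed with the standard SPR-PCF definitions sketched below. This assumes those conventional formulas, including the customary minimum resolvable wavelength of 0.1 nm; the readings in the example are hypothetical, not the paper's data.

```python
def wavelength_sensitivity(peak_shift_nm: float, delta_n: float) -> float:
    """S_lambda = delta_lambda_peak / delta_n_a, in nm/RIU."""
    return peak_shift_nm / delta_n


def figure_of_merit(sensitivity_nm_per_riu: float, fwhm_nm: float) -> float:
    """FOM = S_lambda / FWHM of the confinement-loss peak, in RIU^-1."""
    return sensitivity_nm_per_riu / fwhm_nm


def sensor_resolution(delta_n: float, peak_shift_nm: float,
                      min_resolvable_nm: float = 0.1) -> float:
    """R = delta_n_a * delta_lambda_min / delta_lambda_peak, in RIU."""
    return delta_n * min_resolvable_nm / peak_shift_nm


# Hypothetical readings: the loss peak moves 20 nm when the analyte RI rises by 0.005.
s = wavelength_sensitivity(20.0, 0.005)   # about 4000 nm/RIU
fom = figure_of_merit(s, fwhm_nm=40.0)     # about 100 RIU^-1
res = sensor_resolution(0.005, 20.0)       # about 2.5e-5 RIU
print(s, fom, res)
```

A larger peak shift for the same RI step raises sensitivity and lowers (improves) the resolution figure, which is why the two metrics move together across the cancer types reported above.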

Yoga has recently become an essential aspect of human existence for maintaining a healthy body and mind. As lives get more hectic and more people work from home, many find it tough to devote time to workouts at the gym. Yoga pose recognition is a notable human pose estimation problem, as it requires locating body keypoints or joints. Yoga-82, a benchmark dataset for large-scale yoga pose recognition with 82 classes, contains challenging poses that can make precise annotation impossible. We used VGG-16, ResNet-50, ResNet-101, and DenseNet-121 and fine-tuned them in different ways to get better results. We also used Neural Architecture Search to add more layers on top of these pre-trained architectures. The experimental results show that DenseNet-121 performs best, with a top-1 accuracy of 85% and a top-5 accuracy of 96%, outperforming the current state-of-the-art result.
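Top-1 and top-5 accuracy count a prediction as correct when the true class is the highest-scoring class, or among the five highest, respectively. A minimal Python sketch; the scores and labels are made up, and `top_k_accuracy` is a hypothetical helper, not the authors' code.

```python
from typing import List, Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score.
        ranked: List[int] = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Hypothetical softmax outputs for 4 images over 4 pose classes.
scores = [
    [0.10, 0.60, 0.20, 0.10],  # true class 1 is ranked 1st
    [0.50, 0.30, 0.15, 0.05],  # true class 1 is ranked 2nd
    [0.30, 0.20, 0.40, 0.10],  # true class 3 is ranked 4th
    [0.05, 0.05, 0.10, 0.80],  # true class 3 is ranked 1st
]
labels = [1, 1, 3, 3]
print(top_k_accuracy(scores, labels, 1), top_k_accuracy(scores, labels, 2))  # 0.5 0.75
```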

In this paper, we formulate the colorization problem as a multinomial classification problem and then apply a weighting function to the classes. We propose a set of formulas to transform color values into color classes and vice versa. To optimize the classes, we experiment with different bin sizes for the color class transformation. Observing class appearance, standard deviation, and model parameters on various extremely large-scale real-time images in practice, we propose 532 color classes for our classification task. During training, we propose a class-weighted function based on true class appearance in each batch to ensure proper saturation of individual objects. We lower the weights of the major classes, which are observed more frequently, while raising the weights of the minor classes, which are observed less frequently. Our class re-weighting formula includes a hyperparameter for finding the optimal trade-off between major and minor classes. As we apply regularization to enhance the stability of the minor classes, occasional minor noise may appear at object edges. We propose a novel object-selective color harmonization method empowered by the Segment Anything Model (SAM) to refine and enhance these edges. We propose two new color image evaluation metrics, the Color Class Activation Ratio (CCAR) and the True Activation Ratio (TAR), to quantify the richness of color components. We compare our proposed model with state-of-the-art models using six datasets: Places, ADE, CelebA, COCO, Oxford 102 Flower, and ImageNet, in both qualitative and quantitative evaluations. The experimental results show that our proposed model outstrips other models in visualization, the Chromatic Number Ratio (CNR), and our proposed CCAR and TAR criteria, while maintaining satisfactory performance in regression (MSE, PSNR), similarity (SSIM, LPIPS, UIQI), and generative (FID) criteria.
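The abstract does not give the class re-weighting formula, so the sketch below assumes a common smoothed inverse-frequency form: weights come from the class frequencies observed in the current batch, and a hyperparameter `lam` (a hypothetical name) plays the role of the major/minor trade-off. Treat it as an illustration of the idea, not the paper's function.

```python
import numpy as np


def batch_class_weights(labels: np.ndarray, num_classes: int, lam: float = 0.5) -> np.ndarray:
    """Smoothed inverse-frequency weights from the classes observed in one batch.

    lam in (0, 1] trades off major vs minor classes: lam = 1 gives uniform weights,
    smaller lam boosts rare classes more. Assumed form, not the paper's formula.
    """
    counts = np.bincount(labels.ravel(), minlength=num_classes).astype(float)
    p = counts / counts.sum()                         # empirical class frequencies in the batch
    w = 1.0 / ((1.0 - lam) * p + lam / num_classes)   # rarer classes get larger weights
    return w / (p * w).sum()                          # normalize: mean per-pixel weight is 1


def weighted_cross_entropy(log_probs: np.ndarray, labels: np.ndarray, weights: np.ndarray) -> float:
    """Mean of -w[y] * log p(y) over pixels; log_probs has shape (pixels, classes)."""
    picked = log_probs[np.arange(labels.size), labels]
    return float(-(weights[labels] * picked).mean())


labels = np.array([0, 0, 0, 0, 0, 0, 1, 2])  # class 0 dominates this toy batch of 8 pixels
w = batch_class_weights(labels, num_classes=4, lam=0.5)
print(w[:3])  # w[0] < w[1] == w[2]
```

Normalizing so the frequency-weighted mean weight is 1 keeps the overall loss scale comparable to unweighted cross-entropy, so only the balance between classes changes.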

The Bangla linguistic variety is a fascinating mix of regional dialects that contributes to the cultural diversity of the Bangla-speaking community. Despite extensive study into translating Bangla to English, English to Bangla, and Banglish to Bangla in the past, there has been a noticeable gap in translating Bangla regional dialects into standard Bangla. In this study, we set out to fill this gap by creating a collection of 32,500 sentences, encompassing Bangla, Banglish, and English, representing five regional Bangla dialects. Our aim is to translate these regional dialects into standard Bangla and detect regions accurately. To tackle the translation and region detection tasks, we propose two novel models: DialectBanglaT5 for translating regional dialects into standard Bangla and DialectBanglaBERT for identifying the dialect's region of origin. DialectBanglaT5 demonstrates superior performance across all dialects, achieving the highest BLEU score of 71.93, METEOR of 0.8503, and the lowest WER of 0.1470 and CER of 0.0791 on the Mymensingh dialect. It also achieves strong ROUGE scores across all dialects, indicating both accuracy and fluency in capturing dialectal nuances. In parallel, DialectBanglaBERT achieves an overall region classification accuracy of 89.02%, with notable F1-scores of 0.9241 for Chittagong and 0.8736 for Mymensingh, confirming its effectiveness in handling regional linguistic variation. This is the first large-scale investigation focused on Bangla regional dialect translation and region detection. Our proposed models highlight the potential of dialect-specific modeling and set a new benchmark for future research in low-resource and dialect-rich language settings.
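BLEU, METEOR and ROUGE need dedicated tooling, but WER and CER are simple normalized edit distances. A minimal sketch, assuming whitespace tokenization for words and Python string characters for CER; the example sentences are illustrative only.

```python
from typing import Sequence


def edit_distance(ref: Sequence, hyp: Sequence) -> int:
    """Levenshtein distance (substitutions, insertions, deletions) via dynamic programming."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,             # deletion
                          curr[j - 1] + 1,         # insertion
                          prev[j - 1] + (r != h))  # substitution (free if tokens match)
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edits divided by the number of reference words."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: character-level edits divided by the reference length."""
    return edit_distance(reference, hypothesis) / len(reference)


print(wer("the cat sat down", "the cat sit down"))  # 0.25
print(cer("kitten", "sitting"))                     # 0.5
```

For Bangla text, a Python string indexes Unicode code points, so a vowel sign and its consonant count as separate characters; the abstract does not state whether CER was computed over code points or grapheme clusters.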

University of New South Wales, Nagasaki University, Khulna University, University of Canberra, World Health Organization, Bangabandhu Sheikh Mujibur Rahman Science and Technology University, The Garvan Institute of Medical Research, Jatiya Kabi Kazi Nazrul Islam University, Royal North Shore Hospital SERT Institute

Background: Providing appropriate care for people suffering from COVID-19, the disease caused by the pandemic SARS-CoV-2 virus, is a significant global challenge. Many individuals who become infected have pre-existing conditions that may interact with COVID-19 to increase symptom severity and mortality risk. COVID-19 patient comorbidities are likely to be informative about individual risk of severe illness and mortality. Accurately determining how comorbidities are associated with severe symptoms and mortality would thus greatly assist in COVID-19 care planning and provision.

Methods: To assess the interaction of patient comorbidities with COVID-19 severity and mortality, we performed a meta-analysis of the published global literature and a machine learning predictive analysis using an aggregated COVID-19 global dataset.

Results: Our meta-analysis identified chronic obstructive pulmonary disease (COPD), cerebrovascular disease (CEVD), cardiovascular disease (CVD), type 2 diabetes, malignancy, and hypertension as the comorbidities most significantly associated with COVID-19 severity in the current published literature. Machine learning classification using novel aggregated cohort data similarly found COPD, CVD, chronic kidney disease (CKD), type 2 diabetes, malignancy, and hypertension, as well as asthma, to be the most significant features for classifying those who died versus those who survived COVID-19. While age and gender were the most significant predictors of mortality, among symptom-comorbidity combinations, Pneumonia-Hypertension, Pneumonia-Diabetes, and Acute Respiratory Distress Syndrome (ARDS)-Hypertension showed the most significant effects on COVID-19 mortality.

Conclusions: These results highlight the patient cohorts most at risk of COVID-19-related severe morbidity and mortality, which has implications for the prioritization of hospital resources.
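The abstract does not say which pooling model the meta-analysis used. As an illustration only, a fixed-effect inverse-variance pooling of odds ratios from 2x2 tables looks like the following; the study counts are hypothetical, and a random-effects model (for example DerSimonian-Laird) is common when studies are heterogeneous.

```python
import math
from typing import List, Tuple

# (a, b, c, d) = (severe with comorbidity, non-severe with comorbidity,
#                 severe without comorbidity, non-severe without comorbidity)
Table = Tuple[int, int, int, int]


def log_odds_ratio(t: Table) -> Tuple[float, float]:
    """Return ln(OR) and its variance (Woolf's method) for one 2x2 table."""
    a, b, c, d = t
    return math.log((a * d) / (b * c)), 1 / a + 1 / b + 1 / c + 1 / d


def pooled_odds_ratio(tables: List[Table], z: float = 1.96) -> Tuple[float, float, float]:
    """Fixed-effect inverse-variance pooled OR with an approximate 95% confidence interval."""
    estimates = [log_odds_ratio(t) for t in tables]
    weights = [1 / var for _, var in estimates]  # more precise studies count more
    pooled = sum(w * lor for w, (lor, _) in zip(weights, estimates)) / sum(weights)
    se = math.sqrt(1 / sum(weights))
    return math.exp(pooled), math.exp(pooled - z * se), math.exp(pooled + z * se)


# Two hypothetical studies with identical tables (OR = 20*90 / (80*10) = 2.25 each).
or_hat, lo, hi = pooled_odds_ratio([(20, 80, 10, 90), (20, 80, 10, 90)])
print(round(or_hat, 2), lo < or_hat < hi)  # 2.25 True
```

Tables with a zero cell need a continuity correction (often adding 0.5 to every cell) before taking the logarithm.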

Rice leaf diseases significantly reduce productivity and cause economic losses, highlighting the need for early detection to enable effective management and improve yields. This study proposes Artificial Neural Network (ANN)-based image-processing techniques for timely classification and recognition of rice diseases. Although images of rice leaves are commonly fed directly into ANNs, there is a noticeable absence of thorough comparative analysis between the Feature Analysis Detection Model (FADM) and the Direct Image-Centric Detection Model (DICDM), specifically regarding the effectiveness of Feature Extraction Algorithms (FEAs). Hence, this research presents initial experiments on the Feature Analysis Detection Model, utilizing various image Feature Extraction Algorithms, Dimensionality Reduction Algorithms (DRAs), Feature Selection Algorithms (FSAs), and an Extreme Learning Machine (ELM). The experiments are carried out on datasets encompassing bacterial leaf blight, brown spot, leaf blast, leaf scald, sheath blight rot, and healthy leaves, using 10-fold cross-validation. A Direct Image-Centric Detection Model is established without any FEA, and classification performance is evaluated with several metrics. Finally, an exhaustive comparison is made between the Feature Analysis Detection Model and the Direct Image-Centric Detection Model in classifying rice leaf diseases. The results reveal that the Feature Analysis Detection Model attains the highest performance. Adopting the proposed Feature Analysis Detection Model for detecting rice leaf diseases holds excellent potential for improving crop health, minimizing yield losses, and enhancing the overall productivity and sustainability of rice farming.
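An Extreme Learning Machine fixes random input-to-hidden weights and solves only the output weights, in closed form, by least squares. The sketch assumes tanh hidden units and a pseudoinverse solve; `X` stands in for the feature vectors produced by the FEA/DRA/FSA stages, and the two-class data are synthetic, not rice-leaf features.

```python
import numpy as np


class ExtremeLearningMachine:
    """Single-hidden-layer ELM: random input weights, output weights solved in closed form."""

    def __init__(self, n_hidden: int = 50, seed: int = 0):
        self.n_hidden = n_hidden
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X: np.ndarray) -> np.ndarray:
        return np.tanh(X @ self.W + self.b)

    def fit(self, X: np.ndarray, y: np.ndarray) -> "ExtremeLearningMachine":
        n_classes = int(y.max()) + 1
        targets = np.eye(n_classes)[y]                               # one-hot class targets
        self.W = self.rng.normal(size=(X.shape[1], self.n_hidden))  # random, never trained
        self.b = self.rng.normal(size=self.n_hidden)
        H = self._hidden(X)
        self.beta = np.linalg.pinv(H) @ targets                      # least-squares output weights
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        return np.argmax(self._hidden(X) @ self.beta, axis=1)


# Hypothetical 2-D feature vectors for two well-separated classes.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-2.0, 0.5, (50, 2)), rng.normal(2.0, 0.5, (50, 2))])
y = np.array([0] * 50 + [1] * 50)
model = ExtremeLearningMachine(n_hidden=50).fit(X, y)
print((model.predict(X) == y).mean())
```

In an evaluation like the one described, `fit` and `predict` would be called once per fold of the 10-fold cross-validation.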

In this paper, we study the resource slicing problem in a dynamic multiplexing scenario of two distinct 5G services, namely Ultra-Reliable Low Latency Communications (URLLC) and enhanced Mobile BroadBand (eMBB). While eMBB services focus on high data rates, URLLC is very strict in terms of latency and reliability. In view of this, the resource slicing problem is formulated as an optimization problem that aims at maximizing the eMBB data rate subject to a URLLC reliability constraint, while considering the variance of the eMBB data rate to reduce the impact of immediately scheduled URLLC traffic on the eMBB reliability. To solve the formulated problem, an optimization-aided Deep Reinforcement Learning (DRL) based framework is proposed, including: 1) an eMBB resource allocation phase, and 2) a URLLC scheduling phase. In the first phase, the optimization problem is decomposed into three subproblems, and each subproblem is then transformed into a convex form to obtain an approximate resource allocation solution. In the second phase, a DRL-based algorithm is proposed to intelligently distribute the incoming URLLC traffic among eMBB users. Simulation results show that our proposed approach can satisfy the stringent URLLC reliability while keeping the eMBB reliability higher than 90%.
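The abstract states that the eMBB objective accounts for rate variance but does not give the formula. A common way to express this is a mean-minus-variance (risk-adjusted) objective; the sketch below assumes that form, with a hypothetical penalty weight `beta` and made-up rate samples, to show why spreading URLLC puncturing can be preferable at equal mean rate.

```python
from statistics import fmean, pvariance
from typing import Sequence


def risk_adjusted_rate(rates: Sequence[float], beta: float) -> float:
    """Mean eMBB rate penalized by its variance: E[r] - beta * Var[r] (hypothetical form)."""
    return fmean(rates) - beta * pvariance(rates)


# Hypothetical eMBB rate samples (Mbps) under two ways of placing the same URLLC load.
concentrated = [10.0, 10.0, 10.0, 2.0]  # URLLC punctures one sample heavily
spread = [8.0, 8.0, 8.0, 8.0]           # same mean rate, puncturing spread evenly
print(risk_adjusted_rate(concentrated, beta=0.1), risk_adjusted_rate(spread, beta=0.1))  # ~6.8 8.0
```

Both placements have a mean of 8 Mbps, but only the variance term distinguishes them, which is the effect a variance-aware objective is meant to capture.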

Automatic colorization of gray images containing objects of different colors and sizes is challenging due to inter- and intra-object color variation and the small area of the main objects relative to extensive backgrounds. The learning process often favors dominant features, resulting in a biased model. In this paper, we formulate the colorization problem as a multinomial classification problem and then apply a weighting function to the classes. We propose a set of formulas to transform color values into color classes and vice versa. Class optimization and a balanced feature distribution are key to good performance. Observing class appearance on various extremely large-scale real-time images in practice, we propose 215 color classes for our colorization task. During training, we propose a class-weighted function based on true class appearance in each batch to ensure proper color saturation of individual objects. We establish a trade-off between major and minor classes to provide proper class prediction by eliminating the major classes' dominance over minor classes. As we apply regularization to enhance the stability of the minor classes, occasional minor noise may appear at object edges. We propose a novel object-selective color harmonization method empowered by the Segment Anything Model (SAM) to refine and enhance these edges. We propose a new color image evaluation metric, the Chromatic Number Ratio (CNR), to quantify the richness of color components. We compare our proposed model with state-of-the-art models using five datasets: ADE, CelebA, COCO, Oxford 102 Flower, and ImageNet, in both qualitative and quantitative approaches. The experimental results show that our proposed model outstrips other models in visualization and CNR measurement criteria while maintaining satisfactory performance in regression (MSE, PSNR), similarity (SSIM, LPIPS, UIQI), and generative criteria (FID).
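The class formulas are not given in the abstract. A common starting point is to quantize the CIELAB a/b chroma plane on a uniform grid; the sketch below assumes that scheme, an a/b range of [-110, 110) and a bin size of 10, and maps each class back to its cell center for the inverse transform. The abstract's final 215 classes were chosen by observing which classes actually appear, which a sketch like this could mimic by keeping only grid cells that occur in training data.

```python
import numpy as np

AB_MIN, AB_MAX = -110.0, 110.0  # assumed range of the CIELAB a and b channels


def n_bins(bin_size: float) -> int:
    """Number of grid cells along each chroma axis."""
    return int(np.ceil((AB_MAX - AB_MIN) / bin_size))


def ab_to_class(a, b, bin_size: float = 10.0):
    """Map (a, b) chroma values (scalars or arrays) to a single grid-cell class index."""
    n = n_bins(bin_size)
    ia = np.clip(np.floor((np.asarray(a) - AB_MIN) / bin_size).astype(int), 0, n - 1)
    ib = np.clip(np.floor((np.asarray(b) - AB_MIN) / bin_size).astype(int), 0, n - 1)
    return ia * n + ib


def class_to_ab(cls, bin_size: float = 10.0):
    """Inverse map: return the (a, b) center of the class's grid cell."""
    ia, ib = np.divmod(np.asarray(cls), n_bins(bin_size))
    return AB_MIN + (ia + 0.5) * bin_size, AB_MIN + (ib + 0.5) * bin_size


c = ab_to_class(0.0, 0.0)
print(c, class_to_ab(c))  # class 253, center (5.0, 5.0) on a 22 x 22 grid
```

The round trip is lossy by at most half a bin per channel, which is the quantization error a bin-size sweep trades against the number of classes.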

Monkeypox virus (MPXV) is a zoonotic virus that poses a significant threat to public health, particularly in remote parts of Central and West Africa. Early detection of monkeypox lesions is crucial for effective treatment. However, because monkeypox lesions resemble those of other skin diseases, their detection is a challenging task. To detect monkeypox, many researchers have used deep learning models such as MobileNetV2, VGG16, ResNet50, InceptionV3, DenseNet121, EfficientNetB3, and Xception. However, these models often require a lot of storage space due to their large size. This study aims to address these challenges by introducing a CNN model named MpoxSLDNet (Monkeypox Skin Lesion Detector Network) to facilitate early detection and categorization of Monkeypox and Non-Monkeypox lesions in digital images. Our model represents a significant advancement in monkeypox lesion detection, offering superior performance in precision, recall, F1-score, accuracy, and AUC compared to traditional pre-trained models such as VGG16, ResNet50, and DenseNet121. The key novelty of our approach lies in MpoxSLDNet's ability to achieve high detection accuracy while requiring significantly less storage space than existing models. By addressing the challenge of high storage requirements, MpoxSLDNet presents a practical solution for early detection and categorization of monkeypox lesions in resource-constrained healthcare settings. In this study, we used the "Monkeypox Skin Lesion Dataset", comprising 1428 skin images of monkeypox lesions and 1764 skin images of Non-Monkeypox lesions. The dataset's limitations could potentially impact the model's ability to generalize to unseen cases. Nevertheless, MpoxSLDNet achieved a validation accuracy of 94.56%, compared to 86.25%, 84.38%, and 67.19% for VGG16, DenseNet121, and ResNet50, respectively.
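Precision, recall, F1-score and accuracy all derive from the confusion-matrix counts for the positive (Monkeypox) class. A minimal sketch with hypothetical labels; `binary_metrics` is an illustrative helper, and AUC is omitted because it needs predicted scores rather than hard labels.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for one positive class (e.g. Monkeypox = 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical labels: 1 = Monkeypox, 0 = Non-Monkeypox.
print(binary_metrics([1, 1, 1, 0, 0, 0, 0, 1], [1, 1, 0, 0, 1, 1, 0, 1]))
```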

Acute Lymphoblastic Leukemia (ALL) is a blood cell cancer characterized by numerous immature lymphocytes. Even though automation in ALL prognosis is an essential aspect of cancer diagnosis, it is challenging due to the morphological similarity between malignant and normal cells. The traditional ALL classification strategy requires experienced pathologists to carefully read the cell images, which is arduous, time-consuming, and often suffers from inter-observer variation. This article automates ALL detection from microscopic cell images using deep Convolutional Neural Networks (CNNs). We explore weighted ensembles of different deep CNNs to recommend a better ALL cell classifier. The weights of the ensemble candidate models are estimated from their corresponding metrics, such as accuracy, F1-score, AUC, and kappa values. Various data augmentation and pre-processing techniques are incorporated to achieve better generalization of the network. We utilize the publicly available C-NMC-2019 ALL dataset for all experiments. Our proposed weighted ensemble model, which uses the kappa values of the ensemble candidates as their weights, achieves a weighted F1-score of 88.6%, a balanced accuracy of 86.2%, and an AUC of 0.941 on the preliminary test set. The qualitative results, displaying gradient-weighted class activation maps, confirm that the introduced model has a concentrated learned region. In contrast, the ensemble candidate models, such as Xception, VGG-16, DenseNet-121, MobileNet, and InceptionResNet-V2, separately produce coarse and scattered learned areas for most example cases. Since the proposed kappa value-based weighted ensemble yields better results for the task targeted in this article, it could also be tested in other medical diagnostic applications.
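A kappa-weighted ensemble can be sketched as a weighted soft vote: compute each candidate's Cohen's kappa on held-out data and use it to weight that model's class probabilities. Everything here is a hypothetical illustration (two toy models, four validation cells); the abstract does not specify how the weights are normalized or how negative kappas are handled, so this sketch simply normalizes positive weights to sum to 1.

```python
import numpy as np


def cohen_kappa(y_true, y_pred, n_classes: int) -> float:
    """Cohen's kappa: agreement beyond chance, (p_o - p_e) / (1 - p_e)."""
    cm = np.zeros((n_classes, n_classes))
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    n = cm.sum()
    p_o = np.trace(cm) / n                                 # observed agreement
    p_e = float(cm.sum(axis=1) @ cm.sum(axis=0)) / n ** 2  # agreement expected by chance
    return float((p_o - p_e) / (1 - p_e))


def weighted_ensemble(probs: np.ndarray, weights) -> np.ndarray:
    """Weighted soft vote: probs has shape (models, samples, classes)."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                                        # normalize weights to sum to 1
    return np.tensordot(w, probs, axes=1)                  # -> (samples, classes)


# Hypothetical validation predictions of two candidate CNNs on 4 cells (0 = normal, 1 = ALL).
y_val = [0, 0, 1, 1]
kappas = [cohen_kappa(y_val, [0, 0, 1, 1], 2), cohen_kappa(y_val, [0, 1, 1, 1], 2)]  # [1.0, 0.5]
test_probs = np.array([[[0.9, 0.1]], [[0.2, 0.8]]])        # each model's output for one test cell
print(kappas, weighted_ensemble(test_probs, kappas))
```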

Combinatorial problems such as combinatorial optimization and constraint satisfaction problems arise in decision-making across various fields of science and technology. In real-world applications, when multiple optimal or constraint-satisfying solutions exist, enumerating all these solutions -- rather than finding just one -- is often desirable, as it provides flexibility in decision-making. However, combinatorial problems and their enumeration versions pose significant computational challenges due to combinatorial explosion. To address these challenges, we propose enumeration algorithms for combinatorial optimization and constraint satisfaction problems using Ising machines. Ising machines are specialized devices designed to efficiently solve combinatorial problems. Typically, they sample low-cost solutions in a stochastic manner. Our enumeration algorithms repeatedly sample solutions to collect all desirable solutions. The crux of the proposed algorithms is their stopping criteria for sampling, which are derived based on probability theory. In particular, the proposed algorithms have theoretical guarantees that the failure probability of enumeration is bounded above by a user-specified value, provided that lower-cost solutions are sampled more frequently and equal-cost solutions are sampled with equal probability. Many physics-based Ising machines are expected to (approximately) satisfy these conditions. As a demonstration, we applied our algorithm using simulated annealing to maximum clique enumeration on random graphs. We found that our algorithm enumerates all maximum cliques in large dense graphs faster than a conventional branch-and-bound algorithm specially designed for maximum clique enumeration. This demonstrates the promising potential of our proposed approach.

21 Dec 2019

The recent surge of interest in hardware security is largely due to the offshoring of proprietary intellectual property (IP). One distinct dimension of this disruptive threat is malicious logic insertion, also known as a Hardware Trojan (HT). An HT stealthily subverts the normal operation of a device. Because HTs vary in their activation mechanisms and their locations within a design, no catch-all detection technique exists. In this paper, we propose leveraging the principal features of social network analysis for the security analysis of Register Transfer Level (RTL) designs against HTs. The approach is based on investigating design properties, and it extends current detection techniques. In particular, we perform both node- and graph-level analysis to determine the direct and indirect interactions between nets in a design. This technique helps not only to find vulnerable nets that can act as HT triggering signals but also to identify their interactions that could influence a particular net to act as an HT payload signal. We evaluate the technique on 420 combinational HT instances and, on average, detect both triggering and payload signals with an accuracy of up to 97.37%.
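One way to picture node- and graph-level analysis of an RTL design is to treat nets as nodes of a directed graph, with an edge from each net to the nets it helps drive. The sketch below assumes that representation and uses two generic measures, degree centrality for direct interactions and the transitive fan-in cone for indirect ones; the netlist is hypothetical and these are not necessarily the paper's features.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, Set, Tuple

Graph = Dict[str, Set[str]]


def build_graph(edges: Iterable[Tuple[str, str]]) -> Tuple[Graph, Graph]:
    """Directed net graph: an edge (u, v) means net u drives logic that produces net v."""
    succ: Graph = defaultdict(set)
    pred: Graph = defaultdict(set)
    for src, dst in edges:
        succ[src].add(dst)
        pred[dst].add(src)
    return succ, pred


def degree_centrality(succ: Graph, pred: Graph) -> Dict[str, float]:
    """Node-level metric: (in-degree + out-degree) / (n - 1)."""
    nodes = set(succ) | set(pred)
    n = len(nodes)
    return {v: (len(succ.get(v, ())) + len(pred.get(v, ()))) / (n - 1) for v in nodes}


def fan_in_cone(pred: Graph, target: str) -> Set[str]:
    """All nets that can influence `target` directly or indirectly (breadth-first search)."""
    seen: Set[str] = set()
    queue = deque([target])
    while queue:
        for u in pred.get(queue.popleft(), ()):
            if u not in seen:
                seen.add(u)
                queue.append(u)
    return seen


# Hypothetical RTL fragment: y depends on n2, which depends on n1 and c, and so on.
edges = [("a", "n1"), ("b", "n1"), ("n1", "n2"), ("c", "n2"), ("n2", "y")]
succ, pred = build_graph(edges)
print(sorted(fan_in_cone(pred, "y")))       # ['a', 'b', 'c', 'n1', 'n2']
print(degree_centrality(succ, pred)["n2"])  # 0.6
```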

The Bengali language, spoken extensively across South Asia and among diasporic communities, exhibits considerable dialectal diversity shaped by geography, culture, and history. Phonological and pronunciation-based classifications broadly identify five principal dialect groups: Eastern Bengali, Manbhumi, Rangpuri, Varendri, and Rarhi. Within Bangladesh, further distinctions emerge through variation in vocabulary, syntax, and morphology, as observed in regions such as Chittagong, Sylhet, Rangpur, Rajshahi, Noakhali, and Barishal. Despite this linguistic richness, systematic research on the computational processing of Bengali dialects remains limited. This study seeks to document and analyze the phonetic and morphological properties of these dialects while exploring the feasibility of building computational models, particularly Automatic Speech Recognition (ASR) systems, tailored to regional varieties. Such efforts hold potential for applications in virtual assistants and broader language technologies, contributing to both the preservation of dialectal diversity and the advancement of inclusive digital tools for Bengali-speaking communities. The dataset created for this study is released for public use.

12 Jan 2024

We investigated the magnetization reversal of a perpendicularly magnetized nanodevice using a chirped current pulse (CCP) via spin-orbit torques (SOT). Our findings demonstrate that both the field-like (FL) and damping-like (DL) components of SOT in a CCP can efficiently induce ultrafast magnetization reversal without any symmetry-breaking means. For a wide frequency range of the CCP, the minimal current density obtained is significantly smaller than that required for conventional SOT reversal. This ultrafast reversal arises because the CCP triggers enhanced energy absorption (emission) of the magnetization from (to) the FL and DL components of SOT before (after) crossing the energy barrier. We also verified the robustness of CCP-driven magnetization reversal at room temperature. Moreover, this strategy can be extended to switch the magnetic states of perpendicular synthetic antiferromagnetic (SAF) and ferrimagnetic (SFi) nanodevices. These studies therefore enrich the basic understanding of field-free SOT reversal and provide a novel way to realize ultrafast SOT-MRAM devices with various free-layer designs: ferromagnetic, SAF, and SFi.
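A chirped pulse is a sinusoid whose instantaneous frequency sweeps over time. The sketch assumes a linear chirp and placeholder values for the amplitude, sweep and duration, since the abstract does not give the pulse shape; it only generates the drive waveform and does not model the magnetization dynamics.

```python
import math


def chirp_phase(t: float, f0: float, f1: float, duration: float) -> float:
    """Phase of a linear chirp whose frequency sweeps from f0 to f1 over `duration`."""
    return 2 * math.pi * (f0 * t + (f1 - f0) * t * t / (2 * duration))


def instantaneous_frequency(t: float, f0: float, f1: float, duration: float) -> float:
    """Time derivative of the phase divided by 2*pi: f0 + (f1 - f0) * t / duration."""
    return f0 + (f1 - f0) * t / duration


def chirped_current(t: float, j0: float, f0: float, f1: float, duration: float) -> float:
    """Current density J(t) = j0 * sin(phase(t)) inside the pulse window, zero outside."""
    if not 0.0 <= t <= duration:
        return 0.0
    return j0 * math.sin(chirp_phase(t, f0, f1, duration))


# Hypothetical down-chirp: 20 GHz -> 5 GHz over a 1 ns pulse (placeholder values).
f0, f1, T = 20e9, 5e9, 1e-9
print(instantaneous_frequency(0.5e-9, f0, f1, T))  # about 12.5 GHz at mid-pulse
```

Whether the sweep should go up or down, and how fast, depends on how the magnetization's precession frequency changes during reversal; here `f0` and `f1` are simply parameters to vary.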

This study explores the connection between disinformation, defined as the deliberate spread of false information, and rate-induced tipping (R-tipping), a phenomenon in which systems undergo sudden changes due to rapid shifts in external forces. While tipping points have traditionally been associated with exceeding critical thresholds, R-tipping highlights the influence of the rate of change, even without crossing specific levels. The study argues that disinformation campaigns, which are often organized and fast-paced, can trigger R-tipping events in public opinion and societal behavior. This can happen even if the disinformation itself does not reach a critical mass, making it challenging to predict and control. Here, by transforming a population dynamics model into a network model and investigating the interplay between the source of disinformation, the exposed population, and the medium of transmission under the influence of external sources, the study aims to provide valuable insights for predicting and controlling the spread of disinformation. This mathematical approach holds promise for developing effective countermeasures against this increasingly prevalent threat to public discourse and decision-making.
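The central idea of R-tipping, that the rate of change alone can cause tipping, is easiest to see in a standard toy model rather than the paper's network model. Assume dx/dt = (x + lam(t))^2 - 1 with a linear ramp lam(t) = r * t. For every frozen value of lam there is a stable equilibrium at x = -lam - 1, so no threshold is ever crossed; yet in the moving coordinate y = x + lam the dynamics become dy/dt = y^2 - 1 + r, which has no equilibrium once r > 1. The function `tips` below is a hypothetical illustration that integrates this with forward Euler.

```python
def tips(rate: float, t_max: float = 50.0, dt: float = 1e-3, escape: float = 10.0) -> bool:
    """Toy R-tipping check for dx/dt = (x + lam(t))**2 - 1 with a ramp lam(t) = rate * t.

    In the co-moving variable y = x + lam the dynamics are dy/dt = y**2 - 1 + rate,
    integrated here with forward Euler from the frozen stable state y = -1.
    """
    y, t = -1.0, 0.0
    while t < t_max:
        y += dt * (y * y - 1.0 + rate)
        t += dt
        if y > escape:
            return True   # trajectory left the basin: rate-induced tipping
    return False          # trajectory kept tracking the moving stable equilibrium


print(tips(0.5), tips(1.5))  # False True
```

The parameter lam drifts without bound in both runs; only the speed of the drift decides whether the system tips, which mirrors the abstract's point that fast campaigns can trigger change without reaching a critical level.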

29 Feb 2024

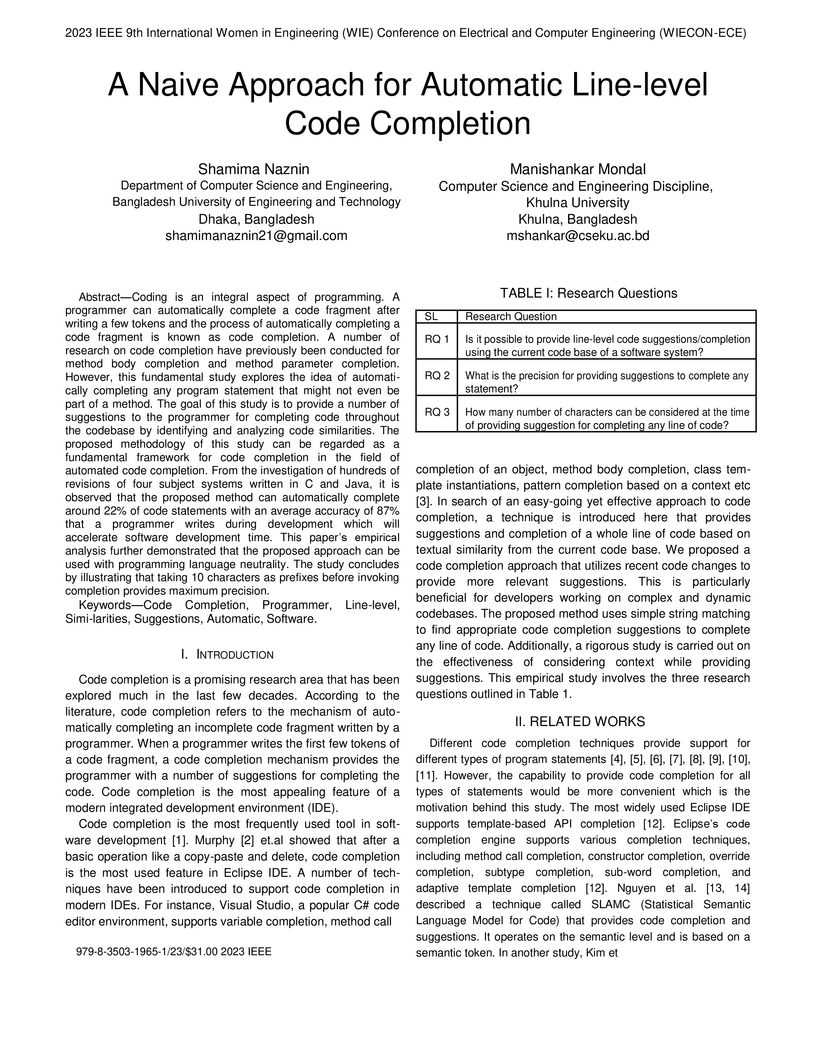

A straightforward string-matching approach to line-level code completion leverages an existing codebase to suggest complete lines of code based on a typed prefix. The method can automatically complete approximately 22% of code statements with an average precision of 87%, achieving optimal performance with a 10-character prefix.
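The abstract does not detail the matching procedure, so the following Python sketch assumes one simple design: index every line of the codebase by its first 10 characters, then suggest the most frequent indexed line that is consistent with everything typed so far. The class name `PrefixLineCompleter` and the frequency vote are assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional


class PrefixLineCompleter:
    """Suggest a whole line by string-matching the typed prefix against an existing codebase."""

    def __init__(self, codebase_lines: Iterable[str], prefix_len: int = 10):
        self.prefix_len = prefix_len
        # Maps each line's first `prefix_len` characters to the full lines starting with them.
        self.index: Dict[str, Counter] = defaultdict(Counter)
        for line in codebase_lines:
            stripped = line.strip()
            if len(stripped) > prefix_len:
                self.index[stripped[:prefix_len]][stripped] += 1

    def complete(self, typed: str) -> Optional[str]:
        typed = typed.lstrip()
        if len(typed) < self.prefix_len:
            return None  # too little context: make no suggestion
        candidates = self.index.get(typed[:self.prefix_len])
        if not candidates:
            return None
        # Keep lines consistent with everything typed so far; suggest the most frequent one.
        matches = [(line, n) for line, n in candidates.items() if line.startswith(typed)]
        return max(matches, key=lambda m: m[1])[0] if matches else None


codebase = ["for i in range(n):", "    for i in range(n):", "for i in range(len(xs)):", "return result"]
completer = PrefixLineCompleter(codebase)
print(completer.complete("for i in r"))        # for i in range(n):
print(completer.complete("for i in range(l"))  # for i in range(len(xs)):
```

Returning `None` when nothing matches trades coverage for precision, the same balance that the reported 22% coverage and 87% precision describe.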

An intrusion detection system (IDS) is a piece of hardware or software that monitors a network or system for malicious activity, policy violations, or security flaws. A network-based intrusion detection system (NIDS) protects hosts or networks by looking for indications of known attacks or deviations from normal behavior. Due to the rapidly increasing amount of network data, traditional IDSs are far from able to quickly and efficiently identify complex and varied network attacks, especially low-frequency attacks. In this study, we propose SCGNet (Stacked Convolution with Gated Recurrent Unit Network), a novel deep learning architecture. It exhibits promising results on the NSL-KDD dataset for both tasks, network attack detection and attack type classification, with 99.76% and 98.92% accuracy, respectively. We also introduce a general data preprocessing pipeline that is easily applicable to other similar datasets, and we experiment with conventional machine learning techniques to evaluate the performance of this pipeline.
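The abstract does not list the preprocessing pipeline's steps. A common recipe for NSL-KDD, assumed here, is to one-hot encode its three symbolic features (`protocol_type`, `service`, `flag`) and min-max scale the numeric ones using statistics from the training split only. The two rows below are made-up stand-ins, not real records.

```python
from typing import Any, Dict, List, Sequence

# NSL-KDD's three symbolic features; the remaining features are numeric.
CATEGORICAL = ("protocol_type", "service", "flag")
Row = Dict[str, Any]


def fit_preprocessor(rows: Sequence[Row], numeric_cols: Sequence[str]):
    """Learn one-hot vocabularies and min-max ranges from the training rows only."""
    categories = {c: sorted({r[c] for r in rows}) for c in CATEGORICAL}
    ranges = {c: (min(r[c] for r in rows), max(r[c] for r in rows)) for c in numeric_cols}
    return categories, ranges


def transform(row: Row, categories, ranges) -> List[float]:
    """Min-max scale numeric columns, then append one-hot blocks for symbolic columns."""
    vec: List[float] = []
    for c, (lo, hi) in ranges.items():
        vec.append((row[c] - lo) / (hi - lo) if hi > lo else 0.0)
    for c, values in categories.items():
        vec.extend(1.0 if row[c] == v else 0.0 for v in values)  # unseen value -> all zeros
    return vec


train = [
    {"duration": 0, "src_bytes": 100, "protocol_type": "tcp", "service": "http", "flag": "SF"},
    {"duration": 10, "src_bytes": 300, "protocol_type": "udp", "service": "ftp", "flag": "REJ"},
]
cats, rngs = fit_preprocessor(train, ["duration", "src_bytes"])
print(transform(train[0], cats, rngs))  # [0.0, 0.0, 1.0, 0.0, 0.0, 1.0, 0.0, 1.0]
```

Fitting on the training split only avoids leaking test statistics; test values outside the training range will simply fall outside [0, 1].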
