LIPN, CNRS UMR

In this paper, we propose a novel method for joint entity and relation extraction from unstructured text by framing it as a conditional sequence generation problem. In contrast to conventional generative information extraction models that are left-to-right token-level generators, our approach is \textit{span-based}. It generates a linearized graph where nodes represent text spans and edges represent relation triplets. Our method employs a transformer encoder-decoder architecture with a pointing mechanism on a dynamic vocabulary of spans and relation types. Our model can capture the structural characteristics and boundaries of entities and relations through span representations while simultaneously grounding the generated output in the original text thanks to the pointing mechanism. Evaluation on benchmark datasets validates the effectiveness of our approach, demonstrating competitive results. Code is available at this https URL.

10 Apr 2015

We propose a definition of quantum computable functions as mappings from superpositions of natural numbers to probability distributions of natural numbers. Each function is obtained as a limit of an infinite computation of a quantum Turing machine. The class of quantum computable functions is recursively enumerable, thus opening the door to a quantum computability theory which may follow some of the classical developments.

The GraphER model reframes information extraction as a Graph Structure Learning task, utilizing a Token Graph Transformer to explicitly model and refine the graph of entities and relations. The model achieved competitive performance on the ACE05 dataset and state-of-the-art results on the CoNLL04 and SciERC benchmarks for joint entity and relation extraction.

01 Apr 2025

We investigate the relationship between the analytical properties of the Green's function and $\mathbb{Z}_2$ topological insulators, focusing on three-dimensional inversion-symmetric systems. We show that the diagonal zeros of the Green's function in the orbital basis provide a direct and visual way to calculate the strong and weak $\mathbb{Z}_2$ topological invariants. We introduce the surface of crossings of diagonal zeros in the Brillouin zone, and show that it separates TRIMs of opposite parity in two-band models, enabling the visual computation of the $\mathbb{Z}_2$ invariants by counting the relevant TRIMs on either side. In three-band systems, a similar property holds in every case except when a trivial band is added in the band gap of a non-trivial two-band system, reminiscent of the band topology of fragile topological insulators. Our work could open avenues to experimental measurements of $\mathbb{Z}_2$ topological invariants using ARPES measurements.

The Unified Modeling Language (UML) is a standard for modeling dynamic systems. UML behavioral state machines are used for modeling the dynamic behavior of object-oriented designs. The UML specification, maintained by the Object Management Group (OMG), is documented in natural language (in contrast to formal language). The inherent ambiguity of natural languages may introduce inconsistencies in the resulting state machine model. Formalizing UML state machine specification aims at solving the ambiguity problem and at providing a uniform view to software designers and developers. Such a formalization also aims at providing a foundation for automatic verification of UML state machine models, which can help to find software design vulnerabilities at an early stage and reduce the development cost. We provide here a comprehensive survey of existing work from 1997 to 2021 related to formalizing UML state machine semantics for the purpose of conducting model checking at the design stage.

Even though Variational Autoencoders (VAEs) are widely used for semi-supervised learning, the reason why they work remains unclear. In fact, the addition of the unsupervised objective is most often vaguely described as a regularization. The strength of this regularization is controlled by down-weighting the objective on the unlabeled part of the training set. Through an analysis of the objective of semi-supervised VAEs, we observe that they use the posterior of the learned generative model to guide the inference model in learning the partially observed latent variable. We show that, given this observation, it is possible to gain finer control over the effect of the unsupervised objective on the training procedure. Using importance weighting, we derive two novel objectives that prioritize either the partially observed latent variable or the unobserved latent variable. Experiments on the IMDB English sentiment analysis dataset and on the AG News topic classification dataset show the improvements brought by our prioritization mechanism and exhibit a behavior that is in line with our description of the inner workings of semi-supervised VAEs.

Diffusion models have emerged as a leading framework for high-quality image generation, offering stable training and strong performance across diverse domains. However, they remain computationally intensive, particularly during the iterative denoising process. Latent-space models like Stable Diffusion alleviate some of this cost by operating in compressed representations, though at the expense of fine-grained detail. More recent approaches such as Retrieval-Augmented Diffusion Models (RDM) address efficiency by conditioning denoising on similar examples retrieved from large external memory banks. While effective, these methods introduce drawbacks: they require costly storage and retrieval infrastructure, depend on static vision-language models like CLIP for similarity, and lack adaptability during training. We propose the Prototype Diffusion Model (PDM), a method that integrates prototype learning directly into the diffusion process for efficient and adaptive visual conditioning - without external memory. Instead of retrieving reference samples, PDM constructs a dynamic set of compact visual prototypes from clean image features using contrastive learning. These prototypes guide the denoising steps by aligning noisy representations with semantically relevant visual patterns, enabling efficient generation with strong semantic grounding. Experiments show that PDM maintains high generation quality while reducing computational and storage overhead, offering a scalable alternative to retrieval-based conditioning in diffusion models.

14 Aug 2025

The Game Description Language (GDL) is a widely used formalism for specifying the rules of general games. Writing correct GDL descriptions can be challenging, especially for non-experts. Automated theorem proving has been proposed to assist game design by verifying if a GDL description satisfies desirable logical properties. However, when a description is proved to be faulty, the repair task itself can only be done manually. Motivated by the work on repairing unsolvable planning domain descriptions, we define a more general problem of finding minimal repairs for GDL descriptions that violate formal requirements, and we provide complexity results for various computational problems related to minimal repair. Moreover, we present an Answer Set Programming-based encoding for solving the minimal repair problem and demonstrate its application for automatically repairing ill-defined game descriptions.

07 Jul 2013

In robotics, gradient-free optimization algorithms (e.g. evolutionary algorithms) are often used only in simulation because they require the evaluation of many candidate solutions. Nevertheless, solutions obtained in simulation often do not work well on the real device. The transferability approach aims at crossing this gap between simulation and reality by \emph{making the optimization algorithm aware of the limits of the simulation}. In the present paper, we first describe the transferability function, which maps solution descriptors to a score representing how well a simulator matches reality. We then show that this function can be learned using a regression algorithm and a few experiments with the real devices. Our results are supported by an extensive study of the reality gap for a simple quadruped robot whose control parameters are optimized. In particular, we mapped the whole search space in reality and in simulation to understand the differences between the fitness landscapes.

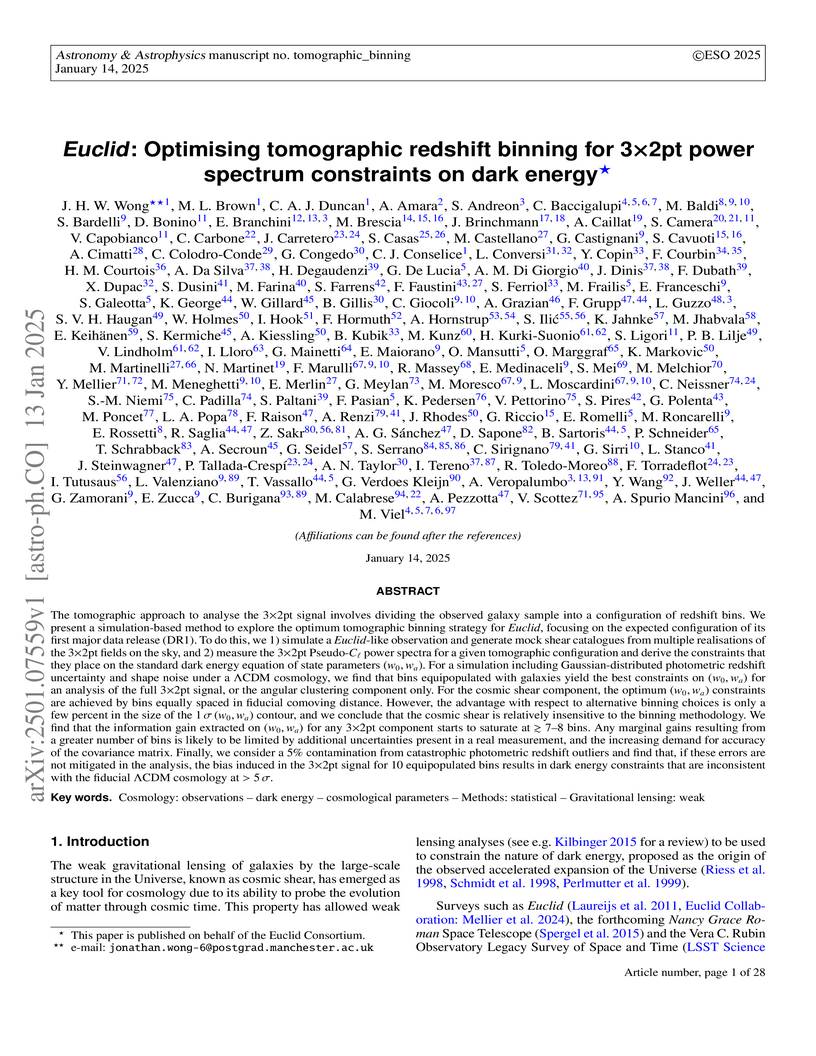

California Institute of Technology; University of Oslo; University of Cambridge; INFN Sezione di Napoli; University of Oxford; University of Bonn; University of Edinburgh; INFN; Space Telescope Science Institute; Université Paris-Saclay; Helsinki Institute of Physics; University of Helsinki; Université de Genève; University of Bologna; Leiden University; University of Geneva; ICREA; University of Portsmouth; NASA Jet Propulsion Laboratory; Universidade do Porto; University of Sussex; INAF; University of California; Ruđer Bošković Institute; European Southern Observatory; SISSA; University of Trieste; Ludwig-Maximilians-Universität; National Observatory of Athens; INAF-Istituto di Radioastronomia; INAF – Osservatorio Astronomico di Roma; University of Lyon; Institut de Física d’Altes Energies (IFAE); INFN - Sezione di Padova; Université Claude Bernard Lyon; IFPU; DARK, Niels Bohr Institute; INAF-IASF Milano; Kavli Institute for Cosmology; IN2P3/CNRS; Aix Marseille Université, CNRS, CNES, LAM; DTU Space; INFN-Sezione di Bologna; Instituto de Astrofísica e Ciências do Espaço, Faculdade de Ciências, Universidade de Lisboa; Université de Paris, CNRS, Astroparticule et Cosmologie; Università degli Studi Federico II; Istituto Nazionale di Fisica Nucleare, Sezione di Padova; Faculdade de Ciências - Universidade de Lisboa; Instituto de Astrofísica e Ciências do Espaço, Universidade de Lisboa; CNRS UMR; Observatoire de Paris, PSL University; Norsk Romsenter; IP2I Lyon / IN2P3 / CNRS; Université Saint-Joseph de Beyrouth; Dipartimento di Fisica e Astronomia; INFN-Sezione di Ferrara; Cosmic Dawn Center (DAWN); Università di Ferrara; INAF – Osservatorio Astronomico di Capodimonte; Université de Lorraine; Excellence Cluster ‘Origins’; Université de Strasbourg; Università di Genova; Università di Padova; Université de Montpellier; INAF Osservatorio di Astrofisica e Scienza dello Spazio di Bologna; INAF – Osservatorio Astronomico di Trieste

We present a simulation-based method to explore the optimum tomographic redshift binning strategy for 3×2pt analyses with Euclid, focusing on the expected configuration of its first major data release (DR1). To do this, we 1) simulate a Euclid-like observation and generate mock shear catalogues from multiple realisations of the 3×2pt fields on the sky, and 2) measure the 3×2pt pseudo-Cℓ power spectra for a given tomographic configuration and derive the constraints that they place on the standard dark energy equation of state parameters (w0, wa). For a simulation including Gaussian-distributed photometric redshift uncertainty and shape noise under a ΛCDM cosmology, we find that bins equipopulated with galaxies yield the best constraints on (w0, wa) for an analysis of the full 3×2pt signal, or of the angular clustering component only. For the cosmic shear component, the optimum (w0, wa) constraints are achieved by bins equally spaced in fiducial comoving distance. However, the advantage with respect to alternative binning choices is only a few percent in the size of the 1σ (w0, wa) contour, and we conclude that the cosmic shear is relatively insensitive to the binning methodology. We find that the information gain extracted on (w0, wa) for any 3×2pt component starts to saturate at ≳ 7-8 bins. Any marginal gains resulting from a greater number of bins are likely to be limited by additional uncertainties present in a real measurement, and by the increasing demand for accuracy of the covariance matrix. Finally, we consider a 5% contamination from catastrophic photometric redshift outliers and find that, if these errors are not mitigated in the analysis, the bias induced in the 3×2pt signal for 10 equipopulated bins results in dark energy constraints that are inconsistent with the fiducial ΛCDM cosmology at >5σ.
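The difference between equipopulated and equally spaced bins can be illustrated on a toy sample. The following is a minimal sketch, not the Euclid pipeline: the redshift sample is synthetic, the function names are hypothetical, and the binning coordinate is the redshift itself, standing in for the fiducial comoving distance, which would require a cosmology calculation.

```python
import random


def equipopulated_edges(values, n_bins):
    """Bin edges chosen so that each bin holds (nearly) the same number of objects."""
    ordered = sorted(values)
    n = len(ordered)
    # Interior edges sit at the k / n_bins quantiles of the sample.
    interior = [ordered[(k * n) // n_bins] for k in range(1, n_bins)]
    return [ordered[0]] + interior + [ordered[-1]]


def equal_width_edges(values, n_bins):
    """Bin edges equally spaced in the binning coordinate."""
    lo, hi = min(values), max(values)
    step = (hi - lo) / n_bins
    return [lo + k * step for k in range(n_bins)] + [hi]


def counts_per_bin(values, edges):
    """Count objects per bin; bins are half-open except the last, which is closed."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    return counts


# Synthetic, made-up redshift sample (assumption: a gamma-shaped distribution).
rng = random.Random(0)
redshifts = [rng.gammavariate(3.0, 0.3) for _ in range(10_000)]

equi = counts_per_bin(redshifts, equipopulated_edges(redshifts, 5))
width = counts_per_bin(redshifts, equal_width_edges(redshifts, 5))
print("equipopulated counts:", equi)
print("equal-width counts:  ", width)
```

Equipopulated bins keep the shot noise per bin roughly constant, while equally spaced bins leave the sparse high-redshift tail in poorly populated bins. Which scheme gives tighter constraints is the question the simulations above address.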

Several methods for triclustering three-dimensional data require the cluster size or the number of clusters in each dimension to be specified. To address this issue, Multi-Slice Clustering (MSC) for third-order tensors finds signal slices that lie in a low-dimensional subspace for a rank-one tensor dataset in order to find a cluster based on a similarity threshold. We propose an extension algorithm called MSC-DBSCAN to extract the different clusters of slices that lie in different subspaces of the data when the dataset is a sum of r rank-one tensors (r > 1). Our algorithm uses the same input as the MSC algorithm and can find the same solution for rank-one tensor data as MSC.

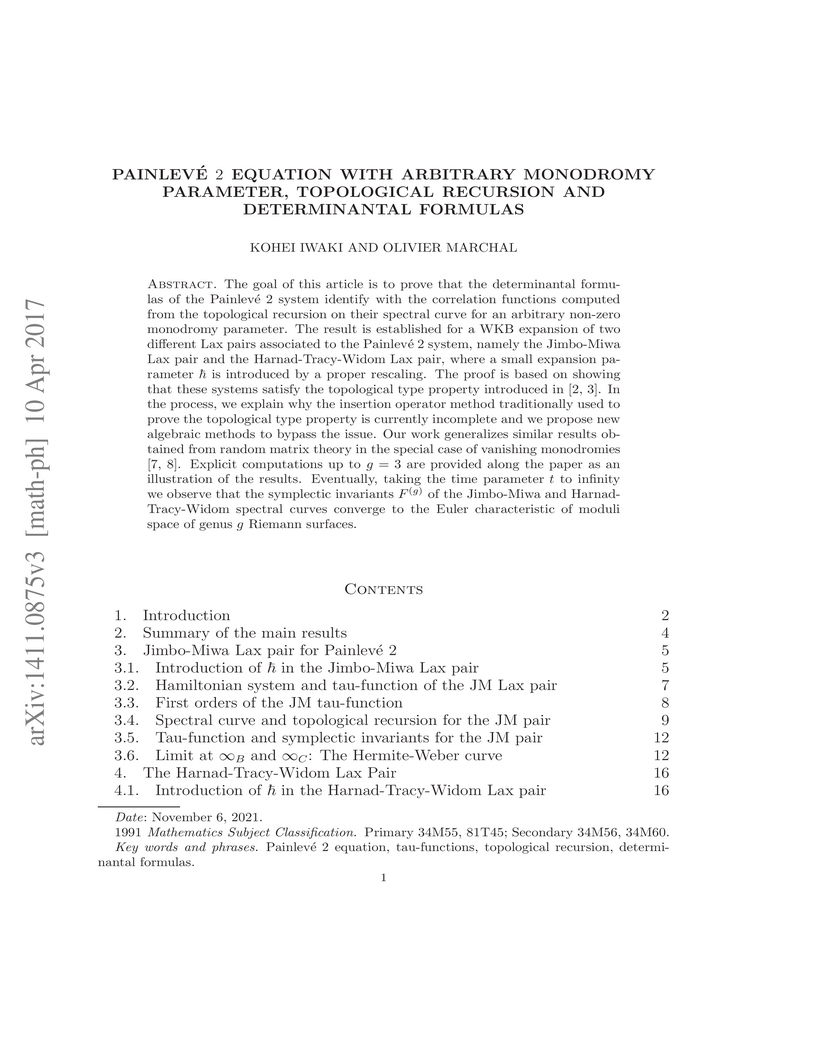

The goal of this article is to prove that the determinantal formulas of the Painlevé 2 system identify with the correlation functions computed from the topological recursion on their spectral curve for an arbitrary non-zero monodromy parameter. The result is established for two different Lax pairs associated to the Painlevé 2 system, namely the Jimbo-Miwa Lax pair and the Harnad-Tracy-Widom Lax pair, whose spectral curves are not connected by any symplectic transformation. We provide a new method to prove the topological type property without using the insertion operators. In the process, taking the time parameter t to infinity gives that the symplectic invariants F(g) computed from the Hermite-Weber curve and the Bessel curve are equal to respectively. This result generalizes similar results obtained from random matrix theory in the special case where θ = 0. We believe that this approach should apply to all six Painlevé equations with arbitrary monodromy parameters. Explicit computations up to g = 3 are provided throughout the paper as an illustration of the results.

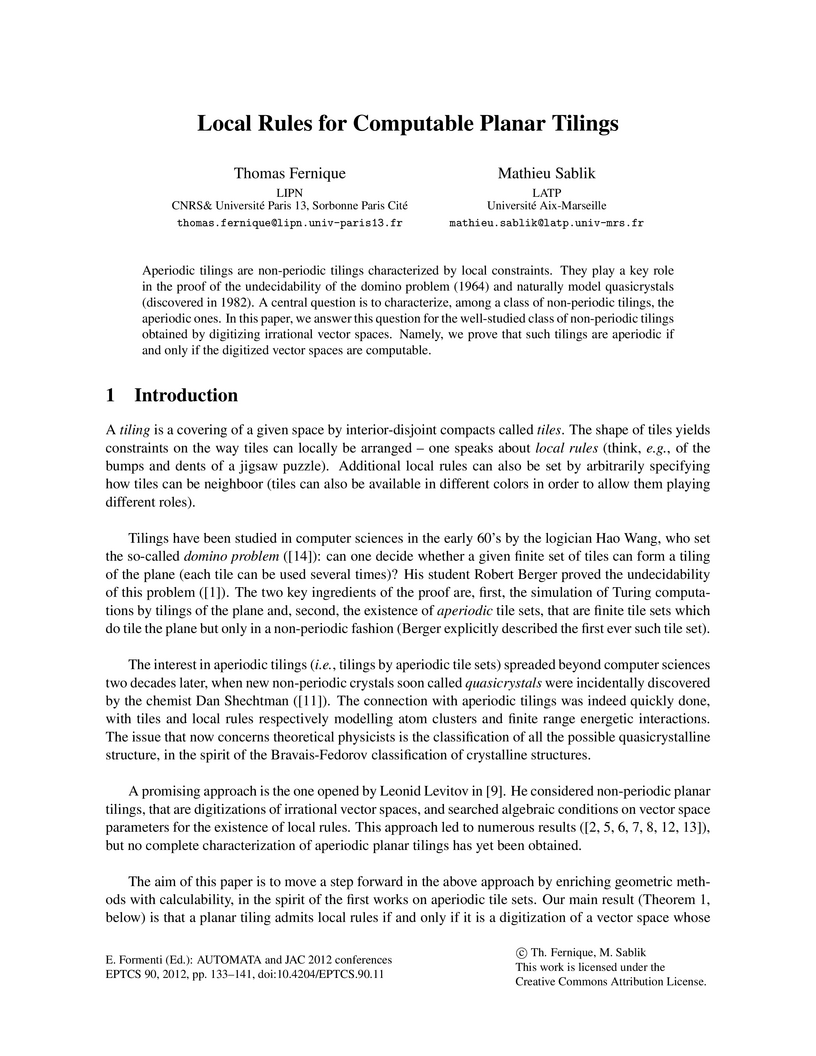

Aperiodic tilings are non-periodic tilings characterized by local constraints. They play a key role in the proof of the undecidability of the domino problem (1964) and naturally model quasicrystals (discovered in 1982). A central question is to characterize, among a class of non-periodic tilings, the aperiodic ones. In this paper, we answer this question for the well-studied class of non-periodic tilings obtained by digitizing irrational vector spaces. Namely, we prove that such tilings are aperiodic if and only if the digitized vector spaces are computable.
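Digitization is easiest to picture in the lowest-dimensional case, a line of irrational slope, whose digitization is a Sturmian word. The sketch below covers only that one-dimensional case as an illustration of "digitizing" and non-periodicity. It says nothing about the local constraints that the result above concerns, and the function name `digitize_line` is a hypothetical choice.

```python
import math


def digitize_line(alpha, length):
    """First `length` letters of the word s_n = floor((n + 1) * alpha) - floor(n * alpha).

    For 0 < alpha < 1 each letter is 0 or 1; for irrational alpha the infinite
    word is not periodic. Floating point is accurate enough for short prefixes.
    """
    return [math.floor((n + 1) * alpha) - math.floor(n * alpha) for n in range(length)]


ALPHA = (math.sqrt(5) - 1) / 2  # inverse golden ratio, an irrational slope
word = digitize_line(ALPHA, 30)

# A Sturmian word has exactly n + 1 distinct factors of length n; here n = 2.
factors = {tuple(word[i:i + 2]) for i in range(len(word) - 1)}
print("".join(map(str, word)))
print(sorted(factors))
```

The factor count shows the word is as close to periodic as a non-periodic word can be: only three of the four possible two-letter blocks ever occur.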

18 Oct 2013

We prove that, for $X$, $Y$, $A$ and $B$ matrices with entries in a non-commutative ring such that $[X_{ij}, Y_{k\ell}] = -A_{i\ell} B_{kj}$, satisfying suitable commutation relations (in particular, $X$ is a Manin matrix), the following identity holds: $\mathrm{coldet}\, X \, \mathrm{coldet}\, Y = \langle 0 | \mathrm{coldet} (a A + X (I - a^{\dagger} B)^{-1} Y) | 0 \rangle$. Furthermore, if $Y$ is also a Manin matrix, $\mathrm{coldet}\, X \, \mathrm{coldet}\, Y = \int \mathcal{D}(\psi, \psi^{\dagger}) \exp \left[ \sum_{k \geq 0} \frac{1}{k+1} (\psi^{\dagger} A \psi)^{k} (\psi^{\dagger} X B^k Y \psi) \right]$. Notations: $\langle 0 |$ and $| 0 \rangle$ are respectively the bra and the ket of the ground state, $a^{\dagger}$ and $a$ the creation and annihilation operators of a quantum harmonic oscillator, while $\psi_i^{\dagger}$ and $\psi_i$ are Grassmann variables in a Berezin integral. These results should be seen as a generalization of the classical Cauchy-Binet formula, in which $A$ and $B$ are null matrices, and of the non-commutative generalization, the Capelli identity, in which $A$ and $B$ are identity matrices and $[X_{ij}, X_{k\ell}] = [Y_{ij}, Y_{k\ell}] = 0$.
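As a consistency check on the first identity, consider the case where $A$ and $B$ are null matrices. This sketch is based only on the statement above and assumes a normalized ground state, $\langle 0 | 0 \rangle = 1$. The commutator $[X_{ij}, Y_{k\ell}]$ then vanishes and no oscillator operators remain inside the determinant:

```latex
\begin{aligned}
A = B = 0 \;\Longrightarrow\;
\langle 0 |\, \mathrm{coldet}\bigl(a A + X (I - a^{\dagger} B)^{-1} Y\bigr) \,| 0 \rangle
  &= \langle 0 |\, \mathrm{coldet}(X Y) \,| 0 \rangle \\
  &= \mathrm{coldet}(X Y)\, \langle 0 | 0 \rangle
   = \mathrm{coldet}(X Y),
\end{aligned}
\qquad\text{so}\qquad
\mathrm{coldet}\, X \,\mathrm{coldet}\, Y = \mathrm{coldet}(X Y).
```

This is the multiplicativity of the determinant, that is, the square-matrix case of the Cauchy-Binet formula, for matrices whose entries satisfy $[X_{ij}, Y_{k\ell}] = 0$.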

We propose a novel discriminative model for sequence labeling called Bregman conditional random fields (BCRF). Contrary to standard linear-chain conditional random fields, BCRF allows fast parallelizable inference algorithms based on iterative Bregman projections. We show how such models can be learned using Fenchel-Young losses, including an extension for learning from partial labels. Experimentally, our approach delivers comparable results to CRF while being faster, and achieves better results in highly constrained settings compared to mean field, another parallelizable alternative.

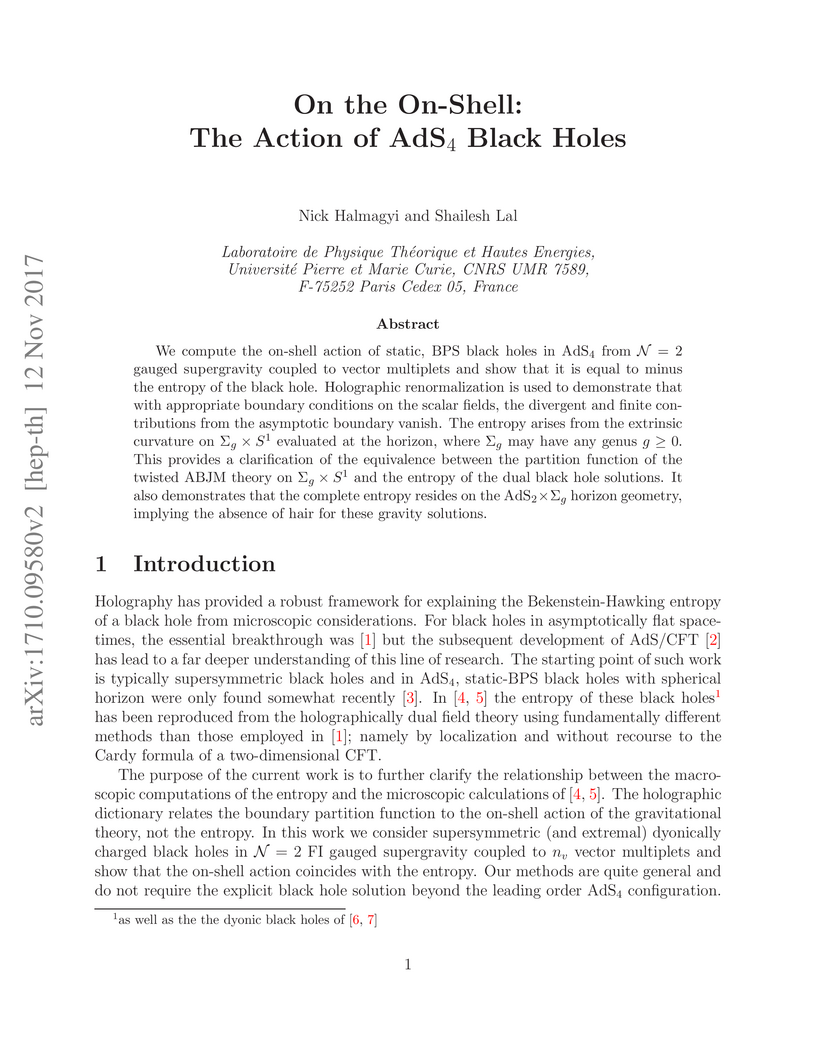

12 Nov 2017

We compute the on-shell action of static, BPS black holes in AdS$_4$ from $\mathcal{N}=2$ gauged supergravity coupled to vector multiplets and show that it is equal to minus the entropy of the black hole. Holographic renormalization is used to demonstrate that with appropriate boundary conditions on the scalar fields, the divergent and finite contributions from the asymptotic boundary vanish. The entropy arises from the extrinsic curvature on $\Sigma_g \times S^1$ evaluated at the horizon, where $\Sigma_g$ may have any genus $g \geq 0$. This provides a clarification of the equivalence between the partition function of the twisted ABJM theory on $\Sigma_g \times S^1$ and the entropy of the dual black hole solutions. It also demonstrates that the complete entropy resides on the AdS$_2 \times \Sigma_g$ horizon geometry, implying the absence of hair for these gravity solutions.

Parametric timed automata are a powerful formalism for reasoning about concurrent real-time systems with unknown or uncertain timing constants. Reducing their state space is a significant way to reduce the inherently large analysis times. We present here different merging reduction techniques based on the convex union of constraints (parametric zones), which decrease the number of states while preserving the correctness of verification and synthesis results. We perform extensive experiments and identify the best heuristics in practice, bringing a significant decrease in computation time on a benchmark library.
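The idea of merging on convex union can be sketched in one dimension, where a zone is a closed interval and the union of two zones is convex exactly when they overlap or touch. This is a simplified illustration with hypothetical names. Actual parametric zones are polyhedra over clocks and parameters, and the merging heuristics evaluated above are not reproduced here.

```python
from typing import List, Optional, Tuple

Interval = Tuple[int, int]  # closed interval [lo, hi], a 1-D stand-in for a zone


def convex_union(a: Interval, b: Interval) -> Optional[Interval]:
    """Return the union of a and b if it is itself an interval, else None."""
    (lo1, hi1), (lo2, hi2) = sorted([a, b])
    if lo2 <= hi1:  # overlap or touch: no gap, so the union is convex
        return (lo1, max(hi1, hi2))
    return None


def merge_states(zones: List[Interval]) -> List[Interval]:
    """Greedily merge zones (assumed to share a location) whose union is convex."""
    merged: List[Interval] = []
    for zone in sorted(zones):
        if merged:
            union = convex_union(merged[-1], zone)
            if union is not None:
                merged[-1] = union  # one state now represents both zones
                continue
        merged.append(zone)
    return merged


print(merge_states([(0, 2), (7, 9), (2, 5)]))  # three states reduced to two
```

Because a merged zone covers exactly the valuations of the zones it replaces, no behavior is added or lost, which is what lets merging preserve the analysis results while reducing the number of states.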

03 Feb 2018

This article extends bimetric formulations of massive gravity to make the mass of the graviton depend on its environment. This minimal extension offers a novel way to reconcile massive gravity with local tests of general relativity without invoking the Vainshtein mechanism. On cosmological scales, it is argued that the model is stable and that it circumvents the Higuchi bound, hence relaxing the constraints on the parameter space. Moreover, with this extension the strong coupling scale is also environmentally dependent in such a way that it is kept sufficiently higher than the expansion rate all the way up to the very early universe, while the present graviton mass is low enough to be phenomenologically interesting. In this sense the extended bigravity theory serves as a partial UV completion of the standard bigravity theory. This extension is very generic and robust, and a simple specific example is described.

Communication-avoiding algorithms allow redundant computations to minimize the number of inter-process communications. In this paper, we propose to exploit this redundancy for fault tolerance. We illustrate this idea with QR factorization of tall and skinny matrices, and we evaluate the number of failures our algorithm can tolerate under different semantics.

Real-world tabular learning production scenarios typically involve evolving data streams, where data arrives continuously and its distribution may change over time. In such a setting, most studies in the literature regarding supervised learning favor the use of instance incremental algorithms due to their ability to adapt to changes in the data distribution. Another significant reason for choosing these algorithms is that they \textit{avoid storing observations in memory}, as is commonly done in batch incremental settings. However, the design of instance incremental algorithms often assumes immediate availability of labels, which is an optimistic assumption. In many real-world scenarios, such as fraud detection or credit scoring, labels may be delayed. Consequently, batch incremental algorithms are widely used in many real-world tasks. This raises an important question: "In delayed settings, is instance incremental learning the best option regarding predictive performance and computational efficiency?" Unfortunately, this question has not been studied in depth, probably due to the scarcity of real datasets containing delayed information. In this study, we conduct a comprehensive empirical evaluation and analysis of this question using a real-world fraud detection problem and commonly used generated datasets. Our findings indicate that instance incremental learning is not the superior option, considering on one side state-of-the-art models such as Adaptive Random Forest (ARF) and on the other side batch learning models such as XGBoost. Additionally, when considering the interpretability of the learning systems, batch incremental solutions tend to be favored. Code: \url{this https URL}
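The effect of label delay on an instance incremental learner can be seen in a toy test-then-train loop. The sketch below is an illustration under stated assumptions, not the evaluation protocol of the study. The learner is a plain majority-class predictor rather than ARF or XGBoost, the stream is synthetic, and `prequential_accuracy` is a hypothetical helper.

```python
from collections import Counter, deque


def prequential_accuracy(stream, delay):
    """Test-then-train accuracy of a majority-class learner.

    The label of the instance seen at step t only becomes available for
    training at step t + delay + 1 (delay = 0 means the next step).
    """
    counts = Counter()
    pending = deque()  # (arrival_step, label) pairs not yet released to the learner
    correct = 0
    for t, (_features, label) in enumerate(stream):
        # Release every label whose delay has elapsed and train on it.
        while pending and pending[0][0] <= t:
            counts[pending.popleft()[1]] += 1
        prediction = counts.most_common(1)[0][0] if counts else 0
        correct += int(prediction == label)
        pending.append((t + delay + 1, label))
    return correct / len(stream)


# Synthetic stream with an abrupt change of the majority class (features unused).
stream = [(None, 0)] * 50 + [(None, 1)] * 100

immediate = prequential_accuracy(stream, delay=0)
delayed = prequential_accuracy(stream, delay=20)
print(f"immediate labels: {immediate:.3f}, labels delayed by 20 steps: {delayed:.3f}")
```

With delayed labels the learner keeps predicting the old majority class for longer after the change, so its ability to adapt, the main argument for instance incremental updates, is weakened.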
