NICT

04 Oct 2025

The proliferation of multimedia content on social media platforms has dramatically transformed how information is consumed and disseminated. While this shift enables real-time coverage of global events, it also facilitates the rapid spread of misinformation and disinformation, especially during crises such as wars, natural disasters, or elections. The rise of synthetic media and the reuse of authentic content in misleading contexts have intensified the need for robust multimedia verification tools. In this paper, we present a comprehensive system developed for the ACM Multimedia 2025 Grand Challenge on Multimedia Verification. Our system assesses the authenticity and contextual accuracy of multimedia content in multilingual settings and generates both expert-oriented verification reports and accessible summaries for the general public. We introduce a unified verification pipeline that integrates visual forensics, textual analysis, and multimodal reasoning, and propose a hybrid approach to detect out-of-context (OOC) media through semantic similarity, temporal alignment, and geolocation cues. Extensive evaluations on the Grand Challenge benchmark demonstrate the system's effectiveness across diverse real-world scenarios. Our contributions advance the state of the art in multimedia verification and offer practical tools for journalists, fact-checkers, and researchers confronting information integrity challenges in the digital age.

Contemporary deep learning models effectively handle languages with diverse

morphology despite not being directly integrated into them. Morphology and word

order are closely linked, with the latter incorporated into transformer-based

models through positional encodings. This prompts a fundamental inquiry: Is

there a correlation between the morphological complexity of a language and the

utilization of positional encoding in pre-trained language models? In pursuit

of an answer, we present the first study addressing this question, encompassing

22 languages and 5 downstream tasks. Our findings reveal that the importance of

positional encoding diminishes with increasing morphological complexity in

languages. Our study motivates the need for a deeper understanding of

positional encoding, augmenting them to better reflect the different languages

under consideration.

Vision Language Models (VLMs) often struggle with culture-specific knowledge,

particularly in languages other than English and in underrepresented cultural

contexts. To evaluate their understanding of such knowledge, we introduce

WorldCuisines, a massive-scale benchmark for multilingual and multicultural,

visually grounded language understanding. This benchmark includes a visual

question answering (VQA) dataset with text-image pairs across 30 languages and

dialects, spanning 9 language families and featuring over 1 million data

points, making it the largest multicultural VQA benchmark to date. It includes

tasks for identifying dish names and their origins. We provide evaluation

datasets in two sizes (12k and 60k instances) alongside a training dataset (1

million instances). Our findings show that while VLMs perform better with

correct location context, they struggle with adversarial contexts and

predicting specific regional cuisines and languages. To support future

research, we release a knowledge base with annotated food entries and images

along with the VQA data.

We announce the initial release of "Airavata," an instruction-tuned LLM for Hindi. Airavata was created by fine-tuning OpenHathi with diverse, instruction-tuning Hindi datasets to make it better suited for assistive tasks. Along with the model, we also share the IndicInstruct dataset, which is a collection of diverse instruction-tuning datasets to enable further research for Indic LLMs. Additionally, we present evaluation benchmarks and a framework for assessing LLM performance across tasks in Hindi. Currently, Airavata supports Hindi, but we plan to expand this to all 22 scheduled Indic languages. You can access all artifacts at this https URL.

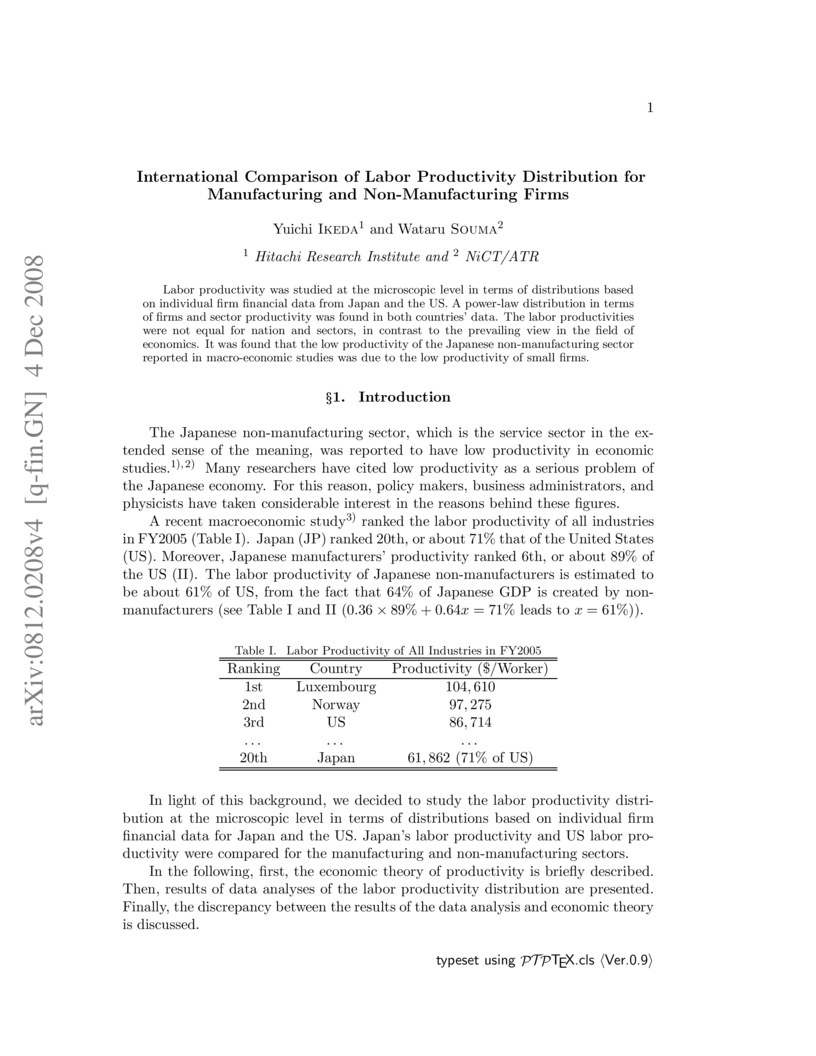

Labor productivity was studied at the microscopic level in terms of distributions based on individual firm financial data from Japan and the US. A power-law distribution in terms of firms and sector productivity was found in both countries' data. The labor productivities were not equal for nation and sectors, in contrast to the prevailing view in the field of economics. It was found that the low productivity of the Japanese non-manufacturing sector reported in macro-economic studies was due to the low productivity of small firms.

Humans are susceptible to optical illusions, which serve as valuable tools

for investigating sensory and cognitive processes. Inspired by human vision

studies, research has begun exploring whether machines, such as large vision

language models (LVLMs), exhibit similar susceptibilities to visual illusions.

However, studies often have used non-abstract images and have not distinguished

actual and apparent features, leading to ambiguous assessments of machine

cognition. To address these limitations, we introduce a visual question

answering (VQA) dataset, categorized into genuine and fake illusions, along

with corresponding control images. Genuine illusions present discrepancies

between actual and apparent features, whereas fake illusions have the same

actual and apparent features even though they look illusory due to the similar

geometric configuration. We evaluate the performance of LVLMs for genuine and

fake illusion VQA tasks and investigate whether the models discern actual and

apparent features. Our findings indicate that although LVLMs may appear to

recognize illusions by correctly answering questions about both feature types,

they predict the same answers for both Genuine Illusion and Fake Illusion VQA

questions. This suggests that their responses might be based on prior knowledge

of illusions rather than genuine visual understanding. The dataset is available

at this https URL

20 Nov 2024

The necessary time required to control a many-body quantum system is a critically important issue for the future development of quantum technologies. However, it is generally quite difficult to analyze directly, since the time evolution operator acting on a quantum system is in the form of time-ordered exponential. In this work, we examine the Baker-Campbell-Hausdorff (BCH) formula in detail and show that a distance between unitaries can be introduced, allowing us to obtain a lower bound on the control time. We find that, as far as we can compare, this lower bound on control time is tighter (better) than the standard quantum speed limits. This is because this distance takes into account the algebraic structure induced by Hamiltonians through the BCH formula, reflecting the curved nature of operator space. Consequently, we can avoid estimates based on shortcuts through algebraically impossible paths, in contrast to geometric methods that estimate the control time solely by looking at the target state or unitary operator.

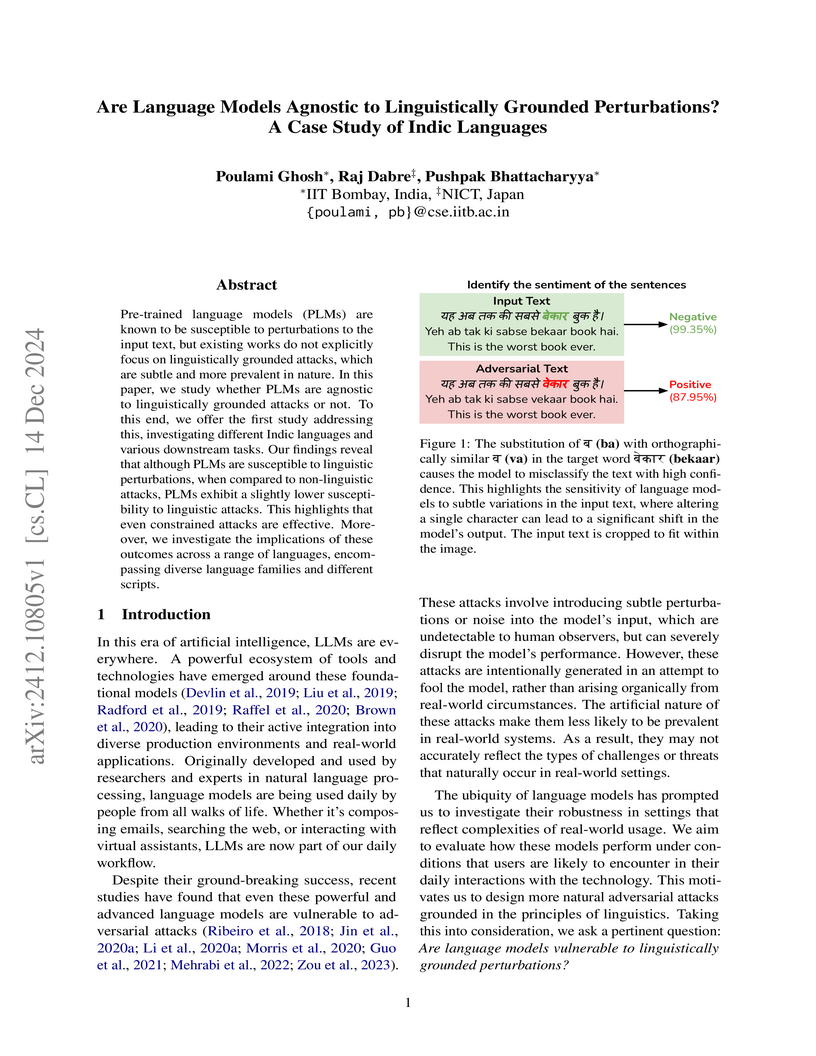

Pre-trained language models (PLMs) are known to be susceptible to perturbations to the input text, but existing works do not explicitly focus on linguistically grounded attacks, which are subtle and more prevalent in nature. In this paper, we study whether PLMs are agnostic to linguistically grounded attacks or not. To this end, we offer the first study addressing this, investigating different Indic languages and various downstream tasks. Our findings reveal that although PLMs are susceptible to linguistic perturbations, when compared to non-linguistic attacks, PLMs exhibit a slightly lower susceptibility to linguistic attacks. This highlights that even constrained attacks are effective. Moreover, we investigate the implications of these outcomes across a range of languages, encompassing diverse language families and different scripts.

09 May 2024

System Management Mode (SMM) is the highest-privileged operating mode of x86

and x86-64 processors. Through SMM exploitation, attackers can tamper with the

Unified Extensible Firmware Interface (UEFI) firmware, disabling the security

mechanisms implemented by the operating system and hypervisor. Vulnerabilities

enabling SMM code execution are often reported as Common Vulnerabilities and

Exposures (CVEs); however, no security mechanisms currently exist to prevent

attackers from analyzing those vulnerabilities. To increase the cost of

vulnerability analysis of SMM modules, we introduced SmmPack. The core concept

of SmmPack involves encrypting an SMM module with the key securely stored in a

Trusted Platform Module (TPM). We assessed the effectiveness of SmmPack in

preventing attackers from obtaining and analyzing SMM modules using various

acquisition methods. Our results show that SmmPack significantly increases the

cost by narrowing down the means of module acquisition. Furthermore, we

demonstrated that SmmPack operates without compromising the performance of the

original SMM modules. We also clarified the management and adoption methods of

SmmPack, as well as the procedure for applying BIOS updates, and demonstrated

that the implementation of SmmPack is realistic.

Personality recognition is useful for enhancing robots' ability to tailor

user-adaptive responses, thus fostering rich human-robot interactions. One of

the challenges in this task is a limited number of speakers in existing

dialogue corpora, which hampers the development of robust, speaker-independent

personality recognition models. Additionally, accurately modeling both the

interdependencies among interlocutors and the intra-dependencies within the

speaker in dialogues remains a significant issue. To address the first

challenge, we introduce personality trait interpolation for speaker data

augmentation. For the second, we propose heterogeneous conversational graph

networks to independently capture both contextual influences and inherent

personality traits. Evaluations on the RealPersonaChat corpus demonstrate our

method's significant improvements over existing baselines.

Speech enhancement (SE) performance has improved considerably owing to the use of deep learning models as a base function. Herein, we propose a perceptual contrast stretching (PCS) approach to further improve SE performance. The PCS is derived based on the critical band importance function and is applied to modify the targets of the SE model. Specifically, the contrast of target features is stretched based on perceptual importance, thereby improving the overall SE performance. Compared with post-processing-based implementations, incorporating PCS into the training phase preserves performance and reduces online computation. Notably, PCS can be combined with different SE model architectures and training criteria. Furthermore, PCS does not affect the causality or convergence of SE model training. Experimental results on the VoiceBank-DEMAND dataset show that the proposed method can achieve state-of-the-art performance on both causal (PESQ score = 3.07) and noncausal (PESQ score = 3.35) SE tasks.

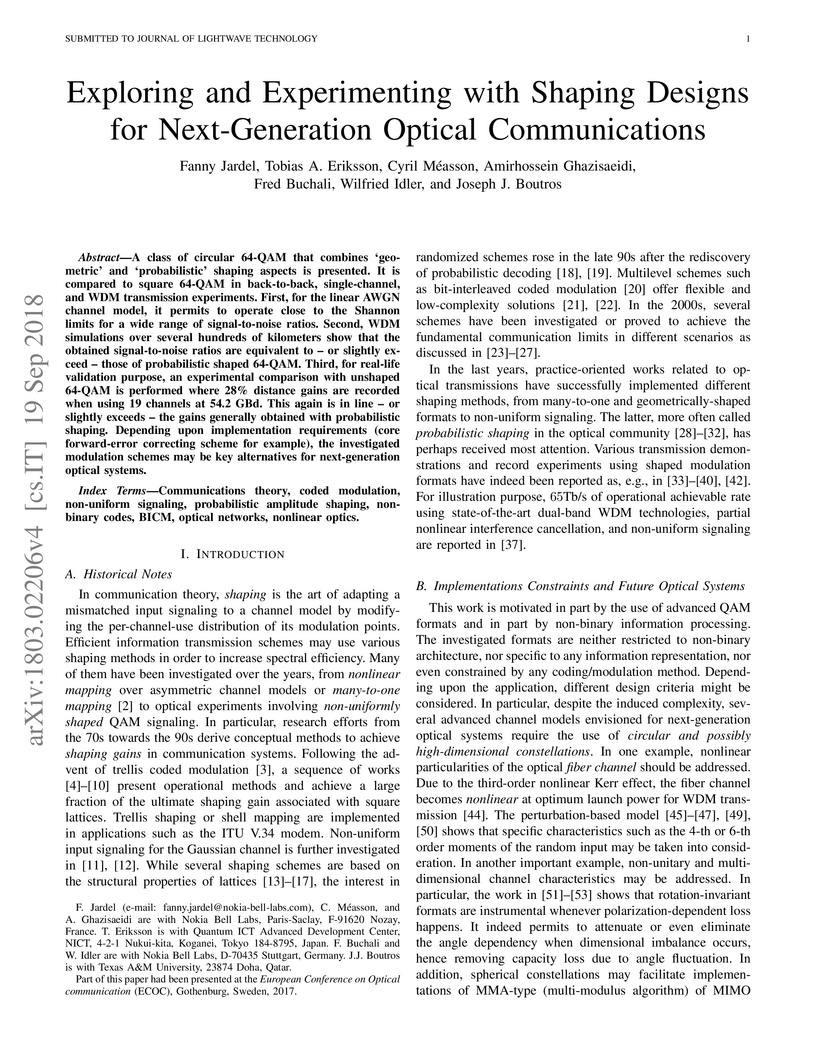

19 Sep 2018

A class of circular 64-QAM that combines 'geometric' and 'probabilistic'

shaping aspects is presented. It is compared to square 64-QAM in back-to-back,

single-channel, and WDM transmission experiments. First, for the linear AWGN

channel model, it permits to operate close to the Shannon limits for a wide

range of signal-to-noise ratios. Second, WDM simulations over several hundreds

of kilometers show that the obtained signal-to-noise ratios are equivalent to -

or slightly exceed - those of probabilistic shaped 64-QAM. Third, for real-life

validation purpose, an experimental comparison with unshaped 64-QAM is

performed where 28% distance gains are recorded when using 19 channels at 54.2

GBd. This again is in line - or slightly exceeds - the gains generally obtained

with probabilistic shaping. Depending upon implementation requirements (core

forward-error correcting scheme for example), the investigated modulation

schemes may be key alternatives for next-generation optical systems.

We have constructed NAIST Academic Travelogue Dataset (ATD) and released it free of charge for academic research. This dataset is a Japanese text dataset with a total of over 31 million words, comprising 4,672 Japanese domestic travelogues and 9,607 overseas travelogues. Before providing our dataset, there was a scarcity of widely available travelogue data for research purposes, and each researcher had to prepare their own data. This hinders the replication of existing studies and fair comparative analysis of experimental results. Our dataset enables any researchers to conduct investigation on the same data and to ensure transparency and reproducibility in research. In this paper, we describe the academic significance, characteristics, and prospects of our dataset.

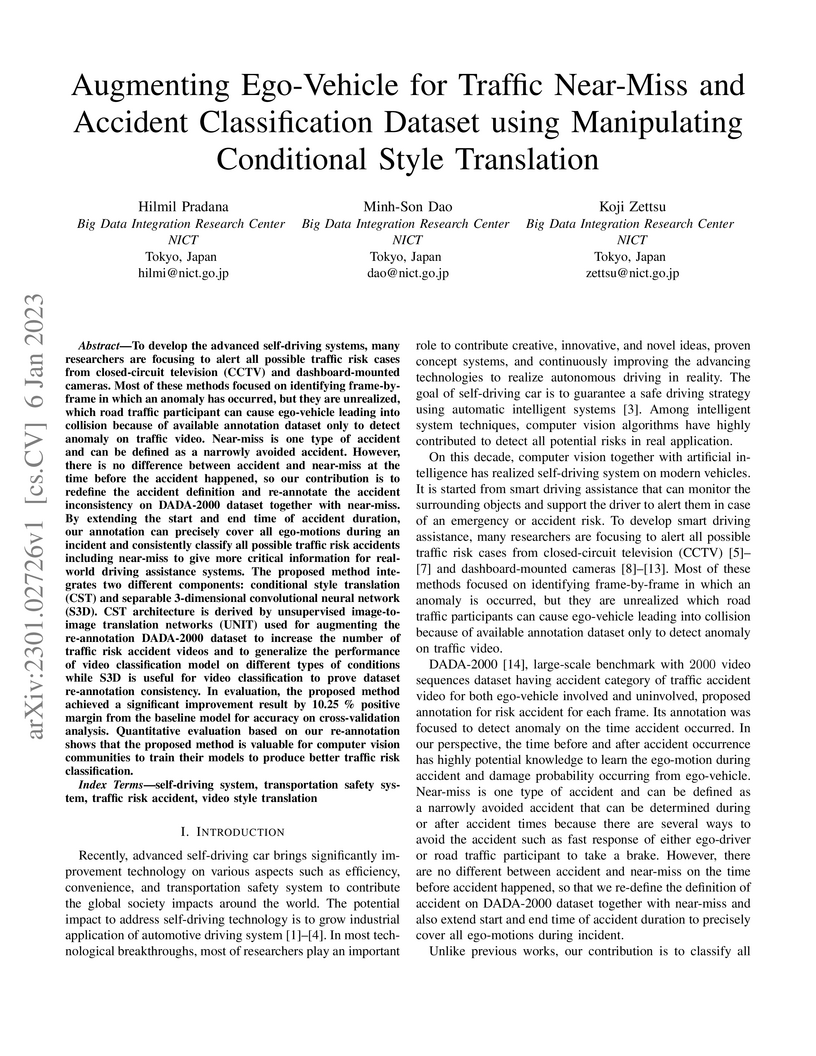

To develop the advanced self-driving systems, many researchers are focusing to alert all possible traffic risk cases from closed-circuit television (CCTV) and dashboard-mounted cameras. Most of these methods focused on identifying frame-by-frame in which an anomaly has occurred, but they are unrealized, which road traffic participant can cause ego-vehicle leading into collision because of available annotation dataset only to detect anomaly on traffic video. Near-miss is one type of accident and can be defined as a narrowly avoided accident. However, there is no difference between accident and near-miss at the time before the accident happened, so our contribution is to redefine the accident definition and re-annotate the accident inconsistency on DADA-2000 dataset together with near-miss. By extending the start and end time of accident duration, our annotation can precisely cover all ego-motions during an incident and consistently classify all possible traffic risk accidents including near-miss to give more critical information for real-world driving assistance systems. The proposed method integrates two different components: conditional style translation (CST) and separable 3-dimensional convolutional neural network (S3D). CST architecture is derived by unsupervised image-to-image translation networks (UNIT) used for augmenting the re-annotation DADA-2000 dataset to increase the number of traffic risk accident videos and to generalize the performance of video classification model on different types of conditions while S3D is useful for video classification to prove dataset re-annotation consistency. In evaluation, the proposed method achieved a significant improvement result by 10.25% positive margin from the baseline model for accuracy on cross-validation analysis.

Previous studies on sequence-based extraction of human movement trajectories have an issue of inadequate trajectory representation. Specifically, a pair of locations may not be lined up in a sequence especially when one location includes the other geographically. In this study, we propose a graph representation that retains information on the geographic hierarchy as well as the temporal order of visited locations, and have constructed a benchmark dataset for graph-structured trajectory extraction. The experiments with our baselines have demonstrated that it is possible to accurately predict visited locations and the order among them, but it remains a challenge to predict the hierarchical relations.

Large Language Models (LLMs) have grown increasingly expensive to deploy,

driving the need for effective model compression techniques. While block

pruning offers a straightforward approach to reducing model size, existing

methods often struggle to maintain performance or require substantial

computational resources for recovery. We present IteRABRe, a simple yet

effective iterative pruning method that achieves superior compression results

while requiring minimal computational resources. Using only 2.5M tokens for

recovery, our method outperforms baseline approaches by ~3% on average when

compressing the Llama3.1-8B and Qwen2.5-7B models. IteRABRe demonstrates

particular strength in the preservation of linguistic capabilities, showing an

improvement 5% over the baselines in language-related tasks. Our analysis

reveals distinct pruning characteristics between these models, while also

demonstrating preservation of multilingual capabilities.

29 Apr 2025

In this paper, we present a flowchart-based description of the decoy-state

BB84 quantum key distribution (QKD) protocol and provide a step-by-step,

self-contained information-theoretic security proof for this protocol within

the universal composable security framework. As a result, our proof yields a

key rate consistent with previous findings. Importantly, unlike all the prior

security proofs, our approach offers a fully rigorous and mathematical

justification for achieving the key rate with the claimed correctness and

secrecy parameters, thereby representing a significant step toward the formal

certification of QKD systems.

One of the main objectives in developing large vision-language models (LVLMs) is to engineer systems that can assist humans with multimodal tasks, including interpreting descriptions of perceptual experiences. A central phenomenon in this context is amodal completion, in which people perceive objects even when parts of those objects are hidden. Although numerous studies have assessed whether computer-vision algorithms can detect or reconstruct occluded regions, the inferential abilities of LVLMs on texts related to amodal completion remain unexplored. To address this gap, we constructed a benchmark grounded in Basic Formal Ontology to achieve a systematic classification of amodal completion. Our results indicate that while many LVLMs achieve human-comparable performance overall, their accuracy diverges for certain types of objects being completed. Notably, in certain categories, some LLaVA-NeXT variants and Claude 3.5 Sonnet exhibit lower accuracy on original images compared to blank stimuli lacking visual content. Intriguingly, this disparity emerges only under Japanese prompting, suggesting a deficiency in Japanese-specific linguistic competence among these models.

Cognates are variants of the same lexical form across different languages;

for example 'fonema' in Spanish and 'phoneme' in English are cognates, both of

which mean 'a unit of sound'. The task of automatic detection of cognates among

any two languages can help downstream NLP tasks such as Cross-lingual

Information Retrieval, Computational Phylogenetics, and Machine Translation. In

this paper, we demonstrate the use of cross-lingual word embeddings for

detecting cognates among fourteen Indian Languages. Our approach introduces the

use of context from a knowledge graph to generate improved feature

representations for cognate detection. We, then, evaluate the impact of our

cognate detection mechanism on neural machine translation (NMT), as a

downstream task. We evaluate our methods to detect cognates on a challenging

dataset of twelve Indian languages, namely, Sanskrit, Hindi, Assamese, Oriya,

Kannada, Gujarati, Tamil, Telugu, Punjabi, Bengali, Marathi, and Malayalam.

Additionally, we create evaluation datasets for two more Indian languages,

Konkani and Nepali. We observe an improvement of up to 18% points, in terms of

F-score, for cognate detection. Furthermore, we observe that cognates extracted

using our method help improve NMT quality by up to 2.76 BLEU. We also release

our code, newly constructed datasets and cross-lingual models publicly.

In this paper, we describe MorisienMT, a dataset for benchmarking machine translation quality of Mauritian Creole. Mauritian Creole (Morisien) is the lingua franca of the Republic of Mauritius and is a French-based creole language. MorisienMT consists of a parallel corpus between English and Morisien, French and Morisien and a monolingual corpus for Morisien. We first give an overview of Morisien and then describe the steps taken to create the corpora and, from it, the training and evaluation splits. Thereafter, we establish a variety of baseline models using the created parallel corpora as well as large French--English corpora for transfer learning. We release our datasets publicly for research purposes and hope that this spurs research for Morisien machine translation.

There are no more papers matching your filters at the moment.