Pusan National University

This paper provides a comprehensive survey of collaborative mechanisms in Large Language Model (LLM)-based multi-agent systems

Harvard University

Harvard University the University of TokyoPusan National UniversityHarvard Medical School

the University of TokyoPusan National UniversityHarvard Medical School Technical University of MunichDana-Farber Cancer InstituteMass General BrighamBroad Institute of Harvard and MITHelmholtz Munich – German Research Center for Environment and HealthEmory University School of MedicineNational Cancer Center Exploratory Oncology Research & Clinical Trial Center

Technical University of MunichDana-Farber Cancer InstituteMass General BrighamBroad Institute of Harvard and MITHelmholtz Munich – German Research Center for Environment and HealthEmory University School of MedicineNational Cancer Center Exploratory Oncology Research & Clinical Trial CenterThis paper introduces TITAN, a multimodal foundation model that effectively processes whole slide pathology images and text through a three-stage pretraining approach

HEST-1k introduces a large, meticulously curated dataset of paired spatial transcriptomics and histology data, alongside a supporting library and benchmark, enabling the evaluation of deep learning models for predicting gene expression from tissue morphology. This resource helps advance the capabilities of foundation models in pathology and facilitates the exploration of morphomolecular relationships.

University of Washington

University of Washington University of Cambridge

University of Cambridge Harvard UniversityPusan National University

Harvard UniversityPusan National University University of British ColumbiaHarvard Medical SchoolDana-Farber Cancer InstituteMass General BrighamBroad Institute of Harvard and MITRutgers Robert Wood Johnson Medical SchoolEmory University School of MedicineJohns Hopkins HospitalBeth-Israel Deaconess Medical Center

University of British ColumbiaHarvard Medical SchoolDana-Farber Cancer InstituteMass General BrighamBroad Institute of Harvard and MITRutgers Robert Wood Johnson Medical SchoolEmory University School of MedicineJohns Hopkins HospitalBeth-Israel Deaconess Medical CenterResearchers developed VORTEX, an artificial intelligence framework that predicts 3D spatial gene expression patterns across entire tissue volumes using 3D morphological imaging data and limited 2D spatial transcriptomics. This method successfully mapped complex expression landscapes and tumor microenvironments in various cancer types, overcoming the limitations of traditional 2D spatial transcriptomics.

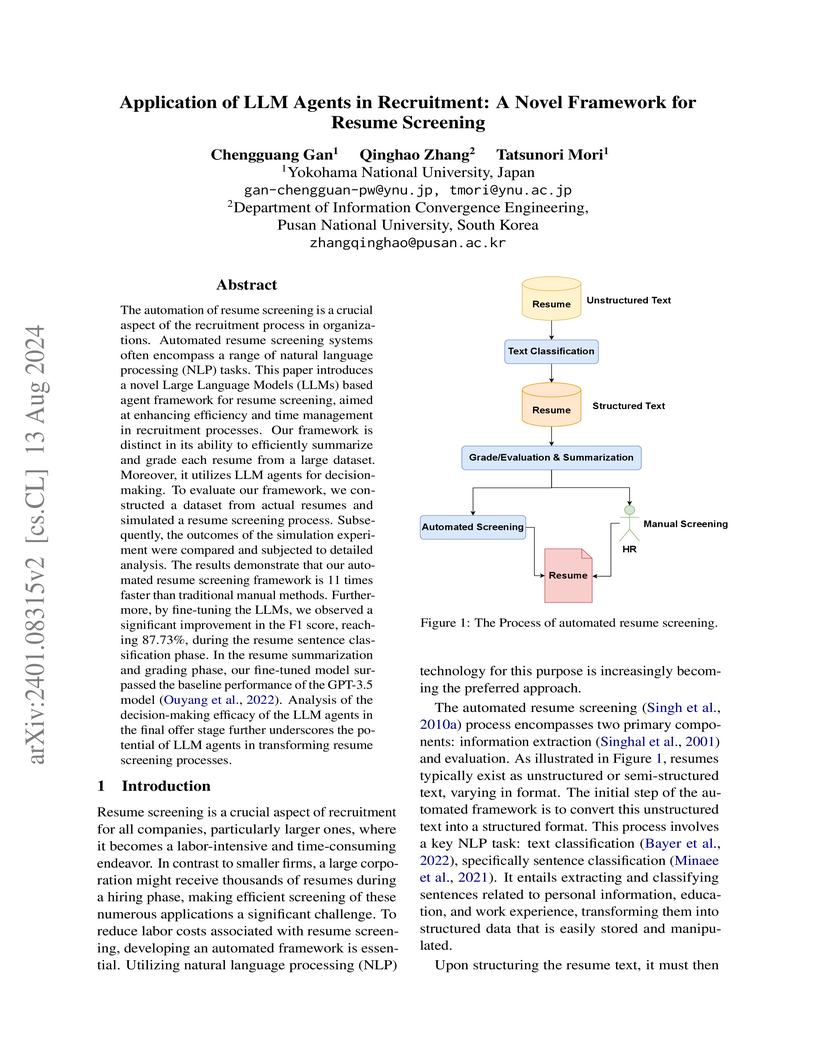

The automation of resume screening is a crucial aspect of the recruitment process in organizations. Automated resume screening systems often encompass a range of natural language processing (NLP) tasks. This paper introduces a novel Large Language Models (LLMs) based agent framework for resume screening, aimed at enhancing efficiency and time management in recruitment processes. Our framework is distinct in its ability to efficiently summarize and grade each resume from a large dataset. Moreover, it utilizes LLM agents for decision-making. To evaluate our framework, we constructed a dataset from actual resumes and simulated a resume screening process. Subsequently, the outcomes of the simulation experiment were compared and subjected to detailed analysis. The results demonstrate that our automated resume screening framework is 11 times faster than traditional manual methods. Furthermore, by fine-tuning the LLMs, we observed a significant improvement in the F1 score, reaching 87.73\%, during the resume sentence classification phase. In the resume summarization and grading phase, our fine-tuned model surpassed the baseline performance of the GPT-3.5 model. Analysis of the decision-making efficacy of the LLM agents in the final offer stage further underscores the potential of LLM agents in transforming resume screening processes.

Recent advances in dynamic scene reconstruction have significantly benefited from 3D Gaussian Splatting, yet existing methods show inconsistent performance across diverse scenes, indicating no single approach effectively handles all dynamic challenges. To overcome these limitations, we propose Mixture of Experts for Dynamic Gaussian Splatting (MoE-GS), a unified framework integrating multiple specialized experts via a novel Volume-aware Pixel Router. Our router adaptively blends expert outputs by projecting volumetric Gaussian-level weights into pixel space through differentiable weight splatting, ensuring spatially and temporally coherent results. Although MoE-GS improves rendering quality, the increased model capacity and reduced FPS are inherent to the MoE architecture. To mitigate this, we explore two complementary directions: (1) single-pass multi-expert rendering and gate-aware Gaussian pruning, which improve efficiency within the MoE framework, and (2) a distillation strategy that transfers MoE performance to individual experts, enabling lightweight deployment without architectural changes. To the best of our knowledge, MoE-GS is the first approach incorporating Mixture-of-Experts techniques into dynamic Gaussian splatting. Extensive experiments on the N3V and Technicolor datasets demonstrate that MoE-GS consistently outperforms state-of-the-art methods with improved efficiency. Video demonstrations are available at this https URL.

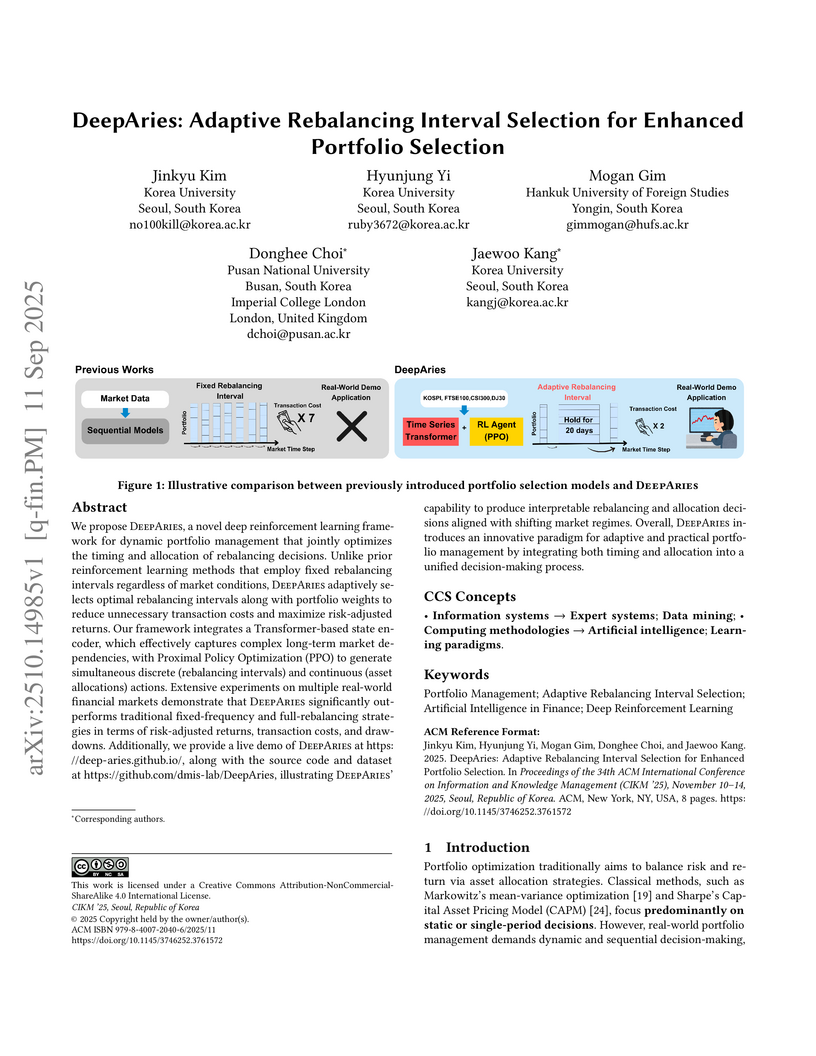

We propose DeepAries , a novel deep reinforcement learning framework for dynamic portfolio management that jointly optimizes the timing and allocation of rebalancing decisions. Unlike prior reinforcement learning methods that employ fixed rebalancing intervals regardless of market conditions, DeepAries adaptively selects optimal rebalancing intervals along with portfolio weights to reduce unnecessary transaction costs and maximize risk-adjusted returns. Our framework integrates a Transformer-based state encoder, which effectively captures complex long-term market dependencies, with Proximal Policy Optimization (PPO) to generate simultaneous discrete (rebalancing intervals) and continuous (asset allocations) actions. Extensive experiments on multiple real-world financial markets demonstrate that DeepAries significantly outperforms traditional fixed-frequency and full-rebalancing strategies in terms of risk-adjusted returns, transaction costs, and drawdowns. Additionally, we provide a live demo of DeepAries at this https URL, along with the source code and dataset at this https URL, illustrating DeepAries' capability to produce interpretable rebalancing and allocation decisions aligned with shifting market regimes. Overall, DeepAries introduces an innovative paradigm for adaptive and practical portfolio management by integrating both timing and allocation into a unified decision-making process.

High-resolution optical microscopy has transformed biological imaging, yet its resolution and contrast deteriorate with depth due to multiple light scattering. Conventional correction strategies typically approximate the medium as one or a few discrete layers. While effective in the presence of dominant scattering layers, these approaches break down in thick, volumetric tissues, where accurate modeling would require an impractically large number of layers. To address this challenge, we introduce an inverse-scattering framework that represents the entire volume as a superposition of angular deflectors, each corresponding to scattering at a specific angle. This angular formulation is particularly well suited to biological tissues, where narrow angular spread due to the dominant forward scattering allow most multiple scattering to be captured with relatively few components. Within this framework, we solve the inverse problem by progressively incorporating contributions from small to large deflection angles. Applied to simulations and in vivo reflection-mode imaging through intact mouse skull, our method reconstructs up to 121 angular components, converting ~80% of multiply scattered light into signal. This enables non-invasive visualization of osteocytes in the skull that remain inaccessible to existing layer-based methods. These results establish the scattering-angle basis as a deterministic framework for imaging through complex media, paving the way for high-resolution microscopy deep inside living tissues.

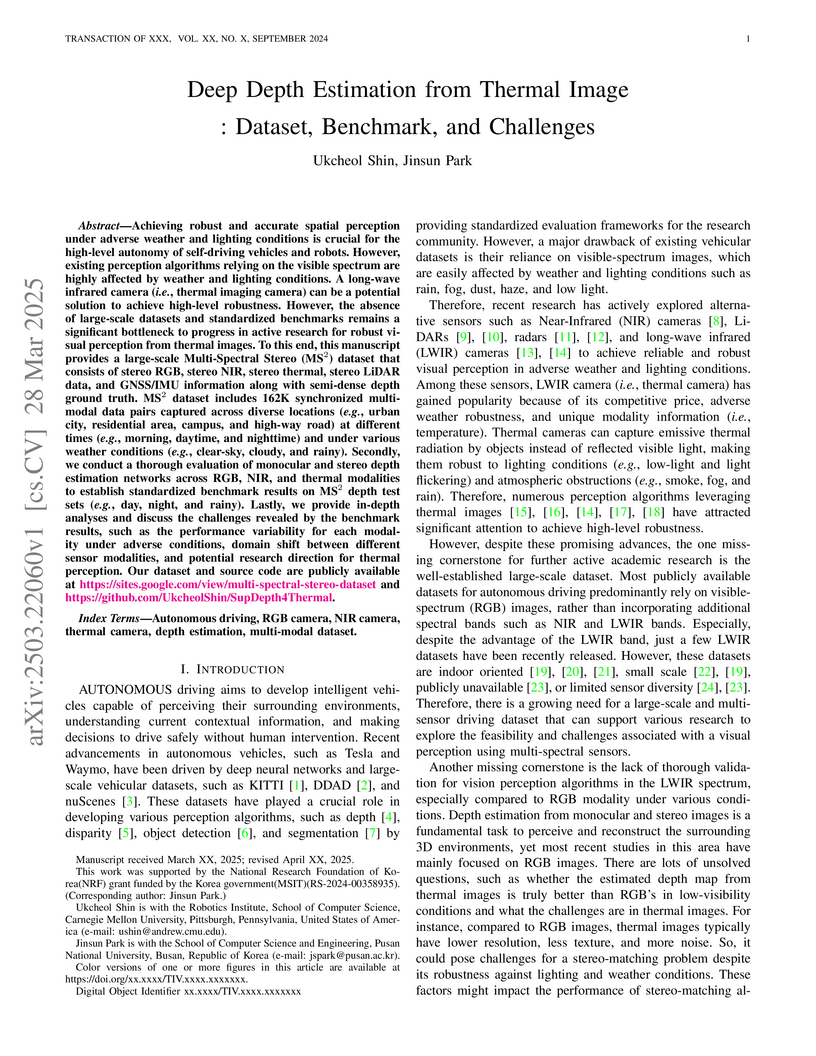

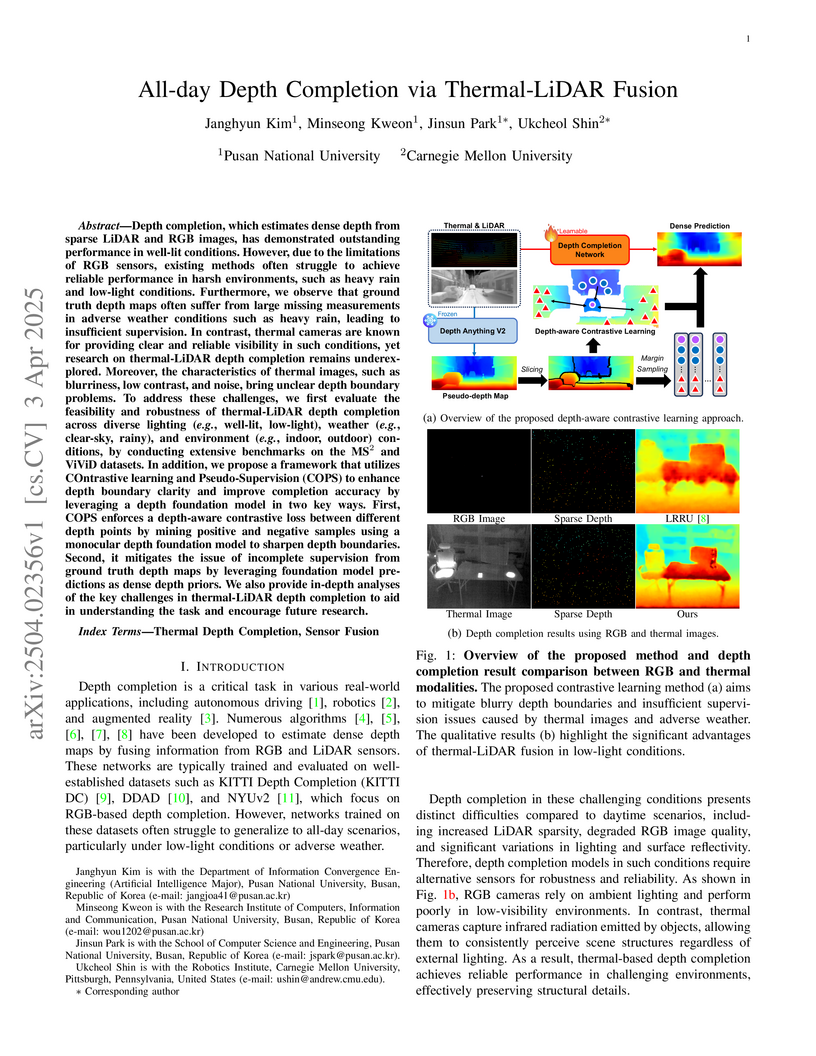

This research introduces the large-scale Multi-Spectral Stereo (MS²) dataset and a standardized benchmark for monocular and stereo depth estimation across RGB, NIR, and thermal modalities. It demonstrates that thermal cameras provide the most robust depth estimation in challenging conditions like night and rain, offering a critical resource for developing condition-agnostic autonomous systems.

17 Oct 2025

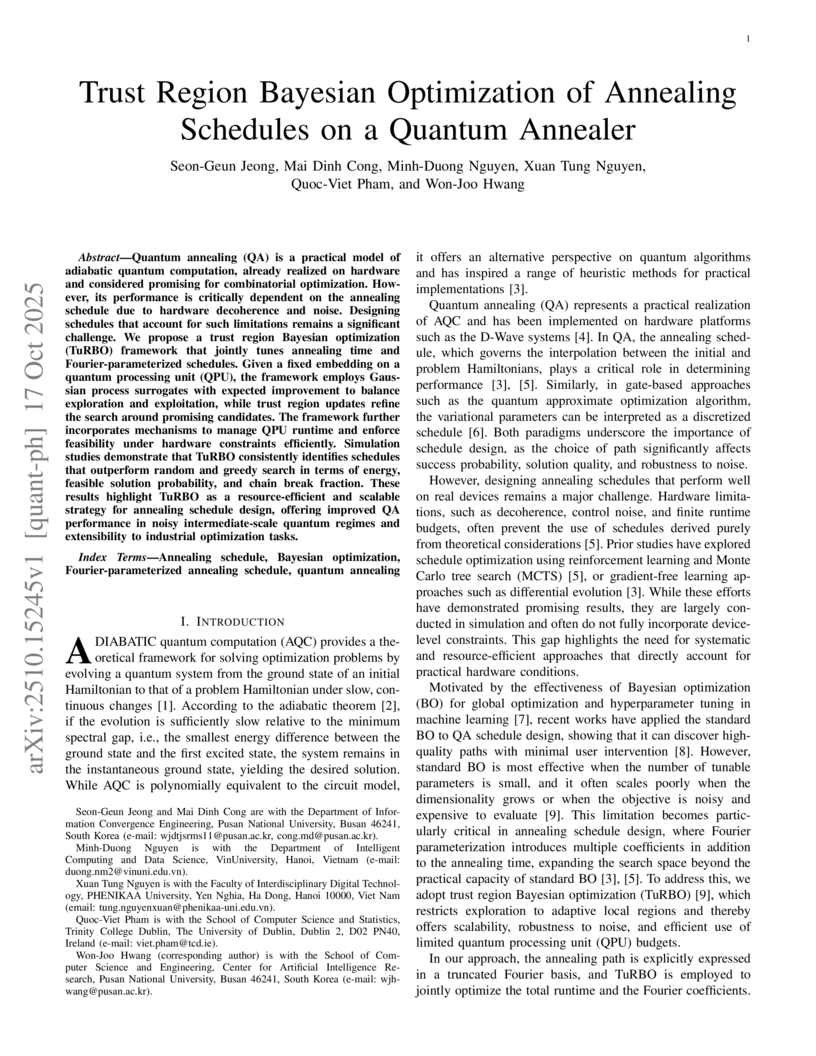

Quantum annealing (QA) is a practical model of adiabatic quantum computation, already realized on hardware and considered promising for combinatorial optimization. However, its performance is critically dependent on the annealing schedule due to hardware decoherence and noise. Designing schedules that account for such limitations remains a significant challenge. We propose a trust region Bayesian optimization (TuRBO) framework that jointly tunes annealing time and Fourier-parameterized schedules. Given a fixed embedding on a quantum processing unit (QPU), the framework employs Gaussian process surrogates with expected improvement to balance exploration and exploitation, while trust region updates refine the search around promising candidates. The framework further incorporates mechanisms to manage QPU runtime and enforce feasibility under hardware constraints efficiently. Simulation studies demonstrate that TuRBO consistently identifies schedules that outperform random and greedy search in terms of energy, feasible solution probability, and chain break fraction. These results highlight TuRBO as a resource-efficient and scalable strategy for annealing schedule design, offering improved QA performance in noisy intermediate-scale quantum regimes and extensibility to industrial optimization tasks.

Recent LiDAR-based 3D Object Detection (3DOD) methods show promising results, but they often do not generalize well to target domains outside the source (or training) data distribution. To reduce such domain gaps and thus to make 3DOD models more generalizable, we introduce a novel unsupervised domain adaptation (UDA) method, called CMDA, which (i) leverages visual semantic cues from an image modality (i.e., camera images) as an effective semantic bridge to close the domain gap in the cross-modal Bird's Eye View (BEV) representations. Further, (ii) we also introduce a self-training-based learning strategy, wherein a model is adversarially trained to generate domain-invariant features, which disrupt the discrimination of whether a feature instance comes from a source or an unseen target domain. Overall, our CMDA framework guides the 3DOD model to generate highly informative and domain-adaptive features for novel data distributions. In our extensive experiments with large-scale benchmarks, such as nuScenes, Waymo, and KITTI, those mentioned above provide significant performance gains for UDA tasks, achieving state-of-the-art performance.

Protein-ligand binding affinity is critical in drug discovery, but experimentally determining it is time-consuming and expensive. Artificial intelligence (AI) has been used to predict binding affinity, significantly accelerating this process. However, the high-performance requirements and vast datasets involved in affinity prediction demand increasingly large AI models, requiring substantial computational resources and training time. Quantum machine learning has emerged as a promising solution to these challenges. In particular, hybrid quantum-classical models can reduce the number of parameters while maintaining or improving performance compared to classical counterparts. Despite these advantages, challenges persist: why hybrid quantum models achieve these benefits, whether quantum neural networks (QNNs) can replace classical neural networks, and whether such models are feasible on noisy intermediate-scale quantum (NISQ) devices. This study addresses these challenges by proposing a hybrid quantum neural network (HQNN) that empirically demonstrates the capability to approximate non-linear functions in the latent feature space derived from classical embedding. The primary goal of this study is to achieve a parameter-efficient model in binding affinity prediction while ensuring feasibility on NISQ devices. Numerical results indicate that HQNN achieves comparable or superior performance and parameter efficiency compared to classical neural networks, underscoring its potential as a viable replacement. This study highlights the potential of hybrid QML in computational drug discovery, offering insights into its applicability and advantages in addressing the computational challenges of protein-ligand binding affinity prediction.

Researchers at Pusan National University developed Cityscape-Adverse, a new benchmark dataset featuring diverse adverse environmental conditions, generated using advanced diffusion-based image editing while meticulously preserving semantic labels. This benchmark helps evaluate semantic segmentation model robustness and demonstrates that training with such high-fidelity synthetic data significantly enhances model generalization to real-world challenging scenarios.

12 Sep 2023

Behind The Wings: The Case of Reverse Engineering and Drone Hijacking in DJI Enhanced Wi-Fi Protocol

Behind The Wings: The Case of Reverse Engineering and Drone Hijacking in DJI Enhanced Wi-Fi Protocol

This research paper entails an examination of the Enhanced Wi-Fi protocol, focusing on its control command reverse-engineering analysis and subsequent demonstration of a hijacking attack. Our investigation discovered vulnerabilities in the Enhanced Wi-Fi control commands, rendering them susceptible to hijacking attacks. Notably, the study established that even readily available and cost-effective commercial off-the-shelf Wi-Fi routers could be leveraged as effective tools for executing such attacks. To illustrate this vulnerability, a proof-of-concept remote hijacking attack was carried out on a DJI Mini SE drone, whereby we intercepted the control commands to manipulate the drone's flight trajectory. The findings of this research emphasize the critical necessity of implementing robust security measures to safeguard unmanned aerial vehicles against potential hijacking threats. Considering that civilian drones are now used as war weapons, the study underscores the urgent need for further exploration and advancement in the domain of civilian drone security.

29 Aug 2024

The increase in global trade, the impact of COVID-19, and the tightening of environmental and safety regulations have brought significant changes to the maritime transportation market. To address these challenges, the port logistics sector is rapidly adopting advanced technologies such as big data, Internet of Things, and AI. However, despite these efforts, solving several issues related to productivity, environment, and safety in the port logistics sector requires collaboration among various stakeholders. In this study, we introduce an AI-based port logistics metaverse framework (PLMF) that facilitates communication, data sharing, and decision-making among diverse stakeholders in port logistics. The developed PLMF includes 11 AI-based metaverse content modules related to productivity, environment, and safety, enabling the monitoring, simulation, and decision making of real port logistics processes. Examples of these modules include the prediction of expected time of arrival, dynamic port operation planning, monitoring and prediction of ship fuel consumption and port equipment emissions, and detection and monitoring of hazardous ship routes and accidents between workers and port equipment. We conducted a case study using historical data from Busan Port to analyze the effectiveness of the PLMF. By predicting the expected arrival time of ships within the PLMF and optimizing port operations accordingly, we observed that the framework could generate additional direct revenue of approximately 7.3 million dollars annually, along with a 79% improvement in ship punctuality, resulting in certain environmental benefits for the port. These findings indicate that PLMF not only provides a platform for various stakeholders in port logistics to participate and collaborate but also significantly enhances the accuracy and sustainability of decision-making in port logistics through AI-based simulations.

University of Cincinnati University College LondonPusan National University

University College LondonPusan National University City University of Hong Kong

City University of Hong Kong King’s College LondonShenzhen UniversityTufts UniversityUW–MadisonHong Kong Centre for Cerebro-Cardiovascular Health EngineeringDKFZ German Cancer Research CenterShenzhen RayShape Medical Technology Inc.University of Wisconsin (UW) School of Medicine and Public Health

King’s College LondonShenzhen UniversityTufts UniversityUW–MadisonHong Kong Centre for Cerebro-Cardiovascular Health EngineeringDKFZ German Cancer Research CenterShenzhen RayShape Medical Technology Inc.University of Wisconsin (UW) School of Medicine and Public Health

University College LondonPusan National University

University College LondonPusan National University City University of Hong Kong

City University of Hong Kong King’s College LondonShenzhen UniversityTufts UniversityUW–MadisonHong Kong Centre for Cerebro-Cardiovascular Health EngineeringDKFZ German Cancer Research CenterShenzhen RayShape Medical Technology Inc.University of Wisconsin (UW) School of Medicine and Public Health

King’s College LondonShenzhen UniversityTufts UniversityUW–MadisonHong Kong Centre for Cerebro-Cardiovascular Health EngineeringDKFZ German Cancer Research CenterShenzhen RayShape Medical Technology Inc.University of Wisconsin (UW) School of Medicine and Public HealthTrackerless freehand ultrasound reconstruction aims to reconstruct 3D volumes from sequences of 2D ultrasound images without relying on external tracking systems. By eliminating the need for optical or electromagnetic trackers, this approach offers a low-cost, portable, and widely deployable alternative to more expensive volumetric ultrasound imaging systems, particularly valuable in resource-constrained clinical settings. However, predicting long-distance transformations and handling complex probe trajectories remain challenging. The TUS-REC2024 Challenge establishes the first benchmark for trackerless 3D freehand ultrasound reconstruction by providing a large publicly available dataset, along with a baseline model and a rigorous evaluation framework. By the submission deadline, the Challenge had attracted 43 registered teams, of which 6 teams submitted 21 valid dockerized solutions. The submitted methods span a wide range of approaches, including the state space model, the recurrent model, the registration-driven volume refinement, the attention mechanism, and the physics-informed model. This paper provides a comprehensive background introduction and literature review in the field, presents an overview of the challenge design and dataset, and offers a comparative analysis of submitted methods across multiple evaluation metrics. These analyses highlight both the progress and the current limitations of state-of-the-art approaches in this domain and provide insights for future research directions. All data and code are publicly available to facilitate ongoing development and reproducibility. As a live and evolving benchmark, it is designed to be continuously iterated and improved. The Challenge was held at MICCAI 2024 and is organised again at MICCAI 2025, reflecting its sustained commitment to advancing this field.

University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign University of Pittsburgh

University of Pittsburgh University of California, Santa BarbaraSLAC National Accelerator Laboratory

University of California, Santa BarbaraSLAC National Accelerator Laboratory Harvard University

Harvard University Imperial College LondonUniversity of OklahomaDESY

Imperial College LondonUniversity of OklahomaDESY University of ManchesterUniversity of ZurichUniversity of Bern

University of ManchesterUniversity of ZurichUniversity of Bern UC Berkeley

UC Berkeley University of OxfordNikhefIndiana UniversityPusan National UniversityScuola Normale Superiore

University of OxfordNikhefIndiana UniversityPusan National UniversityScuola Normale Superiore Cornell University

Cornell University University of California, San Diego

University of California, San Diego Northwestern UniversityUniversity of Granada

Northwestern UniversityUniversity of Granada CERN

CERN Argonne National LaboratoryFlorida State University

Argonne National LaboratoryFlorida State University Seoul National University

Seoul National University Huazhong University of Science and Technology

Huazhong University of Science and Technology University of Wisconsin-MadisonUniversity of Pisa

University of Wisconsin-MadisonUniversity of Pisa Lawrence Berkeley National LaboratoryPolitecnico di MilanoUniversity of LiverpoolUniversity of Iowa

Lawrence Berkeley National LaboratoryPolitecnico di MilanoUniversity of LiverpoolUniversity of Iowa Duke UniversityUniversity of GenevaUniversity of Glasgow

Duke UniversityUniversity of GenevaUniversity of Glasgow University of WarwickIowa State University

University of WarwickIowa State University Karlsruhe Institute of TechnologyUniversità di Milano-BicoccaTechnische Universität MünchenOld Dominion UniversityTexas Tech University

Karlsruhe Institute of TechnologyUniversità di Milano-BicoccaTechnische Universität MünchenOld Dominion UniversityTexas Tech University Durham UniversityNiels Bohr InstituteCzech Technical University in PragueUniversity of OregonUniversity of AlabamaSTFC Rutherford Appleton LaboratoryLawrence Livermore National Laboratory

Durham UniversityNiels Bohr InstituteCzech Technical University in PragueUniversity of OregonUniversity of AlabamaSTFC Rutherford Appleton LaboratoryLawrence Livermore National Laboratory University of California, Santa CruzUniversity of SarajevoJefferson LabTOBB University of Economics and TechnologyUniversity of California RiversideUniversity of HuddersfieldCEA SaclayRadboud University NijmegenUniversitá degli Studi dell’InsubriaHumboldt University BerlinINFN Milano-BicoccaUniversità degli Studi di BresciaIIT GuwahatiDaresbury LaboratoryINFN - PadovaINFN MilanoUniversità degli Studi di BariCockcroft InstituteHelwan UniversityINFN-TorinoINFN PisaINFN-BolognaBrookhaven National Laboratory (BNL)INFN Laboratori Nazionali del SudINFN PaviaMax Planck Institute for Nuclear PhysicsINFN TriesteINFN Roma TreINFN GenovaFermi National Accelerator Laboratory (Fermilab)INFN BariINFN-FirenzeINFN FerraraPunjab Agricultural UniversityEuropean Spallation Source (ESS)Fusion for EnergyInternational Institute of Physics (IIP)INFN-Roma La SapienzaUniversit

degli Studi di GenovaUniversit

di FerraraUniversit

degli Studi di PadovaUniversit

di Roma

La SapienzaRWTH Aachen UniversityUniversit

di TorinoSapienza Universit

di RomaUniversit

degli Studi di FirenzeUniversit

degli Studi di TorinoUniversit

di PaviaUniversit

Di BolognaUniversit

degli Studi Roma Tre

University of California, Santa CruzUniversity of SarajevoJefferson LabTOBB University of Economics and TechnologyUniversity of California RiversideUniversity of HuddersfieldCEA SaclayRadboud University NijmegenUniversitá degli Studi dell’InsubriaHumboldt University BerlinINFN Milano-BicoccaUniversità degli Studi di BresciaIIT GuwahatiDaresbury LaboratoryINFN - PadovaINFN MilanoUniversità degli Studi di BariCockcroft InstituteHelwan UniversityINFN-TorinoINFN PisaINFN-BolognaBrookhaven National Laboratory (BNL)INFN Laboratori Nazionali del SudINFN PaviaMax Planck Institute for Nuclear PhysicsINFN TriesteINFN Roma TreINFN GenovaFermi National Accelerator Laboratory (Fermilab)INFN BariINFN-FirenzeINFN FerraraPunjab Agricultural UniversityEuropean Spallation Source (ESS)Fusion for EnergyInternational Institute of Physics (IIP)INFN-Roma La SapienzaUniversit

degli Studi di GenovaUniversit

di FerraraUniversit

degli Studi di PadovaUniversit

di Roma

La SapienzaRWTH Aachen UniversityUniversit

di TorinoSapienza Universit

di RomaUniversit

degli Studi di FirenzeUniversit

degli Studi di TorinoUniversit

di PaviaUniversit

Di BolognaUniversit

degli Studi Roma TreThis review, by the International Muon Collider Collaboration (IMCC), outlines the scientific case and technological feasibility of a multi-TeV muon collider, demonstrating its potential for unprecedented energy reach and precision measurements in particle physics. It presents a comprehensive conceptual design and R&D roadmap for a collider capable of reaching 10+ TeV center-of-mass energy.

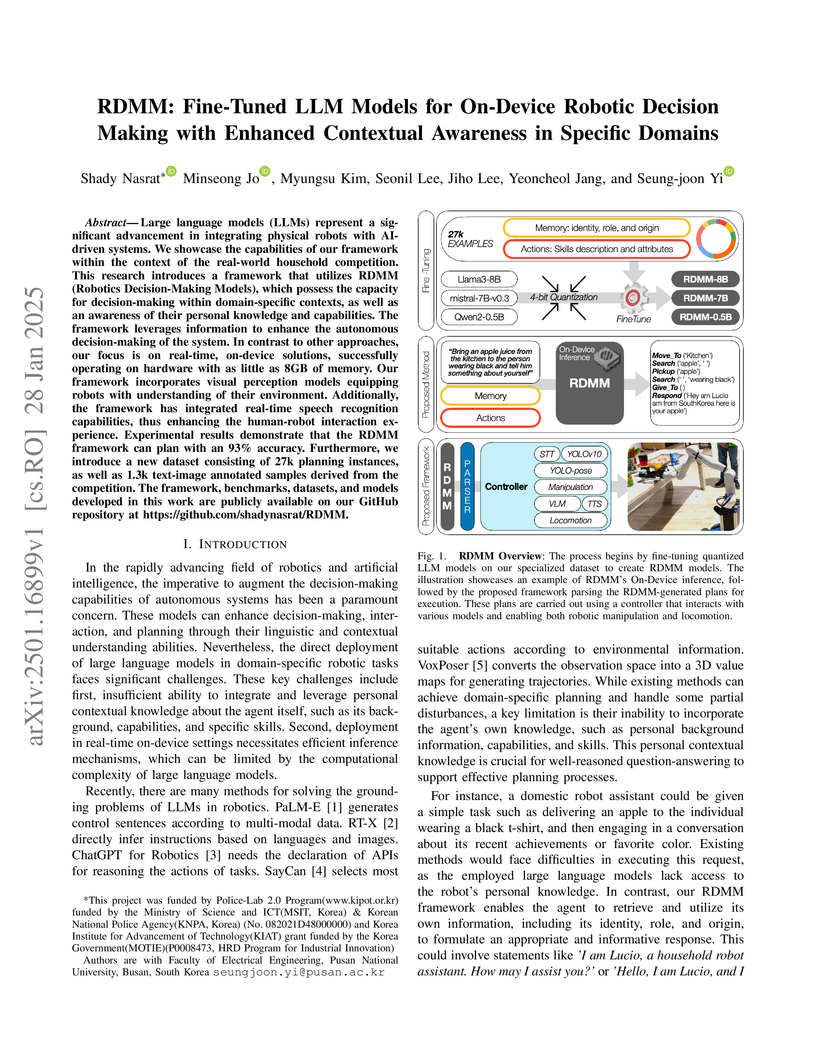

Large language models (LLMs) represent a significant advancement in integrating physical robots with AI-driven systems. We showcase the capabilities of our framework within the context of the real-world household competition. This research introduces a framework that utilizes RDMM (Robotics Decision-Making Models), which possess the capacity for decision-making within domain-specific contexts, as well as an awareness of their personal knowledge and capabilities. The framework leverages information to enhance the autonomous decision-making of the system. In contrast to other approaches, our focus is on real-time, on-device solutions, successfully operating on hardware with as little as 8GB of memory. Our framework incorporates visual perception models equipping robots with understanding of their environment. Additionally, the framework has integrated real-time speech recognition capabilities, thus enhancing the human-robot interaction experience. Experimental results demonstrate that the RDMM framework can plan with an 93\% accuracy. Furthermore, we introduce a new dataset consisting of 27k planning instances, as well as 1.3k text-image annotated samples derived from the competition. The framework, benchmarks, datasets, and models developed in this work are publicly available on our GitHub repository at this https URL.

A new COntrastive learning and Pseudo-Supervision (COPS) framework enhances depth completion by effectively fusing thermal images with LiDAR data. This approach demonstrates improved depth map accuracy in challenging environmental conditions, such as low-light and rain, compared to conventional RGB-based methods.

To achieve realistic immersion in landscape images, fluids such as water and

clouds need to move within the image while revealing new scenes from various

camera perspectives. Recently, a field called dynamic scene video has emerged,

which combines single image animation with 3D photography. These methods use

pseudo 3D space, implicitly represented with Layered Depth Images (LDIs). LDIs

separate a single image into depth-based layers, which enables elements like

water and clouds to move within the image while revealing new scenes from

different camera perspectives. However, as landscapes typically consist of

continuous elements, including fluids, the representation of a 3D space

separates a landscape image into discrete layers, and it can lead to diminished

depth perception and potential distortions depending on camera movement.

Furthermore, due to its implicit modeling of 3D space, the output may be

limited to videos in the 2D domain, potentially reducing their versatility. In

this paper, we propose representing a complete 3D space for dynamic scene video

by modeling explicit representations, specifically 4D Gaussians, from a single

image. The framework is focused on optimizing 3D Gaussians by generating

multi-view images from a single image and creating 3D motion to optimize 4D

Gaussians. The most important part of proposed framework is consistent 3D

motion estimation, which estimates common motion among multi-view images to

bring the motion in 3D space closer to actual motions. As far as we know, this

is the first attempt that considers animation while representing a complete 3D

space from a single landscape image. Our model demonstrates the ability to

provide realistic immersion in various landscape images through diverse

experiments and metrics. Extensive experimental results are

this https URL

There are no more papers matching your filters at the moment.