QuTechTU Delft

31 May 2024

Complex systems are not entirely decomposable, hence interdependences arise

at the interfaces in complex projects. When changes occur, significant risks

arise at these interfaces as it is hard to identify, manage and visualise the

systemic consequences of changes. Particularly problematic are the interfaces

in which there are multiple interdependencies, which occur where the boundaries

between design components, contracts and organisation coincide, such as between

design disciplines. In this paper, we propose an approach to digital twin-based

interface management, through an underpinning state-of-the-art review of the

existing technical literature and a small pilot to identify the characteristics

of future data-driven solutions. We set out an approach to digital twin-based

interface management and an agenda for research on advanced methodologies for

managing change in complex projects. This agenda includes the need to integrate

work on identifying systems interfaces, change propagation and visualisation,

and the potential to significantly extend the limitations of existing solutions

by using developments in the digital twin, such as linked data, semantic

enrichment, network analyses, natural language processing (NLP)-enhanced

ontology and machine learning.

20 Aug 2025

The Dynamic Risk-Aware MPPI (DRA-MPPI) framework enables mobile robots to navigate crowded environments by efficiently approximating joint collision probabilities from multi-modal human movement predictions. This method maintains high success rates (98-99%) and low collision probabilities while preserving operational efficiency in both simulations and real-robot deployments.

This survey provides a comprehensive and systematic review of Model-based Reinforcement Learning (MBRL), establishing a unified definition and a detailed taxonomy that categorizes diverse methodologies from classical approaches to modern deep learning integrations. It clarifies core concepts, identifies key challenges, and outlines future research directions, serving as a foundational resource for the field.

Robotics applications often rely on scene reconstructions to enable

downstream tasks. In this work, we tackle the challenge of actively building an

accurate map of an unknown scene using an RGB-D camera on a mobile platform. We

propose a hybrid map representation that combines a Gaussian splatting map with

a coarse voxel map, leveraging the strengths of both representations: the

high-fidelity scene reconstruction capabilities of Gaussian splatting and the

spatial modelling strengths of the voxel map. At the core of our framework is

an effective confidence modelling technique for the Gaussian splatting map to

identify under-reconstructed areas, while utilising spatial information from

the voxel map to target unexplored areas and assist in collision-free path

planning. By actively collecting scene information in under-reconstructed and

unexplored areas for map updates, our approach achieves superior Gaussian

splatting reconstruction results compared to state-of-the-art approaches.

Additionally, we demonstrate the real-world applicability of our framework

using an unmanned aerial vehicle.

Instruction-based image editing (IIE) has advanced rapidly with the success of diffusion models. However, existing efforts primarily focus on simple and explicit instructions to execute editing operations such as adding, deleting, moving, or swapping objects. They struggle to handle more complex implicit hypothetical instructions that require deeper reasoning to infer plausible visual changes and user intent. Additionally, current datasets provide limited support for training and evaluating reasoning-aware editing capabilities. Architecturally, these methods also lack mechanisms for fine-grained detail extraction that support such reasoning. To address these limitations, we propose Reason50K, a large-scale dataset specifically curated for training and evaluating hypothetical instruction reasoning image editing, along with ReasonBrain, a novel framework designed to reason over and execute implicit hypothetical instructions across diverse scenarios. Reason50K includes over 50K samples spanning four key reasoning scenarios: Physical, Temporal, Causal, and Story reasoning. ReasonBrain leverages Multimodal Large Language Models (MLLMs) for editing guidance generation and a diffusion model for image synthesis, incorporating a Fine-grained Reasoning Cue Extraction (FRCE) module to capture detailed visual and textual semantics essential for supporting instruction reasoning. To mitigate the semantic loss, we further introduce a Cross-Modal Enhancer (CME) that enables rich interactions between the fine-grained cues and MLLM-derived features. Extensive experiments demonstrate that ReasonBrain consistently outperforms state-of-the-art baselines on reasoning scenarios while exhibiting strong zero-shot generalization to conventional IIE tasks. Our dataset and code will be released publicly.

Researchers from L3S Research Center, TU Delft, and the University of Glasgow present a comprehensive survey classifying adaptive retrieval and ranking mechanisms that leverage test-time corpus feedback in Retrieval-Augmented Generation (RAG) systems. The work offers a structured overview of techniques that dynamically refine information retrieval during inference, moving beyond static retrieval to enhance RAG performance for complex tasks.

Researchers from Brno University of Technology and TU Delft developed a comprehensive pipeline for automatically generating 3D building models from 2D raster-wise floor plans, achieving up to 16 percentage points higher mean IoU compared to state-of-the-art methods on the CubiCasa benchmark.

Recently, autonomous agents built on large language models (LLMs) have

experienced significant development and are being deployed in real-world

applications. These agents can extend the base LLM's capabilities in multiple

ways. For example, a well-built agent using GPT-3.5-Turbo as its core can

outperform the more advanced GPT-4 model by leveraging external components.

More importantly, the usage of tools enables these systems to perform actions

in the real world, moving from merely generating text to actively interacting

with their environment. Given the agents' practical applications and their

ability to execute consequential actions, it is crucial to assess potential

vulnerabilities. Such autonomous systems can cause more severe damage than a

standalone language model if compromised. While some existing research has

explored harmful actions by LLM agents, our study approaches the vulnerability

from a different perspective. We introduce a new type of attack that causes

malfunctions by misleading the agent into executing repetitive or irrelevant

actions. We conduct comprehensive evaluations using various attack methods,

surfaces, and properties to pinpoint areas of susceptibility. Our experiments

reveal that these attacks can induce failure rates exceeding 80\% in multiple

scenarios. Through attacks on implemented and deployable agents in multi-agent

scenarios, we accentuate the realistic risks associated with these

vulnerabilities. To mitigate such attacks, we propose self-examination

detection methods. However, our findings indicate these attacks are difficult

to detect effectively using LLMs alone, highlighting the substantial risks

associated with this vulnerability.

Robots benefit from high-fidelity reconstructions of their environment, which should be geometrically accurate and photorealistic to support downstream tasks. While this can be achieved by building distance fields from range sensors and radiance fields from cameras, realising scalable incremental mapping of both fields consistently and at the same time with high quality is challenging. In this paper, we propose a novel map representation that unifies a continuous signed distance field and a Gaussian splatting radiance field within an elastic and compact point-based implicit neural map. By enforcing geometric consistency between these fields, we achieve mutual improvements by exploiting both modalities. We present a novel LiDAR-visual SLAM system called PINGS using the proposed map representation and evaluate it on several challenging large-scale datasets. Experimental results demonstrate that PINGS can incrementally build globally consistent distance and radiance fields encoded with a compact set of neural points. Compared to state-of-the-art methods, PINGS achieves superior photometric and geometric rendering at novel views by constraining the radiance field with the distance field. Furthermore, by utilizing dense photometric cues and multi-view consistency from the radiance field, PINGS produces more accurate distance fields, leading to improved odometry estimation and mesh reconstruction. We also provide an open-source implementation of PING at: this https URL.

Privacy and interpretability are two important ingredients for achieving trustworthy machine learning. We study the interplay of these two aspects in graph machine learning through graph reconstruction attacks. The goal of the adversary here is to reconstruct the graph structure of the training data given access to model explanations. Based on the different kinds of auxiliary information available to the adversary, we propose several graph reconstruction attacks. We show that additional knowledge of post-hoc feature explanations substantially increases the success rate of these attacks. Further, we investigate in detail the differences between attack performance with respect to three different classes of explanation methods for graph neural networks: gradient-based, perturbation-based, and surrogate model-based methods. While gradient-based explanations reveal the most in terms of the graph structure, we find that these explanations do not always score high in utility. For the other two classes of explanations, privacy leakage increases with an increase in explanation utility. Finally, we propose a defense based on a randomized response mechanism for releasing the explanations, which substantially reduces the attack success rate. Our code is available at this https URL

Deep Neural Networks (DNNs) have shown great promise in various domains. However, vulnerabilities associated with DNN training, such as backdoor attacks, are a significant concern. These attacks involve the subtle insertion of triggers during model training, allowing for manipulated predictions. More recently, DNNs used with tabular data have gained increasing attention due to the rise of transformer models. Our research presents a comprehensive analysis of backdoor attacks on tabular data using DNNs, mainly focusing on transformers. We propose a novel approach for trigger construction: in-bounds attack, which provides excellent attack performance while maintaining stealthiness. Through systematic experimentation across benchmark datasets, we uncover that transformer-based DNNs for tabular data are highly susceptible to backdoor attacks, even with minimal feature value alterations. We also verify that these attacks can be generalized to other models, like XGBoost and DeepFM. Our results demonstrate up to 100% attack success rate with negligible clean accuracy drop. Furthermore, we evaluate several defenses against these attacks, identifying Spectral Signatures as the most effective. Still, our findings highlight the need to develop tabular data-specific countermeasures to defend against backdoor attacks.

21 Jan 2025

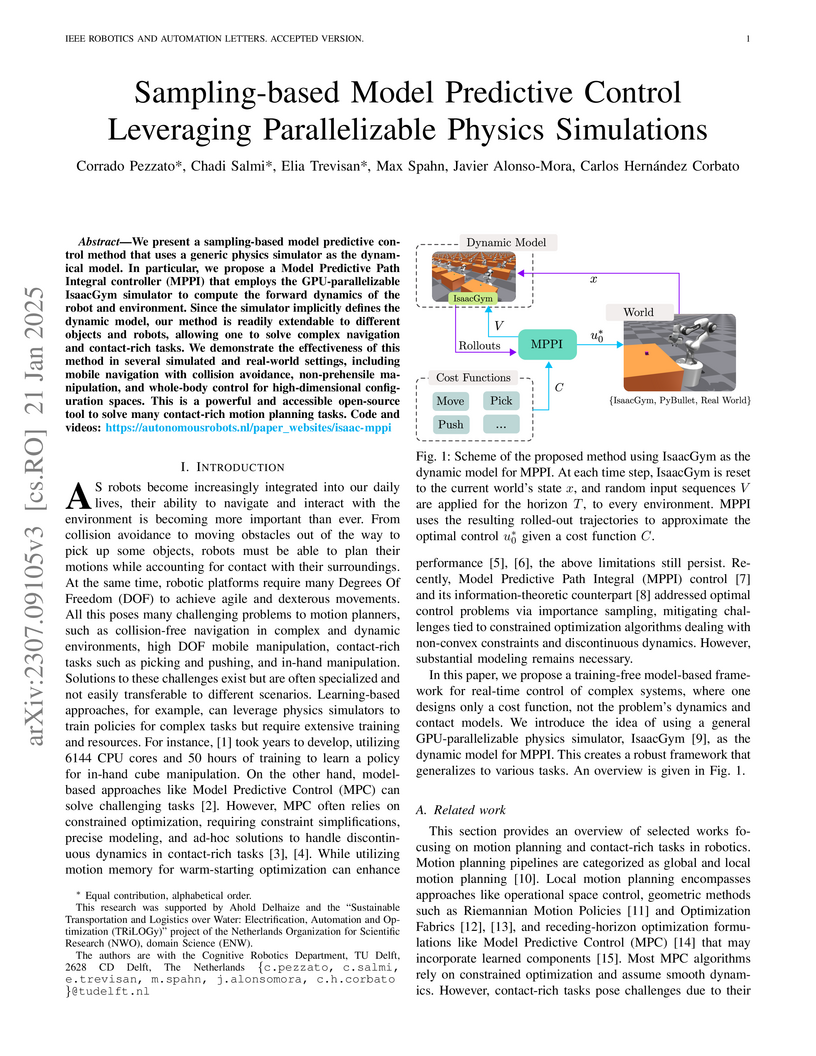

We present a method for sampling-based model predictive control that makes

use of a generic physics simulator as the dynamical model. In particular, we

propose a Model Predictive Path Integral controller (MPPI), that uses the

GPU-parallelizable IsaacGym simulator to compute the forward dynamics of a

problem. By doing so, we eliminate the need for explicit encoding of robot

dynamics and contacts with objects for MPPI. Since no explicit dynamic modeling

is required, our method is easily extendable to different objects and robots

and allows one to solve complex navigation and contact-rich tasks. We

demonstrate the effectiveness of this method in several simulated and

real-world settings, among which mobile navigation with collision avoidance,

non-prehensile manipulation, and whole-body control for high-dimensional

configuration spaces. This method is a powerful and accessible open-source tool

to solve a large variety of contact-rich motion planning tasks.

09 Sep 2025

Test-time scaling (TTS) has emerged as a new frontier for scaling the performance of Large Language Models. In test-time scaling, by using more computational resources during inference, LLMs can improve their reasoning process and task performance. Several approaches have emerged for TTS such as distilling reasoning traces from another model or exploring the vast decoding search space by employing a verifier. The verifiers serve as reward models that help score the candidate outputs from the decoding process to diligently explore the vast solution space and select the best outcome. This paradigm commonly termed has emerged as a superior approach owing to parameter free scaling at inference time and high performance gains. The verifiers could be prompt-based, fine-tuned as a discriminative or generative model to verify process paths, outcomes or both. Despite their widespread adoption, there is no detailed collection, clear categorization and discussion of diverse verification approaches and their training mechanisms. In this survey, we cover the diverse approaches in the literature and present a unified view of verifier training, types and their utility in test-time scaling. Our repository can be found at this https URL.

Much attention has been devoted to the use of machine learning to approximate physical concepts. Yet, due to challenges in interpretability of machine learning techniques, the question of what physics machine learning models are able to learn remains open. Here we bridge the concept a physical quantity and its machine learning approximation in the context of the original application of neural networks in physics: topological phase classification. We construct a hybrid tensor-neural network object that exactly expresses real space topological invariant and rigorously assess its trainability and generalization. Specifically, we benchmark the accuracy and trainability of a tensor-neural network to multiple types of neural networks, thus exemplifying the differences in trainability and representational power. Our work highlights the challenges in learning topological invariants and constitutes a stepping stone towards more accurate and better generalizable machine learning representations in condensed matter physics.

01 Jun 2025

Recently, 3D Gaussian splatting-based RGB-D SLAM displays remarkable

performance of high-fidelity 3D reconstruction. However, the lack of depth

rendering consistency and efficient loop closure limits the quality of its

geometric reconstructions and its ability to perform globally consistent

mapping online. In this paper, we present 2DGS-SLAM, an RGB-D SLAM system using

2D Gaussian splatting as the map representation. By leveraging the

depth-consistent rendering property of the 2D variant, we propose an accurate

camera pose optimization method and achieve geometrically accurate 3D

reconstruction. In addition, we implement efficient loop detection and camera

relocalization by leveraging MASt3R, a 3D foundation model, and achieve

efficient map updates by maintaining a local active map. Experiments show that

our 2DGS-SLAM approach achieves superior tracking accuracy, higher surface

reconstruction quality, and more consistent global map reconstruction compared

to existing rendering-based SLAM methods, while maintaining high-fidelity image

rendering and improved computational efficiency.

Researchers at the University of Bonn and TU Delft developed a monocular visual SLAM system that accurately estimates camera poses and provides scale-consistent dense 3D reconstruction in dynamic settings. The method integrates a deep learning model for moving object segmentation and depth estimation with a geometric bundle adjustment framework, achieving superior tracking and depth accuracy on challenging datasets.

05 Aug 2022

The standard dynamical approach to quantum thermodynamics is based on Markovian master equations describing the thermalization of a system weakly coupled to a large environment, and on tools such as entropy production relations. Here we develop a new framework overcoming the limitations that the current dynamical and information theory approaches encounter when applied to this setting. More precisely, we introduce the notion of continuous thermomajorization, and employ it to obtain necessary and sufficient conditions for the existence of a Markovian thermal process transforming between given initial and final energy distributions of the system. These lead to a complete set of generalized entropy production inequalities including the standard one as a special case. Importantly, these conditions can be reduced to a finitely verifiable set of constraints governing non-equilibrium transformations under master equations. What is more, the framework is also constructive, i.e., it returns explicit protocols realizing any allowed transformation. These protocols use as building blocks elementary thermalizations, which we prove to be universal controls. Finally, we also present an algorithm constructing the full set of energy distributions achievable from a given initial state via Markovian thermal processes and provide a Mathematica implementation solving d=6 on a laptop computer in minutes.

The SELF-multi-RAG framework enhances conversational question answering by adaptively deciding when to retrieve external knowledge and generating comprehensive conversational summaries for retrieval. It achieves approximately 13% improvement in human-evaluated response quality compared to single-turn baselines and maintains consistent performance across extended multi-turn dialogues.

Fact-checking real-world claims, particularly numerical claims, is inherently complex that require multistep reasoning and numerical reasoning for verifying diverse aspects of the claim. Although large language models (LLMs) including reasoning models have made tremendous advances, they still fall short on fact-checking real-world claims that require a combination of compositional and numerical reasoning. They are unable to understand nuance of numerical aspects, and are also susceptible to the reasoning drift issue, where the model is unable to contextualize diverse information resulting in misinterpretation and backtracking of reasoning process. In this work, we systematically explore scaling test-time compute (TTS) for LLMs on the task of fact-checking complex numerical claims, which entails eliciting multiple reasoning paths from an LLM. We train a verifier model (VERIFIERFC) to navigate this space of possible reasoning paths and select one that could lead to the correct verdict. We observe that TTS helps mitigate the reasoning drift issue, leading to significant performance gains for fact-checking numerical claims. To improve compute efficiency in TTS, we introduce an adaptive mechanism that performs TTS selectively based on the perceived complexity of the claim. This approach achieves 1.8x higher efficiency than standard TTS, while delivering a notable 18.8% performance improvement over single-shot claim verification methods. Our code and data can be found at this https URL

05 Oct 2025

Researchers from TU Delft developed the PROBE framework, the first empirically tested tool to quantify the breadth and depth of "Pre-Decision Reflection" for significant life choices, revealing substantial individual variability in thought patterns and a discrepancy between self-reported and objectively measured reflection quality. The framework identified "Belief" as the most frequent reflective category, while "Alternative Perspective" and "Insight" were least engaged, with 80% of participants elaborating less than half of their thoughts.

There are no more papers matching your filters at the moment.