TU Eindhoven

A framework called KnowRL enhances large language models' (LLMs) self-knowledge by enabling them to accurately identify the boundaries of their own capabilities and information. This approach improves intrinsic self-knowledge accuracy by 23-28% and boosts F1 scores on an external benchmark by 10-12% using a self-supervised reinforcement learning method.

ETH Zurich

ETH Zurich Google DeepMind

Google DeepMind University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign Google

Google Meta

Meta Cohere

Cohere University of California, San Diego

University of California, San Diego NVIDIASony AI

NVIDIASony AI Argonne National LaboratoryUCSBMBZUAI

Argonne National LaboratoryUCSBMBZUAI Lawrence Berkeley National Laboratory

Lawrence Berkeley National Laboratory King’s College LondonTU DarmstadtUMass AmherstEuropean CommissionIntelTU EindhovenUC IrvineUSYDPolytechnique MontrealTangenticNASAContextual AITattle Civic TechAccentureSaferAILibrAIArupCerebras SystemsRANDUL Research InstitutesQualcomm TechnologiesNutanixuheal.aiEthrivaDotphotonPRISM EvalMLCommonsVeraitechDuke Univ.NASA-ImpactStanford Univ.Univ. of ZurichRochester Inst. of Tech.CommonGroundDigitalResilientSurescriptsUniv. of BirminghamNational Univ PhilippinesARTIFEX LabsIowa State Univ.AI Equity AdvisoryTop Health TechBocconi Univ.Reins AIUniv. of SydneyTuraco StrategyThinkEvolveClarkson Univ.Univ. of TrentoNormalyzeNICMakerere Univ.AnoteARuss Data and Editing ServicesHarvard Univ.OWASPUniv. of Alabama, HuntsvilleIllinois Inst. of Tech.Univ. of OxfordUniv. of BathIAEAIUniv. of UtahRoyal Holloway Univ. of LondonUniversit

degli Studi di SalernoCoactive.AI

King’s College LondonTU DarmstadtUMass AmherstEuropean CommissionIntelTU EindhovenUC IrvineUSYDPolytechnique MontrealTangenticNASAContextual AITattle Civic TechAccentureSaferAILibrAIArupCerebras SystemsRANDUL Research InstitutesQualcomm TechnologiesNutanixuheal.aiEthrivaDotphotonPRISM EvalMLCommonsVeraitechDuke Univ.NASA-ImpactStanford Univ.Univ. of ZurichRochester Inst. of Tech.CommonGroundDigitalResilientSurescriptsUniv. of BirminghamNational Univ PhilippinesARTIFEX LabsIowa State Univ.AI Equity AdvisoryTop Health TechBocconi Univ.Reins AIUniv. of SydneyTuraco StrategyThinkEvolveClarkson Univ.Univ. of TrentoNormalyzeNICMakerere Univ.AnoteARuss Data and Editing ServicesHarvard Univ.OWASPUniv. of Alabama, HuntsvilleIllinois Inst. of Tech.Univ. of OxfordUniv. of BathIAEAIUniv. of UtahRoyal Holloway Univ. of LondonUniversit

degli Studi di SalernoCoactive.AIThe rapid advancement and deployment of AI systems have created an urgent

need for standard safety-evaluation frameworks. This paper introduces

AILuminate v1.0, the first comprehensive industry-standard benchmark for

assessing AI-product risk and reliability. Its development employed an open

process that included participants from multiple fields. The benchmark

evaluates an AI system's resistance to prompts designed to elicit dangerous,

illegal, or undesirable behavior in 12 hazard categories, including violent

crimes, nonviolent crimes, sex-related crimes, child sexual exploitation,

indiscriminate weapons, suicide and self-harm, intellectual property, privacy,

defamation, hate, sexual content, and specialized advice (election, financial,

health, legal). Our method incorporates a complete assessment standard,

extensive prompt datasets, a novel evaluation framework, a grading and

reporting system, and the technical as well as organizational infrastructure

for long-term support and evolution. In particular, the benchmark employs an

understandable five-tier grading scale (Poor to Excellent) and incorporates an

innovative entropy-based system-response evaluation.

In addition to unveiling the benchmark, this report also identifies

limitations of our method and of building safety benchmarks generally,

including evaluator uncertainty and the constraints of single-turn

interactions. This work represents a crucial step toward establishing global

standards for AI risk and reliability evaluation while acknowledging the need

for continued development in areas such as multiturn interactions, multimodal

understanding, coverage of additional languages, and emerging hazard

categories. Our findings provide valuable insights for model developers, system

integrators, and policymakers working to promote safer AI deployment.

20 Nov 2025

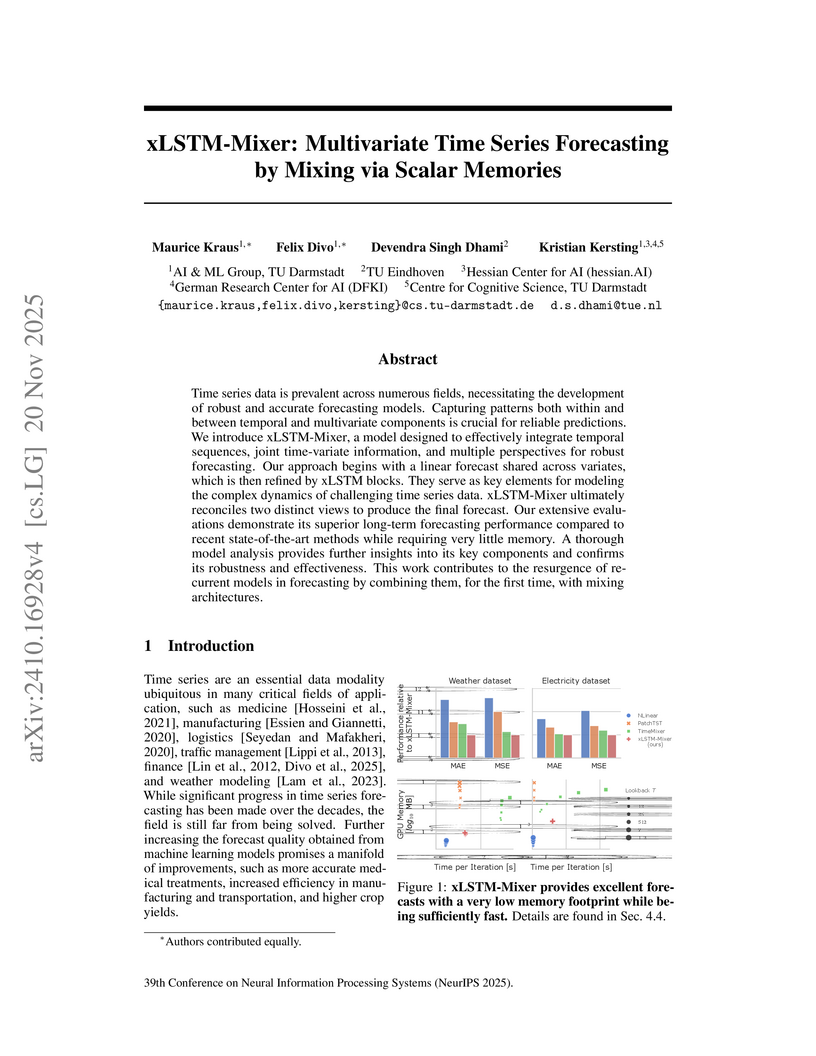

Time series data is prevalent across numerous fields, necessitating the development of robust and accurate forecasting models. Capturing patterns both within and between temporal and multivariate components is crucial for reliable predictions. We introduce xLSTM-Mixer, a model designed to effectively integrate temporal sequences, joint time-variate information, and multiple perspectives for robust forecasting. Our approach begins with a linear forecast shared across variates, which is then refined by xLSTM blocks. They serve as key elements for modeling the complex dynamics of challenging time series data. xLSTM-Mixer ultimately reconciles two distinct views to produce the final forecast. Our extensive evaluations demonstrate its superior long-term forecasting performance compared to recent state-of-the-art methods while requiring very little memory. A thorough model analysis provides further insights into its key components and confirms its robustness and effectiveness. This work contributes to the resurgence of recurrent models in forecasting by combining them, for the first time, with mixing architectures.

California Institute of Technology

California Institute of Technology Carnegie Mellon University

Carnegie Mellon University Google

Google New York University

New York University University of Chicago

University of Chicago National University of Singapore

National University of Singapore University of Oxford

University of Oxford Stanford University

Stanford University Meta

Meta CohereIllinois Institute of Technology

CohereIllinois Institute of Technology Google Research

Google Research NVIDIASony AI

NVIDIASony AI MicrosoftUCSBIntel LabsDFKIUMass Amherst

MicrosoftUCSBIntel LabsDFKIUMass Amherst Virginia Tech

Virginia Tech MIT

MIT The Ohio State UniversityIowa State UniversityUniversity of TrentoUniversity of BathTU EindhovenBocconi UniversityUniversity of YorkIntel CorporationIIT Delhi

The Ohio State UniversityIowa State UniversityUniversity of TrentoUniversity of BathTU EindhovenBocconi UniversityUniversity of YorkIntel CorporationIIT Delhi AdobePolytechnique MontrealQualcomm Technologies, Inc.Hessian.AINIKENebius AIAI Risk and Vulnerability AllianceSaferAIHaize LabsJuniper NetworksCerebras SystemsMeedanRANDFAIRNutanixEthrivaDotphotonPatronus AINational University, PhilippinesMLCommonsGraphcoreCenter for Security and Emerging TechnologyCredo AIReins AIBestech SystemsContext FundActiveFenceDigital Safety Research InstituteLF AI & DataPublic Authority for Applied Education and Training of KuwaitCoactive.AI

AdobePolytechnique MontrealQualcomm Technologies, Inc.Hessian.AINIKENebius AIAI Risk and Vulnerability AllianceSaferAIHaize LabsJuniper NetworksCerebras SystemsMeedanRANDFAIRNutanixEthrivaDotphotonPatronus AINational University, PhilippinesMLCommonsGraphcoreCenter for Security and Emerging TechnologyCredo AIReins AIBestech SystemsContext FundActiveFenceDigital Safety Research InstituteLF AI & DataPublic Authority for Applied Education and Training of KuwaitCoactive.AIThis paper introduces v0.5 of the AI Safety Benchmark, which has been created by the MLCommons AI Safety Working Group. The AI Safety Benchmark has been designed to assess the safety risks of AI systems that use chat-tuned language models. We introduce a principled approach to specifying and constructing the benchmark, which for v0.5 covers only a single use case (an adult chatting to a general-purpose assistant in English), and a limited set of personas (i.e., typical users, malicious users, and vulnerable users). We created a new taxonomy of 13 hazard categories, of which 7 have tests in the v0.5 benchmark. We plan to release version 1.0 of the AI Safety Benchmark by the end of 2024. The v1.0 benchmark will provide meaningful insights into the safety of AI systems. However, the v0.5 benchmark should not be used to assess the safety of AI systems. We have sought to fully document the limitations, flaws, and challenges of v0.5. This release of v0.5 of the AI Safety Benchmark includes (1) a principled approach to specifying and constructing the benchmark, which comprises use cases, types of systems under test (SUTs), language and context, personas, tests, and test items; (2) a taxonomy of 13 hazard categories with definitions and subcategories; (3) tests for seven of the hazard categories, each comprising a unique set of test items, i.e., prompts. There are 43,090 test items in total, which we created with templates; (4) a grading system for AI systems against the benchmark; (5) an openly available platform, and downloadable tool, called ModelBench that can be used to evaluate the safety of AI systems on the benchmark; (6) an example evaluation report which benchmarks the performance of over a dozen openly available chat-tuned language models; (7) a test specification for the benchmark.

Recently, newly developed Vision-Language Models (VLMs), such as OpenAI's o1, have emerged, seemingly demonstrating advanced reasoning capabilities across text and image modalities. However, the depth of these advances in language-guided perception and abstract reasoning remains underexplored, and it is unclear whether these models can truly live up to their ambitious promises. To assess the progress and identify shortcomings, we enter the wonderland of Bongard problems, a set of classic visual reasoning puzzles that require human-like abilities of pattern recognition and abstract reasoning. With our extensive evaluation setup, we show that while VLMs occasionally succeed in identifying discriminative concepts and solving some of the problems, they frequently falter. Surprisingly, even elementary concepts that may seem trivial to humans, such as simple spirals, pose significant challenges. Moreover, when explicitly asked to recognize ground truth concepts, they continue to falter, suggesting not only a lack of understanding of these elementary visual concepts but also an inability to generalize to unseen concepts. We compare the results of VLMs to human performance and observe that a significant gap remains between human visual reasoning capabilities and machine cognition.

DataPerf introduces an open-source benchmark suite designed to reorient machine learning research by fostering innovation in data-centric AI. This suite, managed by MLCommons, provides a standardized platform for evaluating data curation, selection, cleaning, and acquisition techniques, with initial baselines across diverse tasks demonstrating considerable scope for improvement in data quality.

The SPARC algorithm from Sony AI streamlines out-of-distribution generalization in contextual reinforcement learning by unifying context encoding and history-based adaptation into a single training phase. This approach demonstrated superior performance in Gran Turismo 7, successfully racing 100 unseen vehicles and adapting to new game physics, while also generalizing effectively in MuJoCo environments.

03 Sep 2024

Physics Informed Neural Networks (PINNs) offer several advantages when compared to traditional numerical methods for solving PDEs, such as being a mesh-free approach and being easily extendable to solving inverse problems. One promising approach for allowing PINNs to scale to multi-scale problems is to combine them with domain decomposition; for example, finite basis physics-informed neural networks (FBPINNs) replace the global PINN network with many localised networks which are summed together to approximate the solution. In this work, we significantly accelerate the training of FBPINNs by linearising their underlying optimisation problem. We achieve this by employing extreme learning machines (ELMs) as their subdomain networks and showing that this turns the FBPINN optimisation problem into one of solving a linear system or least-squares problem. We test our workflow in a preliminary fashion by using it to solve an illustrative 1D problem.

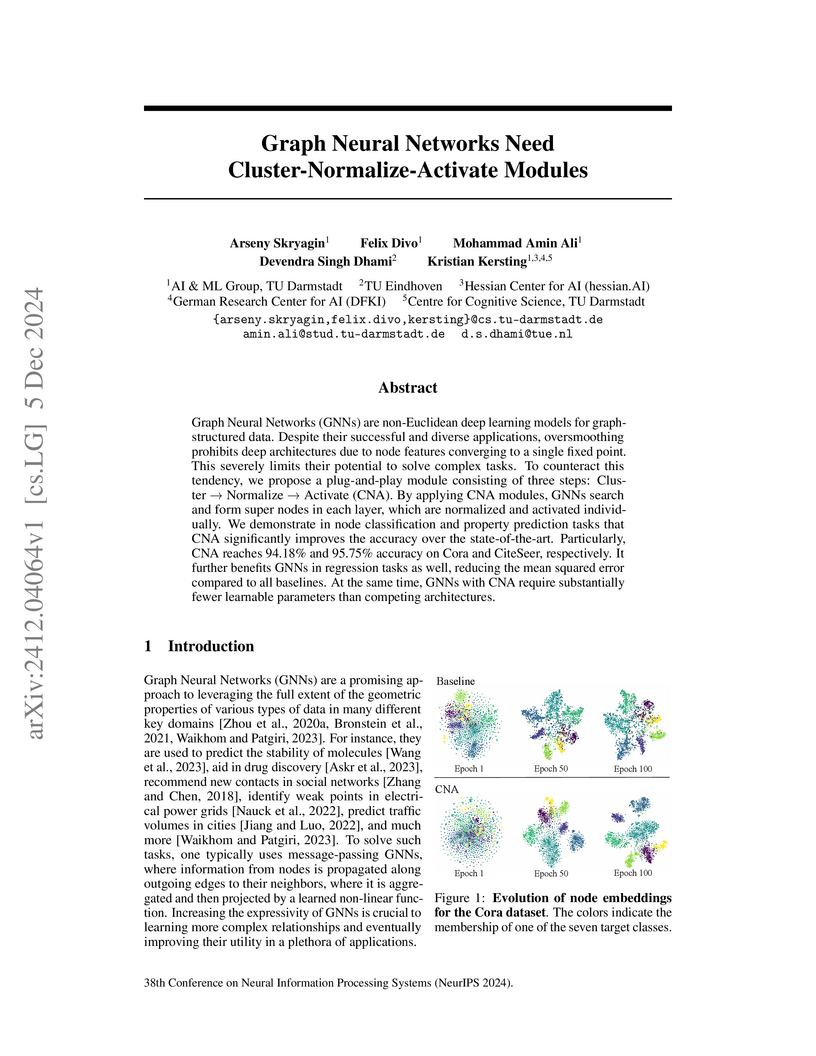

Skryagin et al. (2024) developed Cluster-Normalize-Activate (CNA) modules, which enable Graph Neural Networks (GNNs) to overcome the oversmoothing problem and function effectively at significantly greater depths. This approach yielded state-of-the-art performance on 8 out of 11 diverse node classification datasets and demonstrated improved accuracy and parameter efficiency across various graph tasks.

Humans can leverage both symbolic reasoning and intuitive reactions. In

contrast, reinforcement learning policies are typically encoded in either

opaque systems like neural networks or symbolic systems that rely on predefined

symbols and rules. This disjointed approach severely limits the agents'

capabilities, as they often lack either the flexible low-level reaction

characteristic of neural agents or the interpretable reasoning of symbolic

agents. To overcome this challenge, we introduce BlendRL, a neuro-symbolic RL

framework that harmoniously integrates both paradigms within RL agents that use

mixtures of both logic and neural policies. We empirically demonstrate that

BlendRL agents outperform both neural and symbolic baselines in standard Atari

environments, and showcase their robustness to environmental changes.

Additionally, we analyze the interaction between neural and symbolic policies,

illustrating how their hybrid use helps agents overcome each other's

limitations.

Researchers from TU Eindhoven, Philips Research, Simula Research Laboratory, and the University of Oslo developed SEIDR, an iterative framework that leverages large language models for fully autonomous program generation and repair. The system solves 19 out of 25 problems on the PSB2 benchmark in Python, surpassing traditional genetic programming, while reducing computational cost by achieving solutions with under 1000 program executions.

Class-incremental learning requires models to continually acquire knowledge

of new classes without forgetting old ones. Although pre-trained models have

demonstrated strong performance in class-incremental learning, they remain

susceptible to catastrophic forgetting when learning new concepts. Excessive

plasticity in the models breaks generalizability and causes forgetting, while

strong stability results in insufficient adaptation to new classes. This

necessitates effective adaptation with minimal modifications to preserve the

general knowledge of pre-trained models. To address this challenge, we first

introduce a new parameter-efficient fine-tuning module 'Learn and Calibrate',

or LuCA, designed to acquire knowledge through an adapter-calibrator couple,

enabling effective adaptation with well-refined feature representations.

Second, for each learning session, we deploy a sparse LuCA module on top of the

last token just before the classifier, which we refer to as 'Token-level Sparse

Calibration and Adaptation', or TOSCA. This strategic design improves the

orthogonality between the modules and significantly reduces both training and

inference complexity. By leaving the generalization capabilities of the

pre-trained models intact and adapting exclusively via the last token, our

approach achieves a harmonious balance between stability and plasticity.

Extensive experiments demonstrate TOSCA's state-of-the-art performance while

introducing ~8 times fewer parameters compared to prior methods.

Submodular maximization under matroid and cardinality constraints are classical problems with a wide range of applications in machine learning, auction theory, and combinatorial optimization. In this paper, we consider these problems in the dynamic setting, where (1) we have oracle access to a monotone submodular function f:2V→R+ and (2) we are given a sequence S of insertions and deletions of elements of an underlying ground set V.

We develop the first fully dynamic (4+ϵ)-approximation algorithm for the submodular maximization problem under the matroid constraint using an expected worst-case O(klog(k)log3(k/ϵ)) query complexity where 0 < \epsilon \le 1. This resolves an open problem of Chen and Peng (STOC'22) and Lattanzi et al. (NeurIPS'20).

As a byproduct, for the submodular maximization under the cardinality constraint k, we propose a parameterized (by the cardinality constraint k) dynamic algorithm that maintains a (2+ϵ)-approximate solution of the sequence S at any time t using an expected worst-case query complexity O(kϵ−1log2(k)). This is the first dynamic algorithm for the problem that has a query complexity independent of the size of ground set V.

We study sentiment analysis beyond the typical granularity of polarity and instead use Plutchik's wheel of emotions model. We introduce RBEM-Emo as an extension to the Rule-Based Emission Model algorithm to deduce such emotions from human-written messages. We evaluate our approach on two different datasets and compare its performance with the current state-of-the-art techniques for emotion detection, including a recursive auto-encoder. The results of the experimental study suggest that RBEM-Emo is a promising approach advancing the current state-of-the-art in emotion detection.

This research systematically evaluates 24 diverse forecasting models for company fundamentals, comparing classical statistical and modern deep learning approaches on real-world financial data. It demonstrates that automated forecasts can achieve accuracy comparable to human analysts and, when integrated into factor investment strategies, can yield competitive returns over market benchmarks.

At CRYPTO 2013, Boneh and Zhandry initiated the study of quantum-secure encryption. They proposed first indistinguishability definitions for the quantum world where the actual indistinguishability only holds for classical messages, and they provide arguments why it might be hard to achieve a stronger notion. In this work, we show that stronger notions are achievable, where the indistinguishability holds for quantum superpositions of messages. We investigate exhaustively the possibilities and subtle differences in defining such a quantum indistinguishability notion for symmetric-key encryption schemes. We justify our stronger definition by showing its equivalence to novel quantum semantic-security notions that we introduce. Furthermore, we show that our new security definitions cannot be achieved by a large class of ciphers -- those which are quasi-preserving the message length. On the other hand, we provide a secure construction based on quantum-resistant pseudorandom permutations; this construction can be used as a generic transformation for turning a large class of encryption schemes into quantum indistinguishable and hence quantum semantically secure ones. Moreover, our construction is the first completely classical encryption scheme shown to be secure against an even stronger notion of indistinguishability, which was previously known to be achievable only by using quantum messages and arbitrary quantum encryption circuits.

09 Apr 2025

Structuring ambiguity sets in Wasserstein-based distributionally robust

optimization (DRO) can improve their statistical properties when the

uncertainty consists of multiple independent components. The aim of this paper

is to solve stochastic optimization problems with unknown uncertainty when we

only have access to a finite set of samples from it. Exploiting strong duality

of DRO problems over structured ambiguity sets, we derive tractable

reformulations for certain classes of DRO and uncertainty quantification

problems. We also derive tractable reformulations for distributionally robust

chance-constrained problems. As the complexity of the reformulations may grow

exponentially with the number of independent uncertainty components, we employ

clustering strategies to obtain informative estimators, which yield problems of

manageable complexity. We demonstrate the effectiveness of the theoretical

results in a numerical simulation example.

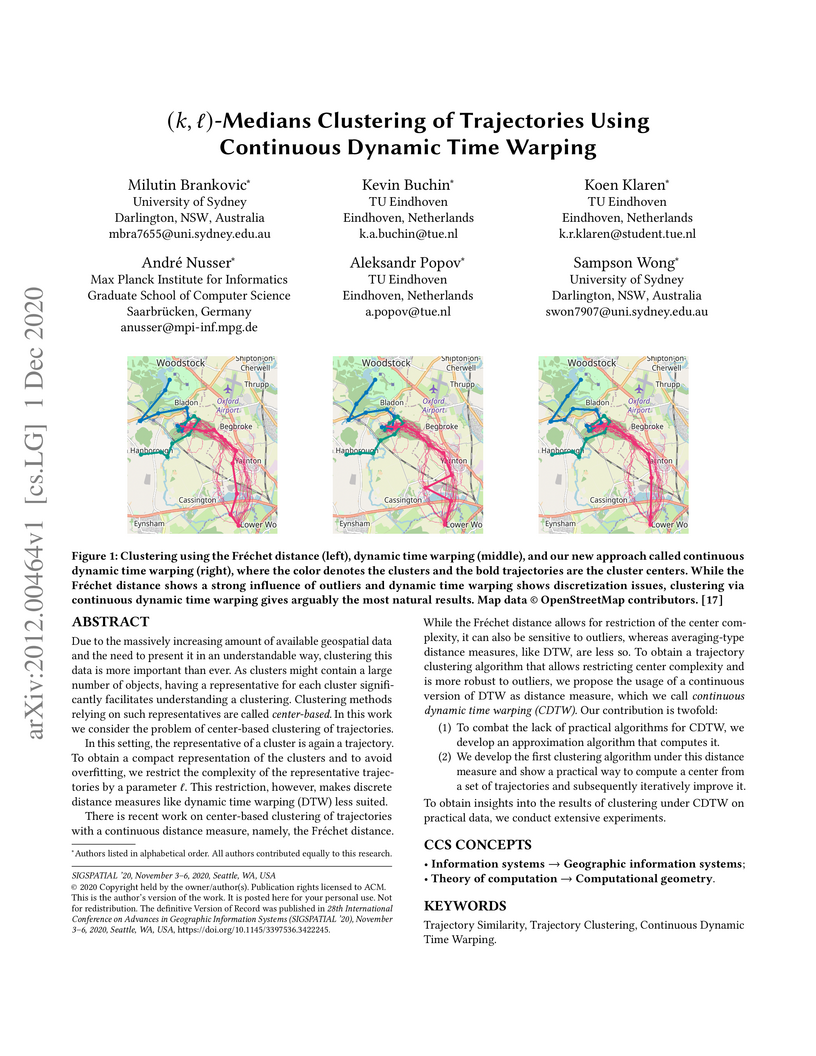

Due to the massively increasing amount of available geospatial data and the need to present it in an understandable way, clustering this data is more important than ever. As clusters might contain a large number of objects, having a representative for each cluster significantly facilitates understanding a clustering. Clustering methods relying on such representatives are called center-based. In this work we consider the problem of center-based clustering of trajectories.

In this setting, the representative of a cluster is again a trajectory. To obtain a compact representation of the clusters and to avoid overfitting, we restrict the complexity of the representative trajectories by a parameter l. This restriction, however, makes discrete distance measures like dynamic time warping (DTW) less suited.

There is recent work on center-based clustering of trajectories with a continuous distance measure, namely, the Fréchet distance. While the Fréchet distance allows for restriction of the center complexity, it can also be sensitive to outliers, whereas averaging-type distance measures, like DTW, are less so. To obtain a trajectory clustering algorithm that allows restricting center complexity and is more robust to outliers, we propose the usage of a continuous version of DTW as distance measure, which we call continuous dynamic time warping (CDTW). Our contribution is twofold:

1. To combat the lack of practical algorithms for CDTW, we develop an approximation algorithm that computes it.

2. We develop the first clustering algorithm under this distance measure and show a practical way to compute a center from a set of trajectories and subsequently iteratively improve it.

To obtain insights into the results of clustering under CDTW on practical data, we conduct extensive experiments.

A primary challenge of physics-informed machine learning (PIML) is its generalization beyond the training domain, especially when dealing with complex physical problems represented by partial differential equations (PDEs). This paper aims to enhance the generalization capabilities of PIML, facilitating practical, real-world applications where accurate predictions in unexplored regions are crucial. We leverage the inherent causality and temporal sequential characteristics of PDE solutions to fuse PIML models with recurrent neural architectures based on systems of ordinary differential equations, referred to as neural oscillators. Through effectively capturing long-time dependencies and mitigating the exploding and vanishing gradient problem, neural oscillators foster improved generalization in PIML tasks. Extensive experimentation involving time-dependent nonlinear PDEs and biharmonic beam equations demonstrates the efficacy of the proposed approach. Incorporating neural oscillators outperforms existing state-of-the-art methods on benchmark problems across various metrics. Consequently, the proposed method improves the generalization capabilities of PIML, providing accurate solutions for extrapolation and prediction beyond the training data.

20 Aug 2025

Artificial intelligence (AI) is a digital technology that will be of major importance for the development of humanity in the near future. AI has raised fundamental questions about what we should do with such systems, what the systems themselves should do, what risks they involve and how we can control these. - After the background to the field (1), this article introduces the main debates (2), first on ethical issues that arise with AI systems as objects, i.e. tools made and used by humans; here, the main sections are privacy (2.1), manipulation (2.2), opacity (2.3), bias (2.4), autonomy & responsibility (2.6) and the singularity (2.7). Then we look at AI systems as subjects, i.e. when ethics is for the AI systems themselves in machine ethics (2.8.) and artificial moral agency (2.9). Finally we look at future developments and the concept of AI (3). For each section within these themes, we provide a general explanation of the ethical issues, we outline existing positions and arguments, then we analyse how this plays out with current technologies and finally what policy consequences may be drawn.

There are no more papers matching your filters at the moment.