Tongji University

This survey paper from researchers at Tongji University and Fudan University offers a comprehensive, systematic synthesis of Retrieval-Augmented Generation (RAG) for Large Language Models. It structures the field by delineating three evolutionary paradigms—Naive, Advanced, and Modular RAG—and details advancements across retrieval, generation, and augmentation components, while also providing a thorough framework for evaluating RAG systems.

16 Jun 2025

G-Memory introduces a hierarchical, graph-based memory system designed for Large Language Model-based Multi-Agent Systems, enabling them to learn from complex collaborative histories. The system consistently improves MAS performance, achieving gains up to 20.89% in embodied action and 10.12% in knowledge question-answering tasks, while maintaining resource efficiency.

23 Sep 2025

The ReSearch framework enables Large Language Models to integrate multi-step reasoning with external search, learning interactively via reinforcement learning without supervised intermediate steps. It yields substantial performance gains on complex multi-hop question answering benchmarks and reveals emergent self-correction capabilities.

MemOS, a memory operating system for AI systems, redefines memory as a first-class system resource to address current Large Language Model limitations in long-context reasoning, continuous personalization, and knowledge evolution. This framework unifies heterogeneous memory types (plaintext, activation, parameter) using a standardized MemCube unit, achieving superior performance on benchmarks like LoCoMo and PreFEval, and demonstrating robust, low-latency memory operations.

24 Oct 2025

Researchers introduced LIBERO-Plus, a diagnostic benchmark for vision-language-action (VLA) models, revealing that current models exhibit substantial fragility to environmental perturbations and frequently ignore linguistic instructions. Fine-tuning with a generalized dataset significantly enhances their robustness.

20 Oct 2025

This survey establishes "Agentic Science" as a paradigm for autonomous scientific discovery, offering a unified framework that integrates agent capabilities, scientific workflows, and domain-specific applications across natural sciences. It charts the evolution of AI from computational tools to autonomous research partners, highlighting over 20 validated scientific discoveries made by AI agents.

09 Jun 2025

MaAS introduces an 'agentic supernet' to enable dynamic, query-dependent allocation of multi-agent system resources and architectures. The framework achieves superior performance across diverse tasks while significantly reducing inference costs and demonstrating strong adaptability compared to prior fixed-architecture methods.

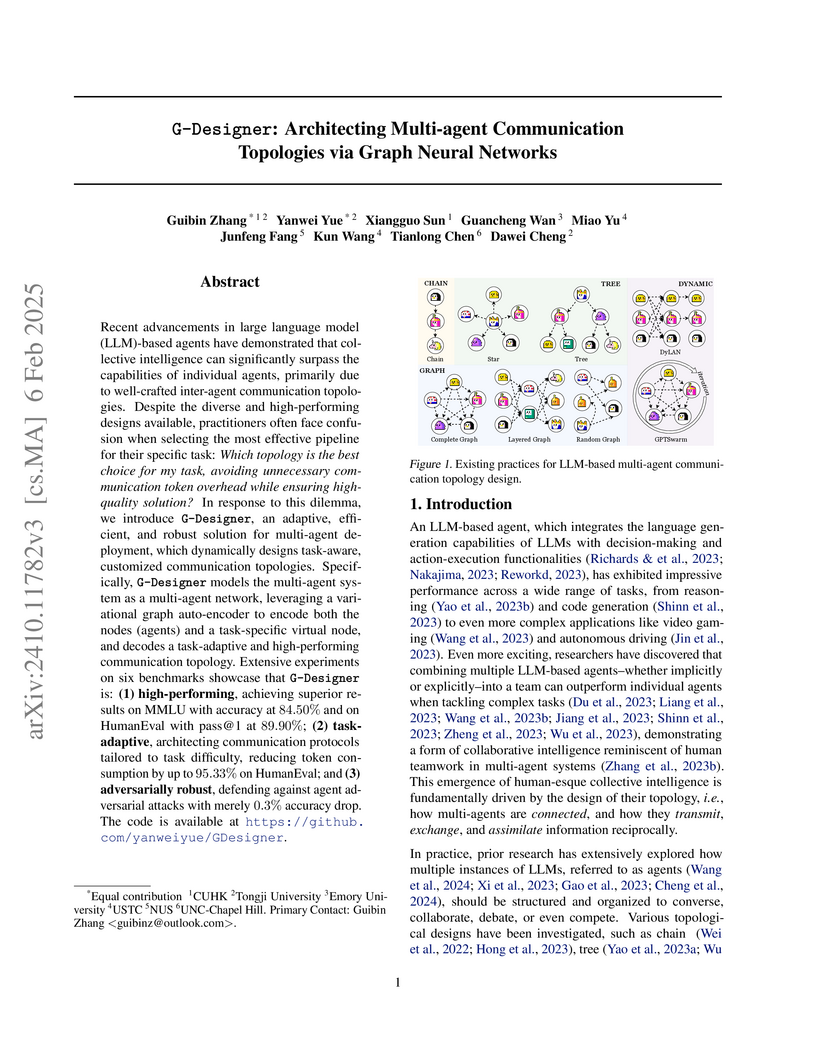

G-Designer introduces a framework using Graph Neural Networks to dynamically generate task-aware communication topologies for LLM-based multi-agent systems. This approach achieved superior performance on various benchmarks while significantly reducing token consumption by up to 95.33% and demonstrating high adversarial robustness.

This survey offers a comprehensive review of how Reinforcement Learning (RL) is applied across the entire lifecycle of Large Language Models, from pre-training to alignment and reinforced reasoning. It particularly emphasizes the role of Reinforcement Learning with Verifiable Rewards (RLVR) in advancing LLM reasoning capabilities and compiles key datasets, benchmarks, and open-source tools for the field.

SRPO (Self-Referential Policy Optimization) enhances Vision-Language-Action (VLA) models for robotic manipulation by addressing reward sparsity, generating dense, progress-wise rewards using the model's own successful trajectories and latent world representations from V-JEPA 2. The method achieved a 99.2% success rate on the LIBERO benchmark, a 103% relative improvement over its one-shot SFT baseline, and demonstrated strong generalization on the LIBERO-Plus benchmark.

SRPO enhances multimodal large language models by integrating explicit self-reflection and self-correction capabilities through a two-stage training framework. The approach achieves state-of-the-art performance among open-source models, scoring 78.5% on MathVista with SRPO-32B, and showing competitive results against leading closed-source models across diverse reasoning benchmarks.

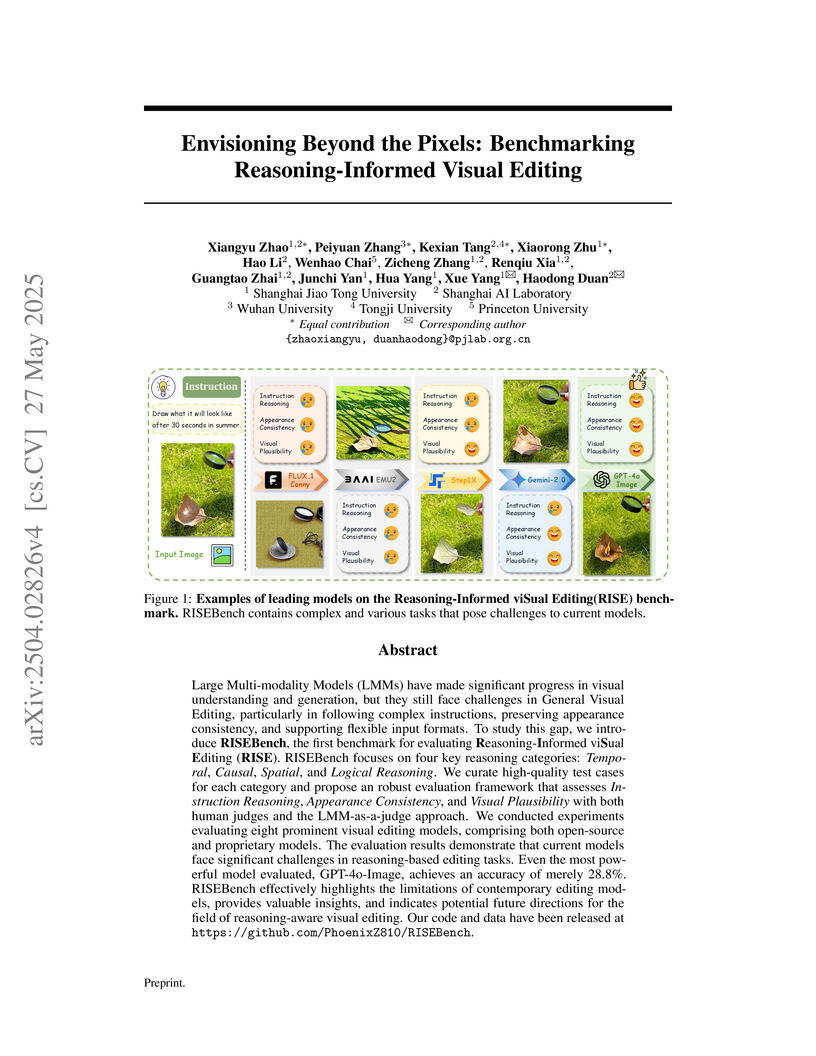

The RISEBench benchmark evaluates Large Multi-modality Models (LMMs) on Reasoning-Informed Visual Editing (RISE), assessing their capacity for visual modifications guided by complex instructions and logical inference across temporal, causal, spatial, and logical domains. Initial evaluations show leading proprietary models like GPT-4o-Image achieve only 28.9% overall accuracy, indicating substantial challenges, particularly in logical reasoning tasks.

Although large language models (LLMs) have significant potential to advance chemical discovery, current LLMs lack core chemical knowledge, produce unreliable reasoning trajectories, and exhibit suboptimal performance across diverse chemical tasks. To address these challenges, we propose Chem-R, a generalizable Chemical Reasoning model designed to emulate the deliberative processes of chemists. Chem-R is trained through a three-phase framework that progressively builds advanced reasoning capabilities, including: 1) Chemical Foundation Training, which establishes core chemical knowledge. 2) Chemical Reasoning Protocol Distillation, incorporating structured, expert-like reasoning traces to guide systematic and reliable problem solving. 3) Multi-task Group Relative Policy Optimization that optimizes the model for balanced performance across diverse molecular- and reaction-level tasks. This structured pipeline enables Chem-R to achieve state-of-the-art performance on comprehensive benchmarks, surpassing leading large language models, including Gemini-2.5-Pro and DeepSeek-R1, by up to 32% on molecular tasks and 48% on reaction tasks. Meanwhile, Chem-R also consistently outperforms the existing chemical foundation models across both molecular and reaction level tasks. These results highlight Chem-R's robust generalization, interpretability, and potential as a foundation for next-generation AI-driven chemical discovery. The code and model are available at this https URL.

R&D-Agent-Quant (R&D-Agent(Q)) is a multi-agent framework that automates the entire quantitative financial research and development pipeline by jointly optimizing financial factors and predictive models. It achieves superior investment strategy performance with up to 2 times higher annualized returns and 70% fewer factors compared to state-of-the-art baselines, demonstrating robustness across diverse financial markets.

A comprehensive survey proposes a novel two-dimensional taxonomy to organize research on integrating Large Language Models and Knowledge Graphs for Question Answering, detailing methods by complex QA categories and the specific functions KGs serve. The work reviews state-of-the-art techniques, highlights their utility in mitigating LLM weaknesses such as factual inaccuracy and limited reasoning, and identifies critical future research directions.

27 Aug 2025

NEMORI, a self-organizing agent memory system, was developed by researchers from Tongji University, Shanghai University of Finance and Economics, Beihang University, and Tanka AI, drawing inspiration from cognitive science to address the 'amnesia' of large language models. The system established new state-of-the-art performance on the LoCoMo dataset with an LLM score of 0.744 using gpt-4o-mini, while simultaneously reducing token usage by 88% compared to full context baselines.

This work uncovers that architectural decoupling in unified multimodal models (UMMs) improves performance by inducing task-specific attention patterns, rather than eliminating task conflicts. Researchers from CUHK MMLab and Meituan introduce an Attention Interaction Alignment (AIA) loss, a regularization technique that guides UMMs' attention toward optimal task-specific behaviors without architectural changes, enhancing both understanding and generation performance for models like Emu3 and Janus-Pro.

Robotic visuomotor policies achieve dramatically improved spatial generalization by removing proprioceptive state inputs. This approach leverages a relative end-effector action space and comprehensive egocentric vision to enable robust task performance across varied spatial configurations, while also enhancing data efficiency and cross-embodiment adaptation.

23 Sep 2025

Tianjin UniversityHuawei Noah’s Ark Lab Chinese Academy of Sciences

Chinese Academy of Sciences Imperial College London

Imperial College London Sun Yat-Sen University

Sun Yat-Sen University University of Manchester

University of Manchester University College LondonTongji University

University College LondonTongji University Shanghai Jiao Tong University

Shanghai Jiao Tong University Nanjing University

Nanjing University Tsinghua University

Tsinghua University Peking University

Peking University King’s College LondonTU DarmstadtPengcheng LaboratoryHong Kong University of Science and Technology (Guangzhou)

King’s College LondonTU DarmstadtPengcheng LaboratoryHong Kong University of Science and Technology (Guangzhou)

Chinese Academy of Sciences

Chinese Academy of Sciences Imperial College London

Imperial College London Sun Yat-Sen University

Sun Yat-Sen University University of Manchester

University of Manchester University College LondonTongji University

University College LondonTongji University Shanghai Jiao Tong University

Shanghai Jiao Tong University Nanjing University

Nanjing University Tsinghua University

Tsinghua University Peking University

Peking University King’s College LondonTU DarmstadtPengcheng LaboratoryHong Kong University of Science and Technology (Guangzhou)

King’s College LondonTU DarmstadtPengcheng LaboratoryHong Kong University of Science and Technology (Guangzhou)Researchers from a global consortium, including Tianjin University and Huawei Noah’s Ark Lab, developed Embodied Arena, a comprehensive platform for evaluating Embodied AI agents, featuring a systematic capability taxonomy and an automated, LLM-driven data generation pipeline. This platform integrates over 22 benchmarks and 30 models, revealing that specialized embodied models often outperform general models on targeted tasks and identifying object and spatial perception as key performance bottlenecks.

16 Feb 2025

MasRouter addresses the Multi-Agent System Routing (MASR) problem by dynamically configuring multi-agent systems to balance performance and cost. It uses a cascaded controller network to determine collaboration modes, allocate agent roles, and route agents to specific LLMs based on query characteristics, achieving Pareto optimality and improved cost-efficiency on benchmarks like HumanEval and MBPP.

There are no more papers matching your filters at the moment.