University of Extremadura

SpectralGPT introduces the first foundation model tailored for spectral remote sensing data, employing a 3D generative pretrained transformer with a specialized masking strategy. Trained on over one million spectral images, it achieves state-of-the-art performance across multiple Earth Observation tasks and exhibits robust spectral image reconstruction.

This survey provides a comprehensive review of multimodal geospatial foundation models (GFMs), detailing their core techniques, application domains, and persistent challenges. It establishes a modality-driven evaluation framework and charts future research directions to advance integrated Earth observation.

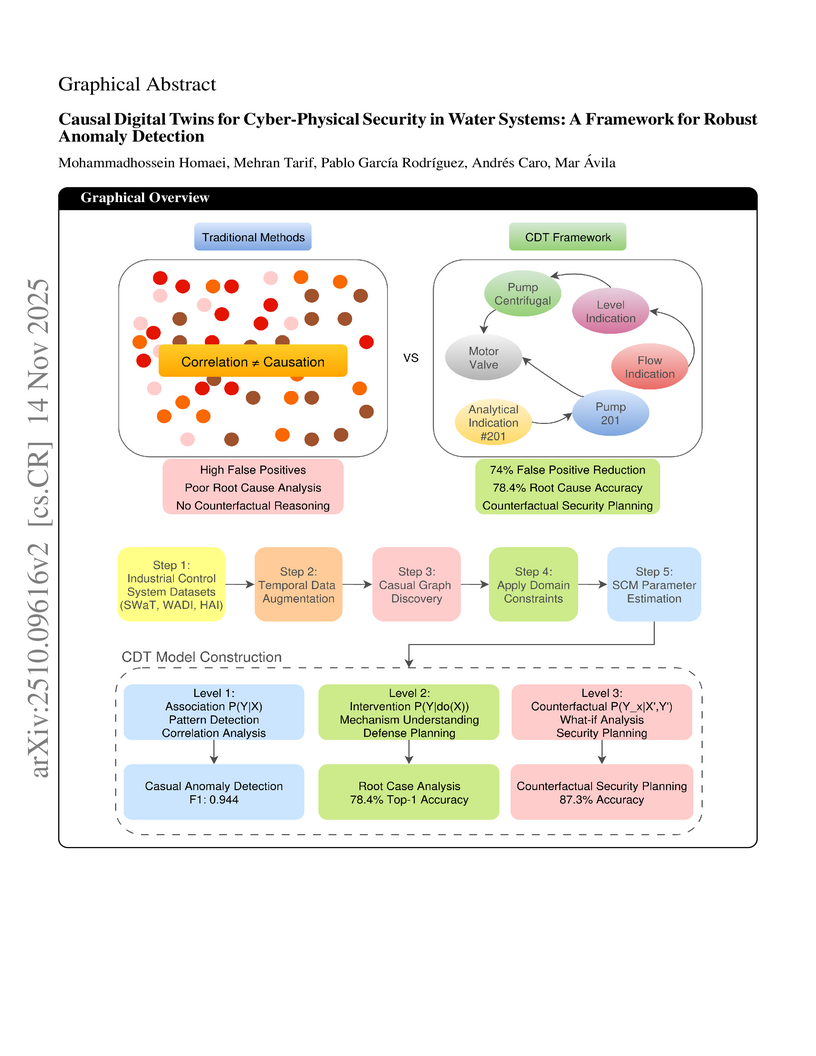

Industrial Control Systems (ICS) in water distribution and treatment face cyber-physical attacks exploiting network and physical vulnerabilities. Current water system anomaly detection methods rely on correlations, yielding high false alarms and poor root cause analysis. We propose a Causal Digital Twin (CDT) framework for water infrastructures, combining causal inference with digital twin modeling. CDT supports association for pattern detection, intervention for system response, and counterfactual analysis for water attack prevention. Evaluated on water-related datasets SWaT, WADI, and HAI, CDT shows 90.8% compliance with physical constraints and structural Hamming distance 0.133 ± 0.02. F1-scores are 0.944 ± 0.014 (SWaT), 0.902 ± 0.021 (WADI), 0.923 ± 0.018 (HAI, p < 0.0024). CDT reduces false positives by 74%, achieves 78.4% root cause accuracy, and enables counterfactual defenses reducing attack success by 73.2%. Real-time performance at 3.2 ms latency ensures safe and interpretable operation for medium-scale water systems.
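The structural Hamming distance quoted above compares a learned causal graph with a reference graph. The abstract does not say how the reported value was normalized, so the following is only a minimal sketch under stated assumptions: graphs are given as 0/1 adjacency matrices, a reversed edge counts as a single error, and the raw count is divided by the number of node pairs. The function name and the three-node example graph are hypothetical.

```python
def structural_hamming_distance(true_adj, learned_adj, normalize=True):
    """Count node pairs on which two directed graphs disagree.

    Both arguments are square 0/1 adjacency matrices (lists of lists),
    where adj[i][j] == 1 means there is an edge i -> j.
    """
    n = len(true_adj)
    mismatches = 0
    for i in range(n):
        for j in range(i + 1, n):
            # Treat the pair (i, j) as one unit so that a missing, extra,
            # or reversed edge each contributes exactly one error.
            true_pair = (true_adj[i][j], true_adj[j][i])
            learned_pair = (learned_adj[i][j], learned_adj[j][i])
            if true_pair != learned_pair:
                mismatches += 1
    if not normalize:
        return mismatches
    pairs = n * (n - 1) // 2
    # Assumption: normalize by the number of unordered node pairs.
    return mismatches / pairs if pairs else 0.0


# Hypothetical reference graph: pump -> flow -> level
reference = [[0, 1, 0],
             [0, 0, 1],
             [0, 0, 0]]
# Learned graph with the flow/level edge reversed
learned = [[0, 1, 0],
           [0, 0, 0],
           [0, 1, 0]]
raw_errors = structural_hamming_distance(reference, learned, normalize=False)
```

Other conventions exist (for example, counting a reversal as two errors, or normalizing by the number of reference edges), so values are only comparable when the same convention is used.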

Hyperspectral (HS) images are characterized by approximately contiguous spectral information, enabling the fine identification of materials by capturing subtle spectral discrepancies. Owing to their excellent locally contextual modeling ability, convolutional neural networks (CNNs) have been proven to be a powerful feature extractor in HS image classification. However, CNNs fail to mine and represent the sequence attributes of spectral signatures well due to the limitations of their inherent network backbone. To solve this issue, we rethink HS image classification from a sequential perspective with transformers, and propose a novel backbone network called SpectralFormer. Beyond band-wise representations in classic transformers, SpectralFormer is capable of learning spectrally local sequence information from neighboring bands of HS images, yielding group-wise spectral embeddings. More significantly, to reduce the possibility of losing valuable information in the layer-wise propagation process, we devise a cross-layer skip connection to convey memory-like components from shallow to deep layers by adaptively learning to fuse "soft" residuals across layers. It is worth noting that the proposed SpectralFormer is a highly flexible backbone network, which is applicable to both pixel- and patch-wise inputs. We evaluate the classification performance of the proposed SpectralFormer on three HS datasets by conducting extensive experiments, showing its superiority over classic transformers and achieving a significant improvement in comparison with state-of-the-art backbone networks. The codes of this work will be available at this https URL for the sake of reproducibility.

IGroupSS-Mamba introduces an efficient hyperspectral image classification framework leveraging a novel Interval Group S6 Mechanism and a cascaded spatial-spectral block. This approach achieves state-of-the-art accuracy on public HSI datasets while drastically reducing computational overhead compared to Transformer-based methods.

Hyperspectral imaging (HSI) is an advanced sensing modality that simultaneously captures spatial and spectral information, enabling non-invasive, label-free analysis of material, chemical, and biological properties. This Primer presents a comprehensive overview of HSI, from the underlying physical principles and sensor architectures to key steps in data acquisition, calibration, and correction. We summarize common data structures and highlight classical and modern analysis methods, including dimensionality reduction, classification, spectral unmixing, and AI-driven techniques such as deep learning. Representative applications across Earth observation, precision agriculture, biomedicine, industrial inspection, cultural heritage, and security are also discussed, emphasizing HSI's ability to uncover sub-visual features for advanced monitoring, diagnostics, and decision-making. Persistent challenges, such as hardware trade-offs, acquisition variability, and the complexity of high-dimensional data, are examined alongside emerging solutions, including computational imaging, physics-informed modeling, cross-modal fusion, and self-supervised learning. Best practices for dataset sharing, reproducibility, and metadata documentation are further highlighted to support transparency and reuse. Looking ahead, we explore future directions toward scalable, real-time, and embedded HSI systems, driven by sensor miniaturization, self-supervised learning, and foundation models. As HSI evolves into a general-purpose, cross-disciplinary platform, it holds promise for transformative applications in science, technology, and society.

Vision transformers (ViTs) have been trending in image classification tasks due to their promising performance when compared to convolutional neural networks (CNNs). As a result, many researchers have tried to incorporate ViTs in hyperspectral image (HSI) classification tasks. To achieve satisfactory performance, close to that of CNNs, transformers need fewer parameters. ViTs and other similar transformers use an external classification (CLS) token which is randomly initialized and often fails to generalize well, whereas other sources of multimodal datasets, such as light detection and ranging (LiDAR), offer the potential to improve these models by means of a CLS. In this paper, we introduce a new multimodal fusion transformer (MFT) network which comprises a multihead cross patch attention (mCrossPA) for HSI land-cover classification. Our mCrossPA utilizes other sources of complementary information in addition to the HSI in the transformer encoder to achieve better generalization. The concept of tokenization is used to generate CLS and HSI patch tokens, helping to learn a distinctive representation in a reduced and hierarchical feature space. Extensive experiments are carried out on widely used benchmark datasets, i.e., the University of Houston, Trento, University of Southern Mississippi Gulfpark (MUUFL), and Augsburg. We compare the results of the proposed MFT model with other state-of-the-art transformers, classical CNNs, and conventional classifier models. The superior performance achieved by the proposed model is due to the use of multihead cross patch attention. The source code will be made available publicly at this https URL.

Hyperspectral image (HSI) classification constitutes the fundamental research in remote sensing fields. Convolutional Neural Networks (CNNs) and Transformers have demonstrated impressive capability in capturing spectral-spatial contextual dependencies. However, these architectures suffer from limited receptive fields and quadratic computational complexity, respectively. Fortunately, recent Mamba architectures built upon the State Space Model integrate the advantages of long-range sequence modeling and linear computational efficiency, exhibiting substantial potential in low-dimensional scenarios. Motivated by this, we propose a novel 3D-Spectral-Spatial Mamba (3DSS-Mamba) framework for HSI classification, allowing for global spectral-spatial relationship modeling with greater computational efficiency. Technically, a spectral-spatial token generation (SSTG) module is designed to convert the HSI cube into a set of 3D spectral-spatial tokens. To overcome the limitations of traditional Mamba, which is confined to modeling causal sequences and inadaptable to high-dimensional scenarios, a 3D-Spectral-Spatial Selective Scanning (3DSS) mechanism is introduced, which performs pixel-wise selective scanning on 3D hyperspectral tokens along the spectral and spatial dimensions. Five scanning routes are constructed to investigate the impact of dimension prioritization. The 3DSS scanning mechanism combined with conventional mapping operations forms the 3D-spectral-spatial mamba block (3DMB), enabling the extraction of global spectral-spatial semantic representations. Experimental results and analysis demonstrate that the proposed method outperforms the state-of-the-art methods on HSI classification benchmarks.

University of Stuttgart, Beihang University, University of Pisa, Toronto Metropolitan University, University of Castilla - La Mancha, Kyushu University, National Institute of Informatics, Universidad de Sevilla, Johannes Kepler University Linz, University of Extremadura, Oslo Metropolitan University, Simula Research Laboratory

As quantum computers advance, the complexity of the software they can execute increases as well. To ensure this software is efficient, maintainable, reusable, and cost-effective (key qualities of any industry-grade software), mature software engineering practices must be applied throughout its design, development, and operation. However, the significant differences between classical and quantum software make it challenging to directly apply classical software engineering methods to quantum systems. This challenge has led to the emergence of Quantum Software Engineering as a distinct field within the broader software engineering landscape. In this work, a group of active researchers analyse in depth the current state of quantum software engineering research. From this analysis, the key areas of quantum software engineering are identified and explored in order to determine the most relevant open challenges that should be addressed in the coming years. These challenges help identify necessary breakthroughs and future research directions for advancing Quantum Software Engineering.

Detecting fraud in modern supply chains is a growing challenge, driven by the complexity of global networks and the scarcity of labeled data. Traditional detection methods often struggle with class imbalance and limited supervision, reducing their effectiveness in real-world applications. This paper proposes a novel two-phase learning framework to address these challenges. In the first phase, the Isolation Forest algorithm performs unsupervised anomaly detection to identify potential fraud cases and reduce the volume of data requiring further analysis. In the second phase, a self-training Support Vector Machine (SVM) refines the predictions using both labeled and high-confidence pseudo-labeled samples, enabling robust semi-supervised learning. The proposed method is evaluated on the DataCo Smart Supply Chain Dataset, a comprehensive real-world supply chain dataset with fraud indicators. It achieves an F1-score of 0.817 while maintaining a false positive rate below 3.0%. These results demonstrate the effectiveness and efficiency of combining unsupervised pre-filtering with semi-supervised refinement for supply chain fraud detection under real-world constraints, though we acknowledge limitations regarding concept drift and the need for comparison with deep learning approaches.

Detection of changes in heterogeneous remote sensing images is vital, especially in response to emergencies like earthquakes and floods. Current homogeneous transformation-based change detection (CD) methods often suffer from high computation and memory costs, which makes them poorly suited to edge-computing devices such as onboard CD devices on satellites. To address this issue, this paper proposes a new lightweight CD method for heterogeneous remote sensing images that employs the online all-integer pruning (OAIP) training strategy to efficiently fine-tune the CD network using the current test data. The proposed CD network consists of two visual geometry group (VGG) subnetworks as the backbone architecture. First, in the OAIP-based training process, all the weights, gradients, and intermediate data are quantized to integers to speed up training and reduce memory usage, where the per-layer block exponentiation scaling scheme is utilized to reduce the computation errors of network parameters caused by quantization. Second, an adaptive filter-level pruning method based on the L1-norm criterion is employed to further lighten the fine-tuning process of the CD network. Experimental results show that the proposed OAIP-based method attains similar detection performance (but with significantly reduced computation complexity and memory usage) in comparison with state-of-the-art CD methods.
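Filter-level pruning with an L1-norm criterion ranks each convolutional filter by the sum of the absolute values of its weights and discards the lowest-ranked ones. The abstract does not describe how the pruning ratio is adapted, so the sketch below assumes a fixed ratio supplied by the caller; the function names and the toy integer filters are hypothetical and are not taken from the paper.

```python
def l1_norm(weights):
    """Sum of absolute values over an arbitrarily nested list of numbers."""
    if isinstance(weights, (int, float)):
        return abs(weights)
    return sum(l1_norm(w) for w in weights)


def select_filters_to_keep(filters, prune_ratio):
    """Return the sorted indices of the filters that survive pruning.

    filters: list of filters, each a nested list of weights.
    prune_ratio: fraction of filters to drop (assumed fixed here,
                 whereas the paper describes an adaptive scheme).
    """
    n_prune = int(len(filters) * prune_ratio)
    # Rank filter indices from smallest to largest L1 norm.
    ranked = sorted(range(len(filters)), key=lambda i: l1_norm(filters[i]))
    # Drop the n_prune weakest filters and keep the rest in original order.
    return sorted(ranked[n_prune:])


# Four toy 2x2 filters with L1 norms 2, 20, 1 and 6.
toy_filters = [
    [[1, -1], [0, 0]],
    [[5, 5], [5, 5]],
    [[0, 0], [0, 1]],
    [[-3, 2], [1, 0]],
]
kept = select_filters_to_keep(toy_filters, prune_ratio=0.5)
```

With a ratio of 0.5, the two filters with the smallest norms (indices 2 and 0) are removed and indices 1 and 3 are kept.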

The constraints of the NISQ (Noisy Intermediate-Scale Quantum) era, namely high sensitivity to noise and limited qubit counts, impose significant barriers on the usability of QPUs (Quantum Processing Units). To overcome these challenges, researchers are exploring methods to maximize the utility of existing QPUs despite their limitations. Building upon the idea that the execution of a quantum circuit's shots need not be treated as a single monolithic unit, we propose a methodological framework, termed shot-wise, which enables the distribution of shots for a single circuit across multiple QPUs. Our framework features customizable policies to adapt to various scenarios. Additionally, it introduces a calibration method to pre-evaluate the accuracy and reliability of each QPU's output before the actual distribution process and an incremental execution mechanism for dynamically managing the shot allocation and policy updates. Such an approach enables flexible and fine-grained management of the distribution process, taking into account various user-defined constraints and (contrasting) objectives. Experimental findings show that while these strategies generally do not exceed the best individual QPU results, they maintain robustness and align closely with average outcomes. Overall, the shot-wise methodology improves result stability and often outperforms single QPU runs, offering a flexible approach to managing variability in quantum computing.
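The core idea of distributing one circuit's shots over several QPUs can be illustrated with two small steps: a split policy that decides how many shots each device receives, and a merge step that combines the per-device measurement counts. The sketch below is not the framework's actual API; the function names, the proportional-weight policy, and the device labels are assumptions chosen for illustration.

```python
from collections import Counter


def split_shots(total_shots, weights):
    """Split total_shots across QPUs proportionally to positive weights.

    Uses the largest-remainder method so the allocation sums exactly
    to total_shots.
    """
    total_weight = sum(weights.values())
    exact = {q: total_shots * w / total_weight for q, w in weights.items()}
    alloc = {q: int(x) for q, x in exact.items()}
    leftover = total_shots - sum(alloc.values())
    # Give the remaining shots to the QPUs with the largest fractional parts.
    by_fraction = sorted(exact, key=lambda q: exact[q] - alloc[q], reverse=True)
    for q in by_fraction[:leftover]:
        alloc[q] += 1
    return alloc


def merge_counts(per_qpu_counts):
    """Sum measurement-outcome counts returned by the individual QPUs."""
    merged = Counter()
    for counts in per_qpu_counts:
        merged.update(counts)
    return dict(merged)


# Hypothetical weights, e.g. derived from a prior calibration run.
allocation = split_shots(1000, {"qpu_a": 2, "qpu_b": 1, "qpu_c": 1})
merged = merge_counts([{"00": 3, "11": 2}, {"00": 1, "01": 4}])
```

A simple sum is only one possible merge policy; a weighted merge that discounts noisier devices would follow the same structure.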

Hyperspectral pansharpening consists of fusing a high-resolution panchromatic band and a low-resolution hyperspectral image to obtain a new image with high resolution in both the spatial and spectral domains. These remote sensing products are valuable for a wide range of applications, driving ever-growing research efforts. Nonetheless, results still do not meet application demands. In part, this comes from the technical complexity of the task: compared to multispectral pansharpening, many more bands are involved, in a spectral range only partially covered by the panchromatic component and with overwhelming noise. However, another major limiting factor is the absence of a comprehensive framework for the rapid development and accurate evaluation of new methods. This paper attempts to address this issue.

We started by designing a dataset large and diverse enough to allow reliable training (for data-driven methods) and testing of new methods. Then, we selected a set of state-of-the-art methods, following different approaches, characterized by promising performance, and reimplemented them in a single PyTorch framework. Finally, we carried out a critical comparative analysis of all methods, using the most accredited quality indicators. The analysis highlights the main limitations of current solutions in terms of spectral/spatial quality and computational efficiency, and suggests promising research directions.

To ensure full reproducibility of the results and support future research, the framework (including codes, evaluation procedures and links to the dataset) is shared on this https URL, as a single Python-based reference benchmark toolbox.

18 Feb 2020

An exploratory, descriptive analysis is presented of the national orientation of scientific, scholarly journals as reflected in the affiliations of publishing or citing authors. It calculates for journals covered in Scopus an Index of National Orientation (INO), and analyses the distribution of INO values across disciplines and countries, and the correlation between INO values and journal impact factors. The study did not find solid evidence that journal impact factors are good measures of journal internationality in terms of the geographical distribution of publishing or citing authors, as the relationship between a journal's national orientation and its citation impact is found to be inverse U-shaped. In addition, journals publishing in English are not necessarily internationally oriented in terms of the affiliations of publishing or citing authors; in the social sciences and humanities, the USA also has its own nationally oriented literature. The paper examines the extent to which nationally oriented journals that entered Scopus in earlier years have become more international in recent years. It is found that in the study set about 40 per cent of such journals do reveal traces of internationalization, while the use of English as publication language and an Open Access (OA) status are important determinants.
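The abstract does not spell out how the INO is computed. A common reading, assumed here, is that a journal's INO is the percentage of its papers that have at least one author from the country that contributes most often to that journal. The sketch below follows that assumption; the function name and the sample data are hypothetical.

```python
from collections import Counter


def index_of_national_orientation(paper_countries):
    """Return (country, INO in percent) for one journal.

    paper_countries: one iterable of country codes per paper, listing the
    countries found in that paper's author affiliations.
    Assumed definition: share of papers involving the most frequent country.
    """
    if not paper_countries:
        raise ValueError("at least one paper is required")
    counts = Counter()
    for countries in paper_countries:
        # Count each country at most once per paper.
        counts.update(set(countries))
    if not counts:
        raise ValueError("no affiliation countries found")
    country, n_papers = counts.most_common(1)[0]
    return country, 100.0 * n_papers / len(paper_countries)


# Four hypothetical papers in one journal.
sample = [{"ES"}, {"ES", "PT"}, {"ES"}, {"US"}]
top_country, ino = index_of_national_orientation(sample)
```

Under this reading, a value near 100 indicates a strongly national journal, while a low value indicates contributions spread over many countries. The same computation can be applied to citing rather than publishing authors.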

No research that combines hybrid and fractional-order systems into a cohesive and multifaceted whole can be found in the literature. This paper focuses on such a synergistic approach to the theories of both branches, which is believed to give additional flexibility and help to the system designer. It is Part I of two companion papers and introduces the fundamentals of fractional-order hybrid systems, in particular, modeling and stability analysis of two kinds of such systems, i.e., fractional-order switching and reset control systems. Some examples are given to illustrate the applicability and effectiveness of the developed theory. Part II will focus on fractional-order hybrid control.

Digital twins (DTs) help improve real-time monitoring and decision-making in water distribution systems. However, their connectivity makes them easy targets for cyberattacks such as scanning, denial-of-service (DoS), and unauthorized access. Small and medium-sized enterprises (SMEs) that manage these systems often do not have enough budget or staff to build strong cybersecurity teams. To solve this problem, we present a Virtual Cybersecurity Department (VCD), an affordable and automated framework designed for SMEs. The VCD uses open-source tools like Zabbix for real-time monitoring, Suricata for network intrusion detection, Fail2Ban to block repeated login attempts, and simple firewall settings. To improve threat detection, we also add a machine-learning-based IDS trained on the OD-IDS2022 dataset using an improved ensemble model. This model detects cyber threats such as brute-force attacks, remote code execution (RCE), and network flooding, with 92% accuracy and fewer false alarms. Our solution gives SMEs a practical and efficient way to secure water systems using low-cost and easy-to-manage tools.

The statistical-mechanical study of the equilibrium properties of fluids, starting from the knowledge of the interparticle interaction potential, is essential to understand the role that microscopic interactions between individual particles play in the properties of the fluid. The study of these properties from a fundamental point of view is therefore a central goal in condensed matter physics. These properties, however, might vary greatly when a fluid is confined to extremely narrow channels and, therefore, must be examined separately. This thesis investigates fluids in narrow pores, where particles are forced to stay in single-file formation and cannot pass one another. The resulting systems are highly anisotropic: motion is free along the channel axis but strongly restricted transversely. To quantify these effects, equilibrium properties of the confined fluids are compared with their bulk counterparts, exposing the role of dimensionality. We also develop a novel theoretical framework based on a mapping approach that converts single-file fluids with nearest-neighbor interactions into an equivalent one-dimensional mixture. This exact isomorphism delivers closed expressions for thermodynamic and structural quantities. It allows us to compute the anisotropic pressure tensor and revises definitions of spatial correlations to take into account spatial anisotropy. The theory is applied to hard-core, square-well, square-shoulder, and anisotropic hard-body models, revealing phenomena such as zigzag ordering and structural crossovers of spatial correlations. Analytical predictions are extensively validated against Monte Carlo and molecular dynamics simulations (both original and from the literature), showing excellent agreement across the studied parameter ranges.

13 Mar 2023

Grover's algorithm is a well-known contribution to quantum computing. It searches for one value within an unordered sequence faster than any classical algorithm. A fundamental part of this algorithm is the so-called oracle, a quantum circuit that marks the quantum state corresponding to the desired value. A generalization of it is the oracle for Amplitude Amplification, which marks multiple desired states. In this work we present a classical algorithm that builds a phase-marking oracle for Amplitude Amplification. This oracle performs a less-than operation, marking states representing natural numbers smaller than a given one. Results of both simulations and experiments are shown to demonstrate its functionality. This less-than oracle implementation works on any number of qubits and does not require any ancilla qubits. Regarding depth, the proposed implementation is compared with the one generated by Qiskit's automatic method, UnitaryGate. We show that the depth of our less-than oracle implementation is always lower. This difference is significant enough for our method to outperform UnitaryGate on real quantum hardware.
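A phase-marking less-than oracle acts as a diagonal unitary: it multiplies the amplitude of every basis state |x> with x < m by -1 and leaves the others unchanged. The sketch below only writes down that target diagonal and applies it to a state vector in plain Python. It is not the paper's gate-level construction, and the function names are hypothetical.

```python
def less_than_oracle_diagonal(n_qubits, m):
    """Diagonal entries of a phase oracle that marks all states x < m.

    Entry x is -1 if the natural number x is smaller than m, else +1.
    """
    dim = 2 ** n_qubits
    if not 0 <= m <= dim:
        raise ValueError("m must lie between 0 and 2**n_qubits")
    return [-1 if x < m else 1 for x in range(dim)]


def apply_diagonal(diagonal, amplitudes):
    """Apply a diagonal operator to a state vector, entry by entry."""
    return [d * a for d, a in zip(diagonal, amplitudes)]


# Three qubits, marking every state below 5.
diag = less_than_oracle_diagonal(3, 5)

# Uniform superposition over the 8 basis states.
uniform = [8 ** -0.5] * 8
marked = apply_diagonal(diag, uniform)
```

Any circuit proposed for the oracle can be checked against this diagonal: its unitary should match it up to a global phase.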

08 May 2024

Victor Hugo's timeless observation, "Nothing is more powerful than an idea whose time has come", resonates today as Quantum Computing, once only a physicist's dream, stands at the threshold of reality with the potential to revolutionise the world. To comprehend the surge of attention it commands today, one must delve into the motivations that birthed and nurtured Quantum Computing. While the past of Quantum Computing provides insights into the present, the future could unfold through the lens of Quantum Software Engineering. Quantum Software Engineering, guided by its principles and methodologies, investigates the most effective ways to interact with Quantum Computers to unlock their true potential and usher in a new era of possibilities. To gain insight into the present landscape and anticipate the trajectory of Quantum Computing and Quantum Software Engineering, this paper embarks on a journey through their evolution and outlines potential directions for future research. By doing so, we aim to equip readers (ideally software engineers and computer scientists, not necessarily with quantum expertise) with the insights necessary to navigate the ever-evolving landscape of Quantum Computing and anticipate the trajectories that lie ahead.

Hyperspectral unmixing has been an important technique that estimates a set of endmembers and their corresponding abundances from a hyperspectral image (HSI). Nonnegative matrix factorization (NMF) plays an increasingly significant role in solving this problem. In this article, we present a comprehensive survey of the NMF-based methods proposed for hyperspectral unmixing. Taking the NMF model as a baseline, we show how to improve NMF by utilizing the main properties of HSIs (e.g., spectral, spatial, and structural information). We categorize three important development directions including constrained NMF, structured NMF, and generalized NMF. Furthermore, several experiments are conducted to illustrate the effectiveness of associated algorithms. Finally, we conclude the article with possible future directions with the purposes of providing guidelines and inspiration to promote the development of hyperspectral unmixing.
