University of Gothenburg

Large language models are rapidly transforming social science research by enabling the automation of labor-intensive tasks like data annotation and text analysis. However, LLM outputs vary significantly depending on the implementation choices made by researchers (e.g., model selection or prompting strategy). Such variation can introduce systematic biases and random errors, which propagate to downstream analyses and cause Type I (false positive), Type II (false negative), Type S (wrong sign), or Type M (exaggerated effect) errors. We call this phenomenon where configuration choices lead to incorrect conclusions LLM hacking.

We find that intentional LLM hacking is strikingly simple. By replicating 37 data annotation tasks from 21 published social science studies, we show that, with just a handful of prompt paraphrases, virtually anything can be presented as statistically significant.

Beyond intentional manipulation, our analysis of 13 million labels from 18 different LLMs across 2361 realistic hypotheses shows that there is also a high risk of accidental LLM hacking, even when following standard research practices. We find incorrect conclusions in approximately 31% of hypotheses for state-of-the-art LLMs, and in half the hypotheses for smaller language models. While higher task performance and stronger general model capabilities reduce LLM hacking risk, even highly accurate models remain susceptible. The risk of LLM hacking decreases as effect sizes increase, indicating the need for more rigorous verification of LLM-based findings near significance thresholds. We analyze 21 mitigation techniques and find that human annotations provide crucial protection against false positives. Common regression estimator correction techniques can restore valid inference but trade off Type I vs. Type II errors.

We publish a list of practical recommendations to prevent LLM hacking.
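The four error types named above can be made concrete with a small sketch. It assumes each hypothesis has a reference estimate (for example from human annotations) and an LLM-based estimate, each with an effect and a p-value. The names `Estimate` and `classify_error`, the 0.05 threshold, and the factor-of-two cutoff for Type M are illustrative assumptions, not definitions taken from the study.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    effect: float   # estimated coefficient for the hypothesis
    p_value: float  # p-value of that coefficient


def classify_error(truth: Estimate, llm: Estimate,
                   alpha: float = 0.05, m_ratio: float = 2.0) -> str:
    """Compare an LLM-based estimate with a reference estimate.

    alpha and m_ratio are assumed thresholds chosen for illustration.
    """
    truth_sig = truth.p_value < alpha
    llm_sig = llm.p_value < alpha
    if llm_sig and not truth_sig:
        return "Type I"   # false positive
    if truth_sig and not llm_sig:
        return "Type II"  # false negative
    if truth_sig and llm_sig:
        if truth.effect * llm.effect < 0:
            return "Type S"  # significant, but with the wrong sign
        if abs(llm.effect) > m_ratio * abs(truth.effect):
            return "Type M"  # significant, but exaggerated magnitude
    return "correct"


print(classify_error(Estimate(0.10, 0.40), Estimate(0.30, 0.01)))
```

Running the same comparison over many model and prompt configurations for one hypothesis would show how often a configuration choice alone changes the conclusion.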

27 Feb 2024

CNRS; University of Southern California; National University of Singapore; Georgia Institute of Technology; Beihang University; Osaka University; Zhejiang University; Cornell University; Northwestern University; University of Texas at Austin; Nanyang Technological University; Purdue University; University of Illinois at Chicago; University of Vienna; University of Texas at Dallas; Virginia Commonwealth University; University of California at Los Angeles; University of Virginia; University of Messina; Pontifical Catholic University of Rio de Janeiro; University of South Carolina; Kanazawa University; Indian Institute of Technology Roorkee; University of Gothenburg; Federal University of Rio de Janeiro; Thales; Politecnico di Bari; University of California at Santa Barbara; Technical University Delft; University of California at San Diego; Toshiba Corporation; A*STAR; University of Duisburg-Essen; Interuniversity Microelectronics Center (IMEC); Laboratoire d'Informatique, de Robotique et de Microélectronique de Montpellier

In the "Beyond Moore's Law" era, with increasing edge intelligence, domain-specific computing embracing unconventional approaches will become increasingly prevalent. At the same time, adopting a variety of nanotechnologies will offer benefits in energy cost, computational speed, reduced footprint, cyber resilience, and processing power. The time is ripe for a roadmap for unconventional computing with nanotechnologies to guide future research, and this collection aims to fill that need. The authors provide a comprehensive roadmap for neuromorphic computing using electron spins, memristive devices, two-dimensional nanomaterials, nanomagnets, and various dynamical systems. They also address other paradigms such as Ising machines, Bayesian inference engines, probabilistic computing with p-bits, processing in memory, quantum memories and algorithms, computing with skyrmions and spin waves, and brain-inspired computing for incremental learning and problem-solving in severely resource-constrained environments. These approaches have advantages over traditional Boolean computing based on von Neumann architecture. As the computational requirements for artificial intelligence grow 50 times faster than Moore's Law for electronics, more unconventional approaches to computing and signal processing will appear on the horizon, and this roadmap will help identify future needs and challenges. Experts in this fertile field present some of the dominant and most promising technologies for unconventional computing, which are expected to remain relevant for some time to come. Within a holistic approach, the goal is to provide pathways for solidifying the field and guiding future impactful discoveries.

CNRS; Freie Universität Berlin; University of Oxford; TU Dortmund University; German Research Center for Artificial Intelligence (DFKI); University of Innsbruck; Collège de France; Max Planck Institute for the Science of Light; Friedrich-Alexander-Universität Erlangen-Nürnberg; Institut Polytechnique de Paris; University of Latvia; University of Turku; Saarland University; Fondazione Bruno Kessler; TU Wien; Chalmers University of Technology; Forschungszentrum Jülich; University of Regensburg; University of Florence; University of Augsburg; University of Gothenburg; Leiden Institute of Physics; Donostia International Physics Center; Johannes Kepler University Linz; Fraunhofer Heinrich-Hertz-Institute; SAP SE; Friedrich-Schiller-University Jena; European Centre for Theoretical Studies in Nuclear Physics and Related Areas (ECT*); EPITA Research Lab; Leiden Institute of Advanced Computer Science; ÖAW; Vienna Center for Quantum Science and Technology; Atominstitut; University of Applied Sciences Zittau/Görlitz; IQOQI Vienna; Fraunhofer IOSB-AST; Université PSL; Inria Paris–Saclay; Université Paris Diderot; École Polytechnique; University of Naples "Federico II"; INFN Sezione di Firenze

A collaborative white paper coordinated by the Quantum Community Network comprehensively analyzes the current status and future perspectives of Quantum Artificial Intelligence, categorizing its potential into "Quantum for AI" and "AI for Quantum" applications. It proposes a strategic research and development agenda to bolster Europe's competitive position in this rapidly converging technological domain.

08 Oct 2025

Researchers at Chalmers University of Technology developed Transferable Implicit Transfer Operators (TITO), a deep generative model that bridges femtosecond to nanosecond timescales in molecular dynamics simulations. TITO accurately reproduces both equilibrium and kinetic properties of molecular systems, achieving up to a 15,000-fold acceleration over conventional molecular dynamics while demonstrating transferability to unseen molecules.

We introduce FLOWR, a novel structure-based framework for the generation and optimization of three-dimensional ligands. FLOWR integrates continuous and categorical flow matching with equivariant optimal transport, enhanced by an efficient protein pocket conditioning. Alongside FLOWR, we present SPINDR, a thoroughly curated dataset comprising ligand-pocket co-crystal complexes specifically designed to address existing data quality issues. Empirical evaluations demonstrate that FLOWR surpasses current state-of-the-art diffusion- and flow-based methods in terms of PoseBusters-validity, pose accuracy, and interaction recovery, while offering a significant inference speedup, achieving up to 70-fold faster performance. In addition, we introduce FLOWR:multi, a highly accurate multi-purpose model allowing for the targeted sampling of novel ligands that adhere to predefined interaction profiles and chemical substructures for fragment-based design without the need of re-training or any re-sampling strategies.

Many researchers have reached the conclusion that AI models should be trained to be aware of the possibility of variation and disagreement in human judgments, and evaluated on their ability to recognize such variation. The LEWIDI series of shared tasks on Learning With Disagreements was established to promote this approach to training and evaluating AI models, by making suitable datasets more accessible and by developing evaluation methods. The third edition of the task builds on this goal by extending the LEWIDI benchmark to four datasets spanning paraphrase identification, irony detection, sarcasm detection, and natural language inference, with labeling schemes that include not only categorical judgments as in previous editions, but ordinal judgments as well. Another novelty is that we adopt two complementary paradigms to evaluate disagreement-aware systems: the soft-label approach, in which models predict population-level distributions of judgments, and the perspectivist approach, in which models predict the interpretations of individual annotators. Crucially, we moved beyond standard metrics such as cross-entropy, and tested new evaluation metrics for the two paradigms. The task attracted diverse participation, and the results provide insights into the strengths and limitations of methods for modeling variation. Together, these contributions strengthen LEWIDI as a framework and provide new resources, benchmarks, and findings to support the development of disagreement-aware technologies.
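To illustrate the soft-label paradigm described above, the following minimal sketch turns the judgments of several annotators into a population-level distribution and scores a predicted distribution with cross-entropy, the standard metric the abstract says the task moved beyond. The helper names `soft_label` and `cross_entropy` and the toy judgments are assumptions for illustration; the newer metrics used in the shared task are not reproduced here.

```python
import math
from collections import Counter


def soft_label(judgments, classes):
    """Relative frequency of each class among the annotators' judgments."""
    counts = Counter(judgments)
    total = len(judgments)
    return [counts[c] / total for c in classes]


def cross_entropy(target, predicted, eps=1e-12):
    """Cross-entropy of a predicted distribution against a soft label."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, predicted))


classes = ["ironic", "not_ironic"]
# Hypothetical judgments from four annotators on one item.
target = soft_label(["ironic", "ironic", "not_ironic", "ironic"], classes)

print(target)
print(cross_entropy(target, [0.5, 0.5]))
```

A perspectivist evaluation would instead keep the four judgments separate and score a prediction per annotator.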

17 Oct 2025

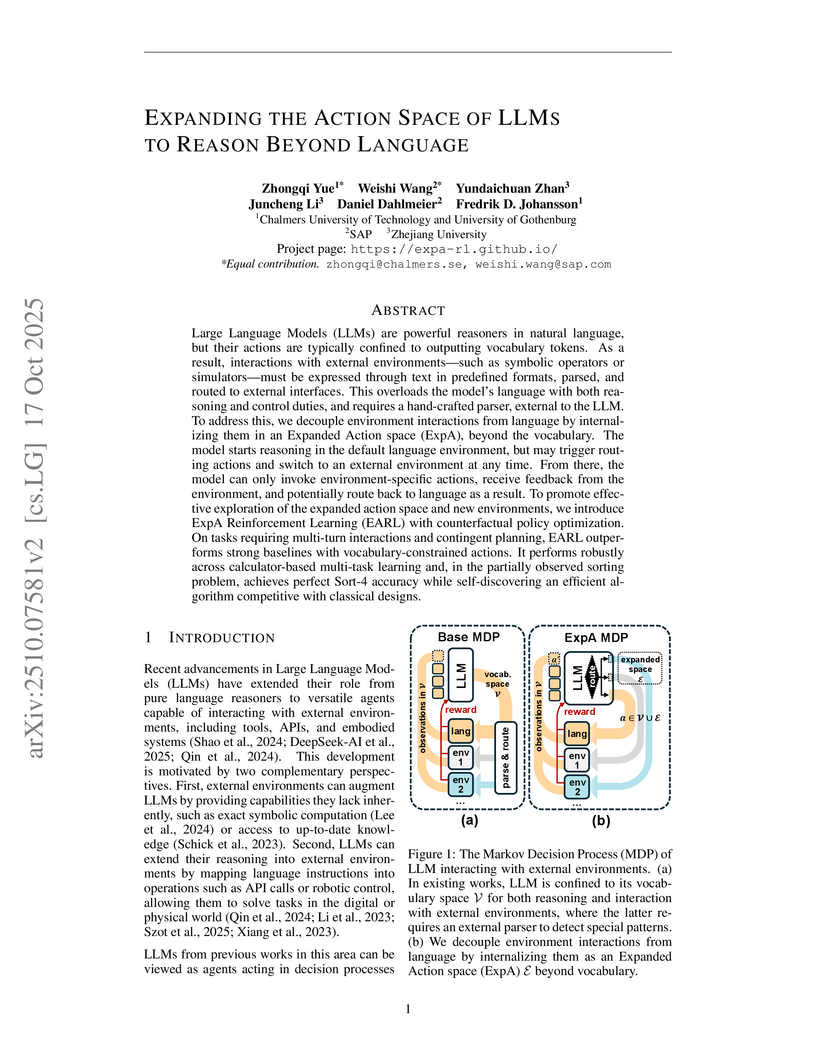

Researchers introduce the Expanded Action space (ExpA) framework and the ExpA Reinforcement Learning (EARL) algorithm, enabling Large Language Models to directly interact with external environments beyond language. This approach decouples linguistic reasoning from environmental control, achieving state-of-the-art performance on complex mathematical and contingent planning tasks, and demonstrating efficient algorithm discovery.

21 Oct 2025

Researchers developed a reproducible framework for integrating Large Language Models (LLMs) into reflexive Thematic Analysis (TA) in qualitative software engineering research. The study demonstrated that expert evaluators preferred LLM-generated codes 61% of the time over human-generated codes, while also identifying LLM limitations in latent interpretation and thematic coherence.

21 Aug 2025

Large language models (LLMs) and agentic systems present exciting opportunities to accelerate drug discovery. In this study, we examine the modularity of LLM-based agentic systems for drug discovery, i.e., whether parts of the system such as the LLM and type of agent are interchangeable, a topic that has received limited attention in drug discovery. We compare the performance of different LLMs and the effectiveness of tool-calling agents versus code-generating agents. Our case study, comparing performance in orchestrating tools for chemistry and drug discovery using an LLM-as-a-judge score, shows that Claude-3.5-Sonnet, Claude-3.7-Sonnet and GPT-4o outperform alternative language models such as Llama-3.1-8B, Llama-3.1-70B, GPT-3.5-Turbo, and Nova-Micro. Although we confirm that code-generating agents outperform the tool-calling ones on average, we show that this is highly question- and model-dependent. Furthermore, the impact of replacing system prompts depends on the question and model, underscoring that even in this particular domain one cannot simply replace components of the system without re-engineering. Our study highlights the necessity of further research into the modularity of agentic systems to enable the development of reliable and modular solutions for real-world problems.

Recovering unbiased properties from biased or perturbed simulations is a central challenge in rare-event sampling. Classical Girsanov Reweighting (GR) offers a principled solution by yielding exact pathwise probability ratios between perturbed and reference processes. However, the variance of GR weights grows rapidly with time, rendering it impractical for long-horizon reweighting. We introduce Marginal Girsanov Reweighting (MGR), which mitigates variance explosion by marginalizing over intermediate paths, producing stable and scalable weights for long-timescale dynamics. Experiments demonstrate that MGR (i) accurately recovers kinetic properties from umbrella-sampling trajectories in molecular dynamics, and (ii) enables efficient Bayesian parameter inference for stochastic differential equations with temporally sparse observations.
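As a rough illustration of the classical pathwise reweighting that the abstract takes as its starting point (not the marginal variant it introduces), the sketch below simulates a perturbed Ornstein-Uhlenbeck process with Euler-Maruyama and accumulates the log probability ratio of each path under the reference dynamics. The drift, the constant bias force, the step size, and the function name `simulate_with_girsanov` are all assumptions chosen for this toy example.

```python
import numpy as np


def simulate_with_girsanov(n_paths=20000, n_steps=100, dt=0.01,
                           sigma=1.0, bias=0.5, seed=0):
    """Simulate dX = (-X + bias) dt + sigma dW and return the end points
    together with log-weights relative to the reference dX = -X dt + sigma dW."""
    rng = np.random.default_rng(seed)
    x = np.zeros(n_paths)
    log_w = np.zeros(n_paths)
    for _ in range(n_steps):
        xi = rng.standard_normal(n_paths)
        u = np.full(n_paths, bias)  # perturbation added to the reference drift
        x = x + (-x + u) * dt + sigma * np.sqrt(dt) * xi
        # Per-step log ratio of reference to perturbed transition densities.
        log_w += -(u / sigma) * np.sqrt(dt) * xi - 0.5 * (u / sigma) ** 2 * dt
    return x, log_w


x, log_w = simulate_with_girsanov()
w = np.exp(log_w)
print("perturbed mean:  ", x.mean())
print("reweighted mean: ", np.mean(w * x))
print("variance of log-weights:", log_w.var())
```

In this toy setting the variance of the log-weights grows linearly with the simulated time, which is the behavior that makes pathwise weights impractical over long horizons.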

As natural language corpora expand at an unprecedented rate, manual annotation remains a significant methodological bottleneck in corpus linguistic work. We address this challenge by presenting a scalable, unsupervised pipeline for automating grammatical annotation in voluminous corpora using large language models (LLMs). Unlike previous supervised and iterative approaches, our method employs a four-phase workflow: prompt engineering, pre-hoc evaluation, automated batch processing, and post-hoc validation. We demonstrate the pipeline's accessibility and effectiveness through a diachronic case study of variation in the English consider construction. Using GPT-5 through the OpenAI API, we annotate 143,933 sentences from the Corpus of Historical American English (COHA) in under 60 hours, achieving 98%+ accuracy on two sophisticated annotation procedures. Our results suggest that LLMs can perform a range of data preparation tasks at scale with minimal human intervention, opening new possibilities for corpus-based research, though implementation requires attention to costs, licensing, and other ethical considerations.

Researchers from MIT, the Broad Institute, and the Vector Institute developed a six-test black-box framework to assess memorization risks in Electronic Health Record Foundation Models (EHR-FMs), revealing that while a benchmark model showed low embedding-level leakage, generative memorization of sensitive attributes increased with prompt detail, particularly for specific rare conditions or when predictions relied on patient-specific factors.

A Feynman diagram framework is introduced to systematically compute finite-width corrections for Neural Tangent Kernels (NTKs) and related statistics in Multi-Layer Perceptrons. This method simplifies complex algebraic derivations, enabling the calculation of a novel recursion for the mean NTK correction and demonstrating that infinite-width criticality stabilizes all NTK statistics to all orders in width, with a specific finding that diagonal NTK elements have no finite-width corrections for scale-invariant activations.

An observational study investigated how professional software engineers utilize ChatGPT across various tasks and the factors influencing their experience and trust in the tool. It revealed that engineers primarily use ChatGPT for expert consultation and training, not just code generation, with perceived usefulness and trust shaped by prompt context, user expectations, and company policies.

22 May 2025

This empirical study investigates integrating Large Language Models (LLMs) into existing code review workflows, conducted in collaboration with WirelessCar and Chalmers University of Technology. It found that developers prefer context-dependent interaction modes and that LLM assistance improves review efficiency and thoroughness, provided trust and proper integration are established.

FlexiFlow introduces a generative model that simultaneously produces molecular graphs and their low-energy conformational ensembles, achieving state-of-the-art molecular generation metrics and high-quality, diverse conformers efficiently, even for protein-conditioned ligand design.

10 Feb 2025

Reinforcement learning (RL) has emerged as a powerful tool for tackling control problems, but its practical application is often hindered by the complexity arising from intricate reward functions with multiple terms. The reward hypothesis posits that any objective can be encapsulated in a scalar reward function, yet balancing individual, potentially adversarial, reward terms without exploitation remains challenging. To overcome the limitations of traditional RL methods, which often require precise balancing of competing reward terms, we propose a two-stage reward curriculum that first maximizes a simple reward function and then transitions to the full, complex reward. We provide a method based on how well an actor fits a critic to automatically determine the transition point between the two stages. Additionally, we introduce a flexible replay buffer that enables efficient phase transfer by reusing samples from one stage in the next. We evaluate our method on the DeepMind control suite, modified to include an additional constraint term in the reward definitions. We further evaluate our method in a mobile robot scenario with even more competing reward terms. In both settings, our two-stage reward curriculum achieves a substantial improvement in performance compared to a baseline trained without curriculum. Instead of exploiting the constraint term in the reward, it is able to learn policies that balance task completion and constraint satisfaction. Our results demonstrate the potential of two-stage reward curricula for efficient and stable RL in environments with complex rewards, paving the way for more robust and adaptable robotic systems in real-world applications.

23 Sep 2025

Hyperparameter optimization is critical for improving the performance of recommender systems, yet its implementation is often treated as a neutral or secondary concern. In this work, we shift focus from model benchmarking to auditing the behavior of RecBole, a widely used recommendation framework. We show that RecBole's internal defaults, particularly an undocumented early-stopping policy, can prematurely terminate Random Search and Bayesian Optimization. This limits search coverage in ways that are not visible to users. Using six models and two datasets, we compare search strategies and quantify both performance variance and search path instability. Our findings reveal that hidden framework logic can introduce variability comparable to the differences between search strategies. These results highlight the importance of treating frameworks as active components of experimental design and call for more transparent, reproducibility-aware tooling in recommender systems research. We provide actionable recommendations for researchers and developers to mitigate hidden configuration behaviors and improve the transparency of hyperparameter tuning workflows.
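The effect described above can be sketched with a generic random search that optionally stops after a number of trials without improvement. This is an illustrative stand-in, not RecBole's actual logic: the function `random_search`, the `patience` parameter, and the toy objective are assumptions made for the example.

```python
import random


def random_search(objective, sample, max_trials, patience=None, seed=0):
    """Random search that stops early after `patience` non-improving trials.

    With patience=None the full budget of max_trials is always used.
    """
    rng = random.Random(seed)
    best_score, best_params = float("-inf"), None
    since_best, trials = 0, 0
    for _ in range(max_trials):
        params = sample(rng)
        score = objective(params)
        trials += 1
        if score > best_score:
            best_score, best_params, since_best = score, params, 0
        else:
            since_best += 1
        if patience is not None and since_best >= patience:
            break  # the remaining budget is silently discarded
    return best_params, best_score, trials


def toy_objective(lr):
    return -(lr - 0.3) ** 2  # hypothetical score, peaked at lr = 0.3


def toy_sample(rng):
    return rng.random()      # hypothetical search space: lr in [0, 1)


full = random_search(toy_objective, toy_sample, max_trials=200)
early = random_search(toy_objective, toy_sample, max_trials=200, patience=5)
print("trials used:", full[2], "vs", early[2])
print("best score: ", full[1], "vs", early[1])
```

Because both runs share a seed, the early-stopped search sees a prefix of the same trial sequence, so any gap in the best score comes from the stopping rule alone.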

Universitat Pompeu Fabra; University of Exeter; MBZUAI; Bielefeld University; National Research Council Canada; Cardiff University; University of Bath; UCLouvain; King Abdulaziz University; Brigham Young University; University of Gothenburg; INESC-ID Lisboa; Instituto Universitário de Lisboa (ISCTE-IUL); National University, Philippines

We introduce UniversalCEFR, a large-scale multilingual and multidimensional dataset of texts annotated with CEFR (Common European Framework of Reference) levels in 13 languages. To enable open research in automated readability and language proficiency assessment, UniversalCEFR comprises 505,807 CEFR-labeled texts curated from educational and learner-oriented resources, standardized into a unified data format to support consistent processing, analysis, and modelling across tasks and languages. To demonstrate its utility, we conduct benchmarking experiments using three modelling paradigms: a) linguistic feature-based classification, b) fine-tuning pre-trained LLMs, and c) descriptor-based prompting of instruction-tuned LLMs. Our results support using linguistic features and fine-tuning pretrained models in multilingual CEFR level assessment. Overall, UniversalCEFR aims to establish best practices in data distribution for language proficiency research by standardising dataset formats, and promoting their accessibility to the global research community.

07 Mar 2025

Kernel ridge regression (KRR) is a generalization of linear ridge regression that is non-linear in the data, but linear in the model parameters. Here, we introduce an equivalent formulation of the objective function of KRR, which makes it possible to replace the ridge penalty with the ℓ∞ and ℓ1 penalties. Using the ℓ∞ and ℓ1 penalties, we obtain robust and sparse kernel regression, respectively. We study the similarities between explicitly regularized kernel regression and the solutions obtained by early stopping of iterative gradient-based methods, where we connect ℓ∞ regularization to sign gradient descent, ℓ1 regularization to forward stagewise regression (also known as coordinate descent), and ℓ2 regularization to gradient descent, and, in the last case, theoretically bound the differences. We exploit the close relations between ℓ∞ regularization and sign gradient descent, and between ℓ1 regularization and coordinate descent to propose computationally efficient methods for robust and sparse kernel regression. We finally compare robust kernel regression through sign gradient descent to existing methods for robust kernel regression on five real data sets, demonstrating that our method is one to two orders of magnitude faster, without compromised accuracy.
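The two objects compared in the ℓ2 case can be sketched side by side: the closed-form kernel ridge solution and an unpenalized kernel gradient descent that is stopped after a fixed number of steps. This is a minimal sketch under assumptions (a Gaussian kernel, one-dimensional toy data, a step size of one over the largest eigenvalue, hypothetical function names); it does not implement the paper's equivalent formulation, sign gradient descent, or the robust and sparse variants.

```python
import numpy as np


def gaussian_kernel(a, b, bandwidth=1.0):
    """Gaussian kernel matrix between two sets of one-dimensional inputs."""
    d2 = (a[:, None] - b[None, :]) ** 2
    return np.exp(-d2 / (2 * bandwidth ** 2))


def krr_closed_form(K, y, lam):
    """Kernel ridge coefficients: solve (K + lam * I) alpha = y."""
    return np.linalg.solve(K + lam * np.eye(len(y)), y)


def kernel_gradient_descent(K, y, n_steps):
    """Gradient descent on the squared loss in function space,
    written in terms of the coefficients alpha, with no penalty."""
    step = 1.0 / np.linalg.eigvalsh(K).max()
    alpha = np.zeros_like(y)
    for _ in range(n_steps):
        alpha = alpha + step * (y - K @ alpha)
    return alpha


rng = np.random.default_rng(0)
x = np.linspace(-3, 3, 40)
y = np.sin(x) + 0.1 * rng.standard_normal(40)
K = gaussian_kernel(x, x)

alpha_ridge = krr_closed_form(K, y, lam=0.1)
alpha_early = kernel_gradient_descent(K, y, n_steps=10)
alpha_late = kernel_gradient_descent(K, y, n_steps=1000)

for name, a in [("ridge", alpha_ridge), ("10 steps", alpha_early),
                ("1000 steps", alpha_late)]:
    print(name, "training residual:", np.linalg.norm(y - K @ a))
```

Stopping earlier leaves a larger training residual, which plays a role loosely analogous to a larger ridge penalty; the abstract's contribution is to bound how close the two solutions are.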
