University of New South Wales

A membership inference approach called CodeMI detects if a specific code snippet was used to train black-box neural code completion models, leveraging rank sets instead of raw probabilities. The method achieved 0.842 accuracy on LSTM-based models and 0.730 on CodeGPT, demonstrating its capability to identify potential intellectual property infringements and privacy risks.

When mathematical biology models are used to make quantitative predictions for clinical or industrial use, it is important that these predictions come with a reliable estimate of their accuracy (uncertainty quantification). Because models of complex biological systems are always large simplifications, model discrepancy arises, where a mathematical model fails to recapitulate the true data generating process. This presents a particular challenge for making accurate predictions, and especially for making accurate estimates of uncertainty in these predictions. Experimentalists and modellers must choose which experimental procedures (protocols) are used to produce data to train their models. We propose to characterise uncertainty owing to model discrepancy with an ensemble of parameter sets, each of which results from training to data from a different protocol. The variability in predictions from this ensemble provides an empirical estimate of predictive uncertainty owing to model discrepancy, even for unseen protocols. We use the example of electrophysiology experiments, which are used to investigate the kinetics of the hERG potassium ion channel. Here, 'information-rich' protocols allow mathematical models to be trained using numerous short experiments performed on the same cell. Typically, assuming independent observational errors and training a model to an individual experiment results in parameter estimates with very little dependence on observational noise. Moreover, parameter sets arising from the same model applied to different experiments often conflict, which is indicative of model discrepancy. Our methods will help select more suitable mathematical models of hERG for future studies, and will be widely applicable to a range of biological modelling problems.
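
The ensemble idea in this abstract can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the "true" process, the deliberately misspecified one-parameter model `y = k * x`, the three protocol ranges, and the helper names are hypothetical and are not the hERG models or protocols used by the authors.

```python
def true_process(x):
    # Hypothetical data-generating process (unknown to the modeller).
    return x + 0.1 * x ** 2


def fit_slope(xs, ys):
    # Least-squares fit of the misspecified model y = k * x.
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


# Three hypothetical "protocols", each probing a different input range.
protocols = {
    "A": [0.1 * i for i in range(1, 11)],        # 0.1 .. 1.0
    "B": [1.0 + 0.1 * i for i in range(1, 11)],  # 1.1 .. 2.0
    "C": [2.0 + 0.1 * i for i in range(1, 11)],  # 2.1 .. 3.0
}

# One parameter estimate per protocol: this is the ensemble.
ensemble = {
    name: fit_slope(xs, [true_process(x) for x in xs])
    for name, xs in protocols.items()
}

# Predict for an unseen protocol and use the spread across the ensemble
# as an empirical indication of uncertainty due to model discrepancy.
unseen_x = 4.0
predictions = [k * unseen_x for k in ensemble.values()]
spread = max(predictions) - min(predictions)

print(ensemble)
print(predictions, spread)
```

Because the data are noise-free, the disagreement between the three fitted slopes comes entirely from the model being wrong, which is the effect the abstract attributes to model discrepancy. The spread is only an empirical indicator; nothing in this sketch guarantees that it brackets the true value.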

The importance of numax (the frequency of maximum oscillation power) for asteroseismology has been demonstrated widely in the previous decade, especially for red giants. With the large amount of photometric data from CoRoT, Kepler and TESS, several automated algorithms to retrieve numax values have been introduced. Most of these algorithms correct the granulation background in the power spectrum by fitting a model and subtracting it before measuring numax. We have developed a method that does not require fitting to the granulation background. Instead, we simply divide the power spectrum by a function of the form nu^-2, to remove the slope due to granulation background, and then smooth to measure numax. This method is fast, simple and avoids degeneracies associated with fitting. The method is able to measure oscillations in 99.9% of previously-studied Kepler red giants, with a systematic offset of 1.5% in numax values that we are able to calibrate. On comparing the seismic radii from this work with Gaia, we see similar trends to those observed in previous studies. Additionally, our values of the width of the power envelope clearly identify the dipole-mode-suppressed stars as a distinct population, and hence offer a way to detect them. We also applied our method to stars with low numax (0.19–18.35 muHz) and found it works well to correctly identify the oscillations.
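
The divide-then-smooth procedure described above can be sketched as follows. This is a minimal illustration on a synthetic, noise-free spectrum, not the authors' pipeline: the function name `estimate_numax`, the boxcar smoothing, the window width, and all synthetic values are assumptions.

```python
import math


def estimate_numax(freq, power, smooth_width):
    """Divide the spectrum by nu^-2, boxcar-smooth it, and return the peak frequency."""
    # Dividing by nu^-2 flattens a background that falls off as nu^-2.
    whitened = [p / (f ** -2) for f, p in zip(freq, power)]

    # Simple boxcar (moving-average) smoothing; windows shrink at the edges.
    half = smooth_width // 2
    smoothed = []
    for i in range(len(whitened)):
        window = whitened[max(0, i - half):min(len(whitened), i + half + 1)]
        smoothed.append(sum(window) / len(window))

    # numax is taken as the frequency where the smoothed spectrum peaks.
    peak_index = max(range(len(smoothed)), key=smoothed.__getitem__)
    return freq[peak_index]


# Synthetic spectrum: nu^-2 background plus a Gaussian oscillation envelope.
true_numax = 100.0  # muHz, hypothetical
freq = [1.0 + 0.1 * i for i in range(3000)]
power = [
    1e4 * f ** -2 + 5.0 * math.exp(-((f - true_numax) ** 2) / (2 * 10.0 ** 2))
    for f in freq
]

numax_est = estimate_numax(freq, power, smooth_width=51)
print(numax_est)
```

In this toy setup the estimate lands slightly above the injected value, because multiplying a Gaussian envelope by nu^2 shifts its peak upward. That is at least consistent with the small systematic offset the abstract says needs calibrating, although the toy offset should not be read as the paper's 1.5%.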

In recent years, predicting mobile app usage has become increasingly important for areas like app recommendation, user behaviour analysis, and mobile resource management. Existing models, however, struggle with the heterogeneous nature of contextual data and the user cold start problem. This study introduces a novel prediction model, Mobile App Prediction Leveraging Large Language Model Embeddings (MAPLE), which employs Large Language Models (LLMs) and installed app similarity to overcome these challenges. MAPLE utilises the power of LLMs to process contextual data and discern intricate relationships within it effectively. Additionally, we explore the use of installed app similarity to address the cold start problem, facilitating the modelling of user preferences and habits, even for new users with limited historical data. In essence, our research presents MAPLE as a novel, potent, and practical approach to app usage prediction, making significant strides in resolving issues faced by existing models. MAPLE stands out as a comprehensive and effective solution, setting a new benchmark for more precise and personalised app usage predictions. In tests on two real-world datasets, MAPLE surpasses contemporary models in both standard and cold start scenarios. These outcomes validate MAPLE's capacity for precise app usage predictions and its resilience against the cold start problem. This enhanced performance stems from the model's proficiency in capturing complex temporal patterns and leveraging contextual information. As a result, MAPLE can potentially improve personalised mobile app usage predictions and user experiences markedly.

Researchers from Nanyang Technological University and collaborators developed HOUYI, a systematic black-box prompt injection attack framework for LLM-integrated applications, demonstrating 86.1% susceptibility across 36 real-world commercial applications. The study revealed severe consequences including intellectual property theft via prompt leaking and financial losses from prompt abusing, with a daily financial loss of $259.2 estimated for a single compromised application.

This survey offers a comprehensive, structured overview of GUI agents, categorizing benchmarks, architectural designs, and training methods while outlining critical open challenges. It synthesizes rapid advancements in enabling Large Foundation Models to interact with digital systems via their Graphical User Interfaces.

20 Mar 2025

A comprehensive framework introduces LLM-based Agentic Recommender Systems (LLM-ARS), combining multimodal large language models with autonomous capabilities to enable proactive, adaptive recommendation experiences while identifying core challenges in safety, efficiency, and personalization across recommendation domains.

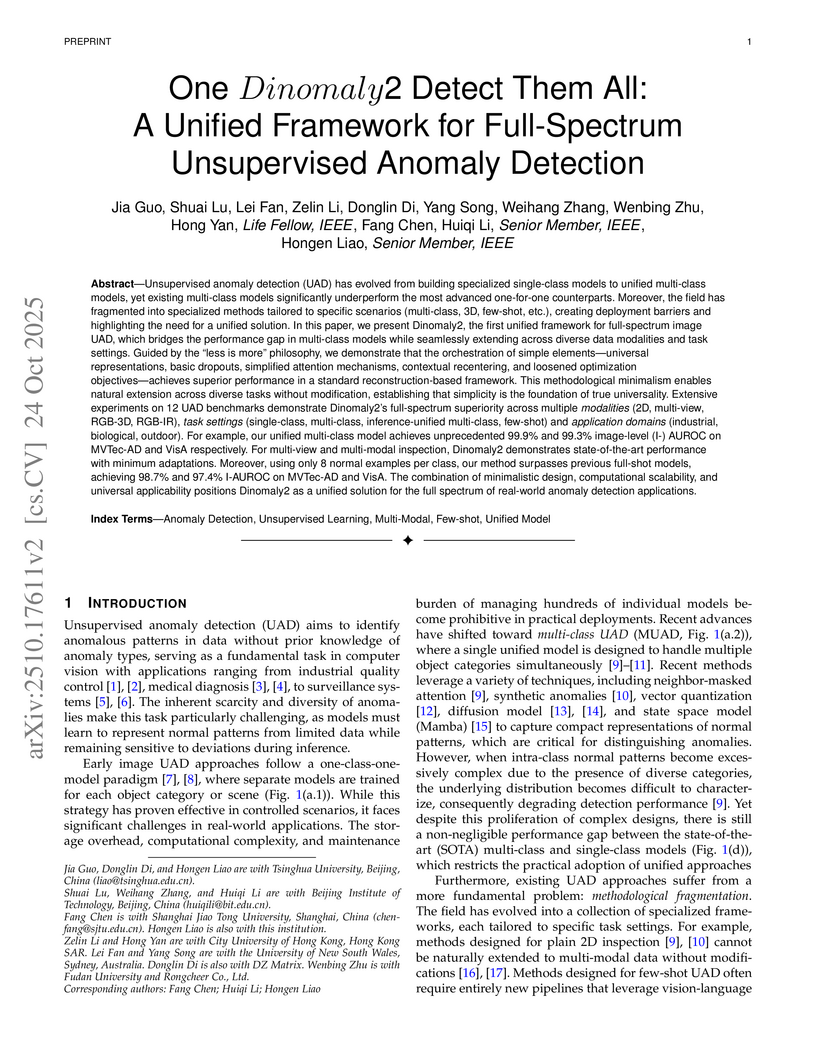

Dinomaly2 is a unified framework for unsupervised anomaly detection, leveraging a reconstruction-based approach with a pre-trained Vision Transformer backbone and simple components. The system consistently achieves state-of-the-art performance across diverse image modalities and task settings, including multi-class and few-shot scenarios, by preventing over-generalization.

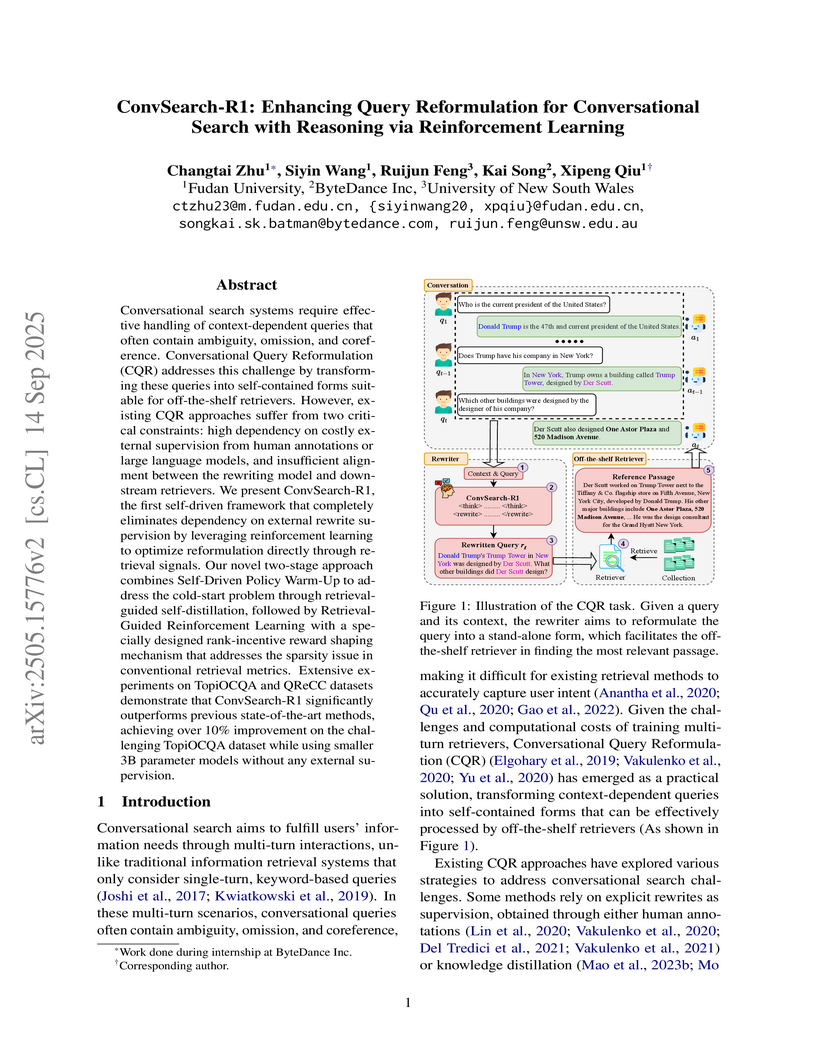

Researchers from Fudan University, ByteDance Inc., and the University of New South Wales introduced ConvSearch-R1, a self-driven reinforcement learning framework that enhances conversational query reformulation without requiring external supervision. This approach achieved state-of-the-art performance on datasets like TopiOCQA and QReCC, improving average retrieval metrics by over 10% using 3B-parameter models.

MOOSE-CHEM is an agentic Large Language Model framework that mathematically decomposes scientific hypothesis generation into tractable steps, demonstrating LLMs' ability to rediscover novel, Nature/Science-level chemistry hypotheses from unseen literature by identifying latent knowledge associations. This framework achieved high similarity to ground truth hypotheses and outperformed existing baselines on a rigorous, expert-validated benchmark.

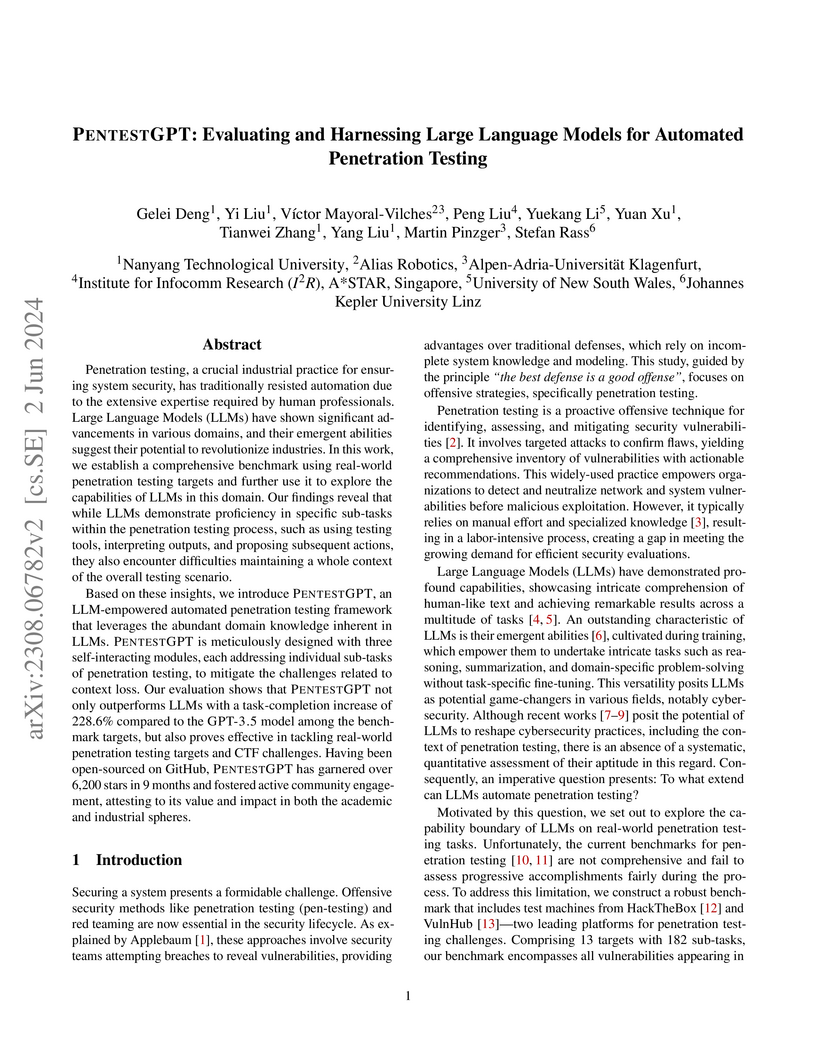

This work systematically evaluates Large Language Models (LLMs) for automated penetration testing and introduces PENTESTGPT, a modular framework that addresses LLM limitations like context loss and strategic planning. PENTESTGPT, with its Reasoning Module and Pentesting Task Tree, significantly improved task and sub-task completion rates on a custom benchmark and demonstrated practical utility in real-world HackTheBox and CTF challenges.

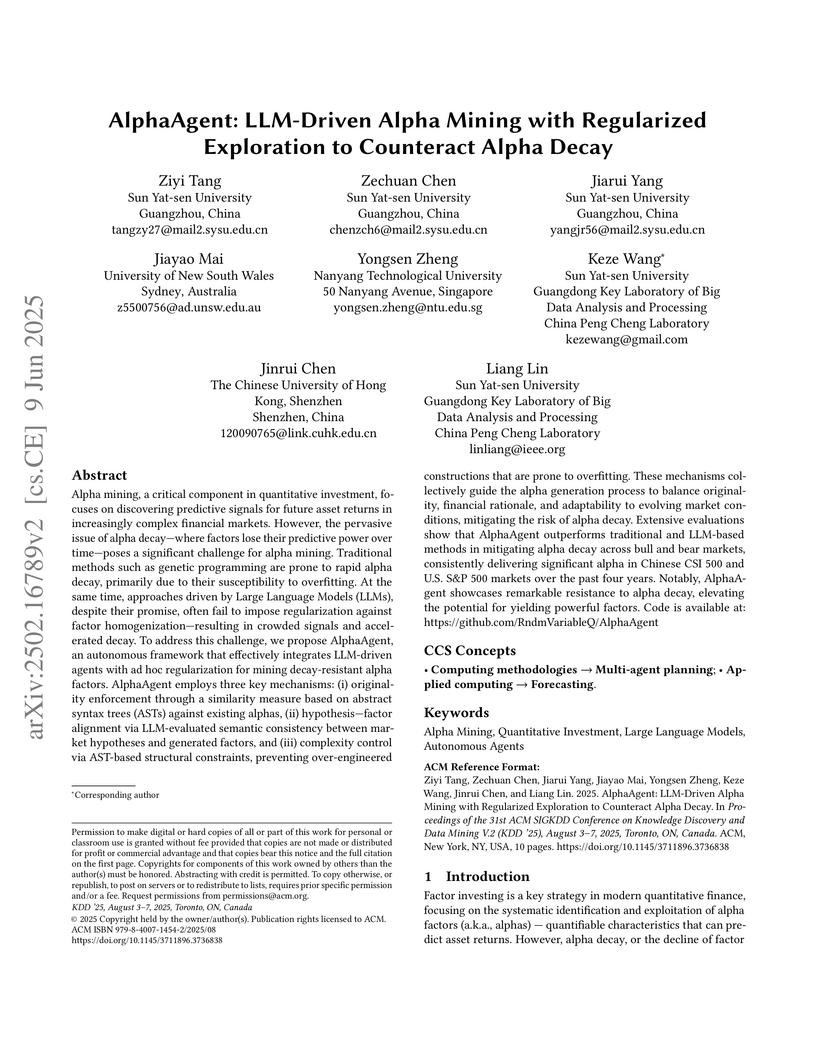

AlphaAgent is an LLM-driven multi-agent framework designed to discover decay-resistant alpha factors for quantitative investment, integrating regularization mechanisms to enhance factor originality, financial rationale, and complexity control. The system achieved consistently superior returns, predictive power, and risk management with high decay resistance on both Chinese CSI 500 and U.S. S&P 500 markets.

The Paths-over-Graph (PoG) framework from UNSW and Data61, CSIRO enhances large language model reasoning by integrating faithful and interpretable knowledge graph paths. This approach achieves new state-of-the-art performance on KGQA benchmarks while significantly reducing LLM computational costs through dynamic path exploration, pruning, and summarization.

Researchers at Inclusion AI and collaborating universities developed MultiEdit, a dataset of over 107K high-quality image editing triplets, utilizing an MLLM-driven pipeline to address the scarcity of diverse training data for instruction-based image editing. Fine-tuning open-source models with MultiEdit-Train significantly enhanced their ability to perform complex editing tasks, improving SD3's CLIPimg by approximately 9.4% and DINO by 16.1% on the new benchmark.

Researchers introduced ResearchBench, a large-scale benchmark, to evaluate Large Language Models' (LLMs) scientific discovery capabilities by decomposing the process into inspiration retrieval, hypothesis composition, and ranking. The benchmark, built with an automated framework using 2024 papers, reveals LLMs' proficiency in retrieving inspirations and ranking hypotheses, while composition remains an area for further development.

03 Oct 2025

StepChain GraphRAG, a framework developed by researchers from UTS, Data61/CSIRO, ECU, and UNSW, enhances multi-hop question answering by integrating iterative reasoning with dynamic knowledge graph construction. It achieved an average Exact Match of 57.67% and F1 score of 68.53% across three datasets, outperforming the previous state-of-the-art by an average of +2.57% EM and +2.13% F1.
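
For readers unfamiliar with the two metrics quoted above, here is a minimal sketch of how Exact Match and token-level F1 are commonly computed for question answering. It assumes SQuAD-style answer normalization (lowercasing, stripping punctuation and articles); the evaluation script actually used for StepChain GraphRAG is not shown in this summary and may differ, and the function names are illustrative.

```python
import re
import string
from collections import Counter


def normalize(text):
    # Lowercase, drop punctuation and English articles, collapse whitespace.
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, reference):
    # 1.0 if the normalized strings are identical, else 0.0.
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction, reference):
    # Harmonic mean of token-level precision and recall.
    pred_tokens = normalize(prediction).split()
    ref_tokens = normalize(reference).split()
    if not pred_tokens or not ref_tokens:
        return float(pred_tokens == ref_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower.", "eiffel tower"))
print(token_f1("Paris, France", "Paris"))
```

Dataset-level scores such as the reported averages are then the mean of these per-question values, usually expressed as percentages.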

Learning PDE dynamics with neural solvers can significantly improve wall-clock efficiency and accuracy compared with classical numerical solvers. In recent years, foundation models for PDEs have largely adopted multi-scale windowed self-attention, with the scOT backbone in Poseidon serving as a representative example. However, because of their locality, truly globally consistent spectral coupling can only be propagated gradually through deep stacking and window shifting. This weakens global coupling and leads to error accumulation and drift during closed-loop rollouts. To address this, we propose DRIFT-Net. It employs a dual-branch design comprising a spectral branch and an image branch. The spectral branch is responsible for capturing global, large-scale low-frequency information, whereas the image branch focuses on local details and nonstationary structures. Specifically, we first perform controlled, lightweight mixing within the low-frequency range. Then we fuse the spectral and image paths at each layer via bandwise weighting, which avoids the width inflation and training instability caused by naive concatenation. The fused result is transformed back into the spatial domain and added to the image branch, thereby preserving both global structure and high-frequency details across scales. Compared with strong attention-based baselines, DRIFT-Net achieves lower error and higher throughput with fewer parameters under identical training settings and budget. On Navier-Stokes benchmarks, the relative L1 error is reduced by 7%–54%, the parameter count decreases by about 15%, and the throughput remains higher than scOT. Ablation studies and theoretical analyses further demonstrate the stability and effectiveness of this design. The code is available at this https URL.

10 Oct 2025

Mind-Paced Speaking (MPS), a framework from StepFun and Nanyang Technological University, employs a dual-brain architecture for Spoken Language Models to integrate Chain-of-Thought reasoning into real-time speech. This method achieves an average accuracy of 93.9% on Spoken-MQA with a 762-token latency reduction compared to Think-Before-Speak, and near-zero latency with 92.8% accuracy for immediate responses.

Contrastive Language-Image Pretraining (CLIP) excels at learning generalizable image representations but often falls short in zero-shot inference on certain downstream datasets. Test-time adaptation (TTA) mitigates this issue by adjusting components like normalization layers or context prompts, yet it typically requires large batch sizes and extensive augmentations, leading to high computational costs. This raises a key question: Can VLMs' performance drop in specific test cases be mitigated through efficient, training-free approaches? To explore the solution, we investigate token condensation (TC) techniques, originally designed to enhance vision transformer efficiency by refining token usage during inference. We observe that informative tokens improve visual-text alignment in VLMs like CLIP on unseen datasets. However, existing TC methods often fail to maintain in-distribution performance when reducing tokens, prompting us to ask: How can we transform TC into an effective "free-lunch" adaptation strategy for VLMs? To address this, we propose Token Condensation as Adaptation (TCA), a training-free adaptation method that takes a step beyond standard TC. Rather than passively discarding tokens, TCA condenses token representation by introducing reservoir-based domain anchor tokens for information-preserving token reduction and logits correction. TCA achieves up to a 21.4% performance improvement over the strongest baseline on cross-dataset benchmark and the CIFAR-100-Corrupted dataset while reducing GFLOPs by 12.2% to 48.9%, with minimal hyperparameter dependency on both CLIP and SigLIP series.

20 Aug 2025

The SAND framework enables large language model (LLM) agents to self-teach explicit action deliberation through an iterative self-learning process. This approach helps agents proactively evaluate and compare multiple potential actions before committing, resulting in over 20% average performance improvement and enhanced generalization on interactive tasks like ALFWorld and ScienceWorld.
